Listen To Our Podcast🎧

%20%20(1).png)

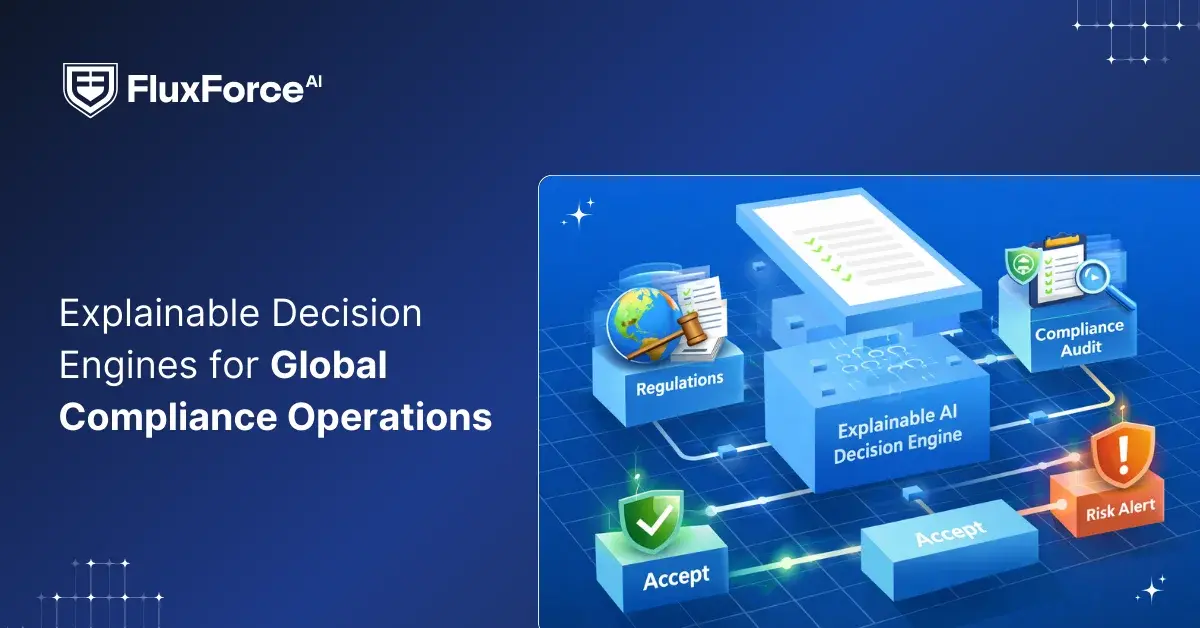

Introduction

“Trust is built when decisions can be explained.”

That idea sits at the core of governance, risk, and compliance. GRC teams are not judged only on outcomes. They are judged on how those outcomes were reached. This is where explainable AI becomes critical.

AI already scans policies, maps controls, flags risks, and monitors compliance in real time. But when AI outputs cannot be explained, they introduce a new layer of risk. In GRC, unexplained automation is often worse than manual work.

So the real question is not whether AI can help GRC.

It is whether AI can justify its decisions to auditors, regulators, and leadership.

Explainable AI exists to answer that question.

What is explainable AI in GRC?

Explainable AI in GRC refers to AI systems that clearly show why a risk was flagged, how a control failed, and what data influenced a compliance decision.

Instead of producing a score or alert with no context, explainable AI provides reasoning that humans can review, challenge, and document.

This matters because GRC decisions are reviewed long after they are made. During audits, investigations, or regulatory reviews, teams must reconstruct decision logic. Explainable AI makes that possible.

According to PwC case studies referenced in recent GRC research, AI systems with explainability reduced compliance errors by around three-quarters while maintaining audit traceability.

Why is AI explainability important for governance?

Governance is about accountability. Boards and executives remain responsible even when AI supports decisions.

Many organizations already use AI to:

- Summarize board discussions

- Compare new regulations against internal policies

- Highlight governance gaps in codes of conduct

But here is the problem:

If leadership cannot explain why AI highlighted one issue and ignored another, governance weakens instead of improving.

Explainable AI fixes this by exposing decision logic. It allows leaders to see which policies, controls, or data patterns influenced recommendations. This supports responsible AI governance, where humans approve decisions with confidence instead of blind trust.
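The sketch below illustrates what exposing decision logic can look like when a new regulation is compared against internal policies. It scores each regulatory requirement against every policy clause using simple word overlap (Jaccard similarity). It then returns the shared terms as the evidence behind each match.

This is a deliberately simple stand-in chosen for illustration. Real tools typically use richer language models. The stopword list, the 0.3 gap threshold, and the sample texts are all hypothetical.

```python
from __future__ import annotations

import re
from dataclasses import dataclass

# Illustrative stopword list (hypothetical); a real system would use a proper NLP pipeline.
STOPWORDS = {"the", "a", "an", "and", "or", "of", "to", "be", "must", "are", "is", "all", "for"}


def terms(text: str) -> set[str]:
    """Lower-case word tokens with stopwords removed."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


@dataclass
class Match:
    requirement: str
    best_clause: str | None
    score: float             # Jaccard similarity, rounded for display
    shared_terms: list[str]  # the evidence a reviewer can check
    is_gap: bool


def compare(requirements: list[str], clauses: list[str], threshold: float = 0.3) -> list[Match]:
    """Match each requirement to its most similar policy clause (hypothetical 0.3 gap threshold)."""
    results = []
    for req in requirements:
        req_terms = terms(req)
        best, best_score, best_shared = None, 0.0, set()
        for clause in clauses:
            clause_terms = terms(clause)
            shared = req_terms & clause_terms
            union = req_terms | clause_terms
            score = len(shared) / len(union) if union else 0.0
            if score > best_score:
                best, best_score, best_shared = clause, score, shared
        results.append(Match(req, best, round(best_score, 2), sorted(best_shared), best_score < threshold))
    return results


requirements = [
    "Access rights must be reviewed every quarter",
    "Encryption keys must be rotated annually",
]
policy_clauses = [
    "User access rights are reviewed every quarter by system owners",
    "Backups are tested monthly",
]
for m in compare(requirements, policy_clauses):
    status = "GAP" if m.is_gap else f"matched ({m.score})"
    print(f"{m.requirement}: {status}; evidence: {m.shared_terms}")
```

Each match carries its shared terms. A reviewer can therefore see why a requirement was marked as covered or as a gap, instead of accepting a bare score.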

How explainable AI improves risk management decisions

Risk teams deal with volume. Logs, transactions, vendor data, user activity, and external signals arrive faster than humans can review.

AI helps by prioritizing risks. But traditional AI often hides its reasoning. That creates tension during reviews.

Explainable AI changes how AI risk management works:

- It links risk alerts to specific behaviors or control gaps

- It shows why a pattern was considered risky

- It allows risk owners to validate or override findings
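Here is a minimal sketch of such an alert, assuming a simple linear risk score. Each driver contributes its weight times its value, so the contributions add up exactly to the score. They can then be listed, largest first, as the reasons for the alert.

Several details are hypothetical:

- the feature names
- the weights
- the threshold
- the `reviewer_decision` field, which is an assumed place to record a risk owner's validation or override

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical weights for a simple linear risk score; a real model would be fitted and validated.
WEIGHTS = {
    "failed_logins": 0.4,
    "off_hours_access": 1.5,
    "privileged_account": 2.0,
    "mfa_disabled": 2.5,
}
THRESHOLD = 4.0  # illustrative alerting threshold


@dataclass
class RiskAlert:
    entity: str
    score: float
    flagged: bool
    reasons: list[tuple[str, float]]  # (driver, contribution), largest first
    reviewer_decision: str | None = None  # assumed field: "confirmed" or "overridden" by the risk owner


def score_entity(entity: str, features: dict[str, float]) -> RiskAlert:
    # Each contribution is weight * value, so the contributions sum to the score.
    contributions = {name: w * features.get(name, 0.0) for name, w in WEIGHTS.items()}
    score = sum(contributions.values())
    reasons = sorted(
        ((name, c) for name, c in contributions.items() if c > 0),
        key=lambda item: item[1],
        reverse=True,
    )
    return RiskAlert(entity, round(score, 2), score >= THRESHOLD, reasons)


alert = score_entity("user-42", {"failed_logins": 3, "mfa_disabled": 1, "off_hours_access": 1})
print(f"{alert.entity}: score {alert.score}, flagged={alert.flagged}")
for driver, contribution in alert.reasons:
    print(f"  {driver}: +{contribution:.1f}")
alert.reviewer_decision = "confirmed"  # the risk owner validates (or overrides) the finding
```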

In real-world deployments cited in banking and enterprise risk programs, AI systems that surfaced explainable risk signals helped teams detect issues earlier and act faster. Mastercard, for example, significantly accelerated fraud signal detection by focusing on patterns AI could clearly explain to analysts.

This is the difference between automated risk assessment tools and trusted risk systems.

Why GRC compliance cannot rely on black-box AI

Compliance teams live under scrutiny. Every action must be defensible.

Black-box AI creates serious problems:

- Auditors ask for reasoning that cannot be provided

- Regulators challenge decisions with no explanation trail

- Internal teams struggle to validate outcomes

Explainable AI supports regulatory compliance automation by creating clear audit trails. It explains how a policy change, access update, or control failure triggered a compliance action.

This directly enables continuous compliance monitoring. Instead of checking controls once a quarter, teams can monitor them daily and still explain every alert. One study cited in recent AI compliance research found that AI systems identified regulatory changes with around 90% accuracy while also sharply reducing compliance mistakes. That level of performance is only usable in GRC when every result can be explained.
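Checking controls daily while still explaining every alert can be sketched as a set of control checks. Each check returns a verdict plus a human-readable reason, and only failures become alerts.

The control IDs, configuration keys, and the 12-character password rule below are hypothetical. In practice the snapshot would come from the monitored systems, and a scheduler would trigger the run.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class CheckResult:
    control_id: str
    passed: bool
    explanation: str  # the reason an auditor or control owner will read


def check_password_length(cfg: dict) -> CheckResult:
    # Hypothetical policy: minimum password length of 12 characters.
    minimum = cfg.get("password_min_length", 0)
    return CheckResult("CTRL-PW-01", minimum >= 12,
                       f"password_min_length is {minimum}; policy requires at least 12")


def check_admin_mfa(cfg: dict) -> CheckResult:
    # Hypothetical policy: every admin account must have MFA enabled.
    missing = [user for user, enabled in cfg.get("mfa_by_admin", {}).items() if not enabled]
    explanation = f"admins without MFA: {', '.join(missing)}" if missing else "all admins have MFA"
    return CheckResult("CTRL-MFA-01", not missing, explanation)


CHECKS: list[Callable[[dict], CheckResult]] = [check_password_length, check_admin_mfa]


def run_daily(cfg: dict) -> list[CheckResult]:
    """Run every check on one configuration snapshot and return only the failures as alerts."""
    return [result for result in (check(cfg) for check in CHECKS) if not result.passed]


snapshot = {"password_min_length": 8, "mfa_by_admin": {"alice": True, "bob": False}}
for failure in run_daily(snapshot):
    print(f"{failure.control_id}: {failure.explanation}")
```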

The real shift explainable AI brings to GRC

Explainable AI does not replace people. It supports them.

It allows GRC teams to:

- Trust AI outputs without losing control

- Defend decisions during audits

- Move from reactive reviews to proactive oversight

Most importantly, it aligns AI with the core principle of GRC:

If you cannot explain a decision, you should not automate it.

That is why explainable AI is not optional in modern governance, risk, and compliance. It is the foundation that makes AI usable, defensible, and safe in regulated environments.

Explainable AI frameworks for governance and risk

Model validation teams do not approve models based on results alone; they approve them when the logic makes sense. Explainable AI plays a direct role in that judgment.

Why does this matter? Traditional AI systems often operate as “black boxes,” leaving compliance officers unsure about how risk assessments were generated. With AI interpretability and model explainability techniques, explainable AI surfaces the reasoning behind every recommendation, making decisions traceable and defensible.
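Permutation importance is one widely used model-agnostic interpretability technique. It shuffles one input feature across the dataset and measures how much the model's accuracy drops.

The sketch below implements it in plain Python. It runs against a hypothetical rule-based "model" that flags vendors with more than two overdue invoices. The feature names and data are illustrative only.

```python
import random


def permutation_importance(predict, rows, labels, feature_names, n_repeats=20, seed=0):
    """Average drop in accuracy when one feature's values are shuffled across rows.

    A larger drop means the model relies more on that feature.
    """
    rng = random.Random(seed)

    def accuracy(data):
        return sum(predict(row) == label for row, label in zip(data, labels)) / len(labels)

    baseline = accuracy(rows)
    importances = {}
    for name in feature_names:
        drops = []
        for _ in range(n_repeats):
            column = [row[name] for row in rows]
            rng.shuffle(column)
            # Copy each row with only this feature replaced by a shuffled value.
            shuffled = [{**row, name: value} for row, value in zip(rows, column)]
            drops.append(baseline - accuracy(shuffled))
        importances[name] = sum(drops) / n_repeats
    return importances


# Hypothetical "model": flag a vendor when it has more than two overdue invoices.
def predict(row):
    return row["overdue_invoices"] > 2


rows = [{"overdue_invoices": i % 6, "region": i % 3} for i in range(30)]
labels = [predict(row) for row in rows]
print(permutation_importance(predict, rows, labels, ["overdue_invoices", "region"]))
```

The hypothetical model ignores `region`, so shuffling it leaves accuracy unchanged and its importance is zero. Shuffling `overdue_invoices`, by contrast, produces a clear drop. That kind of evidence lets a compliance officer confirm which inputs actually drive a risk assessment.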

Clear impact on risk detection

- Automated risk assessment tools that map controls across multiple frameworks.

- Continuous scanning of internal policies and regulatory updates.

- Real-time feedback loops for risk scoring and audit preparation.

- Transparent dashboards for leadership to review decision rationale.

Example in practice:

A leading enterprise used a continuous compliance monitoring platform powered by explainable AI (XAI) to cross-check internal security policies against ISO 27001 and SOC 2 frameworks. This reduced manual review times by 70% while improving alignment with regulatory expectations.

Key takeaway: Structured XAI frameworks help organizations keep their use of AI compliant while maintaining responsible AI governance, so human experts can focus on high-stakes judgment instead of repetitive audits.

Explainability in AI risk controls

How does explainable AI improve compliance and risk management?

Explainable AI also improves the quality of validation challenge. Instead of vague concerns, validators point to specific drivers and decision thresholds that increase risk. Model owners respond faster because issues are clear and measurable.
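For a linear score, the specific drivers and decision thresholds a validator asks about can be computed directly. Suppose the score is s = b + sum of w_i * x_i and the alert threshold is t. Changing driver i alone by (t - s) / w_i then moves the score exactly onto the threshold.

The sketch below assumes such a linear model with hypothetical weights and drivers. Nonlinear models would need search-based counterfactual methods instead.

```python
from __future__ import annotations


def threshold_distance(weights: dict[str, float], bias: float, x: dict[str, float], threshold: float):
    """For a linear score s = bias + sum(w_i * x_i), return s and, for each driver,
    the change in that driver alone that would move s exactly onto the threshold."""
    score = bias + sum(w * x.get(name, 0.0) for name, w in weights.items())
    gap = threshold - score  # negative when the case is already above the threshold
    needed = {name: gap / w for name, w in weights.items() if w != 0}
    return score, needed


# Hypothetical credit-risk drivers, bias, and alert threshold.
weights = {"days_past_due": 0.5, "utilization": 4.0}
score, needed = threshold_distance(
    weights, bias=-2.0, x={"days_past_due": 6, "utilization": 0.5}, threshold=1.0
)
print(score, needed)
```

In this example the case sits 2.0 points above the threshold. It would fall back to the threshold if days past due dropped by 4, or if utilization dropped by 0.5. That gives validator and model owner a concrete, measurable point to discuss.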

When explainability becomes standard practice, institutions see shorter validation cycles, fewer follow-up findings, and stronger AI model governance. Most importantly, explainable AI turns validation into a control that proves value and builds regulator confidence.

Applications include:

- Risk assessment automation for financial, cybersecurity, and operational risks.

- Predictive alerts for unusual patterns in employee behavior or transaction flows.

- Visual traceability of each AI-generated recommendation for auditors.
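Here is a minimal sketch of an audit trail that supports this traceability. It is an append-only log where each entry records three things:

- what triggered a compliance action
- what was done
- why it was done

Each entry also stores the hash of the previous entry, so later edits are detectable. The field names and trigger types are assumptions. Hash chaining is one common tamper-evidence technique, not a description of any specific product.

```python
from __future__ import annotations

import hashlib
import json
from datetime import datetime, timezone


class AuditTrail:
    """Append-only log; each entry stores the previous entry's hash, so edits break the chain."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, trigger_type: str, trigger_detail: str, action: str, rationale: str) -> dict:
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "trigger_type": trigger_type,  # hypothetical types: policy_change, access_update, control_failure
            "trigger_detail": trigger_detail,
            "action": action,
            "rationale": rationale,  # the explanation an auditor will read
            "prev_hash": self.entries[-1]["hash"] if self.entries else "0" * 64,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    @staticmethod
    def _digest(entry: dict) -> str:
        # Hash every field except the stored hash itself, in a stable key order.
        body = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry makes this return False."""
        prev = "0" * 64
        for entry in self.entries:
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True


trail = AuditTrail()
trail.record("access_update", "Contractor granted admin on payroll database",
             "open_review_ticket", "Admin rights for contractors conflict with least-privilege policy")
trail.record("control_failure", "Nightly backup job failed",
             "notify_control_owner", "Backup control requires a successful daily run")
print(trail.verify())
```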

Primary benefits highlighted:

- Transparent reasoning supports GRC compliance objectives.

- Continuous monitoring allows proactive risk mitigation.

- Human oversight remains central, creating a responsible AI governance environment.

Explainable AI best practices for compliance

For organizations adopting AI compliance tools, following these best practices helps integrate explainable AI effectively into daily operations.

Recommended steps include:

- Embed explainability in every model used for risk assessment automation.

- Conduct regular bias detection and fairness testing to ensure decisions are equitable.

- Align AI decision logic with internal policies and regulatory compliance automation frameworks.

- Maintain version control and detailed audit trails for accountability.
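Bias detection can start with something as simple as comparing outcome rates across groups. The sketch below computes each group's selection rate and its ratio to the highest rate. It flags ratios below 0.8, a heuristic known as the four-fifths rule from US employment-selection guidance.

The groups and decisions are hypothetical. A single ratio is a screening signal, not a full fairness assessment.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Share of favorable outcomes per group; decisions are (group, favorable) pairs."""
    totals, favorable = defaultdict(int), defaultdict(int)
    for group, outcome in decisions:
        totals[group] += 1
        favorable[group] += int(outcome)
    return {group: favorable[group] / totals[group] for group in totals}


def disparate_impact(decisions, threshold=0.8):
    """Each group's selection rate divided by the highest rate, flagged when below threshold."""
    rates = selection_rates(decisions)
    best = max(rates.values())
    if best == 0:
        # No group received a favorable outcome, so the ratio is undefined.
        return {group: (None, False) for group in rates}
    return {group: (rate / best, rate / best < threshold) for group, rate in rates.items()}


# Hypothetical approval decisions: group A approved 8 of 10, group B approved 5 of 10.
decisions = [("A", i < 8) for i in range(10)] + [("B", i < 5) for i in range(10)]
for group, (ratio, flagged) in disparate_impact(decisions).items():
    print(f"group {group}: ratio {ratio:.3f}{' (review)' if flagged else ''}")
```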

Why this matters:

With evolving regulations such as the EU AI Act and standards such as ISO/IEC 42001, explainable AI provides a documented path showing that AI-driven decisions are consistent, auditable, and defensible. Teams using these best practices experience faster audits, fewer errors, and a measurable increase in compliance confidence.

Why explainable AI matters for GRC

What makes explainable AI critical for governance, risk, and controls?

As GRC programs grow more complex, traditional AI can’t always provide clear reasoning behind risk scores, control recommendations, or compliance alerts. Explainable AI (XAI) addresses this by making every AI decision transparent and understandable for humans. This is essential for organizations that need to defend decisions to auditors, regulators, or internal leadership.

Key benefits of XAI in GRC:

- Enhanced AI transparency ensures teams can trace recommendations step by step.

- Improved confidence in AI compliance tools.

- Reduced risk of errors in automated risk assessment tools.

- Enables responsible AI governance by keeping humans in the decision loop.

Stat to highlight:

A 2025 study by PwC found that organizations using XAI for risk management reduced compliance errors by 75% and improved decision-making speed by 60%.

Example:

A large financial institution used continuous compliance monitoring with XAI to flag unusual account activity. Because analysts could see why each transaction was flagged, they could dismiss false alarms quickly, which improved operational efficiency.
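One simple way to show analysts why a transaction was flagged is to compare each feature of the transaction with the account's own history. The features that deviate most, measured as z-scores, become the explanation.

The sketch below assumes that approach. The feature names, the history, and the 3.0 z-score limit are hypothetical, and production fraud models are considerably more sophisticated.

```python
from statistics import mean, stdev


def explain_anomaly(history, current, z_limit=3.0):
    """Return (feature, z-score) pairs for features of `current` that deviate from the
    account's history by more than z_limit standard deviations, largest first."""
    reasons = []
    for feature, value in current.items():
        past = [h[feature] for h in history]
        sd = stdev(past)  # sample standard deviation; needs at least two past values
        if sd == 0:
            continue  # constant history: z-score undefined; a real system would handle this separately
        z = (value - mean(past)) / sd
        if abs(z) > z_limit:
            reasons.append((feature, round(z, 1)))
    return sorted(reasons, key=lambda r: abs(r[1]), reverse=True)


# Hypothetical account history and a new transaction to explain.
history = [
    {"amount": 100, "transactions_per_day": 3},
    {"amount": 120, "transactions_per_day": 4},
    {"amount": 90, "transactions_per_day": 2},
    {"amount": 110, "transactions_per_day": 3},
    {"amount": 105, "transactions_per_day": 3},
]
current = {"amount": 2500, "transactions_per_day": 15}
for feature, z in explain_anomaly(history, current):
    print(f"{feature} is {z} standard deviations from this account's norm")
```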

Explainable AI for internal controls

Internal controls are the backbone of compliance, but manually reviewing them across multiple departments or frameworks is time-consuming. XAI enables regulatory compliance automation by:

- Highlighting gaps in internal policies.

- Comparing controls against multiple standards like ISO 27001, SOC 2, or HIPAA.

- Creating audit-ready reports automatically.
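The comparison and reporting steps above can be sketched as a control crosswalk. Each internal control is mapped to the framework requirements it satisfies. The report then lists every requirement with the controls that cover it, or marks it as a gap.

The requirement IDs below are placeholders, not real ISO 27001, SOC 2, or HIPAA clause numbers. The controls are hypothetical.

```python
# Placeholder requirement IDs per framework (not actual clause numbers).
FRAMEWORKS = {
    "ISO 27001": {"ISO-ACCESS", "ISO-LOGGING", "ISO-BACKUP"},
    "SOC 2": {"SOC2-ACCESS", "SOC2-CHANGE"},
    "HIPAA": {"HIPAA-ACCESS", "HIPAA-AUDIT"},
}

# Hypothetical internal controls and the requirements each one is mapped to.
CONTROLS = {
    "CTRL-IAM-01 Quarterly access review": {"ISO-ACCESS", "SOC2-ACCESS", "HIPAA-ACCESS"},
    "CTRL-LOG-01 Central log retention": {"ISO-LOGGING", "HIPAA-AUDIT"},
}


def gap_report(frameworks: dict, controls: dict) -> str:
    """One line per requirement: which controls cover it, or an explicit gap."""
    lines = []
    for framework, requirements in frameworks.items():
        for req in sorted(requirements):
            covering = [name for name, mapped in controls.items() if req in mapped]
            status = "covered by " + "; ".join(covering) if covering else "GAP: no mapped control"
            lines.append(f"{framework} | {req} | {status}")
    return "\n".join(lines)


print(gap_report(FRAMEWORKS, CONTROLS))
```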

Practical use case:

JPMorgan implemented an XAI-powered tool to review loan documents for control compliance. It reduced review time from hundreds of hours to just a few hours per week while maintaining full traceability of decisions.

Primary advantage:

By combining AI interpretability with model explainability techniques, internal audit teams can focus on judgment calls, rather than repetitive tasks.

Benefits of explainable AI in risk management

How does explainable AI transform risk management?

Traditional AI can detect patterns, but without transparency, risk managers struggle to act confidently. Explainable AI ensures that risk signals are clear, interpretable, and actionable.

Benefits include:

- Earlier detection of potential risks with traceable reasoning.

- Reduced dependency on manual analysis, increasing efficiency.

- Ability to integrate insights into risk assessment automation workflows.

Example:

Western Digital leveraged XAI for supply chain risk. AI highlighted suppliers at risk of delays, and risk managers could see the exact reasoning behind each alert. This proactive approach saved the company millions during global supply chain disruptions.

Why it matters for GRC officers:

For GRC officers, explainable AI tools help ensure that risk-related decisions are defensible, consistent, and compliant with both internal standards and external regulations.

Conclusion

Explainable AI is changing the way GRC works. It helps teams understand risks faster, keep up with regulations, and make better decisions. By using AI governance, AI risk management, and AI compliance tools, organizations can work smarter without losing human judgment.

The key is to implement responsible AI governance, focus on AI interpretability, and follow best practices for compliance. When done right, explainable AI saves time, reduces errors, and builds trust across the business.

In short, AI transparency and model explainability techniques make governance, risk, and compliance simpler, safer, and more efficient.
