Introduction

Enterprise risk committees face a growing volume of AI-driven decisions that are approved without full visibility into how they were made. Meeting time-sensitive operational demands does not require accelerating approvals blindly; it requires AI models that are explainable and defensible.

When decision logic remains hidden, challenging risk signals and confirming governance alignment often becomes difficult.

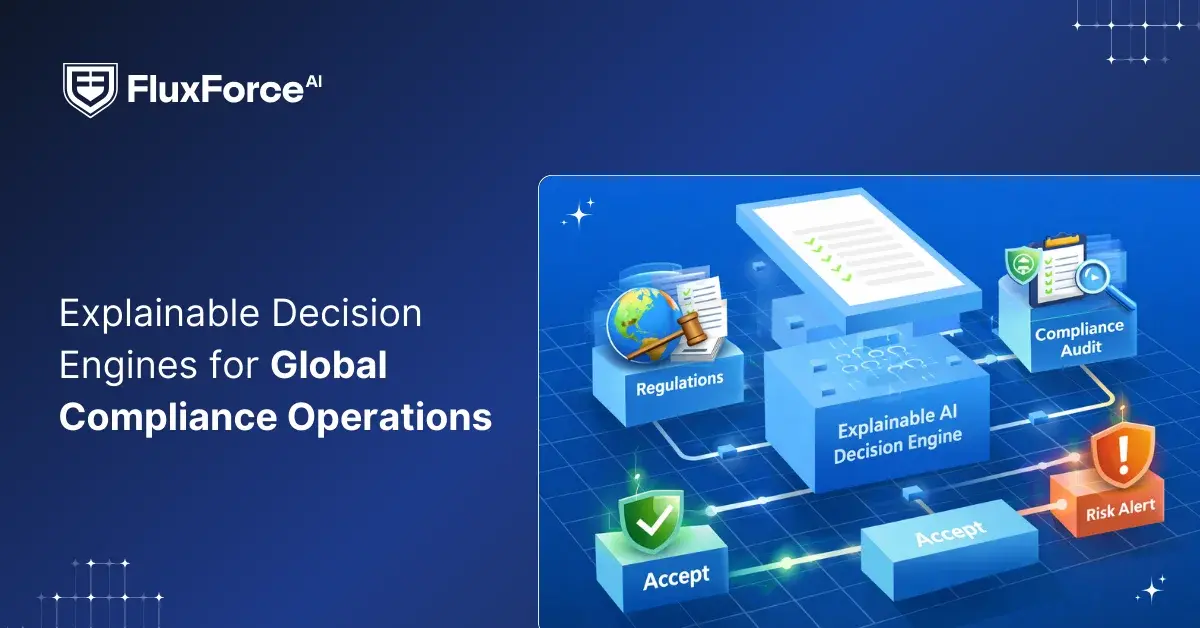

Explainable AI supports enterprise risk committees by making model behaviour visible and reviewable at the point of oversight. From initial risk assessment through final decision validation, committees gain clarity into how outcomes are formed and which factors influence judgment.

This article provides a clear breakdown of how XAI strengthens the effectiveness of enterprise risk committees while improving AI governance and compliance.

Why Enterprise Risk Committees Need Explainable AI

In enterprises, decisions that matter are no longer measured by speed but by accuracy, fairness, and alignment with policy. Risk committee members across organizations were responsible for 68 percent of delayed fraud detection in 2024, even with AI integration in place.

The core problem is that AI models, often operating as black boxes, lack:

- Clear audit trails that meet regulatory expectations

- Logic that explains why a transaction was flagged or approved

- Accountability showing which inputs influenced a decision

- Human oversight when automated decisions carry real impact

Regulators increasingly expect AI-driven decisions to remain clear, accountable, and actionable. Explainable AI gives committees visibility into how models reach decisions. With integrated explainable AI in risk management, members can:

- Defend audit reviews with documented reasoning for every model decision

- Identify bias or drift before it creates regulatory or reputational risk

- Translate technical outputs into explanations executives can act on

- Meet regulatory expectations without fixing compliance after deployment

- Maintain control over risk decisions instead of relying on opaque models

5 Ways XAI Strengthens Risk Committee Supervision

XAI turns AI outputs into clear, auditable insights, giving risk committees the clarity and confidence they need to manage enterprise risks effectively. The following five areas show how XAI keeps decisions aligned with policy and regulatory expectations.

1. Understanding the “Why” Behind Every Decision

Explainable systems provide committees a detailed view of which variables influenced AI decision-making. By understanding why a model flagged a risk, members can evaluate whether the alert reflects genuine exposure or a false signal.

Leveraging XAI for enterprise risk management supports:

- Accurate prioritization of emerging risks

- Improved discussion quality during committee reviews

- Consistent, evidence-based decision-making aligned with enterprise objectives
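As a rough illustration of what a factor-level view can look like, the sketch below assumes a simple linear risk score, where each factor's contribution is its weight multiplied by how far the input sits from a baseline value. The feature names, weights, and baseline are hypothetical and chosen only for illustration; more complex models generally need a dedicated attribution method.

```python
def explain_linear_score(weights, baseline, features):
    """Return (factor, contribution) pairs, largest absolute impact first.

    Assumes a linear score: contribution = weight * (value - baseline).
    """
    contributions = {
        name: weights[name] * (features[name] - baseline[name])
        for name in weights
    }
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)


# Hypothetical model parameters and one flagged transaction.
WEIGHTS = {"amount_zscore": 0.8, "new_device": 1.5, "account_age_years": -0.3}
BASELINE = {"amount_zscore": 0.0, "new_device": 0.0, "account_age_years": 5.0}
flagged = {"amount_zscore": 2.5, "new_device": 1.0, "account_age_years": 1.0}

for factor, impact in explain_linear_score(WEIGHTS, BASELINE, flagged):
    print(f"{factor}: {impact:+.2f}")
```

A ranked list like this gives reviewers a starting point for asking whether the largest drivers reflect genuine exposure or a false signal.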

2. Detecting Hidden Risks Early

XAI highlights unusual patterns and inconsistent results that may indicate weakening controls or rising exposure. Governance teams can investigate causes earlier and initiate action before issues appear through losses or audit findings.

Using XAI supports:

- Earlier visibility into control gaps

- Clearer root cause investigation

- Faster corrective action
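One widely used way to quantify a shift in model behaviour is the population stability index (PSI), which compares a current distribution against a reference one. The article does not name PSI, so treat it as one possible monitoring statistic alongside explanations. The sketch below assumes scores have already been grouped into bins; the bin proportions and the 0.10 threshold are illustrative assumptions, not regulatory values.

```python
import math


def population_stability_index(expected, actual, eps=1e-6):
    """PSI between two binned distributions given as lists of proportions."""
    if len(expected) != len(actual):
        raise ValueError("both distributions must use the same bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        psi += (a - e) * math.log(a / e)
    return psi


# Hypothetical share of risk scores per bin at validation time vs. this month.
reference = [0.25, 0.25, 0.25, 0.25]
current = [0.10, 0.20, 0.30, 0.40]

psi = population_stability_index(reference, current)
# 0.10 is a common rule-of-thumb threshold, assumed here rather than mandated.
status = "investigate" if psi > 0.10 else "stable"
print(f"PSI={psi:.3f} -> {status}")
```

The same comparison can be run on the distribution of top explanatory factors, so a committee sees not only that behaviour changed but which drivers changed.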

3. Ensuring AI Governance and Compliance

Explainable outputs provide traceable evidence showing how automated decisions follow approved policies and regulatory requirements. Oversight bodies can confirm adherence, document exceptions, and demonstrate control effectiveness during audits.

Applying explainable AI governance enables:

- Verifiable policy compliance

- Defensible audit documentation

- Consistent governance enforcement

4. Aligning AI Insights with Strategic Objectives

XAI enables risk committees to interpret system alerts in the context of the organization’s key objectives. Instead of seeing a generic risk, committees can understand how it affects important business areas.

This allows oversight teams to:

- Link risks to business priorities

- Guide mitigation based on impact

- Maintain strategy-aligned oversight

5. Strengthening Accountability and Documentation

XAI shows why a decision was made and who approved it. For example, if a high-risk alert is acted on, the system records the reason and the person responsible. This makes it easy to check actions and explain them to auditors or regulators.

Using XAI helps:

- Track who made each decision

- Keep clear records of actions

- Provide proof for audits and reviews

Essential AI Governance Frameworks Explainable Systems Align With

The core principles of explainable AI (transparency, fairness, and accountability) support alignment with several governance frameworks, such as:

1. DORA (Digital Operational Resilience Act)

DORA requires financial entities in the EU to test and document the operational resilience of their ICT systems, which extends to the AI systems that support critical functions. Explainable models help risk committees demonstrate resilience by showing how decision logic behaves during market volatility or operational disruption. Without explainability, proving resilience becomes speculative.

2. SR 11-7 (Federal Reserve Guidance on Model Risk Management)

The Federal Reserve expects firms to validate models continuously and document their limitations. Explainable AI produces the audit trail SR 11-7 demands: what the model considered, how it weighted inputs, where it might fail. This turns validation from a retrospective exercise into an ongoing oversight capability.

3. GDPR Article 22 (Automated Individual Decision-Making)

Article 22 restricts decisions based solely on automated processing that significantly affect individuals, and related GDPR provisions require meaningful information about the logic involved. This is often summarized as a "right to explanation". For risk committees overseeing credit, fraud, or compliance models, this means providing clear reasoning on demand. XAI systems generate those explanations natively, reducing legal exposure while maintaining operational speed.

4. NYDFS Cybersecurity Regulation Part 500

New York's Department of Financial Services holds covered entities accountable for cybersecurity risk, including risk introduced by third-party service providers. Explainable AI allows committees to assess vendor models with the same rigor applied to internal systems, ensuring that outsourced risk decisions remain defensible under regulatory review.

Operational Impact of Explainable AI in Enterprise Risk Management

For enterprise risk committees, explainable AI improves the ability to act on decision signals without sacrificing oversight quality. Here are a few strong examples of what teams gain across daily risk operations.

- Faster Escalation of High-Risk Decisions: Instead of waiting for data teams to interpret flagged cases, committee members receive explanations at the moment a decision requires review.

- Reduced Reliance on Technical Intermediaries: Risk leaders can evaluate model outputs directly without needing engineers to translate results. Explanations are generated in business terms, not code, so oversight happens where decisions are made.

- Improved Accuracy in Audit Responses: When regulators or auditors request documentation, explainable systems provide complete decision trails automatically.

- Earlier Detection of Model Drift or Bias: Explainability points out shifts in model behavior before they result in compliance failures.

- Stronger Accountability in Vendor Risk Management: Explainable systems force vendors to document their decision logic, giving committees the leverage to demand transparency and hold external providers to the same standards as internal models.

Blueprint for Implementing XAI at the Committee Level

1. Establish Clear Explainability Requirements Before Model Deployment

Risk committees should define what constitutes an acceptable explanation before any AI system goes live. This includes specifying which decisions require human-readable justifications, how detailed those explanations must be, and under what conditions a model output needs additional review. Setting these standards upfront prevents retroactive compliance work.
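One way to make such requirements concrete is to write them down as data that can be checked automatically. The sketch below is a minimal, hypothetical policy table: the decision types, field names, and thresholds are all assumptions for illustration, and a real committee would set its own.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExplainabilityRequirement:
    decision_type: str
    needs_justification: bool   # must a human-readable reason be produced?
    min_factors: int            # fewest factors an explanation must list
    review_above_score: float   # scores above this go to additional review


# Hypothetical policy table agreed before go-live.
REQUIREMENTS = {
    "loan_denial": ExplainabilityRequirement("loan_denial", True, 3, 0.70),
    "fraud_flag": ExplainabilityRequirement("fraud_flag", True, 2, 0.90),
}


def check_output(decision_type, score, factors):
    """Return a list of findings for one model output (empty means compliant)."""
    req = REQUIREMENTS[decision_type]
    findings = []
    if req.needs_justification and len(factors) < req.min_factors:
        findings.append(
            f"explanation lists {len(factors)} factors, needs {req.min_factors}"
        )
    if score > req.review_above_score:
        findings.append("score exceeds threshold, route to additional review")
    return findings


print(check_output("loan_denial", 0.82, ["debt_to_income", "missed_payments"]))
```

Keeping the requirements in one table means the same definitions can be reviewed by the committee and enforced by the deployment pipeline.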

2. Integrate Explainability into Existing Model Validation Workflows

XAI doesn't require parallel governance structures. It should be embedded directly into current validation processes so that explainability becomes a standard checkpoint alongside accuracy testing. This means model risk teams evaluate both performance metrics and the quality of explanations during every validation cycle.

3. Create Role-Specific Explanation Formats for Different Stakeholders

A CISO reviewing a fraud alert needs different detail than an auditor tracing compliance evidence. Explainable systems should generate explanations tailored to the audience: high-level summaries for executives, detailed factor breakdowns for compliance teams, technical diagnostics for model validators. One-size-fits-all explanations slow decision-making instead of enabling it.
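The idea of audience-specific formats can be sketched as a single explanation record rendered in different ways. The role names, record layout, and decision identifier below are hypothetical; the point is only that every view is derived from the same underlying factors.

```python
def render_explanation(decision, role):
    """Format one decision's explanation for a given audience."""
    factors = sorted(
        decision["factors"].items(), key=lambda kv: abs(kv[1]), reverse=True
    )
    if role == "executive":
        # One line: outcome plus the single largest driver.
        return f"{decision['id']}: {decision['outcome']} (main driver: {factors[0][0]})"
    if role == "compliance":
        # Full factor breakdown, largest impact first.
        lines = [f"{decision['id']}: {decision['outcome']}"]
        lines += [f"  {name}: {impact:+.2f}" for name, impact in factors]
        return "\n".join(lines)
    if role == "validator":
        return repr(decision)  # raw record for technical diagnostics
    raise ValueError(f"unknown role: {role}")


# Hypothetical decision record.
decision = {
    "id": "TX-1001",
    "outcome": "flagged",
    "factors": {"new_device": 1.5, "amount_zscore": 2.0, "account_age_years": 1.2},
}

print(render_explanation(decision, "executive"))
print(render_explanation(decision, "compliance"))
```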

4. Build Audit Trails That Link Explanations to Specific Decisions

Every model output should carry a traceable record of the factors that influenced it. Risk committees need systems that archive explanations alongside decisions so that when a regulator asks why a loan was denied six months ago, the answer is immediately retrievable. This eliminates reconstruction efforts during audits.
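A minimal sketch of such an archive is shown below, assuming an in-memory dictionary as a stand-in for a durable, write-once store. The record fields, identifiers, and class names are hypothetical and would need to match an organization's own retention and access policies.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionRecord:
    decision_id: str
    model_version: str
    outcome: str
    factors: dict      # factor name -> contribution at decision time
    approved_by: str
    recorded_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )


class DecisionArchive:
    """In-memory stand-in for a durable, write-once store."""

    def __init__(self):
        self._records = {}

    def archive(self, record):
        if record.decision_id in self._records:
            raise ValueError("records are write-once; refusing to overwrite")
        self._records[record.decision_id] = record

    def explain(self, decision_id):
        """Retrieve the explanation exactly as stored when the decision was made."""
        return self._records[decision_id]


store = DecisionArchive()
store.archive(DecisionRecord(
    decision_id="LOAN-0042",              # hypothetical identifier
    model_version="credit-risk-v3",       # hypothetical model name
    outcome="denied",
    factors={"debt_to_income": 0.9, "missed_payments": 0.6},
    approved_by="j.doe",
))
print(store.explain("LOAN-0042").factors)
```

Storing the factors at decision time, rather than recomputing them later, matters because the model may have been retrained in the meantime.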

5. Train Members to Challenge Model Outputs Using Explanations

Explainability only improves oversight if committees know how to use it. Training should focus on recognizing when an explanation seems incomplete, when factor weights don't align with policy expectations, and when to escalate for deeper technical review. The goal is not to turn risk leaders into data scientists but to equip them with the questions that expose model weaknesses.
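The kind of question a trained reviewer might ask can be partly automated. The sketch below assumes the committee has written down, for each factor, whether policy expects it to raise or lower risk, and compares that against the weights an explanation reports. The factor names and expected directions are hypothetical.

```python
# Hypothetical policy expectations: +1 means the factor should raise risk,
# -1 means it should lower risk.
POLICY_DIRECTION = {"missed_payments": +1, "account_age_years": -1, "income": -1}


def challenge_explanation(weights, expected=POLICY_DIRECTION):
    """Return questions a reviewer might raise about reported factor weights."""
    questions = []
    for factor, direction in expected.items():
        if factor not in weights:
            questions.append(f"{factor}: expected by policy but missing from explanation")
        elif weights[factor] * direction < 0:
            questions.append(f"{factor}: weight sign contradicts policy expectation")
    return questions


# Weights reported by a hypothetical model explanation.
reported = {"missed_payments": 0.9, "account_age_years": 0.4}
for question in challenge_explanation(reported):
    print(question)
```

A non-empty result is a prompt for escalation and discussion, not proof that the model is wrong.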

Conclusion

Enterprise risk committees must act with clarity and confidence as AI influences critical decisions. Explainable AI allows committees to understand the reasoning behind outcomes, identify anomalies, and confirm that decisions align with internal standards and regulatory expectations.

By revealing how models reach conclusions, XAI reduces blind spots, supports accurate assessment of risk, and strengthens the committee’s ability to make informed judgments. Clear explanations also help maintain accountability and document decisions in a way that regulators can review.

To get real value from automated solutions, enterprise risk committees should set clear expectations for any XAI platform. The platform should provide:

- Evidence quality and traceability

- Regulatory defensibility

- Integration with governance systems

- Scalability across business units

- Operational usability for non-technical stakeholders
