
Introduction

In multi-framework compliance, accuracy depends less on detection and more on justification.

Organizations today use regulatory compliance AI to handle growing regulatory scope. Many also adopt AI compliance tools to reduce manual effort. These systems can scan regulations quickly and suggest mappings across frameworks.

The real challenge appears after the mapping is created.

Compliance teams are expected to defend every decision. Auditors ask why two rules were linked. Risk teams ask what logic was applied. Regulators ask whether the decision can be explained clearly. If an AI system cannot answer these questions, trust breaks down.

This is why AI explainability matters.

Explainable AI focuses on making decisions understandable. It allows teams to see how and why a regulatory requirement was mapped. This level of machine learning transparency helps teams explain artificial intelligence decisions using simple language that aligns with regulatory expectations.

In regulated environments, this clarity is essential. Expectations around AI transparency in regulated industries continue to rise. Accuracy alone is no longer enough. Organizations are expected to show reasoning, not just results.

By using trustworthy AI with explainability, compliance teams gain confidence in automation. They can review mappings, validate logic, and respond to audit questions without redoing the work manually.

This approach also aligns with responsible AI frameworks, which emphasize accountability and interpretability. When AI can explain itself, compliance becomes easier to manage and easier to defend.

This blog explains how XAI improves accuracy in regulatory compliance by bringing clarity to multi-framework regulatory mapping. It focuses on practical understanding rather than technical complexity.

Why Traditional Regulatory Mapping Fails at Scale

As organizations expand across regions and regulations, regulatory mapping becomes harder to manage. Teams are no longer dealing with one framework at a time. They are aligning multiple rulebooks that were never designed to work together.

To handle this complexity, many organizations turn to regulatory compliance AI and standard AI compliance tools. These tools promise faster mapping and reduced manual effort. In practice, they introduce new risks that are less visible but more damaging.

Text matching is not the same as regulatory understanding

Many first-generation AI compliance tools link requirements by matching words and phrases. Two rules that share vocabulary are mapped together even when they impose different obligations, while rules that express the same obligation in different terms are missed. The output looks complete, but it reflects wording rather than regulatory intent.
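The weakness of word-level matching is easy to reproduce. The sketch below scores requirement pairs by the Jaccard overlap of their words. This is a stand-in for simple text matching, not any specific product's method, and the three requirement texts are invented for illustration.

```python
import re

def words(text: str) -> set[str]:
    """Lowercase alphabetic tokens; a deliberately naive tokenizer."""
    return set(re.findall(r"[a-z]+", text.lower()))

def jaccard(a: str, b: str) -> float:
    """Share of distinct words two requirements have in common (0.0 to 1.0)."""
    wa, wb = words(a), words(b)
    union = wa | wb
    return len(wa & wb) / len(union) if union else 0.0

# Hypothetical requirement texts, written for illustration only.
encrypt_req = "Encrypt customer records stored on company servers."
backup_req = "Back up customer records stored on company servers."      # different control
crypto_req = "Client data at rest must be protected with cryptography."  # same intent

print(round(jaccard(encrypt_req, backup_req), 2))  # 0.67: strong match, wrong obligation
print(jaccard(encrypt_req, crypto_req))            # 0.0: no match, equivalent obligation
```

Any threshold on this score would link encryption to backups and miss the cryptography requirement entirely: one incorrect mapping and one missing link from the same method.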

Machine learning improves speed but hides reasoning

More advanced regulatory compliance AI systems use machine learning to understand language better. They analyze patterns and generate similarity scores. While this improves coverage, it creates a new challenge.

The system provides an answer, but not an explanation.

Without AI explainability, compliance teams cannot see how a decision was made. They cannot verify whether the logic aligns with regulatory expectations. This lack of machine learning transparency reduces trust and slows adoption.

Audits demand justification, not confidence scores

Regulators and auditors do not accept unexplained outputs. They expect organizations to justify how requirements were interpreted and mapped. When teams cannot explain artificial intelligence decisions clearly, audit discussions become longer and more difficult.

This is especially critical as expectations around AI transparency in regulated industries increase. Regulations are no longer focused only on outcomes. They also focus on decision logic.
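To make the difference between a confidence score and a justification concrete, here is a minimal sketch of a mapping record that carries its own rationale. The `MappingDecision` fields, the `audit_gaps` helper, and the requirement IDs are hypothetical, not a standard schema.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class MappingDecision:
    """One link between requirements in two frameworks (hypothetical schema)."""
    source_id: str
    target_id: str
    score: float                    # model confidence: recorded, but not a justification
    rationale: str = ""             # plain-language reason a reviewer can check
    evidence: tuple[str, ...] = ()  # concepts or text spans the decision relied on

def audit_gaps(decision: MappingDecision) -> list[str]:
    """List what an auditor could not verify from this record alone."""
    gaps = []
    if not decision.rationale.strip():
        gaps.append("missing rationale")
    if not decision.evidence:
        gaps.append("no supporting evidence")
    return gaps

score_only = MappingDecision("FW-A 4.2", "FW-B 12.1", 0.91)
justified = MappingDecision(
    "FW-A 4.2", "FW-B 12.1", 0.91,
    rationale="Both require encryption of stored customer data.",
    evidence=("encryption", "data at rest"),
)
print(audit_gaps(score_only))  # ['missing rationale', 'no supporting evidence']
print(audit_gaps(justified))   # []
```

Both records carry the same 0.91 score; only the second one answers the auditor's question of why the link exists.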

Scaling without trust creates operational risk

As regulations evolve, mappings must be updated. Traditional systems struggle to adapt. Static rules break. Black-box models cannot explain changes. Teams are forced back into manual reviews.

This is why many automation initiatives stall. The technology scales, but trust does not. Accuracy becomes difficult to measure. Governance becomes harder to maintain.

These limitations explain why explainable AI is gaining attention in compliance programs. It addresses the core failure point of traditional mapping systems. It brings visibility into decisions and allows teams to review and refine mappings with confidence.

Next, we will examine how XAI works in practice and why it improves accuracy in multi-framework regulatory mapping.

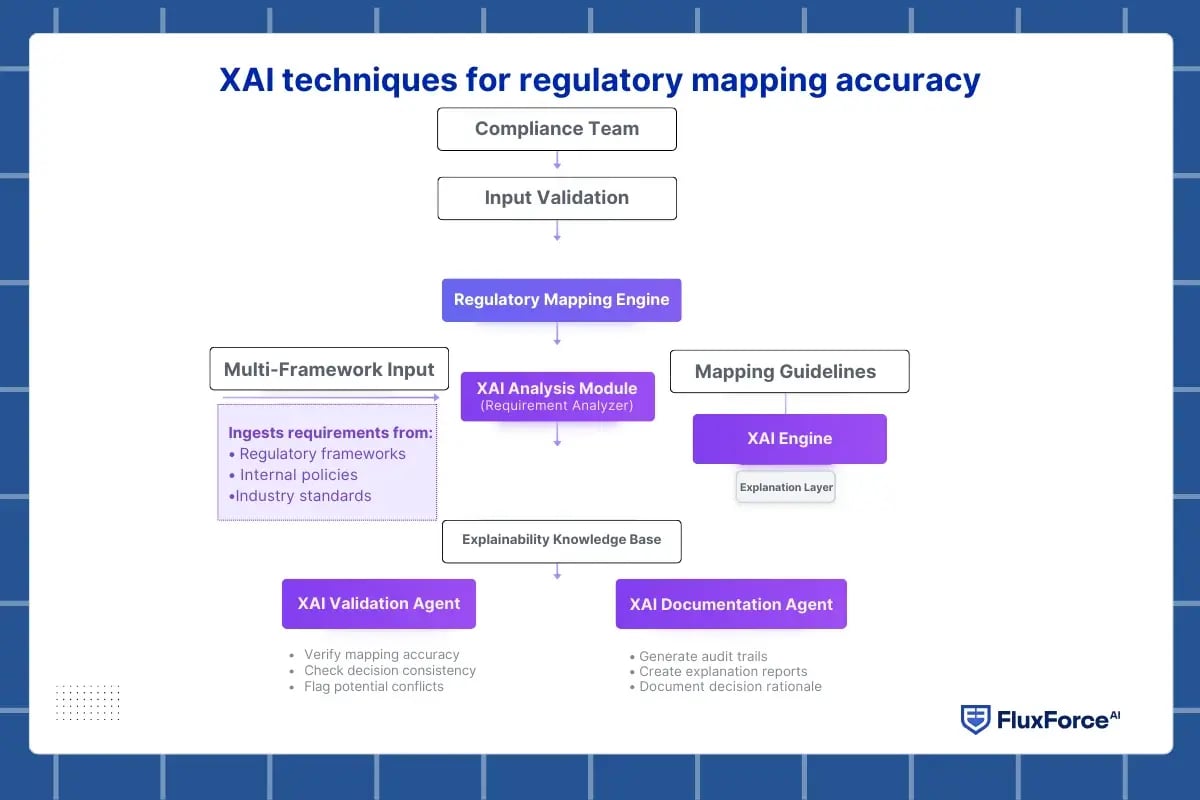

XAI techniques for regulatory mapping accuracy

Regulatory mapping is only as effective as the decisions teams can validate and defend. Accuracy is not measured by the number of matches alone. It is measured by how clearly each decision can be reviewed, corrected, and repeated. Explainable AI enables compliance teams to achieve this by linking AI outputs directly to rationale and intent.

Here we outline how XAI improves accuracy during real compliance operations.

Accuracy improves when explanations drive feedback

In operational workflows, compliance teams review AI-generated mappings before approval. When reasoning is hidden, teams spend time guessing or rejecting mappings, slowing the process.

With AI explainability, each mapping includes decision logic. Teams can see which obligations were matched and why. This machine learning transparency reduces review time and allows early corrections, improving overall quality.
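One simple way to attach decision logic to a similarity score is to decompose it. For cosine similarity over word counts, the score is a sum over shared terms, so each term's share can be reported exactly. This is a minimal sketch assuming a bag-of-words model; the stopword list and example texts are hypothetical, and production systems typically use richer embeddings with other attribution methods.

```python
import math
import re
from collections import Counter

# Hypothetical stopword list; a real system would use a curated one.
STOPWORDS = {"a", "an", "and", "at", "be", "must", "of", "the", "to"}

def term_counts(text: str) -> Counter:
    """Word counts with stopwords removed."""
    return Counter(t for t in re.findall(r"[a-z]+", text.lower()) if t not in STOPWORDS)

def explain_similarity(a: str, b: str, top_k: int = 3):
    """Return the cosine similarity and the shared terms that produced it.

    cosine = sum(a_t * b_t) / (|a| * |b|), so each shared term t contributes
    a_t * b_t / (|a| * |b|) and the contributions sum exactly to the score.
    """
    va, vb = term_counts(a), term_counts(b)
    norm = math.sqrt(sum(c * c for c in va.values())) * math.sqrt(sum(c * c for c in vb.values()))
    if norm == 0:
        return 0.0, []
    contributions = {t: va[t] * vb[t] / norm for t in va.keys() & vb.keys()}
    ranked = sorted(contributions.items(), key=lambda kv: (-kv[1], kv[0]))
    return sum(contributions.values()), ranked[:top_k]

score, factors = explain_similarity(
    "Encrypt customer data at rest.",
    "Customer data must be encrypted at rest.",
)
print(round(score, 2), factors)
# 0.75 [('customer', 0.25), ('data', 0.25), ('rest', 0.25)]
```

The explanation shows something a bare 0.75 hides: "encrypt" and "encrypted" were never matched, so the score rests on generic terms rather than the encryption obligation itself. A reviewer can catch that before approval.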

XAI techniques for regulatory mapping accuracy reduce false matches

Traditional automation produces two types of errors: incorrect mappings and missing links. Both create compliance risk.

Explainable AI systems highlight the concepts and intent behind each mapping. Teams can quickly remove incorrect links and identify gaps. Removing false matches improves precision, and filling gaps improves recall; both are needed for accurate mapping in multi-framework environments.
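Precision and recall can be measured directly against reviewer decisions. The sketch below treats each mapping as a (source, target) pair; the control IDs are hypothetical.

```python
def precision_recall(predicted: set[tuple[str, str]], approved: set[tuple[str, str]]):
    """Precision: share of proposed links that reviewers approved.
    Recall: share of reviewer-approved links that the system proposed."""
    true_pos = len(predicted & approved)
    precision = true_pos / len(predicted) if predicted else 0.0
    recall = true_pos / len(approved) if approved else 0.0
    return precision, recall

# Hypothetical control IDs.
proposed = {("A-1", "B-7"), ("A-2", "B-3"), ("A-3", "B-9"), ("A-4", "B-1")}
approved = {("A-1", "B-7"), ("A-2", "B-3"), ("A-5", "B-2")}
print(precision_recall(proposed, approved))  # (0.5, 0.6666666666666666)
```

Dropping the two incorrect links raises precision to 1.0; adding the missed ("A-5", "B-2") link raises recall to 1.0. Explanations make both corrections faster to find.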

XAI methods for improving AI decision accuracy support audits

Audit readiness requires traceable decisions. Auditors expect to understand why a requirement was mapped to another. Confidence scores alone are insufficient.

AI model interpretability metrics measure the consistency and reliability of explanations. Teams can confirm whether mappings are repeatable across similar cases. This is how XAI methods for improving AI decision accuracy strengthen operational compliance and align with audit expectations.
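The text does not name a specific metric, so as one possible example, the sketch below scores explanation consistency as the average overlap between the top factors cited for pairs of near-identical requirements. The metric choice and the factor lists are assumptions for illustration.

```python
def topk_overlap(factors_a: list[str], factors_b: list[str]) -> float:
    """Jaccard overlap of two explanations' top factors (1.0 = identical)."""
    a, b = set(factors_a), set(factors_b)
    return len(a & b) / len(a | b) if a | b else 1.0

def explanation_consistency(pairs) -> float:
    """Mean top-factor overlap across pairs of similar cases."""
    scores = [topk_overlap(a, b) for a, b in pairs]
    return sum(scores) / len(scores) if scores else 0.0

# Hypothetical explanations for two pairs of near-identical requirements.
pairs = [
    (["encryption", "data at rest", "customer data"],
     ["encryption", "data at rest", "customer data"]),
    (["access logging", "audit trail", "retention"],
     ["access logging", "retention", "backups"]),
]
print(explanation_consistency(pairs))  # 0.75
```

A low score flags cases where similar requirements were mapped for different reasons, which is exactly what an auditor would question.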

XAI for multi-framework regulatory mapping ensures consistency

Regulations evolve constantly. New rules, updates, and amendments create drift if mappings are not consistent.

Explainable AI for multi-framework regulatory mapping preserves decision paths. Teams can apply the same logic to new requirements and frameworks, ensuring consistent accuracy over time. Repeatable logic also builds trust with regulators and internal reviewers.

Responsible AI strengthens long-term mapping accuracy

Accuracy is not static. To maintain it, teams need transparency, monitoring, and accountability.

By following responsible AI frameworks, organizations ensure that XAI improvements are sustainable. Teams can detect biases, track performance, and maintain accuracy as regulations change. This supports trustworthy AI with explainability and positions compliance operations for long-term success.

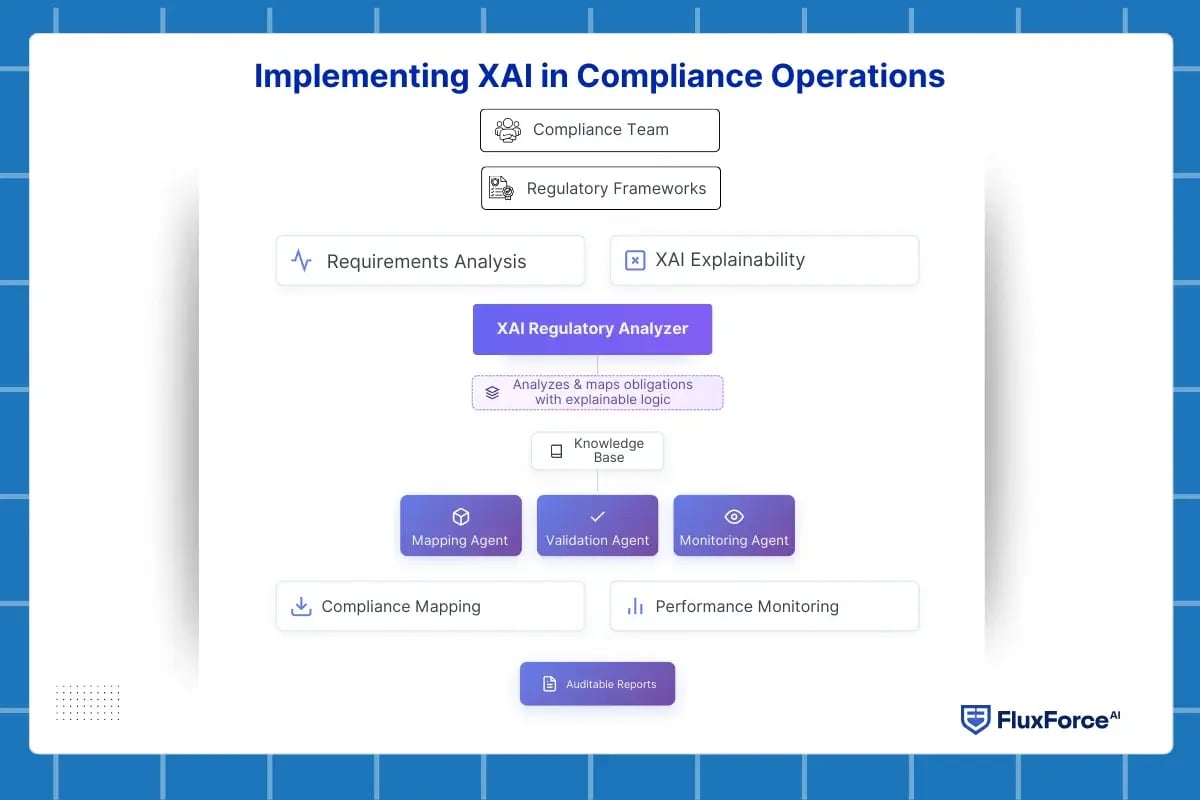

Implementing XAI in Compliance Operations

Successfully adopting explainable AI requires more than technology. It requires operational alignment, workflow integration, and team readiness. Accuracy in regulatory mapping improves when AI supports decision-making, not replaces it.

Here we will explain how fintech teams can embed XAI into daily compliance operations.

Start with understanding regulatory obligations

The first step is clarity. Compliance teams must define obligations across frameworks and capture context for each rule.

AI systems work best when obligations are structured and tagged. Using AI for regulatory compliance, teams can automate initial mapping while keeping human oversight for critical decisions.
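Structured, tagged obligations make both steps straightforward. In this sketch, initial mapping proposes cross-framework candidates that share a tag, and anything marked critical or scored below a cut-off goes to a human. The `Obligation` fields, the tags, the 0.8 threshold, and the routing labels are all hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Obligation:
    """A single tagged requirement (hypothetical structure)."""
    framework: str
    ref: str
    text: str
    tags: frozenset[str]
    critical: bool = False

def candidate_targets(source: Obligation, targets: list[Obligation]) -> list[Obligation]:
    """Initial mapping: targets in other frameworks sharing at least one tag."""
    return [t for t in targets if t.framework != source.framework and source.tags & t.tags]

def route(obligation: Obligation, score: float, threshold: float = 0.8) -> str:
    """Critical or low-confidence mappings always go to a human reviewer."""
    if obligation.critical or score < threshold:
        return "human_review"
    return "auto_suggest"

src = Obligation("FW-A", "4.2", "Encrypt customer data at rest.",
                 frozenset({"encryption", "customer-data"}), critical=True)
targets = [
    Obligation("FW-B", "12.1", "Protect stored client data with cryptography.",
               frozenset({"encryption"})),
    Obligation("FW-B", "15.3", "Publish an annual report.", frozenset({"reporting"})),
]
print([t.ref for t in candidate_targets(src, targets)])  # ['12.1']
print(route(src, score=0.95))                             # human_review
```

Because the source obligation is tagged as critical, it is routed to a person even with a high score; automation only narrows the candidates.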

Integrate explainability into workflows

Deploying XAI techniques for regulatory mapping accuracy requires visible AI reasoning. Teams should:

- Review AI decisions through dashboards

- See key factors that influenced mappings

- Validate alignment with intent, not just keywords

This ensures trustworthy AI with explainability and reduces operational friction during reviews.
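A dashboard is beyond the scope of a short example, but the review item behind it can be sketched. The function below formats one mapping with its key factors and warns when the two requirements carry different intent labels. It assumes each requirement has already been tagged with an intent; the labels, IDs, and factor weights are hypothetical.

```python
def review_card(source_ref, target_ref, score, factors, source_intent, target_intent):
    """Format one mapping for a reviewer and flag keyword-only matches."""
    lines = [f"{source_ref} -> {target_ref}  (score {score:.2f})"]
    lines += [f"  factor: {name} (+{weight:.2f})" for name, weight in factors]
    if source_intent != target_intent:
        lines.append(f"  WARNING: intent differs ({source_intent} vs {target_intent})")
    return "\n".join(lines)

card = review_card("FW-A 4.2", "FW-B 9.4", 0.67,
                   [("customer", 0.17), ("servers", 0.17)],
                   "encryption", "backup")
print(card)
```

Here the high score comes from shared nouns, and the intent warning tells the reviewer that the keywords match but the obligations do not.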

Pilot small, refine continuously

Start with one regulatory framework or a high-priority use case. Monitor how the team interacts with AI explanations:

- Which mappings are easy to approve?

- Which require clarification or retraining?

Iterative refinement strengthens XAI methods for improving AI decision accuracy, building confidence before scaling to multiple frameworks.
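A pilot log can answer the two questions above directly. This sketch groups reviewer outcomes by model-confidence band; the band edges (0.5, 0.8), the outcome labels, and the log entries are made up for illustration.

```python
from collections import Counter, defaultdict

def outcomes_by_band(reviews, low=0.5, high=0.8):
    """Count reviewer outcomes per model-confidence band."""
    table = defaultdict(Counter)
    for score, outcome in reviews:
        band = "high" if score >= high else "medium" if score >= low else "low"
        table[band][outcome] += 1
    return {band: dict(counts) for band, counts in table.items()}

# Hypothetical pilot log: (model score, reviewer outcome).
pilot = [(0.92, "approved"), (0.88, "approved"), (0.85, "clarify"),
         (0.71, "clarify"), (0.64, "rejected"), (0.42, "rejected")]
print(outcomes_by_band(pilot))
# {'high': {'approved': 2, 'clarify': 1}, 'medium': {'clarify': 1, 'rejected': 1}, 'low': {'rejected': 1}}
```

A band where most items are approved may warrant lighter review; a band dominated by "clarify" points to explanations or training data that need work before scaling.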

Scale while maintaining transparency

Regulators want clear answers about why decisions were made. As mapping extends to more frameworks, explainable AI keeps that reasoning attached to each mapping instead of leaving teams to reconstruct it later. Clear explanations reduce follow-up questions, speed up reviews, and make audit work easier. This lowers the hidden costs of compliance automation in financial services.

Embed XAI in team culture

Teams should:

- Be trained to understand AI explanations

- Use AI insights to inform decisions, neither accepting nor overriding them blindly

- Provide feedback to improve models continuously

This operational approach aligns with responsible AI frameworks and ensures long-term success.
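Reviewer feedback can flow back into the system in several ways. A simple one, shown here as a stand-in for full retraining, is re-tuning the score cut-off so that automated suggestions agree as closely as possible with reviewer decisions (measured by F1). The candidate thresholds and feedback entries are hypothetical.

```python
def best_threshold(feedback, candidates=(0.5, 0.6, 0.7, 0.8, 0.9)):
    """Pick the score cut-off whose accept/reject split best matches reviewers (F1).

    feedback: list of (model_score, reviewer_approved) pairs.
    """
    best_t, best_f1 = None, -1.0
    for t in candidates:
        tp = sum(1 for s, ok in feedback if s >= t and ok)      # accepted and approved
        fp = sum(1 for s, ok in feedback if s >= t and not ok)  # accepted but rejected
        fn = sum(1 for s, ok in feedback if s < t and ok)       # filtered out but approved
        f1 = 2 * tp / (2 * tp + fp + fn) if tp else 0.0
        if f1 > best_f1:
            best_t, best_f1 = t, f1
    return best_t, best_f1

# Hypothetical reviewer feedback: (model score, approved?)
feedback = [(0.95, True), (0.85, True), (0.75, False),
            (0.65, True), (0.55, False), (0.45, False)]
print(best_threshold(feedback))  # (0.6, 0.8571428571428571)
```

Re-running this as new feedback arrives keeps the cut-off aligned with how reviewers actually judge mappings.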

Monitor impact and refine strategy

Measure performance through operational KPIs:

- Reduced errors in mapping

- Faster review cycles

- Consistent alignment across frameworks

This demonstrates how XAI improves accuracy in regulatory compliance while building confidence in automated workflows.
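The three KPIs above can be computed from an ordinary review log. In this sketch each entry records whether the mapping was correct, how long review took, and whether re-running the mapping gave the same result; that last field is an assumed proxy for consistency. The baseline and pilot numbers are invented for illustration, not measured results.

```python
from statistics import median

def kpis(reviews):
    """Each review is (correct, review_minutes, consistent_on_rerun)."""
    n = len(reviews)
    return {
        "error_rate": sum(1 for ok, _, _ in reviews if not ok) / n,
        "median_review_minutes": median([m for _, m, _ in reviews]),
        "consistency_rate": sum(1 for _, _, same in reviews if same) / n,
    }

# Invented example logs, for illustration only.
baseline = [(True, 30, True), (False, 45, False), (True, 40, True), (False, 50, False)]
pilot = [(True, 12, True), (True, 15, True), (False, 20, True), (True, 10, False)]
print(kpis(baseline))  # {'error_rate': 0.5, 'median_review_minutes': 42.5, 'consistency_rate': 0.5}
print(kpis(pilot))     # {'error_rate': 0.25, 'median_review_minutes': 13.5, 'consistency_rate': 0.75}
```

Tracking the same three numbers before and after adopting explanations gives a like-for-like comparison.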

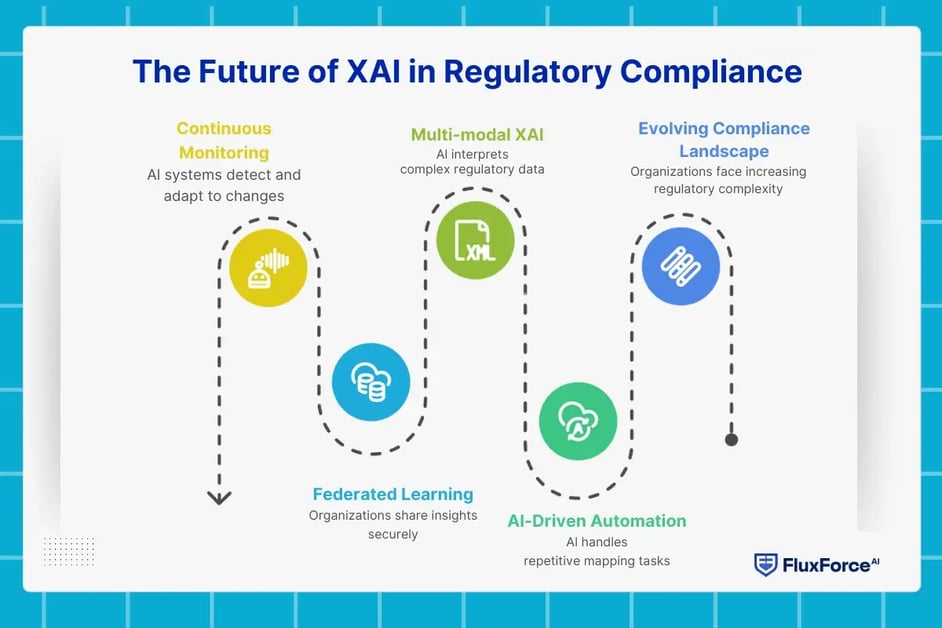

The Future of XAI in Regulatory Compliance

The compliance landscape is evolving rapidly. Organizations are handling more regulations, faster updates, and higher scrutiny from auditors. Explainable AI is no longer optional; it is central to achieving accurate, traceable, and auditable regulatory mapping.

AI-driven automation for routine mapping

By 2027, AI for regulatory compliance is likely to handle most repetitive mapping tasks, freeing human teams to focus on validation and strategic oversight. XAI and AI model transparency for regulators ensure that even automated decisions remain fully reviewable, traceable, and auditable.

This evolution strengthens XAI techniques for regulatory mapping accuracy and reduces manual workload while maintaining control.

Multi-modal XAI interprets complex regulatory data

Regulations increasingly include tables, diagrams, and structured content. Explainable AI for regulatory compliance will need to interpret these multi-modal inputs, enabling more accurate and auditable mappings.

Teams can verify how AI links obligations across formats, reducing errors and ensuring alignment with regulatory intent.

Federated learning supports secure cross-organization insights

Sharing compliance intelligence without exposing sensitive data is essential. Responsible AI and regulatory mapping strategies will adopt federated learning. Organizations can collaboratively improve AI models while maintaining data confidentiality, promoting trustworthy AI with explainability.

Continuous monitoring and adaptive AI

Future compliance requires AI systems that detect gaps and adapt to regulatory changes automatically. XAI methods for improving AI decision accuracy allow teams to identify weak mappings, retrain models, and maintain high precision over time.

This ensures organizations stay ahead of regulatory updates and minimize compliance risk.
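Adapting to regulatory change starts with knowing which mappings a change affects. This sketch fingerprints each requirement's whitespace-normalized text and flags mappings whose source requirement changed or disappeared so they can be re-reviewed. The requirement references and texts are hypothetical. Newly added requirements are not flagged here and would need a separate check.

```python
import hashlib

def fingerprint(text: str) -> str:
    """Stable fingerprint of a requirement's text, ignoring case and spacing."""
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

def stale_mappings(old_texts, new_texts, mappings):
    """Return (source_ref, target) mappings whose source changed or was removed."""
    changed = {
        ref for ref, text in old_texts.items()
        if ref not in new_texts or fingerprint(new_texts[ref]) != fingerprint(text)
    }
    return [m for m in mappings if m[0] in changed]

old = {"4.2": "Encrypt customer data at rest.", "4.3": "Log all administrator access."}
new = {"4.2": "Encrypt customer data at rest and in transit.",
       "4.3": "Log  all administrator access."}  # spacing-only edit
maps = [("4.2", "FW-B 12.1"), ("4.3", "FW-B 14.0")]
print(stale_mappings(old, new, maps))  # [('4.2', 'FW-B 12.1')]
```

Storing fingerprints alongside each mapping lets a monitoring job detect substantive amendments without flagging cosmetic reformatting.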

Conclusion

Accurate compliance is built on clarity, not just rules. Explainable AI ensures every mapping is transparent, auditable, and defensible. With AI explainability, XAI methods for improving AI decision accuracy, and responsible AI frameworks, organizations reduce risk, accelerate reviews, and achieve consistent, multi-framework compliance. The advantage goes to those who implement XAI now.
