

Introduction

The capabilities of Artificial Intelligence (AI) in document fraud detection are increasingly being questioned by regulators. In banks, most AI models can identify anomalies in documents, such as unusual fonts, inconsistent sizes, or spacing errors, but very few explain the reasoning behind their decisions.

Fraudsters are increasingly using AI to slip altered documents past these detection systems. According to industry reports, banks that rely solely on standard AI have seen detection accuracy fall by 20–30% compared with the early years of adoption.

Conventional AI often produces alerts without clear explanation, making it difficult for investigators to validate results and slowing compliance processes. Explainable AI addresses these challenges by not only detecting suspicious documents but also providing a transparent reasoning path, highlighting the specific factors that contribute to each decision.


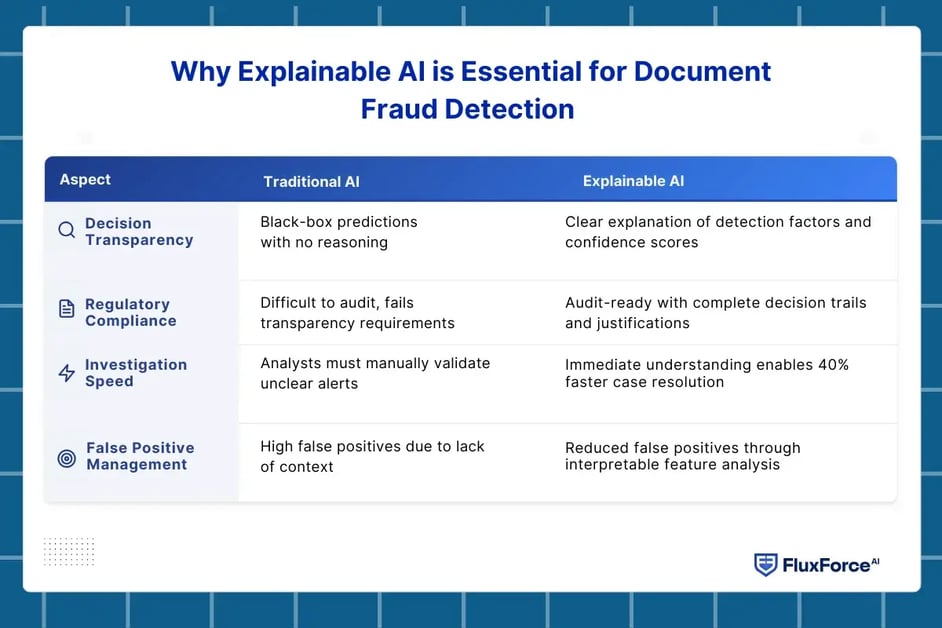

Why Is Explainable AI Essential for Document Fraud Detection?

Regulatory bodies increasingly expect transparency in document fraud detection decisions. Traditional AI models can flag documents based on learned patterns, but once fraudsters understand those patterns and use widely available tools, forged documents can pass through undetected.

Explainable AI exposes the decision logic behind each alert. Instead of relying only on font or pattern deviations, it highlights deeper inconsistencies and emerging manipulation techniques, allowing detection systems to adapt as fraud methods evolve. This enables investigators to validate decisions quickly and ensures that automated document verification aligns with regulatory expectations.

Here is how traditional AI and explainable AI differ in document fraud detection:

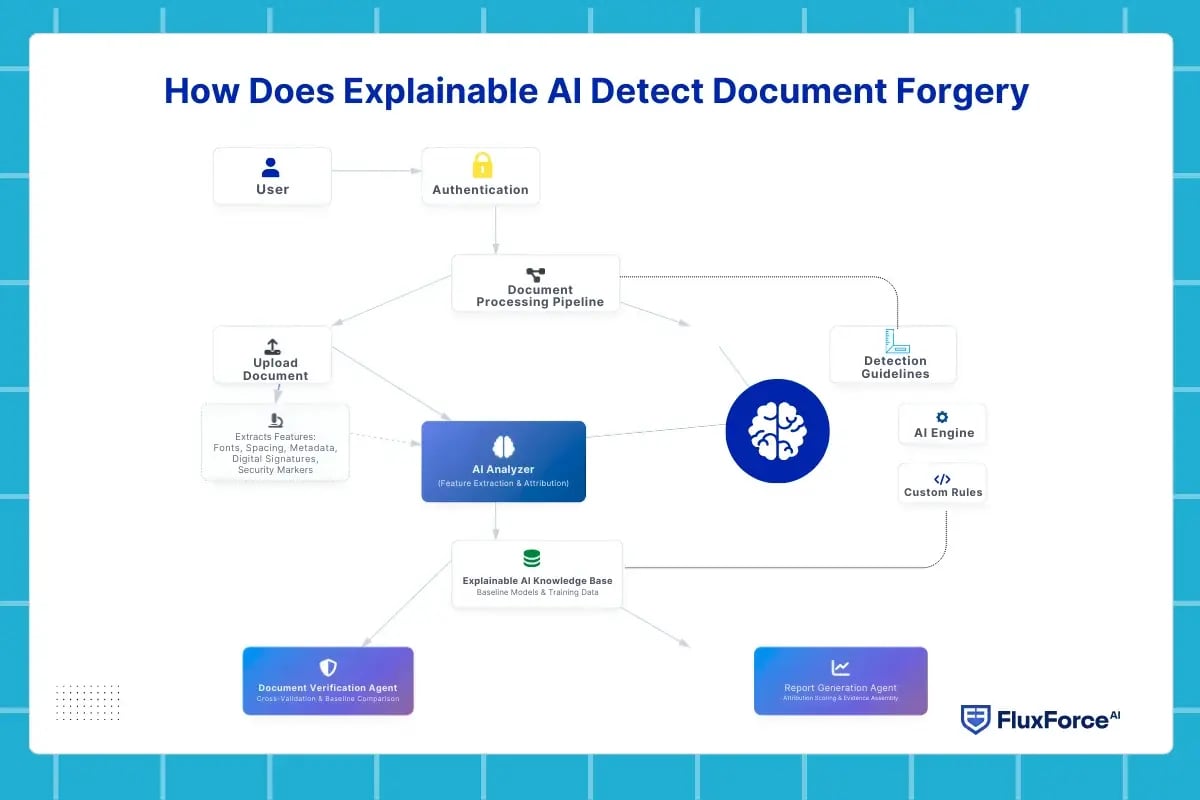

How Does Explainable AI Detect Document Forgery?

Integrating explainable AI into document forgery detection adds transparency at every stage of analysis. Instead of producing a single risk score, the system explains how different document elements contribute to an alert. Below are the key mechanisms that enable superior detection capabilities:

1. Feature-Level Analysis and Attribution

Explainable AI systems break documents into granular features such as font consistency, spacing patterns, metadata timestamps, and digital signatures. Each feature is assigned an attribution score. When document fraud detection identifies an anomaly, the system shows exactly which features influenced the decision and their relative contribution.
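To make the idea of an attribution score concrete, here is a minimal sketch. It assumes a simple linear scoring model in which each feature's contribution is its weight multiplied by an anomaly value between 0 and 1. The feature names, the weights, and the `attribute` function are hypothetical and chosen only for illustration; production systems typically learn weights from data and may use more sophisticated attribution methods.

```python
from dataclasses import dataclass

# Hypothetical weights for illustration; a real system would learn these.
FEATURE_WEIGHTS = {
    "font_consistency": 0.40,
    "spacing_pattern": 0.20,
    "metadata_timestamp": 0.25,
    "digital_signature": 0.15,
}


@dataclass
class Attribution:
    feature: str
    contribution: float  # weight * anomaly value


def attribute(anomalies: dict[str, float]) -> list[Attribution]:
    """Return per-feature contributions, largest first.

    `anomalies` maps a feature name to an anomaly value in [0, 1], where
    0 means "matches expectations" and 1 means "strongly anomalous".
    Features missing from the input are treated as not anomalous.
    """
    rows = [
        Attribution(name, weight * anomalies.get(name, 0.0))
        for name, weight in FEATURE_WEIGHTS.items()
    ]
    return sorted(rows, key=lambda r: r.contribution, reverse=True)


# Example: a document with a strong font anomaly and a moderate metadata anomaly.
doc = {"font_consistency": 0.9, "spacing_pattern": 0.1, "metadata_timestamp": 0.6}
for row in attribute(doc):
    print(f"{row.feature:20s} {row.contribution:.3f}")
```

Because the model is linear, the contributions add up to the overall score, which is what lets a reviewer see each feature's relative influence at a glance.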

2. Contextual Pattern Recognition

Rather than relying on isolated anomalies, explainable AI establishes self-updating baselines for legitimate documents and explains deviations from those norms. In KYC document verification, for example, the system identifies why a passport’s security features differ from verified samples and references specific visual markers or metadata inconsistencies that reviewers can confirm.
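One way to picture a self-updating baseline is a running mean and standard deviation for a single measurement, updated each time a document is verified as legitimate. The sketch below uses Welford's algorithm for the running statistics. The `RunningBaseline` class, the measurement name, and the three-standard-deviation threshold are assumptions for illustration, not a description of any particular product.

```python
import math
from typing import Optional


class RunningBaseline:
    """Running mean and sample standard deviation (Welford's algorithm)."""

    def __init__(self) -> None:
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, value: float) -> None:
        """Fold one verified-legitimate measurement into the baseline."""
        self.n += 1
        delta = value - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (value - self.mean)

    @property
    def std(self) -> float:
        return math.sqrt(self._m2 / (self.n - 1)) if self.n > 1 else 0.0

    def explain(self, name: str, value: float, threshold: float = 3.0) -> Optional[str]:
        """Return a reviewer-readable reason if `value` deviates from the baseline."""
        if self.std == 0.0:
            return None  # not enough variation observed to judge
        z = (value - self.mean) / self.std
        if abs(z) < threshold:
            return None
        return (
            f"{name}={value:g} is {z:+.1f} standard deviations "
            f"from the baseline mean {self.mean:.2f}"
        )


# Hypothetical measurement: character height (in pixels) in a passport data field.
baseline = RunningBaseline()
for height in [10.0, 10.2, 9.8, 10.1, 9.9]:
    baseline.update(height)

print(baseline.explain("char_height_px", 10.1))  # within the norm
print(baseline.explain("char_height_px", 11.0))  # flagged, with a reason
```

The returned string is the explanation: it names the measurement, the observed value, and how far it sits from the norm, which a reviewer can then check against the document.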

3. Multi-Modal Verification with Reasoning

AI document verification evaluates documents across visual content, digital properties, and behavioural signals. Explainable AI maintains transparency across each layer. When inconsistencies appear, the system explains how visual alterations align with metadata anomalies, forming a comprehensive fraud narrative.

4. Confidence Scoring with Justification

Explainable AI goes beyond binary outcomes by providing confidence scores supported by justification. A document may receive an 87% fraud probability with explanations such as font inconsistencies (45% contribution), metadata conflicts (30%), and digital signature failure (25%). This clarity helps investigators prioritize review actions efficiently.
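In the example above, the three percentages sum to 100%, so they are best read as each factor's share of the explanation rather than as slices of the 87% probability. A minimal sketch of that normalization follows. The `justify` function and the raw contribution values are hypothetical; it assumes non-negative raw contributions.

```python
def justify(probability: float, contributions: dict[str, float]) -> str:
    """Format a fraud probability with each factor's share of the total contribution."""
    total = sum(contributions.values())
    if total <= 0:
        raise ValueError("contributions must sum to a positive value")
    # Rank factors so the most influential one is listed first.
    ranked = sorted(contributions.items(), key=lambda kv: kv[1], reverse=True)
    parts = [f"{name} ({value / total:.0%})" for name, value in ranked]
    return f"{probability:.0%} fraud probability: " + ", ".join(parts)


# Hypothetical raw contribution scores that reproduce the shares in the text.
print(
    justify(
        0.87,
        {
            "font inconsistencies": 0.9,
            "metadata conflicts": 0.6,
            "digital signature failure": 0.5,
        },
    )
)
```

Keeping the probability and the shares separate avoids a common misreading, where a reviewer assumes the shares should add up to the probability itself.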

The Role of Explainable AI in Financial Services Governance

AI-based forged document detection in banking enables institutions to meet regulatory requirements while reducing operational friction. Here's what makes explainable AI better for KYC verification while meeting other critical governance objectives:

1. Meeting Regulatory Scrutiny Standards

Regulators increasingly require banks to justify every automated decision that affects customer onboarding or transaction approval. When explainable AI is applied to financial document verification, each fraud alert is supported by a clear decision trail.

The system records which document attributes deviated from standards, how confidence scores were calculated, and when manual review was triggered. With these structured explanations on hand, compliance teams can reduce audit preparation time by up to 50%.
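A decision trail of this kind can be pictured as one structured record per alert. The sketch below shows one possible shape, serialized to JSON so it can be stored and retrieved during an audit. The `DecisionRecord` class and all field names are assumptions for illustration, not a regulatory schema.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    document_id: str
    deviations: dict[str, str]            # attribute -> how it deviated
    confidence: float                     # final fraud confidence in [0, 1]
    confidence_inputs: dict[str, float]   # factor -> share of the explanation
    manual_review: bool                   # whether human review was triggered
    recorded_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        """Serialize with sorted keys so records are easy to compare."""
        return json.dumps(asdict(self), sort_keys=True)


# Hypothetical alert for illustration.
record = DecisionRecord(
    document_id="doc-001",
    deviations={"font": "mixed typefaces in the amount field"},
    confidence=0.87,
    confidence_inputs={"font": 0.45, "metadata": 0.30, "signature": 0.25},
    manual_review=True,
)
print(record.to_json())
```

Recording the timestamp in UTC at creation time keeps the trail consistent across systems in different regions.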

2. Operationalizing Risk Management

Effective risk management depends on visibility into emerging fraud patterns. Explainable AI highlights how new forgery techniques—such as advanced identity image manipulation or novel document alterations—bypass existing controls.

By exposing these mechanics, risk teams can adjust detection logic proactively. In one European bank, interpretable machine learning identified a coordinated document fraud scheme three weeks earlier than traditional systems, preventing €2.3 million in potential losses.

3. Building Cross-Functional Confidence

Meeting AI governance in financial services requires alignment across risk, legal, compliance, customer operations, and technology teams. Explainable AI supports this by providing role-specific visibility.

- Risk teams track pattern evolution

- Legal teams access audit-ready documentation

- Customer service teams receive clear reasons for declined applications

- Technology teams monitor model behaviour

This shared transparency reduces internal friction and supports confident AI deployment.

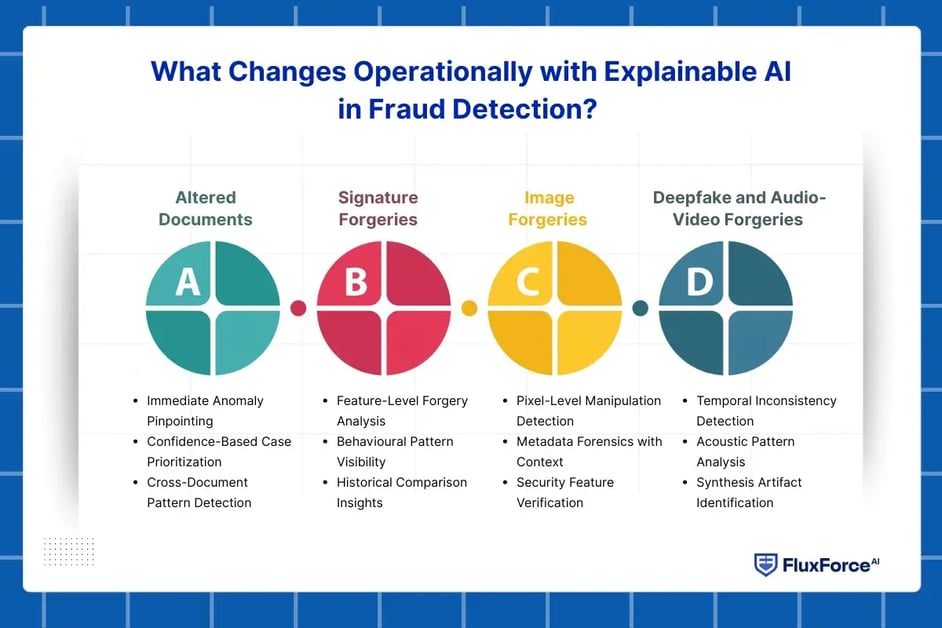

What Changes Operationally with Explainable AI in Fraud Detection?

Explainable AI changes operational workflows by replacing time-intensive manual investigations with transparent, automated insights that speed up decision-making. The effect shows up across every forgery type, from altered documents and forged signatures to manipulated images and deepfakes.

Altered Documents

- Immediate Anomaly Pinpointing: The system highlights the exact elements that trigger an alert, such as font inconsistencies, spacing deviations, timestamp conflicts, or altered security features. Investigators no longer review documents page by page. This focused review reduces investigation time by up to 60%.

- Confidence-Based Case Prioritization: The system ranks financial document fraud cases by detection confidence and attaches clear explanations to each score. Teams review high-risk documents first and route lower-confidence cases through automated or secondary workflows.

- Cross-Document Pattern Detection: The system links multiple altered documents by identifying shared modification methods or common source characteristics. Investigators uncover coordinated fraud activity earlier than manual review processes allow.
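Confidence-based prioritization can be sketched as a sort followed by a threshold split. In the example below, the `Case` class, the `triage` function, and the 0.8 review threshold are hypothetical; real thresholds would be set by each institution's risk policy.

```python
from dataclasses import dataclass


@dataclass
class Case:
    case_id: str
    confidence: float  # detection confidence in [0, 1]
    explanation: str   # reason attached to the score


def triage(cases: list[Case], review_threshold: float = 0.8) -> tuple[list[Case], list[Case]]:
    """Split cases into an investigator queue and a secondary queue.

    Both queues are ordered from highest to lowest confidence.
    """
    ranked = sorted(cases, key=lambda c: c.confidence, reverse=True)
    investigator = [c for c in ranked if c.confidence >= review_threshold]
    secondary = [c for c in ranked if c.confidence < review_threshold]
    return investigator, secondary


# Hypothetical cases for illustration.
cases = [
    Case("A", 0.55, "minor spacing deviation"),
    Case("B", 0.93, "altered security feature and timestamp conflict"),
    Case("C", 0.81, "font inconsistency in the amount field"),
]
investigator_queue, secondary_queue = triage(cases)
for case in investigator_queue:
    print(case.case_id, f"{case.confidence:.0%}", case.explanation)
```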

Signature Forgeries

- Feature-Level Forgery Analysis: The system breaks signature verification into observable elements such as stroke pressure, pen lift points, writing speed, and shape consistency. Each element directly supports the final assessment.

- Behavioural Pattern Visibility: The system explains signing behaviours that differ from verified samples, including hesitation points or inconsistent stroke speed. These patterns often indicate traced or replicated signatures.

- Historical Comparison Insights: The system compares suspect signatures with verified historical samples and highlights specific differences. Investigators use these insights to reach validation decisions faster.

Image Forgeries

- Pixel-Level Manipulation Detection: The system identifies specific image regions that show signs of editing, including cloned areas, lighting inconsistencies, compression artifacts, or resolution mismatches.

- Metadata Forensics with Context: The system examines file metadata and explains conflicts between document claims and creation details, such as software mismatches or impossible timestamp sequences.

- Security Feature Verification: The system evaluates identity document features such as holograms, microprinting, or UV patterns and explains whether they align with known issuance standards. Investigators verify these characteristics using standard tools.
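The "impossible timestamp sequences" mentioned under metadata forensics can be illustrated with two simple checks: a file cannot be modified before it was created, and a file should not exist before the date the document claims it was issued. The sketch below is a simplified illustration. The `metadata_conflicts` function and its parameter names are hypothetical, and it assumes all timestamps are already in the same timezone.

```python
from datetime import datetime


def metadata_conflicts(
    claimed_issue: datetime, file_created: datetime, file_modified: datetime
) -> list[str]:
    """Return human-readable reasons for impossible timestamp sequences."""
    reasons = []
    if file_modified < file_created:
        reasons.append(
            f"file modified ({file_modified:%Y-%m-%d}) before it was created "
            f"({file_created:%Y-%m-%d})"
        )
    if file_created < claimed_issue:
        reasons.append(
            f"file created ({file_created:%Y-%m-%d}) before the document's "
            f"claimed issue date ({claimed_issue:%Y-%m-%d})"
        )
    return reasons


# Hypothetical document: claims a 5 March issue date, but the file predates it.
for reason in metadata_conflicts(
    claimed_issue=datetime(2024, 3, 5),
    file_created=datetime(2024, 2, 1),
    file_modified=datetime(2024, 1, 15),
):
    print(reason)
```

Each returned reason names both dates involved, so an investigator can confirm the conflict directly in the file's properties.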

Deepfake and Audio-Video Forgeries

- Temporal Inconsistency Detection: The system identifies frame-level issues such as unnatural facial movement, lighting changes, or audio-video misalignment and explains how these patterns indicate synthetic content.

- Acoustic Pattern Analysis: The system highlights spectral irregularities, unnatural pauses, or frequency patterns that differ from natural speech and provides timestamps for focused review.

- Synthesis Artifact Identification: The system detects indicators common in generated media, including boundary distortions or pixel patterns that differ from camera-captured content. Investigators use these indicators to identify forgeries as techniques evolve.

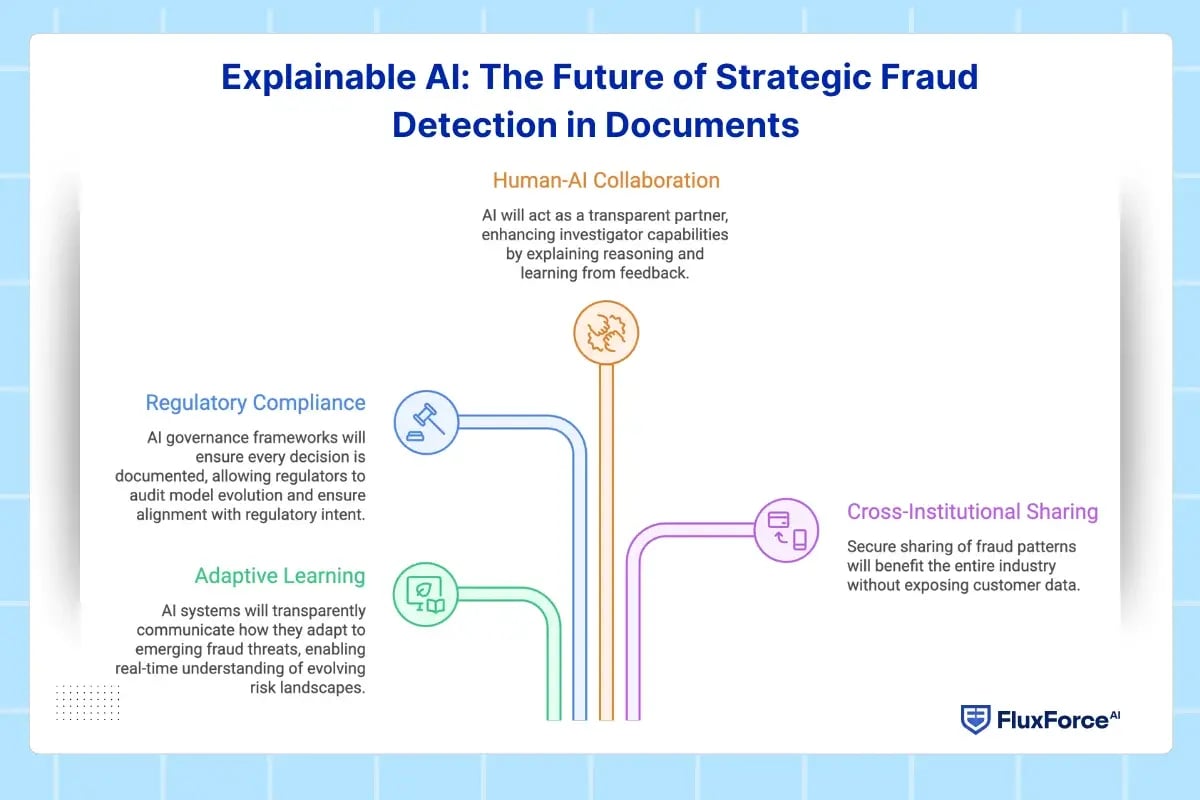

Explainable AI: The Future of Strategic Fraud Detection in Documents

1. Adaptive Learning with Transparent Evolution

Future explainable AI systems will not only detect current fraud patterns but will transparently communicate how they're adapting to emerging threats. As fraudsters develop new techniques, these systems will explain what new patterns they've learned and why, enabling compliance teams to understand evolving risk landscapes in real time.

2. Regulatory-Ready AI Governance Frameworks

The convergence of regulatory-compliant AI and automated document verification will establish new industry standards where every AI-driven decision comes with complete lineage documentation. Regulators will be able to audit not just individual decisions but entire model evolution histories, understanding how detection capabilities have adapted over time and ensuring that AI systems remain aligned with regulatory intent.

3. Collaborative Human-AI Investigation Models

Rather than replacing human expertise, explainable AI will enhance investigator capabilities by functioning as a transparent analytical partner. Systems will explain their reasoning in terms that align with human investigative frameworks, suggesting investigative pathways while acknowledging uncertainty, and learning from investigator feedback to continuously improve detection accuracy.

4. Cross-Institutional Intelligence Sharing

Explainable AI frameworks will enable secure, privacy-preserving fraud pattern sharing across financial institutions. Banks will be able to share "explanation signatures" of novel fraud techniques without exposing customer data, allowing the entire industry to benefit from collective detection intelligence while maintaining competitive differentiation and regulatory compliance.

Conclusion

Most document forgery systems only label files as “suspicious” without providing justification. That limitation creates friction during audits, customer challenges, and legal examinations, where teams must explain how conclusions were reached.

Explainable AI resolves that gap by attaching explicit reasoning to every decision. Each flagged document includes visible evidence, including font alterations, spacing irregularities, metadata conflicts, and abnormal signature structures, along with measured impact on the final risk score. Investigators receive a clear decision trail rather than a blind outcome.
