
Introduction

Banks are investing millions in AI, yet many leaders still ask one uncomfortable question. If a model cannot explain its decision, can it truly create value? Across the industry, credit teams, compliance officers, and risk managers are realizing that performance alone is not enough. The real challenge in AI in finance is not prediction. The challenge is trust.

The gap between promise and proof

Over the last few years, artificial intelligence in banking has moved from pilot projects to core operations. Models now support lending decisions, fraud reviews, customer onboarding, and pricing. But as adoption grows, so does scrutiny. Regulators want reasons behind outcomes. Boards want measurable ROI of AI in banking. Customers want fairness.

Traditional black box systems deliver scores without context. They improve speed but create new risks. When a loan is rejected or a transaction is flagged, banks must answer three questions.

Why did this happen?

Is the decision fair?

Can we prove it during an audit?

Without these answers, AI investment in banking remains fragile.

Why explainability now matters more than ever

Financial institutions operate in a world where every decision must be defended. This is why explainable AI (XAI) has become central to AI-driven digital transformation programs in banking. Explainability connects model output to business logic. It allows teams to validate decisions and demonstrate transparency in AI models.

More importantly, explainability changes how value is measured. Instead of only tracking accuracy, banks can track approval quality, reduced disputes, and faster regulatory reporting. These factors directly influence the impact of AI on banking profitability and long-term confidence.

This blog explores how explainable AI improves ROI for financial institutions and why the benefits of XAI for financial services go beyond compliance. We will connect explainability with revenue growth, cost savings, and risk reduction. The discussion focuses on practical XAI use cases in banking and clear methods to measure the return on investment of artificial intelligence initiatives.

The future of AI in finance will belong to institutions that can combine intelligence with accountability. Explainability is not an optional feature. It is the foundation for sustainable returns.

Why Traditional AI Fails to Deliver Real ROI in Banking

A model that predicts outcomes accurately but cannot explain its reasoning introduces operational friction. Credit officers hesitate to act on decisions they cannot justify. Compliance teams invest excessive effort preparing audit-ready explanations. Customer service struggles to address disputes without clear answers. These hidden costs steadily erode the ROI of AI in banking.


For example, machine learning in banking may accelerate loan approvals in theory, but if risk teams require manual checks to trust the model, what seems like automation turns into partial automation. This is why many banks question the real AI investment benefits in finance.

Where Banking Value Leaks

Three recurring challenges limit returns from artificial intelligence in banking:

- Operational rework – Unexplainable decisions require manual intervention, slowing processes and reducing efficiency.

- Regulatory exposure – Without traceable reasoning, AI for financial compliance becomes cumbersome, increasing audit costs.

- Customer distrust – Clients question outcomes when fairness and rationale are unclear, threatening retention and loyalty.

These factors directly impact cost savings with AI in banking and hinder enterprise-wide adoption.

The ROI Equation Banks Often Miss

Traditional ROI calculations focus on accuracy, throughput, or reduction in manual effort. What is often overlooked is the post-deployment cost of human oversight, audits, and dispute resolution. Without explainability, AI fraud detection in banking may flag risks effectively but leaves investigators without actionable guidance, increasing workload rather than reducing it.
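To make the overlooked costs concrete, here is a minimal Python sketch that compares a headline ROI with one that also counts oversight, audit, and dispute costs. The `AnnualFigures` class, both functions, and every number are hypothetical and chosen only for illustration; a real business case would use the bank's own cost categories.

```python
from dataclasses import dataclass


@dataclass
class AnnualFigures:
    """Hypothetical yearly figures for one AI use case, all in one currency."""
    gross_benefit: float       # savings from automation plus added revenue
    build_and_run_cost: float  # licences, infrastructure, model development
    manual_review_cost: float  # staff time spent re-checking model decisions
    audit_prep_cost: float     # effort to assemble explanations for auditors
    dispute_cost: float        # handling customer complaints and appeals


def headline_roi(f: AnnualFigures) -> float:
    """ROI that ignores post-deployment oversight costs."""
    return (f.gross_benefit - f.build_and_run_cost) / f.build_and_run_cost


def adjusted_roi(f: AnnualFigures) -> float:
    """ROI that treats review, audit, and dispute work as part of total cost."""
    total_cost = (
        f.build_and_run_cost
        + f.manual_review_cost
        + f.audit_prep_cost
        + f.dispute_cost
    )
    return (f.gross_benefit - total_cost) / total_cost


# Illustrative numbers only; not taken from any real bank.
example = AnnualFigures(
    gross_benefit=3_000_000,
    build_and_run_cost=1_000_000,
    manual_review_cost=600_000,
    audit_prep_cost=250_000,
    dispute_cost=150_000,
)

print(f"headline ROI: {headline_roi(example):.0%}")  # headline ROI: 200%
print(f"adjusted ROI: {adjusted_roi(example):.0%}")  # adjusted ROI: 50%
```

With these made-up figures, the same project falls from a 200% return to 50% once the hidden costs are counted. That gap is the one explainability aims to close.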

The Strategic Shift

Progressive banks now recognize explainability as a core component of value creation. By integrating AI risk management in finance with business objectives, they convert predictions into actionable, defensible decisions. Credit approvals accelerate, audit cycles shorten, and customer communication becomes clearer.

Ultimately, explainable models increase banking ROI from AI projects by enabling sustainable scaling, reducing operational friction, and building trust with regulators and customers alike.

How Explainable AI (XAI) Improves ROI in Banking

As banks expand their AI in finance projects, moving from black-box models to explainable AI (XAI) becomes essential. Unlike opaque systems, XAI shows clearly why a model makes a decision.


This transparency is necessary to improve the ROI of AI in banking.

Building Trust and Better Performance

Explainable AI helps build trust across the bank. Compliance teams can see why a loan was approved or rejected. This reduces doubts and allows faster decision-making. In financial terms, this improves AI investment benefits in finance by reducing delays and unnecessary steps.

For example, AI fraud detection in banking works better when XAI explains why a transaction is flagged. Investigators spend less time trying to understand the model, reducing fraud losses and increasing both cost savings with AI in banking and confidence in automated systems.
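As a rough illustration of what "explaining why a transaction is flagged" can look like, the sketch below scores a transaction with a small logistic model and lists the features that pushed the score up the most. The feature names, weights, and bias are invented for this example. For a linear model like this one, the per-feature contribution on the log-odds scale is exact; more complex models usually need a dedicated attribution method such as SHAP.

```python
import math

# Hypothetical weights for a logistic fraud model; a real model would learn these.
WEIGHTS = {
    "amount_vs_typical": 1.2,   # how many times larger than the customer's usual spend
    "new_device": 0.9,          # 1.0 if the device has not been seen before
    "foreign_merchant": 0.6,    # 1.0 if the merchant is outside the home country
    "account_age_years": -0.3,  # older accounts lower the score
}
BIAS = -3.0


def fraud_score(features: dict) -> float:
    """Probability-like fraud score from a logistic model."""
    z = BIAS + sum(WEIGHTS[name] * features[name] for name in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-z))


def top_reasons(features: dict, k: int = 2) -> list:
    """Return up to k features with the largest positive log-odds contribution."""
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    ranked = sorted(contributions.items(), key=lambda kv: kv[1], reverse=True)
    return [(name, round(value, 2)) for name, value in ranked[:k] if value > 0]


txn = {
    "amount_vs_typical": 3.0,
    "new_device": 1.0,
    "foreign_merchant": 1.0,
    "account_age_years": 2.0,
}

print(f"score: {fraud_score(txn):.2f}")
for name, contribution in top_reasons(txn):
    print(f"reason: {name} (+{contribution} log-odds)")
```

An investigator who sees "amount far above typical" and "new device" next to the score can start the review from those two facts instead of reverse-engineering the model.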

Regulatory Compliance and Reducing Risk

Banks must follow strict rules. XAI helps by creating a clear record of how decisions are made. When AI for financial compliance checks transactions or credit applications, XAI ensures every step can be explained to regulators. This lowers the chance of fines and protects the bank’s reputation, which in turn protects the return on its AI investment.

XAI also supports AI risk management in finance. By making model decisions easy to understand, banks can find mistakes, correct biases, and make sure predictions follow policies. This not only keeps regulators happy but also makes operations more efficient and profitable.

Operational Efficiency and ROI

Using XAI allows banks to expand AI banking transformation projects. Explainable outputs reduce the need for human checking. Processes like credit scoring, loan approvals, or responding to RFPs become faster and more reliable. These improvements lead to real financial gains:

- Lower labor costs because fewer people need to check AI results

- Faster decisions that improve customer satisfaction and revenue

- Easier scaling of AI projects without extra problems

For example, a bank using XAI for digital lending can approve loans in hours instead of days. This increases the number of customers served and improves the results of the bank's AI-driven digital transformation.

XAI Use Cases That Show ROI

Some XAI use cases in banking with clear benefits include:

- Loan Decisions – XAI explains credit approvals, allowing faster and safer decisions

- Fraud Detection – Clear reasoning helps investigators act quickly and reduce costs

- Risk Management – XAI shows what factors affect portfolio risk, supporting better decisions

- Regulatory Reporting – Transparent AI makes audits easier and lowers compliance effort

These examples show that XAI benefits for financial services go beyond accuracy. They help banks save money, reduce risks, and make better decisions.

Why XAI Increases ROI

- Faster Decisions – Staff can trust AI outputs and act without delay

- Lower Rework Costs – Clear AI reasoning reduces manual checking, improving ROI of AI in banking

- Regulatory Safety – Decision records prevent fines and support long-term investment returns

- Customer Trust – Transparent AI builds confidence, helping retain clients and increase revenue

Banks using XAI effectively turn their AI investment in banking into measurable results, not only in savings but also in efficiency, risk management, and customer trust.

Navigating Regulation and Compliance with AI

Banks face increasing scrutiny from regulators when deploying AI in core processes. The future of AI in finance depends not only on model performance but also on how well institutions demonstrate compliance and traceability.

Aligning AI with Regulatory Expectations

Financial regulators require banks to maintain AI governance frameworks that clearly show how decisions are made. AI transparency and auditability are central to meeting these expectations.

A critical component is explainable AI in finance. When a credit approval, fraud alert, or risk score is generated, regulators expect clarity in reasoning. Lack of explanation can lead to fines, investigations, or operational delays.

Building Practical Compliance Measures

AI compliance solutions now focus on embedding transparency into workflows. For example, AI audit trails capture inputs, outputs, assumptions, and human approvals, allowing banks to answer questions from auditors and supervisors quickly. This also supports AI risk management, ensuring models remain aligned with regulatory requirements over time.
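One possible shape for such an audit trail entry is sketched below in Python. The `AuditRecord` class, its field names, and the sample values are assumptions made for illustration, not a regulatory standard or a specific vendor format. The record stores inputs, output, assumptions, and human approvals, and derives a SHA-256 fingerprint so that later changes to a stored record can be detected.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class AuditRecord:
    """Hypothetical audit trail entry for one model decision."""
    decision_id: str
    model_version: str
    inputs: dict
    output: dict
    assumptions: list
    human_approvals: list = field(default_factory=list)
    recorded_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        # Sorted keys give a stable serialization for hashing and storage.
        return json.dumps(asdict(self), sort_keys=True)

    def fingerprint(self) -> str:
        """SHA-256 of the serialized record, so later edits can be detected."""
        return hashlib.sha256(self.to_json().encode("utf-8")).hexdigest()


# Sample values are invented for the example.
record = AuditRecord(
    decision_id="loan-0001",
    model_version="credit-risk-1.4.0",
    inputs={"income": 52000, "debt_to_income": 0.31},
    output={"decision": "declined", "score": 0.42},
    assumptions=["income verified from payroll data"],
)

before = record.fingerprint()
record.human_approvals.append({"reviewer": "analyst-17", "action": "confirmed"})
after = record.fingerprint()

print(record.to_json())
print("fingerprint changed after approval:", before != after)
```

Storing the fingerprint alongside each version of the record is one simple way to show an auditor that the inputs and the human sign-off were captured at decision time and not reconstructed afterwards.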

Integrating compliance into operations requires collaboration. Data teams, risk managers, and compliance officers must work together to maintain clear AI model governance, ensuring that the future of AI in finance is not just innovative but also defensible.

Long-Term ROI Strategies and the Future of Explainable AI in Banking

As banks move beyond early AI experiments, the focus shifts to long-term ROI of AI in banking. Sustainable value comes not just from deploying AI in finance but from governing, monitoring, and scaling it in ways that regulators, customers, and teams trust.

Explainable AI (XAI) bridges performance and accountability. It supports four kinds of return:

- Financial returns: Faster approvals, better pricing, and reduced fraud.

- Operational returns: Less time on manual reviews and compliance reporting.

- Risk-adjusted returns: Lower fines and reputational risk due to explainability.

- Capability returns: Institutional knowledge as teams learn to govern AI models.

XAI enhances these ROI areas by making decisions transparent. For example, explainable credit risk models reduce appeals, improve compliance, and lower operational costs.

Strategic Use Cases with Measurable Impact

- Digital Lending and Underwriting: Explainable models reduce default review times and increase loan throughput, which saves costs and improves customer experience.

- Fraud Detection: XAI flags suspicious transactions with clear reasoning, speeding investigations and reducing fraud losses.

- Compliance and Audit: Traceable decisions let regulators follow the reasoning, cut audit response times, and strengthen AI for financial compliance.

Explainability Strengthens Governance

XAI improves governance by connecting model inputs, logic, and outputs. Clear AI outputs support certification, audits, and faster scaling, boosting banking ROI from AI projects.

Future Trends

- Regulatory demands: Auditable, fair AI models are increasingly required worldwide.

- Customer transparency: Explainable decisions build trust and retention.

- Ethical AI: Ensures fairness and protects brand reputation.

Principles for Long-Term ROI with XAI

- Track all model inputs and outputs for transparency.

- Embed explainability throughout the model lifecycle.

- Measure success beyond accuracy, including reduced reviews, audit times, and disputes.

- Integrate XAI into governance and risk management.

By applying these principles, banks can turn AI investment in banking into sustained, measurable value.

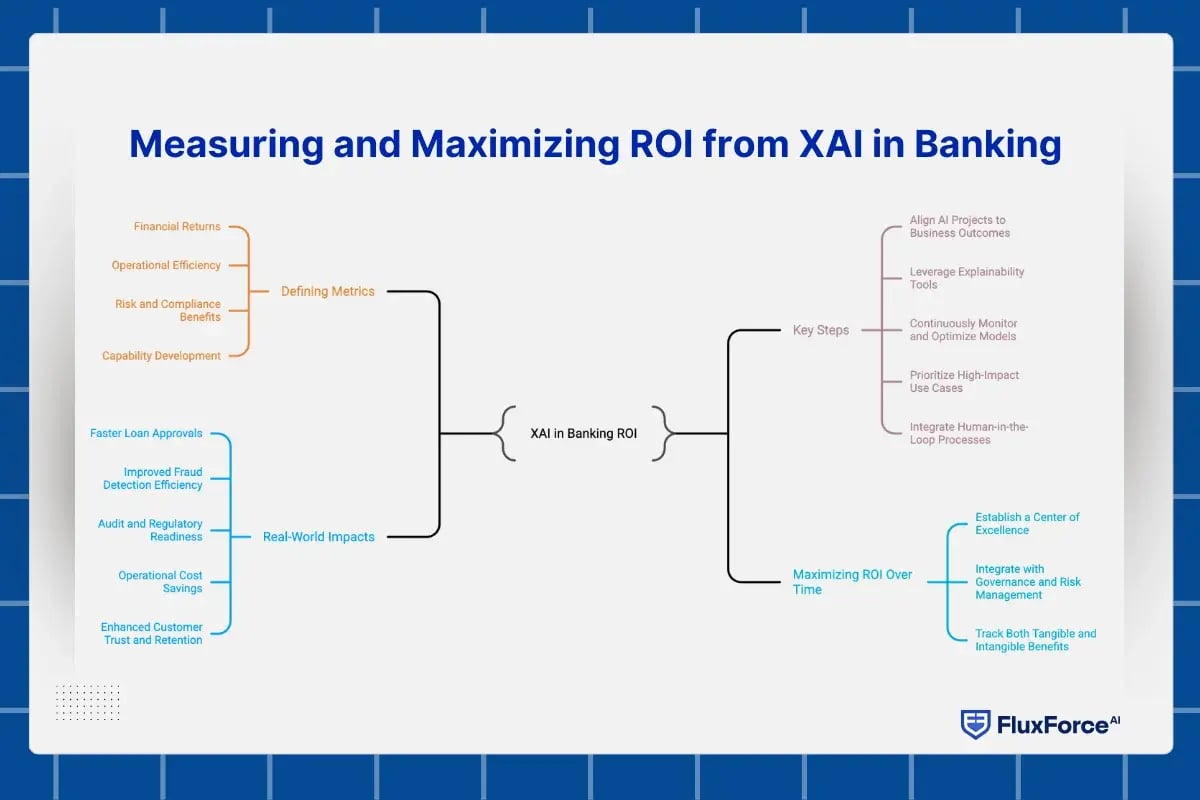

Measuring and Maximizing ROI from XAI in Banking

As banks increasingly invest in AI in finance, it is no longer enough to simply deploy models and hope for improved efficiency. To capture true value, institutions need a structured approach to measuring the ROI of AI in banking, and explainable AI (XAI) is central to this process.

Unlike traditional black-box models, XAI provides transparency, traceability, and accountability, which directly impacts operational performance, regulatory compliance, and financial outcomes.

Defining Metrics for ROI in Banking with XAI

ROI in AI goes beyond cost savings or revenue increases. Banks need to adopt a multi-dimensional view that captures both tangible and intangible benefits. Some key areas to focus on include:

- Financial Returns: Explainable models improve credit decisioning, dynamic pricing, and fraud prevention. Faster approvals, better pricing accuracy, and lower fraud losses directly boost revenue and profitability. For example, a retail bank using XAI in digital lending can approve more loans per day without increasing operational costs.

- Operational Efficiency: XAI reduces manual intervention by clarifying model outputs. This leads to faster processing of loan applications, regulatory reporting, and fraud investigations. When human teams can trust AI outputs, labor resources are freed to focus on higher-value tasks.

- Risk and Compliance Benefits: Explainable AI ensures that every decision can be justified to auditors and regulators. Transparent credit scoring or anti-money laundering models minimize the risk of fines, regulatory sanctions, and reputational damage. This risk-adjusted ROI is often overlooked in traditional ROI calculations.

- Capability Development: XAI contributes to institutional knowledge. Teams learn to interpret model outputs, monitor performance, and manage AI systems effectively. Over time, this capability strengthens the organization’s ability to scale AI responsibly, delivering long-term strategic value.

By tracking these metrics, banks can ensure that AI investments deliver measurable returns across financial, operational, and strategic dimensions.
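A simple way to start tracking such metrics is a before-and-after scorecard. The Python sketch below compares a baseline period with a period after explainability was introduced. The metric names and all values are hypothetical placeholders, and every metric here is one where lower is better.

```python
def percent_change(baseline: float, current: float) -> float:
    """Relative change from baseline to current, in percent."""
    return (current - baseline) / baseline * 100.0


def scorecard(baseline: dict, current: dict) -> dict:
    """Percent change per metric, rounded to one decimal place."""
    return {
        name: round(percent_change(baseline[name], current[name]), 1)
        for name in baseline
    }


# Invented figures for illustration; replace with measured values.
baseline_period = {
    "avg_manual_review_minutes": 40.0,
    "audit_response_days": 10.0,
    "disputes_per_1000_decisions": 8.0,
}
current_period = {
    "avg_manual_review_minutes": 28.0,
    "audit_response_days": 5.0,
    "disputes_per_1000_decisions": 6.0,
}

for metric, change in scorecard(baseline_period, current_period).items():
    print(f"{metric}: {change:+.1f}%")
```

Reporting these operational changes next to model accuracy gives boards a fuller picture of where the return is actually coming from.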

Key Steps to Maximize ROI with XAI

To translate explainable AI into tangible business value, banks should follow a structured approach:

1. Align AI Projects to Business Outcomes:

Every AI initiative should have clearly defined goals. Fraud detection models should focus on reducing investigation time and preventing losses. Digital lending models should aim to increase approval throughput while maintaining credit quality. Clear alignment ensures ROI is measurable and relevant.

2. Leverage Explainability Tools:

XAI frameworks provide detailed insights into model decisions. By connecting inputs, logic, and outputs, banks can monitor performance in real time, identify errors, and produce audit-ready explanations. Transparency reduces disputes, accelerates decision-making, and strengthens regulatory confidence.

3. Continuously Monitor and Optimize Models:

ROI is not static. Banks must track model performance, operational metrics, and decision outcomes to identify areas for improvement. Regular model retraining, feature validation, and scenario testing ensure sustained returns and prevent performance drift.

4. Prioritize High-Impact Use Cases:

Not all AI projects deliver the same value. Banks should focus on areas where XAI can yield immediate, measurable benefits. Key high-impact use cases include digital lending, fraud detection, portfolio risk management, and regulatory reporting. This prioritization ensures that investments are targeted for maximum ROI.

5. Integrate Human-in-the-Loop Processes:

While AI can automate decisions, expert oversight is critical. Combining explainable models with human judgment ensures that decisions are accurate, ethical, and defensible. This balance strengthens operational reliability, mitigates risk, and improves stakeholder trust.
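Steps 3 and 5 can be sketched together. The Python example below uses the population stability index (PSI), one common check for shifts in a model's score distribution, and routes decisions to a human reviewer whenever drift is high or the model is not confident. The thresholds `DRIFT_LIMIT` and `AUTO_APPROVE_AT`, the bin counts, and the routing labels are assumptions for illustration. The 0.25 PSI cut-off is a widely used rule of thumb, not a regulatory requirement.

```python
import math

DRIFT_LIMIT = 0.25      # assumed PSI level above which automation pauses
AUTO_APPROVE_AT = 0.90  # assumed confidence needed to skip human review


def psi(baseline_counts, recent_counts, eps=1e-6):
    """Population stability index between two binned score distributions."""
    base_total = sum(baseline_counts)
    recent_total = sum(recent_counts)
    value = 0.0
    for b, r in zip(baseline_counts, recent_counts):
        p = max(b / base_total, eps)    # baseline share of this bin
        q = max(r / recent_total, eps)  # recent share of this bin
        value += (q - p) * math.log(q / p)
    return value


def route(approve_probability, drift):
    """Decide whether a model decision can be automated or needs a person."""
    if drift > DRIFT_LIMIT:
        return "human_review"  # score distribution has shifted; do not automate
    if approve_probability >= AUTO_APPROVE_AT:
        return "auto_approve"
    return "human_review"      # borderline cases and all declines get a reviewer


# Hypothetical counts of applications per score bin.
training_bins = [100, 300, 400, 200]
stable_month = [50, 250, 400, 300]
shifted_month = [400, 300, 200, 100]

stable_drift = psi(training_bins, stable_month)
shifted_drift = psi(training_bins, shifted_month)

print(f"stable month PSI:  {stable_drift:.3f} -> {route(0.95, stable_drift)}")
print(f"shifted month PSI: {shifted_drift:.3f} -> {route(0.95, shifted_drift)}")
```

In this design, declines are never automated and a drifting model hands everything to reviewers until it is revalidated. That is a conservative choice that a bank would tune to its own risk appetite.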

Real-World Impacts of XAI on Banking ROI

Practical implementations of XAI demonstrate significant improvements across multiple dimensions:

- Faster Loan Approvals: Retail banks using explainable credit models have reduced approval times from days to hours. Faster processing increases the number of customers served and drives higher revenue without increasing staffing costs.

- Improved Fraud Detection Efficiency: XAI-enabled fraud models flag suspicious activity with clear reasoning, allowing analysts to act decisively. Investigations become 30 to 50 percent faster, operational costs drop, and fraud losses are reduced.

- Audit and Regulatory Readiness: Banks implementing XAI in compliance reporting have halved the time required to respond to audits. Each decision is traceable, reducing regulatory risk and reinforcing trust with authorities.

- Operational Cost Savings: By minimizing manual interventions, explainable AI frees up human resources for value-added tasks. This operational efficiency translates directly into cost reductions and improved ROI of AI in banking.

- Enhanced Customer Trust and Retention: Transparent AI builds confidence among clients. When customers understand why a loan or credit decision was made, they are more likely to trust the bank, improving loyalty and lifetime value.

Maximizing ROI Over Time

Measuring ROI is only the first step. To maximize returns, banks must embed XAI into organizational processes and culture:

- Establish a Center of Excellence (CoE): A dedicated team for XAI governance ensures consistent standards, continuous monitoring, and integration with risk frameworks.

- Integrate with Governance and Risk Management: XAI outputs should feed into compliance dashboards, audit logs, and risk reports. This ensures that explainability strengthens both operational efficiency and regulatory compliance.

- Track Both Tangible and Intangible Benefits: While financial gains are straightforward to quantify, benefits such as increased trust, faster decision-making, and enhanced institutional capability should also be measured and reported.

By systematically measuring, monitoring, and scaling explainable AI initiatives, banks transform AI from a technology experiment into a sustainable, high-impact investment. XAI is not only a tool for transparency. It is a strategic lever that maximizes ROI, reduces risk, and positions financial institutions for long-term success in the AI-driven era.

XAI boosts ROI for AI investments in banking by enhancing transparency, trust, and decision-making.

Conclusion

AI in finance offers tremendous potential, but its value depends on more than just accurate predictions. Banks often face hidden costs, delays, and compliance challenges when using traditional black-box models, which can reduce the real ROI of AI in banking. Explainable AI (XAI) solves this problem by clearly showing why decisions are made, making models transparent, auditable, and easier to trust. With XAI, banks can improve financial performance through faster loan approvals, better pricing, and reduced fraud, while operational efficiency increases as fewer manual checks are needed. Beyond immediate gains, XAI allows teams to understand, govern, and scale AI responsibly, ensuring sustainable benefits. By adopting explainable AI, banks can turn AI investment in banking into measurable results, combining smarter automation with accountability, reducing risk, and creating long-term growth and profitability.
