
Introduction

Boards are accountable for an organization’s financial results, regulatory standing, and reputation. AI now makes decisions in almost every high-impact business process, from loan approvals to fraud detection, and those decisions bear directly on all three.

Intelligent automation does help streamline business processes, but decisions made without transparency carry real consequences. Emerging regulatory and governance standards expect that any system influencing business decisions can be explained to company leaders. Model explainability allows boards to understand and manage the risks of “black box” AI systems, ensuring decisions align with company policies and support responsible AI practices.

This article explains why model explainability must be a core part of AI governance and treated as a board-level requirement.

How Black-Box Decisions Translate Into Financial and Reputational Damage

Black-box AI systems create exposure across multiple dimensions of enterprise risk.

When boards cannot explain automated decisions, they lack necessary control mechanisms and face:

- Unexpected Financial Losses: Opaque algorithms can make incorrect risk assessments that accumulate into material losses. In October 2024, the Consumer Financial Protection Bureau fined Goldman Sachs $45 million over Apple Card failures, including mishandled transaction disputes. Without transparency, organizations cannot identify flawed logic until losses materialize.

- Customer Retention Challenges: When legitimate customers are blocked without clear explanation, trust erodes rapidly. AI compliance failures create friction in customer relationships that can take years to rebuild, particularly when staff cannot provide coherent justification.

- Erosion of Market Trust: Public perception of algorithmic fairness directly impacts brand value. The 2019 Apple Card controversy, where users reported apparent gender bias, triggered widespread scrutiny. Market confidence depends on demonstrated AI decision transparency throughout the organization.

- Audit and Control Failures: Internal audit functions cannot review processes they cannot understand. Model risk management frameworks require documentation of system operations, but black-box models prevent auditors from validating controls.

- Regulatory Scrutiny and Penalties: Regulators in multiple jurisdictions now explicitly expect explainability. The EU AI Act requires high-risk AI systems to be transparent enough for deployers to interpret their outputs. Fines under the Act reach €35 million or 7% of global annual turnover for prohibited AI practices, and €15 million or 3% for breaches of most other obligations, including those covering high-risk systems.

- Legal and Liability Risks: Courts and regulators do not accept "the algorithm decided" as a legal defence. Organizations must demonstrate that proper controls existed and that outcomes can be justified through documented logic, not just statistical accuracy.

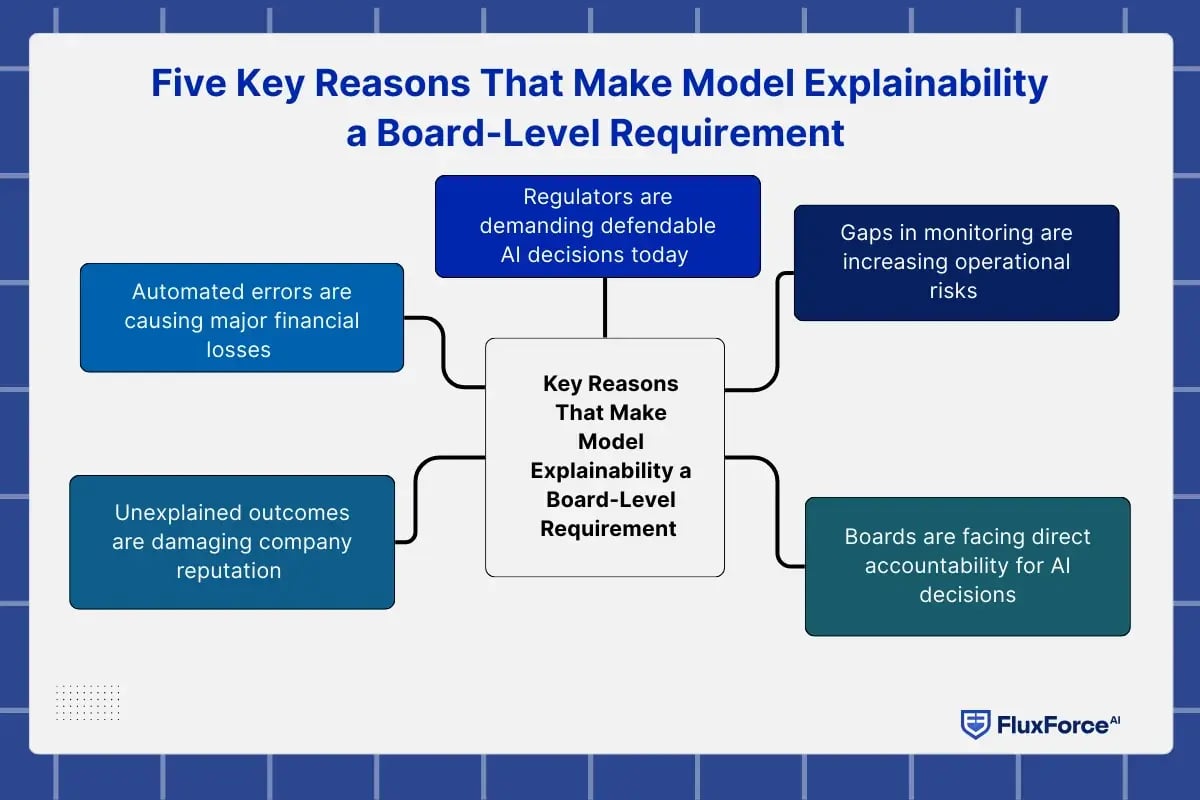

Five Key Reasons That Make Model Explainability a Board-Level Requirement

1. Regulators are demanding defendable AI decisions today

Multiple regulatory bodies have established explicit requirements for AI accountability and model transparency:

- European Union: The EU AI Act, which entered into force in August 2024 with most high-risk obligations phasing in from 2026, requires high-risk AI systems to be designed so that their outputs can be interpreted. Article 86 gives individuals the right to a clear and meaningful explanation when a decision based on a high-risk AI system significantly affects their health, safety, or fundamental rights.

- United States: Since 2011, the Federal Reserve and OCC have set model risk management expectations through SR 11-7, which the FDIC adopted in 2017. This guidance requires banks to ensure that models are conceptually sound and independently validated. Additionally, the Consumer Financial Protection Bureau emphasizes that consumer protection laws apply fully, with no technological exceptions.

- Canada: OSFI's revised Guideline E-23 defines enterprise-wide model risk management expectations, including expectations on explainability for AI and machine learning models.

2. Automated errors are causing major financial losses

Model failures create direct financial exposure that boards must account for, and the threat environment is intensifying. Research from Deloitte projects that generative AI could drive U.S. fraud losses from $12.3 billion in 2023 to $40 billion by 2027. Fraud-detection models must keep pace with that volume, operating at a speed and scale that human reviewers cannot check case by case.

When models make incorrect risk assessments in lending, trading, or fraud detection, errors can accumulate to material levels before patterns emerge. Without model explainability, organizations cannot conduct root cause analysis. The more than $89 million in combined penalties and consumer redress ordered against Apple and Goldman Sachs in 2024 shows that costs extend far beyond the immediate fines, to legal defence, system remediation, and lost revenue during product freezes.

3. Unexplained outcomes are damaging company reputation

Reputation risk from AI governance failures extends beyond regulatory penalties. When customers experience decisions they perceive as unfair or inexplicable, public trust deteriorates rapidly. The 2019 Apple Card controversy triggered widespread scrutiny even before regulatory action, demonstrating how perception alone damages brand value.

Financial institutions face vulnerability because business models depend on customer confidence. If a high-value client is blocked from a legitimate transaction without coherent explanation, they will likely move their business elsewhere. Model explainability provides the foundation for customer service teams to address concerns with specific, defensible rationales.
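To make "specific, defensible rationales" more concrete, here is a minimal sketch of how reason codes can be derived from a simple linear scoring model: each input's contribution to the score is measured against a reference applicant, and the inputs that lowered the score most become the plain-language explanation. The feature names, weights, baseline values, and reason texts are all hypothetical, chosen only for illustration; they are not taken from any real lender's model or from a regulatory reason-code list.

```python
# Minimal sketch: reason codes from a hypothetical linear credit-scoring model.
# All feature names, weights, and baseline values are illustrative assumptions.

WEIGHTS = {
    "debt_to_income": -4.0,
    "missed_payments_12m": -0.8,
    "years_of_credit_history": 0.15,
    "utilization_ratio": -2.5,
}

# Hypothetical reference applicant against which contributions are measured.
BASELINE = {
    "debt_to_income": 0.30,
    "missed_payments_12m": 0.0,
    "years_of_credit_history": 8.0,
    "utilization_ratio": 0.30,
}

# Plain-language text a service team could give the customer for each input.
REASON_TEXT = {
    "debt_to_income": "Debt is high relative to income",
    "missed_payments_12m": "Recent missed payments",
    "years_of_credit_history": "Limited length of credit history",
    "utilization_ratio": "High use of available credit",
}


def contributions(applicant: dict[str, float]) -> dict[str, float]:
    """Each feature's effect on the score relative to the baseline applicant."""
    return {f: w * (applicant[f] - BASELINE[f]) for f, w in WEIGHTS.items()}


def reason_codes(applicant: dict[str, float], top_n: int = 2) -> list[str]:
    """Plain-language reasons for the features that lowered the score most."""
    negative = [(f, c) for f, c in contributions(applicant).items() if c < 0]
    negative.sort(key=lambda item: item[1])  # most negative contribution first
    return [REASON_TEXT[f] for f, _ in negative[:top_n]]


applicant = {
    "debt_to_income": 0.55,
    "missed_payments_12m": 2,
    "years_of_credit_history": 3,
    "utilization_ratio": 0.40,
}
print(reason_codes(applicant))
# ['Recent missed payments', 'Debt is high relative to income']
```

Because every reason maps to a named input and a measurable contribution, a service agent can tell the customer which factors drove the outcome, and an auditor can reproduce the same ranking from the logged inputs. Non-linear models usually need a dedicated attribution method (SHAP is a common choice) to produce comparable contributions; this sketch does not use one.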

4. Gaps in monitoring are increasing operational risks

AI risk management requires continuous oversight that black-box systems make impossible. Models can drift as data distributions change, introducing biases or errors that accumulate silently. Without interpretable outputs, risk managers cannot detect when model behaviour deviates from expectations.

The Federal Reserve's model validation framework emphasizes effective challenge as essential to model risk management. This requires independent reviewers to assess whether models are properly specified. When models lack transparency, validators cannot perform this function. Canada's OSFI expects institutions to perform monitoring that includes detection of model drift and unwanted bias, tasks that demand explainability.
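One widely used way to put a number on the distribution drift described above is the Population Stability Index (PSI), which compares how a model input or score is distributed in a baseline period versus a recent period. The sketch below is a minimal illustration, assuming hypothetical score bands as cut points, a small floor (`eps`) for empty bins, and the commonly quoted rule-of-thumb thresholds of 0.1 and 0.25; none of these values come from SR 11-7 or OSFI E-23, and each institution would calibrate its own.

```python
import bisect
import math


def bucket_shares(values: list[float], cut_points: list[float], eps: float = 1e-6) -> list[float]:
    """Share of values falling in each bin defined by sorted interior cut points.

    Values below the first cut point land in bin 0 and values at or above the
    last cut point land in the final bin, so nothing is dropped. Empty bins are
    floored at eps so the log term in PSI stays finite.
    """
    counts = [0] * (len(cut_points) + 1)
    for v in values:
        counts[bisect.bisect_right(cut_points, v)] += 1
    return [max(c / len(values), eps) for c in counts]


def psi(baseline: list[float], recent: list[float], cut_points: list[float]) -> float:
    """Population Stability Index of the recent sample against the baseline."""
    expected = bucket_shares(baseline, cut_points)
    actual = bucket_shares(recent, cut_points)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


def drift_status(value: float, warn: float = 0.1, alert: float = 0.25) -> str:
    """Map a PSI value to a label using rule-of-thumb thresholds (assumed)."""
    if value >= alert:
        return "significant drift"
    if value >= warn:
        return "moderate drift"
    return "stable"


cut_points = [0.2, 0.4, 0.6, 0.8]            # hypothetical score bands
baseline = [0.1, 0.3, 0.5, 0.7, 0.9] * 20    # scores spread evenly across bands
recent = [0.7, 0.9] * 50                     # scores concentrated in the top bands
score = psi(baseline, recent, cut_points)
print(round(score, 2), drift_status(score))
```

A rising PSI tells risk managers that the model is now scoring a different population from the one it was validated on. It does not say why decisions changed; explainability is what lets validators check whether the shift altered which factors drive outcomes.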

5. Boards are facing direct accountability for AI decisions

Corporate governance increasingly holds directors personally accountable for AI oversight. When automated systems cause harm, regulators and courts look to the board to demonstrate that appropriate governance structures existed.

This accountability cannot be delegated entirely to technical teams. Boards must understand how AI systems make decisions affecting strategic objectives, financial performance, and regulatory compliance. Model explainability translates technical processes into executive-level insights that enable informed governance. Without this capability, boards cannot fulfil their fiduciary duties regarding AI risk.

What Happens When Boards Ignore This?

Organizations that deploy AI without establishing explainability face predictable consequences across operational, financial, and reputational dimensions.

When explainability is missing:

1. Wrong customers get blocked: Legitimate transactions trigger false positives that opaque systems cannot justify. High-value clients experience service denials that front-line staff cannot explain, creating immediate dissatisfaction and long-term relationship damage that competitors quickly exploit.

2. Good customers leave: Once trust breaks, customers rarely return. When individuals cannot understand why they were declined or flagged, they assume the worst about the organization's competence and fairness. Migration to competitors accelerates, particularly among the most profitable customer segments who have options.

3. Regulators step in: Unexplained algorithmic decisions attract regulatory attention rapidly. Examiners expect institutions to demonstrate how AI governance frameworks ensure fair, compliant outcomes. When organizations cannot provide clear model documentation and validation evidence, enforcement actions follow with financial penalties and operational restrictions.

4. Lawsuits increase: Legal claims based on algorithmic discrimination or unfair treatment gain traction when defendants cannot explain their systems' logic. U.S. regulators have made clear that relying on complex or opaque algorithms does not excuse a lender from fair lending obligations, such as giving specific reasons for a credit denial, and discriminatory outcomes can create liability regardless of intent.

5. News headlines damage trust: Public coverage of AI failures creates lasting brand harm. Stories about biased algorithms or inexplicable denials spread quickly through media and social channels, affecting not just the implicated organization but industry-wide confidence in automated decision-making and AI model validation processes.

What Effective AI Governance Looks Like at Board Level

1. Establish clear ownership of AI risk management

Boards should designate specific executive accountability for AI governance, ensuring that responsible AI frameworks include model explainability as a core requirement. In practice, this means giving a senior executive, such as the chief risk officer, a direct reporting line to the board on AI oversight, with regular reports on model performance, validation results, and AI compliance status.

2. Require explainability standards in model development

AI governance frameworks must specify explainability requirements before models enter production. This includes documentation standards for technical teams, validation procedures that assess whether explanations are adequate, and approval processes that verify explainable AI capabilities exist before deployment.

3. Implement continuous monitoring and review processes

Effective AI risk management requires ongoing oversight, not one-time approvals. Boards should ensure organizations maintain monitoring systems that detect model drift, performance degradation, and unexpected outcomes. Regular validation cycles must confirm models continue operating as intended.

4. Build organizational capability to explain AI to stakeholders

Explainability benefits are realized only when organizations can communicate model logic to diverse audiences. This means training customer service teams to explain AI decisions, equipping compliance staff to demonstrate model transparency to regulators, and enabling executives to discuss AI oversight with investors. Boards should verify these capabilities exist throughout the organization.


Conclusion

When an automated system blocks a high-value transaction or denies a legitimate customer, the board is ultimately accountable for that outcome. Regulators, auditors, and even courts will not accept “the model decided” as a justification. They expect a clear explanation of what factors influenced the decision and whether proper controls were in place.

Without that visibility, financial institutions are exposed not only to regulatory penalties, but also to reputational damage that no accuracy metric can offset.

If AI is making business decisions, leadership must be able to explain and defend those decisions. Otherwise, the company is taking risks blindly.
