
Introduction

When an AI system changes a supplier’s risk rating or flags a shipment investigation, the immediate question is not about accuracy. It is about accountability.

Why was this supplier flagged?

Which signals drove the decision?

Can we defend this during an audit or regulatory review?

These questions now sit at the center of discussions about AI in supply chain security. Automated decisions increasingly influence supplier onboarding, routing, and escalation. In global supply chain security, those decisions carry regulatory, financial, and reputational consequences.

Most organizations already rely on supply chain analytics for supply chain risk management. The issue is not detection. It is accountability. Risk scores appear. Threat alerts trigger. But without AI transparency, teams struggle to justify actions tied to supply chain risk assessment and supply chain security controls.

This gap matters more now than ever. Over the last two years, regulators and auditors have made explainability of AI models a clear expectation in compliance reviews and audits. In supply chain compliance management, opaque AI decisions are increasingly treated as control weaknesses, especially where cybersecurity, fraud detection, or supplier eligibility is involved.

Explainability turns AI outputs into defensible decisions. It restores supply chain visibility, supports human oversight, and allows leaders to own outcomes with confidence. Without it, AI in the supply chain accelerates decisions but undermines trust. With it, AI becomes accountable, governable, and usable at scale.

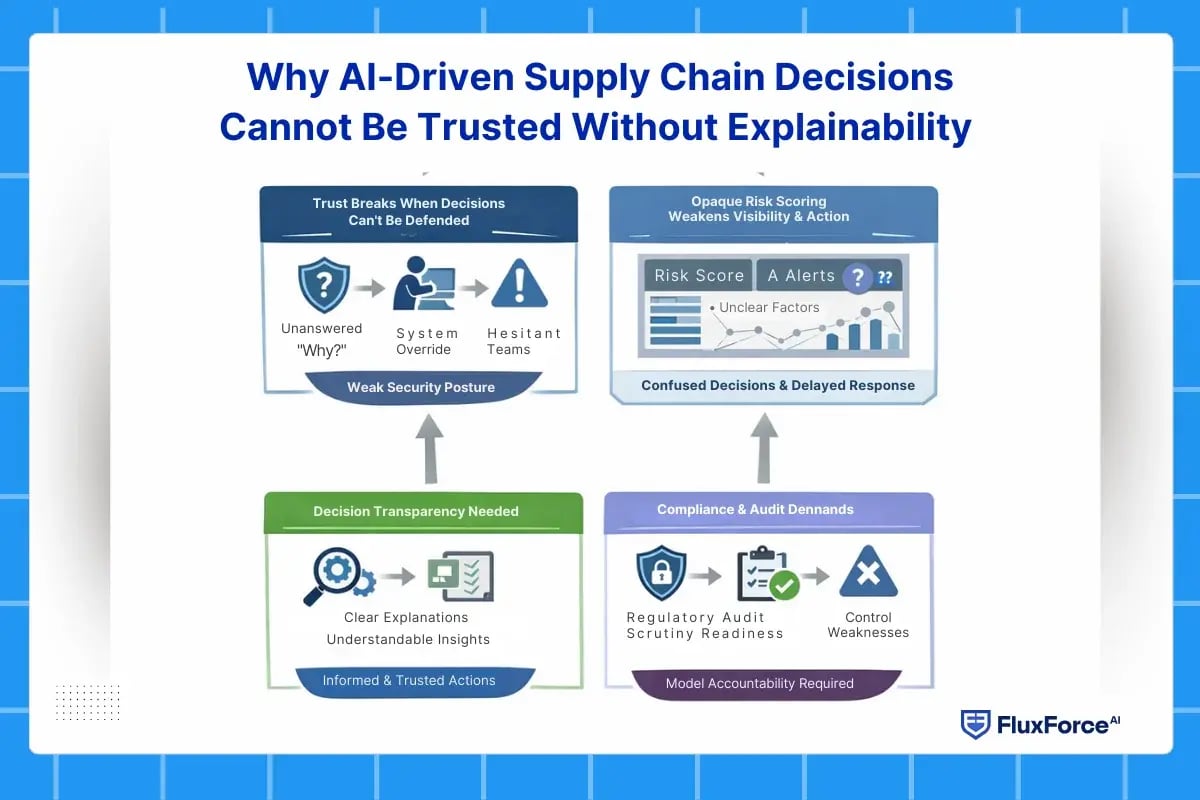

Why AI-Driven Supply Chain Decisions Cannot Be Trusted Without Explainability

AI now sits directly in the decision path for supplier approval, shipment release, and threat escalation. These are no longer advisory insights. They are operational triggers. When AI in the supply chain drives action without explanation, trust becomes the first casualty.

Explainability is what determines whether AI-driven decisions are accepted, challenged, or ignored. Without it, even accurate models struggle to influence real outcomes in supply chain security.

This puts pressure on supply chain risk management teams. Compliance functions must balance local operating realities with global standards, and country-level risk ratings often oversimplify exposure and fail to reflect partner-specific behavior.

Trust breaks when decisions cannot be defended

In supply chain security, trust is practical. It shows up when a risk team accepts an automated score. When procurement stands by a supplier downgrade. When security escalates an alert generated by supply chain threat detection.

That trust disappears the moment someone asks why and no one can answer.

As AI systems shape more supply chain risk management decisions, leaders are expected to justify the outcomes. Without explainability, teams either hesitate or override the system. Both weaken security posture.

Opaque risk scoring weakens visibility and action

Most organizations already have supply chain analytics and supply chain intelligence in place, with dashboards showing alerts and scores in near real time. What is missing is understanding.

Without AI transparency, supply chain visibility becomes superficial. Leaders see elevated risk but cannot identify which factors drove the change. Financial exposure, geopolitical signals, cybersecurity events, and data quality issues blur together. Action stalls.

Explainability clarifies which signals mattered during supply chain risk assessment and why thresholds were crossed.

Decision transparency is now a compliance expectation

Over the last two years, audit and regulatory reviews have tightened. If AI influenced a supplier decision, a security escalation, or a fraud response, reviewers now expect the reasoning behind that output to be explainable.

In supply chain compliance management, unexplained AI outputs are increasingly treated as control weaknesses. This is especially visible in supply chain cybersecurity, sanctions exposure, and supplier eligibility decisions.

Explainability enables ownership, not hesitation

Explainable AI does not slow response. It enables ownership. Risk and security leaders can understand, challenge, and stand behind outcomes.

Without explainability, AI in the supply chain accelerates decisions but erodes trust. With it, AI becomes a dependable component of supply chain security, not an opaque system no one wants to defend.

Where Explainability Directly Improves Risk Assessment, Visibility, and Security

Explainability matters most at the exact points where AI outputs turn into action: not in dashboards, but in decisions that change supplier status, trigger investigations, or escalate security incidents. This is where AI in the supply chain either strengthens control or quietly undermines it.

Explainable risk scoring improves supplier risk assessment

Supplier risk assessment is one of the most sensitive uses of AI. Scores influence onboarding, contract renewals, and audit scope. Without explainable AI for supplier risk assessment, teams struggle to justify why a supplier’s risk profile changed.

Explainability shows which inputs mattered. Financial stress signals. Geographic exposure. Cyber indicators. Data freshness. That clarity allows supply chain risk management teams to validate assumptions, challenge outliers, and document decisions in a way auditors can follow.
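A minimal sketch of what "showing which inputs mattered" can look like, assuming a simple additive (linear) risk model over signals normalized to the range 0 to 1. The factor names and weights are hypothetical and only illustrate the idea; production scoring models are usually more complex and may need dedicated attribution methods rather than this exact decomposition.

```python
from dataclasses import dataclass

# Hypothetical weights for an illustrative additive supplier risk model.
# Each signal is assumed to be normalized to the range [0, 1].
WEIGHTS: dict[str, float] = {
    "financial_stress": 0.35,
    "geographic_exposure": 0.25,
    "cyber_indicators": 0.30,
    "data_staleness": 0.10,
}


@dataclass
class RiskExplanation:
    score: float
    contributions: dict[str, float]

    def top_drivers(self, n: int = 3) -> list[tuple[str, float]]:
        """Return the n factors with the largest contribution, largest first."""
        ranked = sorted(self.contributions.items(), key=lambda kv: kv[1], reverse=True)
        return ranked[:n]


def score_supplier(signals: dict[str, float]) -> RiskExplanation:
    """Score a supplier and keep each factor's contribution alongside the total."""
    missing = WEIGHTS.keys() - signals.keys()
    if missing:
        # Refuse to score silently on incomplete data; the gap itself is reportable.
        raise ValueError(f"missing signals: {sorted(missing)}")
    contributions = {name: w * signals[name] for name, w in WEIGHTS.items()}
    return RiskExplanation(score=sum(contributions.values()), contributions=contributions)


if __name__ == "__main__":
    result = score_supplier({
        "financial_stress": 0.9,
        "geographic_exposure": 0.2,
        "cyber_indicators": 0.6,
        "data_staleness": 0.5,
    })
    print(f"score={result.score:.3f}")
    for name, value in result.top_drivers():
        print(f"  {name}: {value:.3f}")
```

Because the score is a plain sum, the contributions always add up to the total, which is what makes this kind of explanation straightforward to document for an auditor. Non-linear models lose that property, which is one reason attribution gets harder as models get more complex.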

Visibility becomes actionable when drivers are clear

Many organizations claim strong supply chain visibility, yet still struggle to act. The reason is simple. Visibility without context is noise.

Explainable AI connects supply chain analytics to specific risk drivers. Leaders can see not just that risk increased, but why. That distinction matters during disruptions, sanctions screening, and cyber incidents. It turns supply chain intelligence into prioritization, not just observation.
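To illustrate seeing "not just that risk increased, but why", here is a hedged sketch that attributes the change in an additive risk score to individual drivers between two snapshots. The factor names and weights are hypothetical. For a linear model the per-factor deltas sum exactly to the total change, so the ranking of drivers is an exact decomposition rather than an approximation.

```python
# Hypothetical weights for an illustrative additive risk model (signals in [0, 1]).
WEIGHTS = {
    "financial_stress": 0.35,
    "geographic_exposure": 0.25,
    "cyber_indicators": 0.30,
    "data_staleness": 0.10,
}


def explain_change(
    before: dict[str, float],
    after: dict[str, float],
    weights: dict[str, float] = WEIGHTS,
) -> tuple[float, list[tuple[str, float]]]:
    """Attribute the change in a linear score to each factor.

    For score = sum(w_i * x_i), the change decomposes exactly as
    sum(w_i * (after_i - before_i)), so the per-factor deltas add up to the total.
    """
    deltas = {name: w * (after[name] - before[name]) for name, w in weights.items()}
    total = sum(deltas.values())
    # Largest movers first, regardless of direction.
    ranked = sorted(deltas.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return total, ranked


if __name__ == "__main__":
    last_week = {"financial_stress": 0.3, "geographic_exposure": 0.2,
                 "cyber_indicators": 0.2, "data_staleness": 0.1}
    today = {"financial_stress": 0.3, "geographic_exposure": 0.8,
             "cyber_indicators": 0.25, "data_staleness": 0.1}
    total, drivers = explain_change(last_week, today)
    print(f"risk change: {total:+.3f}")
    for name, delta in drivers:
        if delta:  # skip factors that did not move
            print(f"  {name}: {delta:+.3f}")
```

In this made-up example, the change is driven almost entirely by geographic exposure, which is the kind of prioritization signal the paragraph above describes.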

Security and threat detection require explainable escalation

In supply chain cybersecurity, automated threat detection often triggers escalation. When a system flags anomalous behavior or potential fraud, teams need to know which signals caused the alert.

Explainability supports confident escalation in supply chain threat detection and supply chain fraud detection. It helps security teams distinguish real threats from data artifacts. It also supports post-incident review and accountability when actions are questioned.
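As a hedged sketch of an alert that carries its own reasons, the function below compares current signals against a historical baseline using simple z-scores and reports which signals pushed the alert over the line. The signal names, the threshold of 3.0, and the z-score method itself are illustrative assumptions, not a recommended detector. The point is that the alert returns its contributing signals rather than a bare flag.

```python
import math
import statistics


def explain_alert(
    history: dict[str, list[float]],
    current: dict[str, float],
    z_threshold: float = 3.0,
) -> tuple[bool, list[tuple[str, float]]]:
    """Flag signals whose current value deviates strongly from their baseline.

    Returns (alert, reasons), where reasons lists (signal, z_score) pairs that
    met the threshold, most extreme first. Each history needs at least two values.
    """
    reasons: list[tuple[str, float]] = []
    for name, values in history.items():
        mean = statistics.mean(values)
        sd = statistics.stdev(values)
        if sd == 0:
            # A signal that never varied is anomalous on any change at all.
            if current[name] != mean:
                reasons.append((name, math.copysign(math.inf, current[name] - mean)))
            continue
        z = (current[name] - mean) / sd
        if abs(z) >= z_threshold:
            reasons.append((name, z))
    reasons.sort(key=lambda r: abs(r[1]), reverse=True)
    return bool(reasons), reasons


if __name__ == "__main__":
    # Hypothetical per-supplier signal history.
    baseline = {
        "invoice_amount": [100, 102, 98, 101, 99],
        "shipment_weight_kg": [500, 510, 490, 505, 495],
        "bank_detail_changes": [0, 0, 0, 0, 0],
    }
    now = {"invoice_amount": 120, "shipment_weight_kg": 503, "bank_detail_changes": 1}
    alert, reasons = explain_alert(baseline, now)
    print("ALERT" if alert else "no alert")
    for name, z in reasons:
        print(f"  {name}: z={z:.1f}")
```

Returning the reasons alongside the flag lets a reviewer see immediately whether an alert rests on a meaningful change (here, a change to bank details plus an unusual invoice) or on a data artifact in a single noisy signal.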

Across these areas, explainability is not an overlay. It is what makes AI in the supply chain usable in high-stakes environments where decisions must be justified, defended, and repeated under scrutiny.

How Explainable AI Supports Compliance, Audits, and Governance

Here’s what actually happens when an audit or regulatory review touches AI.

No one asks how advanced the model is.

They ask what decision it influenced.

If AI played a role in downgrading a supplier, blocking a shipment, or escalating a cyber event, the review starts there. The expectation is simple: show the reasoning.

In practice, this is where many programs struggle. Teams can show the outcome but not the logic. A risk score exists, but the drivers are unclear. An alert fired, but the contributing signals are buried. From a governance standpoint, that is a problem.

Explainability changes the posture of these conversations. Instead of defending the model, teams walk through the decision: which data points were used, which risk factors carried weight, and why the threshold was crossed at that moment. That is what explainability for compliance and audits means in operational terms.
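One way to make it possible to walk through a decision months later is to store, at decision time, everything needed to reconstruct it: inputs, weights, per-factor contributions, threshold, and model version. The sketch below assumes a simple additive risk score and uses a SHA-256 digest of canonical JSON as a basic integrity check. The field names, the supplier ID, and the `model_version` string are hypothetical. A bare hash only detects edits after the fact, so a real audit trail would typically also need signed or append-only storage.

```python
import hashlib
import json
import math
from datetime import datetime, timezone
from typing import Optional


def _digest(body: dict) -> str:
    """SHA-256 over canonical JSON (sorted keys, no extra whitespace)."""
    canonical = json.dumps(body, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def build_decision_record(
    supplier_id: str,
    signals: dict[str, float],
    weights: dict[str, float],
    threshold: float,
    model_version: str,
    decided_at: Optional[datetime] = None,
) -> dict:
    """Capture everything needed to replay a threshold decision later."""
    contributions = {name: w * signals[name] for name, w in weights.items()}
    score = sum(contributions.values())
    record = {
        "supplier_id": supplier_id,
        "model_version": model_version,
        "decided_at": (decided_at or datetime.now(timezone.utc)).isoformat(),
        "inputs": dict(signals),
        "weights": dict(weights),
        "contributions": contributions,
        "score": score,
        "threshold": threshold,
        "flagged": score >= threshold,
    }
    record["sha256"] = _digest(record)
    return record


def verify_record(record: dict) -> bool:
    """Recompute the digest; False means the stored record was altered."""
    body = {k: v for k, v in record.items() if k != "sha256"}
    return _digest(body) == record["sha256"]


def replay_matches(record: dict) -> bool:
    """Recompute the score from stored inputs and weights and check the outcome."""
    recomputed = sum(w * record["inputs"][k] for k, w in record["weights"].items())
    return (math.isclose(recomputed, record["score"])
            and (recomputed >= record["threshold"]) == record["flagged"])


if __name__ == "__main__":
    rec = build_decision_record(
        supplier_id="SUP-0001",          # hypothetical identifier
        signals={"financial_stress": 0.9, "cyber_indicators": 0.6},
        weights={"financial_stress": 0.5, "cyber_indicators": 0.5},
        threshold=0.7,
        model_version="risk-model-v1",   # hypothetical version label
    )
    print(json.dumps(rec, indent=2))
    print("integrity ok:", verify_record(rec), "replay ok:", replay_matches(rec))
```

Storing contributions and the threshold next to the outcome is what lets a reviewer answer "why was the threshold crossed at that moment" without rerunning a model that may have changed since.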

Over the last two years, regulatory expectations have hardened around this point. In supply chain compliance management, AI-assisted decisions are expected to be reviewable and attributable. Especially in global supply chain security, sanctions exposure, and supply chain data security, the inability to reconstruct a decision is increasingly treated as a control weakness.

Governance also breaks down without ownership. When no one can explain an AI-driven outcome, no one wants to sign off on it. Explainability restores that chain of responsibility. It allows human oversight without turning AI into a black box that everyone avoids.

This is why explainability is not an audit add-on. It is the mechanism that allows AI systems in the supply chain to operate inside real compliance, assurance, and governance frameworks without becoming a liability.

What Explainability Changes at the Decision Table

By this point, the question is not whether AI in the supply chain is powerful enough. Most organizations already rely on it for supply chain risk assessment, security monitoring, and prioritization.

What changes with explainability is how decisions are made in the room.

When risk scores and threat alerts are explainable, conversations move faster. Leaders debate judgment, not data credibility. Procurement discusses mitigation options instead of questioning the signal. Security focuses on response, not validation. This is where supply chain analytics actually support decision-making rather than slowing it down.

Without explainability, the dynamic flips. Meetings drift into model debates. Decisions get deferred. Overrides happen quietly. Supply chain visibility exists, but it does not carry authority.

Explainability shifts AI from something teams react to into something they rely on. It aligns supply chain security, governance, and accountability at the point where decisions are made, not after outcomes are reviewed.

That shift is subtle. But at scale, it determines whether AI strengthens decision-making or just adds another layer to manage.

Conclusion

Explainability has become essential to how global supply chain security actually works.

Security decisions now rely on AI systems to assess supplier risk, detect threats, and trigger intervention. These decisions affect border movement, supplier access, and continuity under pressure. If the reasoning behind them cannot be explained, security controls lose authority when they are challenged.

Explainability strengthens supply chain security by making AI-driven risk decisions defensible. It allows leaders to show why a supplier was flagged, why a shipment was stopped, or why a threat was escalated. That clarity supports supply chain risk management, improves supply chain visibility, and holds up during audits and regulatory review.

As global supply chains face tighter scrutiny and higher disruption risk, transparency is no longer optional. Explainability of AI models for compliance and audits is now part of maintaining a credible security posture, not just a requirement for governance teams.

The future of AI in supply chain security will not be defined by faster models or more data. It will be defined by decisions that can be explained, owned, and trusted across borders when it matters most.
