
Introduction

Banks use machine learning to support credit approvals, fraud alerts, transaction monitoring, and regulatory risk models. These decisions affect customers, capital, and compliance outcomes. Regulators expect banks to explain why models produce specific results and to reproduce past decisions during audits and investigations.

Many teams still generate explanations after deployment or only when regulators ask for them. That approach increases risk. It forces teams to recreate evidence long after decisions occur and often fails when models, features, or data pipelines change.

Mature banks integrate explainability directly into MLOps pipelines. They treat explanations as governed artifacts, not optional reports. This approach supports model risk management, audit readiness, and operational investigations without slowing deployment cycles.

This article explains where explainable AI fits inside banking MLOps workflows, what controls banks apply at each stage, and how teams use explanations to support credit, fraud, and regulatory risk use cases.

What Changes in the Banking AI Workflow When Explainability Is Added?

Adding explainability to MLOps pipelines changes how teams work and how decisions are reviewed. The traditional workflow of train, validate, and deploy now includes XAI-specific actions at each stage. These changes affect:

- Model validation: Feature contributions are analyzed alongside accuracy metrics.

- Deployment approvals: Models must meet explainability thresholds before promotion.

- Monitoring: Shifts in decision drivers are tracked in real time.

- Governance: Automated logs capture explainability outputs for audits.

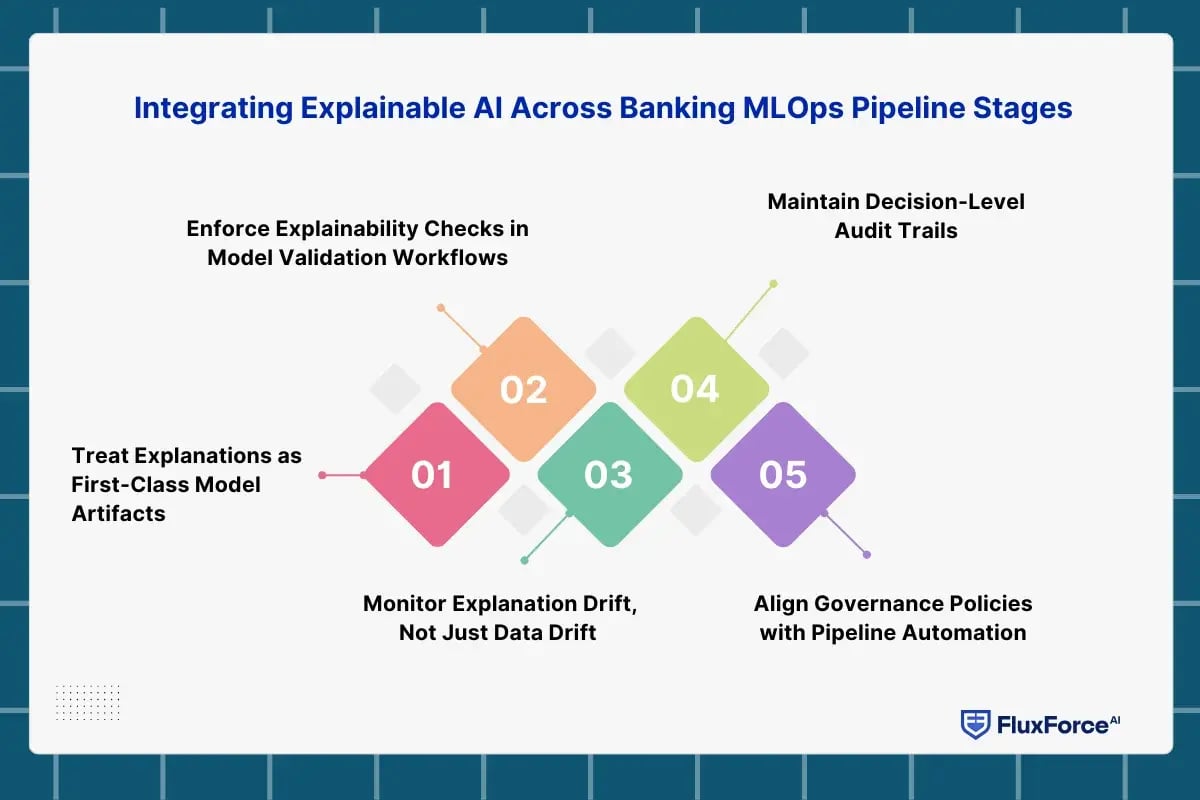

Integrating Explainable AI Across Banking MLOps Pipeline Stages

Stage 1: Data Preparation and Feature Engineering

Explainability begins before model training. Data and feature selection decisions must be traceable and well-documented:

- Feature Lineage Tracking: Each variable’s source, transformation, and business rationale is recorded. For example, a debt-to-income ratio can be traced back to the source system that supplies the underlying debt and income fields.

- Metadata Storage: Feature definitions and transformations are stored in the pipeline as machine-readable metadata.

- Regulatory Alignment: Inputs are checked against guidance such as SR 11-7 (the Federal Reserve's model risk management guidance) and fair lending rules.

Integration impact: Data prep tools now generate XAI-ready artifacts automatically, enabling downstream traceability and audit support.
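As a minimal sketch of what a machine-readable lineage record could look like, the example below documents the debt-to-income feature next to the transformation it describes. The `FeatureLineage` schema, the field names, and the `loan_origination_system` source are illustrative assumptions, not a standard or any specific feature store's format.

```python
import json
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class FeatureLineage:
    """Lineage record for one model input (hypothetical schema)."""
    name: str
    source_system: str       # system that supplies the raw fields
    source_fields: tuple     # raw columns the feature is derived from
    transformation: str      # human-readable formula
    business_rationale: str  # why the feature belongs in the model
    regulatory_notes: str = ""


def debt_to_income(monthly_debt: float, monthly_income: float) -> float:
    """The transformation that the lineage record below documents."""
    if monthly_income <= 0:
        raise ValueError("monthly_income must be positive")
    return monthly_debt / monthly_income


dti_lineage = FeatureLineage(
    name="debt_to_income_ratio",
    source_system="loan_origination_system",  # assumed system name
    source_fields=("monthly_debt_payments", "gross_monthly_income"),
    transformation="monthly_debt_payments / gross_monthly_income",
    business_rationale="Measures repayment capacity relative to income.",
    regulatory_notes="Reviewed against fair lending policy.",  # placeholder text
)

# Serialize so the record can be stored and versioned with the pipeline run.
lineage_json = json.dumps(asdict(dti_lineage), indent=2, sort_keys=True)
print(lineage_json)
```

Keeping the record immutable (`frozen=True`) and serializing it with sorted keys makes it easy to diff between pipeline runs.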

Stage 2: Model Training and Candidate Evaluation

During training, explainability methods are applied alongside traditional performance metrics:

- XAI Methods: SHAP, LIME, or counterfactual explanations evaluate how features influence model predictions.

- Candidate Comparison: Multiple model architectures (e.g., gradient boosting vs neural networks) are assessed for both accuracy and interpretability.

- Hyperparameter Tuning: Teams balance predictive power with explanation consistency, selecting models whose decisions are understandable and stable.

Integration impact: Feature attribution outputs are stored alongside models in registries, forming the first evidence layer for audits and validations.
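To make feature attribution concrete, here is a small sketch for the simplest case: a linear score. Under a feature-independence assumption, the SHAP value of a feature in a linear model reduces to its weight times the feature's deviation from its training mean. The weights, feature names, and data below are invented for illustration. Non-linear models such as gradient boosting would need a dedicated explainer rather than this closed form.

```python
from statistics import mean


def linear_score(weights, intercept, x):
    """Score of a linear model: intercept + sum of weight * value."""
    return intercept + sum(weights[name] * x[name] for name in weights)


def linear_attributions(weights, baseline_means, x):
    """Per-feature contribution w_i * (x_i - mean_i) relative to the baseline."""
    return {
        name: weights[name] * (x[name] - baseline_means[name])
        for name in weights
    }


# Illustrative model and training data (assumed values).
weights = {"payment_history": 2.0, "debt_utilization": -1.5, "income": 0.5}
intercept = 0.1
training_rows = [
    {"payment_history": 0.9, "debt_utilization": 0.3, "income": 1.0},
    {"payment_history": 0.5, "debt_utilization": 0.7, "income": 0.6},
    {"payment_history": 0.7, "debt_utilization": 0.5, "income": 0.8},
]
baseline_means = {
    name: mean(row[name] for row in training_rows) for name in weights
}

applicant = {"payment_history": 0.4, "debt_utilization": 0.9, "income": 0.7}
attributions = linear_attributions(weights, baseline_means, applicant)

# Local accuracy: baseline score plus all attributions equals the score.
baseline_score = linear_score(weights, intercept, baseline_means)
applicant_score = linear_score(weights, intercept, applicant)
print(attributions)
print(baseline_score + sum(attributions.values()), applicant_score)
```

The local accuracy check at the end is the property that makes these attributions useful as audit evidence: the contributions fully account for the gap between the applicant's score and the baseline.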

Stage 3: Pre-Deployment Validation and Model Risk Assessment

Explainability is embedded in the validation process, not applied afterward:

- Feature Alignment Checks: Validation teams confirm that feature contributions match business logic.

- Segment Analysis: Models are evaluated for consistent behaviour across customer segments.

- Bias Detection: Proxies for protected attributes are identified and mitigated.

Artifacts produced: SHAP/LIME outputs, validation reports, and bias metrics are versioned and linked to each model candidate.

Integration impact: Models cannot be promoted without passing explainability thresholds, making validation gates XAI-aware.
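A validation gate of this kind can be sketched as a function that inspects a candidate's mean absolute attributions and reports why promotion should be blocked. The 50% dominance limit, the feature names, and the `GateResult` structure are assumptions chosen for illustration. A real gate would likely add segment consistency and bias metrics as further checks.

```python
from dataclasses import dataclass, field


@dataclass
class GateResult:
    passed: bool
    failures: list = field(default_factory=list)


def explainability_gate(mean_abs_attribution, prohibited_features,
                        max_feature_share=0.5):
    """Check feature dominance and prohibited-variable influence.

    mean_abs_attribution maps feature name -> mean |attribution| over a
    validation set. Thresholds here are illustrative, not regulatory values.
    """
    total = sum(mean_abs_attribution.values())
    if total <= 0:
        return GateResult(False, ["no attribution mass to evaluate"])

    failures = []
    for name, value in mean_abs_attribution.items():
        share = value / total
        if name in prohibited_features and value > 0:
            failures.append(f"prohibited feature has influence: {name}")
        elif share > max_feature_share:
            failures.append(f"feature dominance: {name} ({share:.0%})")
    return GateResult(passed=not failures, failures=failures)


prohibited = {"zip_code"}  # assumed proxy for a protected attribute

balanced = {"payment_history": 0.40, "debt_utilization": 0.35, "income": 0.25}
proxy_driven = {"payment_history": 0.2, "zip_code": 0.7, "income": 0.1}
dominated = {"payment_history": 0.8, "income": 0.2}

for candidate in (balanced, proxy_driven, dominated):
    result = explainability_gate(candidate, prohibited)
    print(result.passed, result.failures)
```

Returning the list of failures, rather than only a boolean, gives validators a record of exactly which threshold blocked a candidate.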

Stage 4: Model Registry and Version Control

Approved models and their explainability outputs are stored in a centralized registry:

- Stored Artifacts: Model binaries, hyperparameters, feature attribution summaries, bias tests, and validation reports.

- Versioning: Each model version retains XAI outputs, enabling reproducibility.

- Access Control: Risk, compliance, and business teams can review model logic without manual reconstruction.

Integration impact: Registries serve as a single source of truth for both production operations and regulatory audits.
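One way to tie explainability outputs to a model version is to record a content hash of every artifact in the registry entry, so later reviewers can confirm they are looking at the same evidence. The sketch below uses SHA-256 from the standard library. The entry layout, artifact names, and model name are hypothetical and do not reflect any particular registry product.

```python
import hashlib
import json


def fingerprint(payload: bytes) -> str:
    """SHA-256 hex digest of an artifact's bytes."""
    return hashlib.sha256(payload).hexdigest()


def build_registry_entry(model_name, version, artifacts):
    """Record one digest per artifact under a model version."""
    return {
        "model_name": model_name,
        "version": version,
        "artifact_sha256": {
            name: fingerprint(data) for name, data in sorted(artifacts.items())
        },
    }


def changed_artifacts(entry, artifacts):
    """Names whose current bytes no longer match the registered digest."""
    recorded = entry["artifact_sha256"]
    current = {name: fingerprint(data) for name, data in artifacts.items()}
    names = sorted(set(recorded) | set(current))
    return [n for n in names if recorded.get(n) != current.get(n)]


artifacts = {
    "model.bin": b"\x00serialized-model-bytes",  # stand-in for a model binary
    "attribution_summary.json": json.dumps({"payment_history": 0.4}).encode("utf-8"),
    "validation_report.md": b"Validation passed.",
}
entry = build_registry_entry("credit_risk_scorecard", "1.4.0", artifacts)

tampered = {**artifacts, "attribution_summary.json": b"{}"}
print(changed_artifacts(entry, artifacts))  # → []
print(changed_artifacts(entry, tampered))   # → ['attribution_summary.json']
```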

Stage 5: Production Deployment and Real-Time Explainability

Explainability continues into live operations:

- Decision Logging: Each prediction includes its feature contributions, stored alongside transaction or request data.

- Alert Mechanisms: Operations teams receive notifications if feature importance shifts unexpectedly.

- Customer Support Integration: Analysts can reference explanation outputs during dispute resolution or fraud investigations.

Integration impact: Real-time explainability becomes part of the operational workflow, reducing manual interventions and improving response times.
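A decision log entry of the kind described above might look like the following sketch, which writes one JSON line per prediction. The field names, the `card_fraud_detector` model, and the 0.80 alert threshold are assumptions for illustration. The in-memory `StringIO` stream stands in for whatever append-only store a bank actually uses.

```python
import io
import json
import uuid
from datetime import datetime, timezone


def build_decision_record(model_name, model_version, features,
                          attributions, score, threshold):
    """Bundle everything needed to reproduce and explain one decision."""
    return {
        "decision_id": str(uuid.uuid4()),
        "logged_at": datetime.now(timezone.utc).isoformat(),
        "model_name": model_name,
        "model_version": model_version,
        "features": features,
        "attributions": attributions,
        "score": score,
        "threshold": threshold,
        "outcome": "alert" if score >= threshold else "no_alert",
    }


def write_record(stream, record):
    """Append the record as a single JSON line."""
    stream.write(json.dumps(record, sort_keys=True) + "\n")


log = io.StringIO()  # stand-in for an append-only log store
record = build_decision_record(
    model_name="card_fraud_detector",  # hypothetical model
    model_version="2.3.1",
    features={"transaction_velocity": 7, "geolocation_mismatch": 1, "amount": 950.0},
    attributions={"transaction_velocity": 0.31, "geolocation_mismatch": 0.42, "amount": 0.05},
    score=0.91,
    threshold=0.80,
)
write_record(log, record)

# An analyst or auditor can later read the line back unchanged.
restored = json.loads(log.getvalue().splitlines()[0])
print(restored["outcome"], restored["model_version"])
```

Storing the threshold with each record matters because thresholds change over time, and an auditor needs the value that applied when the decision was made.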

Stage 6: Ongoing Monitoring and Explanation Drift Detection

Monitoring now includes both accuracy metrics and explanation stability:

- Explanation Drift Detection: Tracks whether feature contributions change relative to baseline behaviour.

- Automated Alerts: Teams are notified when models rely disproportionately on specific features.

- Root-Cause Analysis: Drift signals are traced back to upstream data issues or behavioural shifts in customer activity.

Integration impact: This proactive approach helps catch silent model failures early, improves decision consistency, and supports regulatory compliance.
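Explanation drift can be quantified in several ways. The sketch below uses one simple option: convert mean absolute attributions into shares of total influence, then take the total variation distance between the baseline and current shares. The 0.2 alert threshold and the fraud-model numbers are illustrative assumptions, not recommended values.

```python
def attribution_shares(mean_abs_attribution):
    """Normalize mean |attribution| per feature into shares that sum to 1."""
    total = sum(mean_abs_attribution.values())
    if total <= 0:
        raise ValueError("attributions must have positive total mass")
    return {name: value / total for name, value in mean_abs_attribution.items()}


def explanation_drift(baseline, current):
    """Return (total variation distance, per-feature share shift)."""
    base = attribution_shares(baseline)
    curr = attribution_shares(current)
    names = set(base) | set(curr)
    shifts = {n: curr.get(n, 0.0) - base.get(n, 0.0) for n in names}
    distance = 0.5 * sum(abs(s) for s in shifts.values())
    return distance, shifts


DRIFT_THRESHOLD = 0.2  # assumed alerting threshold

# Mean |attribution| per feature at validation time and in a recent window.
baseline = {"transaction_velocity": 5.0, "geolocation": 2.0, "amount": 3.0}
current = {"transaction_velocity": 2.0, "geolocation": 5.5, "amount": 2.5}

distance, shifts = explanation_drift(baseline, current)
largest = max(shifts, key=lambda name: abs(shifts[name]))
if distance > DRIFT_THRESHOLD:
    print(f"explanation drift {distance:.2f}; largest shift: {largest}")
```

Because the comparison uses attributions rather than scores, it can flag a change in decision drivers even when the score distribution and accuracy metrics still look stable.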

Stage 7: Model Retirement and Historical Audit Support

When models are retired, their XAI artifacts remain accessible:

- Archived Outputs: All feature attributions, validation reports, and bias metrics are stored alongside model versions.

- Audit Evidence: Regulatory or internal queries can trace every historical decision to a specific model version and its explanations.

- Governance Assurance: Historical completeness ensures that retired models remain auditable for years.

Integration impact: Explainability artifacts extend the lifecycle of models beyond active production, ensuring compliance continuity.

How Explainability Makes Models Audit-Ready by Design

Embedding XAI into MLOps pipelines transforms audit preparation:

- Decision-Level Traceability: Every production outcome links to model version, input features, explanation output, and thresholds. This means that any credit decision, fraud alert, or risk score can be traced back to the exact model and data that generated it.

- Comprehensive Documentation: Training data, validation results, and feature importance analyses are versioned and stored automatically. All model updates, retraining activities, and validation checks are captured in real time.

- Bias and Fairness Evidence: Segment-level explanations help demonstrate that models comply with non-discrimination rules. Validation reports highlight whether features disproportionately impact protected groups, helping banks proactively mitigate risks and demonstrate adherence to fair lending and regulatory guidelines.

Impact of Explainable AI on Credit, Fraud, and Risk Decisions

Explainability changes how banks build, validate, and operate models across core use cases:

1. Credit Risk Models

Leveraging explainable AI for credit risk models clarifies why loans are approved or denied:

- Feature attribution identifies which factors, such as payment history, debt utilization, or income, drive decisions.

- Attribution reviews help reduce reliance on proxies for protected attributes, supporting fair lending compliance.

- Provides clear adverse action explanations for customers and regulators.
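As a rough sketch of how attributions could feed adverse action explanations, the function below picks the features that pushed a declined application's score down the most and maps them to reason statements. It assumes positive attributions push toward approval. The reason wording and feature names are hypothetical and would need review by compliance before any customer-facing use.

```python
# Hypothetical mapping from feature name to customer-facing reason text.
REASON_TEXT = {
    "payment_history": "History of late or missed payments",
    "debt_utilization": "High utilization of available credit",
    "income": "Income insufficient for amount requested",
    "account_age": "Limited length of credit history",
}


def adverse_action_reasons(attributions, reason_text, top_k=2):
    """Return reasons for the top_k most negative contributions.

    Assumes positive attributions push the score toward approval.
    """
    negative = [(name, value) for name, value in attributions.items() if value < 0]
    negative.sort(key=lambda item: item[1])  # most negative first
    return [reason_text.get(name, name) for name, _ in negative[:top_k]]


declined_applicant = {
    "payment_history": -0.80,
    "debt_utilization": -0.30,
    "income": 0.20,
    "account_age": -0.05,
}

reasons = adverse_action_reasons(declined_applicant, REASON_TEXT)
print(reasons)
```

Features with positive contributions are excluded, so a factor that helped the applicant is never reported as a reason for denial.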

2. Fraud Detection Models

Explainable AI in fraud detection models improves operational efficiency and customer experience:

- Analysts see which features triggered alerts (e.g., geolocation, transaction velocity).

- Real-time explanations reduce investigation time and false positives.

- Historical logs support post-incident analysis and regulatory reporting.

3. Regulatory Capital and Stress Testing

Risk models for capital planning benefit from XAI:

- Feature attributions confirm models respond appropriately to macroeconomic factors.

- Stress-test outputs remain interpretable for regulators.

- Transparent models reduce rework during supervisory examinations.

Five Banking MLOps Best Practices to Follow

The practical steps listed below help organizations improve transparency without overhauling existing systems:

1. Treat Explanations as First-Class Model Artifacts

In mature banking MLOps practice, explanations are stored alongside model binaries, training datasets, and hyperparameters. Feature attribution profiles, bias checks, and segment behaviour summaries are versioned in the model registry. This ensures validation teams and auditors always review the same evidence that supported deployment decisions, instead of recreating explanations after issues arise.

2. Enforce Explainability Checks in Model Validation Workflows

Model validation in banking should not approve models based only on performance and stability metrics. Approval workflows must include explainability thresholds, such as acceptable feature dominance, prohibited variable influence, and segment consistency checks. These controls directly support model risk management requirements and reduce late-stage rejections by independent review teams.

3. Monitor Explanation Drift, Not Just Data Drift

Traditional monitoring focuses on population stability and score distributions. Banking MLOps best practices now include monitoring shifts in feature contributions and decision logic. If a fraud model suddenly relies more on location than transaction velocity, teams can intervene before accuracy metrics show visible decline.

4. Maintain Decision-Level Audit Trails

Every production decision should be traceable to:

- Model version

- Input features

- Explanation output

- Applied thresholds

This level of AI auditability allows banks to respond to regulatory queries without assembling evidence manually across multiple systems.

5. Align Governance Policies with Pipeline Automation

Policies alone do not enforce responsible AI. Governance controls must be embedded in CI/CD gates, validation sign-offs, and deployment approvals. When explainability checks fail, promotion to production should automatically stop. This is where AI governance in banking becomes enforceable, not advisory.

Conclusion

In banking MLOps pipelines, explainability is typically introduced during both the model validation and production monitoring stages. After training, feature attributions are generated alongside performance metrics and stored with the model version in the registry. This allows model risk teams to review not only accuracy but also decision drivers before deployment.

Once in production, the same explanation logic runs on live transactions, creating traceable decision logs that support regulatory audits and post-incident investigations. As a result, explainability becomes part of standard model governance rather than an external reporting step.
