Introduction

Recent regulatory reviews show that most large banks now use AI in at least one core decision system. As AI in financial services expands across credit, fraud, and compliance, regulators are shifting focus from performance to accountability. Financial institutions are expected to explain how automated decisions are made, not just defend outcomes.

This is why explainable AI (XAI) in finance has become a control requirement rather than a technical option. Black-box models increase exposure across fair lending, fraud operations, and model risk management. When decisions cannot be explained, they cannot be audited or defended.

Explainable AI supports trustworthy AI by making model behavior transparent to risk teams, compliance leaders, and regulators. It enables clear decision traceability and strengthens governance across high-impact financial use cases.

This blog outlines best practices for deploying explainable AI in financial systems. The focus is on practical deployment in regulated environments. Each section addresses how financial institutions can implement explainability without adding operational or compliance risk.

Practice 1 – Make Explainability a Governance Standard

Deploying explainable AI in financial systems must start with governance. This is the first and most critical best practice. In financial services, explainability cannot be treated as a technical enhancement or a model add-on.

Banks should formally define explainability within their AI governance framework. This means stating where explanations are mandatory, how they are reviewed, and who is accountable for them. High-impact use cases such as credit scoring, fraud detection, and compliance monitoring require consistent AI decision transparency by default.

When explainability is governed, institutions move closer to responsible AI and trustworthy AI adoption. Models become easier to validate, audit, and defend during regulatory reviews.

What this practice enforces

- Clear policies for explainable AI in banking use cases

- Standard requirements for AI model transparency across teams and vendors

- Defined ownership for explanation approval and escalation

- Alignment with AI risk management and compliance controls

By making explainability a governance standard, financial institutions reduce operational ambiguity and strengthen control over financial AI models. This practice creates a stable foundation for compliant and scalable explainable AI deployment.
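As an illustration only, the policy elements above (where explanations are mandatory, who reviews them, and who is accountable) could be captured in a small machine-readable registry that a deployment pipeline checks before release. The sketch below is a minimal example under stated assumptions: the `ExplainabilityPolicy` class, the role names, and the `check_deployment` function are all hypothetical and not taken from any specific governance framework.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExplainabilityPolicy:
    """One governance entry per AI use case (all field values are hypothetical)."""
    use_case: str
    explanation_required: bool
    reviewer_role: str      # team that reviews explanations
    accountable_owner: str  # role that approves and handles escalation


# Hypothetical registry covering the high-impact use cases named in the text.
POLICIES = {
    p.use_case: p
    for p in [
        ExplainabilityPolicy("credit_scoring", True, "model_validation", "chief_risk_officer"),
        ExplainabilityPolicy("fraud_detection", True, "fraud_operations", "head_of_fraud"),
        ExplainabilityPolicy("compliance_monitoring", True, "compliance", "chief_compliance_officer"),
    ]
}


def check_deployment(use_case: str, has_explanations: bool) -> list[str]:
    """Return governance violations for a planned deployment; empty means none found."""
    policy = POLICIES.get(use_case)
    if policy is None:
        return [f"no explainability policy defined for '{use_case}'"]
    if policy.explanation_required and not has_explanations:
        return [f"'{use_case}' requires explanations; escalate to {policy.accountable_owner}"]
    return []
```

A release gate could call `check_deployment("credit_scoring", has_explanations=False)` and block the deployment when the returned list is not empty. Keeping the policy in data rather than in documents makes ownership and escalation paths checkable by tooling.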

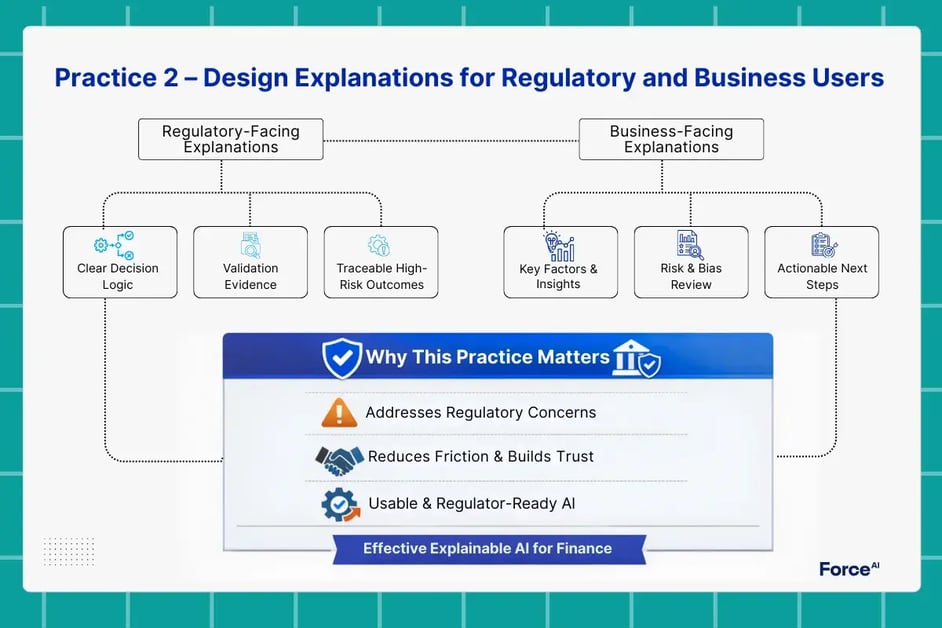

Practice 2 – Design Explanations for Regulatory and Business Users

Explainability fails when it assumes a single audience. In financial services, regulators, risk leaders, and business teams interpret AI decisions differently. Effective deployment of explainable AI requires explanations that are tailored by role, not by model type.

Regulatory guidance and industry research, including recent CFA Institute analysis, highlight that supervisors expect explanations to support accountability, audit review, and post-incident investigation. They do not evaluate AI logic the same way internal data science teams do. This mismatch often leads to rework, delayed approvals, or model restrictions.

Regulatory-facing explanations

For compliance and supervisory review, explanations must support AI model transparency and AI auditability. This includes:

- Clear decision logic tied to input data

- Evidence supporting AI model validation outcomes

- Traceable reasoning for adverse or high-risk decisions

These explanations form the basis of AI compliance in finance and must remain consistent over time.

Business-facing explanations

Operational teams require model interpretability that supports action. Fraud analysts, credit officers, and risk managers need to understand why a decision occurred and what factors drove it. This supports AI risk management, bias review, and escalation workflows.
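One way to picture role-based explanations is to render the same underlying feature attributions differently for each audience. The sketch below assumes the attributions have already been computed by some upstream method. The `render_explanation` function, the audience labels, and the convention that a positive value raises risk are hypothetical choices made for this example.

```python
def render_explanation(contributions: dict[str, float], audience: str, top_n: int = 3) -> str:
    """Format one set of signed feature contributions for a given audience.

    Assumption: a positive contribution pushed the decision toward higher risk.
    """
    # Rank factors by absolute influence, largest first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)

    if audience == "regulator":
        # Full, traceable listing: every factor with its signed value.
        return "\n".join(f"{name}: {value:+.3f}" for name, value in ranked)

    if audience == "analyst":
        # Short, actionable summary: only the strongest factors and their direction.
        parts = [
            f"{name} ({'raised' if value > 0 else 'lowered'} risk)"
            for name, value in ranked[:top_n]
        ]
        return "Top factors: " + ", ".join(parts)

    raise ValueError(f"unknown audience: {audience}")
```

Because both views are derived from the same attribution data, the regulatory record and the operational summary cannot contradict each other, which is the consistency the text asks for.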

Why this practice matters

Research shows that lack of explainability remains a top regulatory concern for banks adopting AI. Designing explanations by stakeholder role improves trust, reduces friction, and supports trustworthy AI without sacrificing decision speed.

Banks that apply this practice deploy explainable AI in banking environments that are usable, defensible, and regulator-ready.

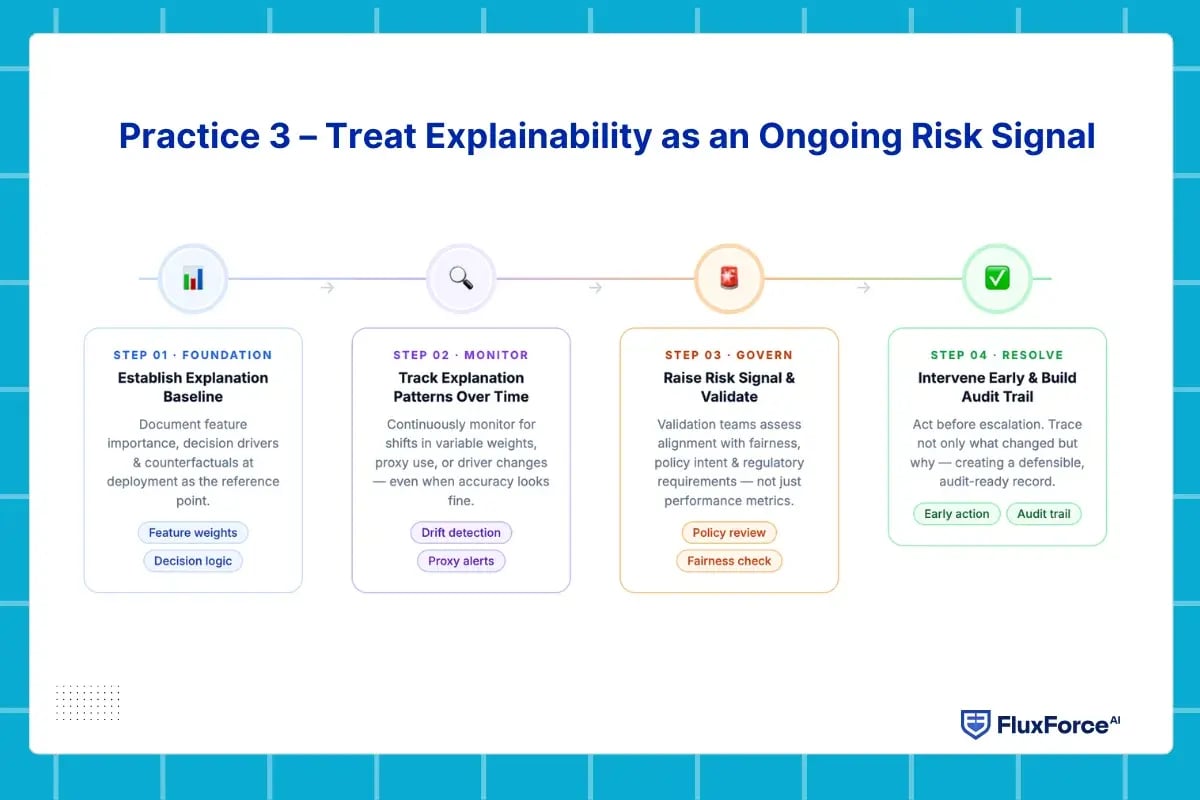

Practice 3 – Treat Explainability as an Ongoing Risk Signal

In financial systems, model risk rarely appears as a sudden failure. It develops gradually through small changes in data, customer behavior, or economic conditions. One of the most practical uses of explainable AI is identifying these shifts early, before they escalate into regulatory findings or operational losses.

In financial services, a model can remain statistically accurate while drifting away from its original intent. When teams cannot see how decision logic evolves, risk accumulates silently.
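A simple way to make this kind of silent shift visible is to track how much each feature contributes to decisions over time and flag large changes, even when accuracy looks stable. The sketch below is one possible approach, not a standard method: it compares each feature's share of total absolute attribution between a baseline window and a current window. The function names, the feature names, and the 0.10 threshold are assumptions for illustration.

```python
def attribution_shares(rows: list[dict[str, float]]) -> dict[str, float]:
    """Share of total absolute attribution carried by each feature across decisions."""
    totals: dict[str, float] = {}
    for row in rows:
        for name, value in row.items():
            totals[name] = totals.get(name, 0.0) + abs(value)
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {name: 0.0 for name in totals}
    return {name: total / grand_total for name, total in totals.items()}


def attribution_drift(
    baseline: list[dict[str, float]],
    current: list[dict[str, float]],
    threshold: float = 0.10,  # hypothetical tolerance; would be set by risk policy
) -> dict[str, float]:
    """Return features whose attribution share moved by more than the threshold."""
    base = attribution_shares(baseline)
    cur = attribution_shares(current)
    flagged: dict[str, float] = {}
    for name in sorted(set(base) | set(cur)):
        delta = cur.get(name, 0.0) - base.get(name, 0.0)
        if abs(delta) > threshold:
            flagged[name] = round(delta, 4)
    return flagged
```

If a feature such as a location-based variable gains a much larger share of influence than it had at approval time, the change can be routed to model risk review before it appears as a finding.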

Enabling real-world AI decision transparency

Operational teams need concise, consistent explanations tied to individual outcomes. In fraud operations, explainable AI for fraud detection works best when alerts surface the specific behavioral signals that triggered a flag. This reduces false positive handling time and improves analyst confidence.
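To show what surfacing the triggering signals can look like, the sketch below scores a transaction with a small logistic model and returns the features that pushed the score up the most. It is a toy example under stated assumptions: the weights, bias, and feature names are invented, and production fraud models are typically more complex and need a dedicated attribution method.

```python
import math

# Hypothetical model parameters, for illustration only.
WEIGHTS = {
    "new_device": 2.0,
    "amount_zscore": 0.8,
    "foreign_merchant": 1.5,
    "account_age_years": -0.3,
}
BIAS = -3.0


def score_with_signals(features: dict[str, float], top_n: int = 2) -> tuple[float, list[str]]:
    """Return the fraud probability and the signals that raised it most."""
    # In a linear model, each feature's contribution to the logit is weight * value.
    contributions = {name: WEIGHTS[name] * features.get(name, 0.0) for name in WEIGHTS}
    logit = BIAS + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-logit))

    # Only factors that increased the score are shown to the analyst as triggers.
    raising = [name for name, c in contributions.items() if c > 0]
    raising.sort(key=lambda name: contributions[name], reverse=True)
    return probability, raising[:top_n]
```

An alert built this way can show the analyst the score together with the two or three signals behind it, instead of a bare flag.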

In lending, explainable AI for credit scoring allows decision reviewers to assess alignment with fair lending policies without reverse-engineering model outputs. Clear explanations also support adverse action reasoning while preserving AI model transparency.
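For points-based scorecards, one simple way to derive adverse action reasons is to rank factors by how far the applicant fell short of the maximum available points. The sketch below illustrates that idea only. The scorecard entries, point values, and reason wording are hypothetical, and real adverse action notices must follow the institution's own legal and compliance requirements.

```python
# Hypothetical scorecard: maximum points per factor and the reason text shown
# when the applicant scores well below that maximum.
SCORECARD = {
    "utilization": {"max_points": 80, "reason": "Proportion of balances to credit limits is too high"},
    "payment_history": {"max_points": 100, "reason": "Delinquency on accounts"},
    "account_age": {"max_points": 50, "reason": "Length of credit history is too short"},
}


def adverse_action_reasons(points_awarded: dict[str, int], top_n: int = 2) -> list[str]:
    """Return reason statements for the factors with the largest point shortfall."""
    shortfalls = {
        name: spec["max_points"] - points_awarded.get(name, 0)
        for name, spec in SCORECARD.items()
    }
    # Keep only factors where points were actually lost, largest shortfall first.
    ranked = sorted(
        (name for name, gap in shortfalls.items() if gap > 0),
        key=lambda name: shortfalls[name],
        reverse=True,
    )
    return [SCORECARD[name]["reason"] for name in ranked[:top_n]]
```

Because the reasons come directly from the scoring logic, a reviewer can check them against fair lending policy without reverse-engineering the model output.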

Designing for performance and control

High-volume environments demand speed, but speed does not require opacity. Mature deployments separate prediction execution from explanation delivery. This hybrid structure allows machine learning explainability to operate in parallel, preserving system performance while maintaining oversight.
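The separation described above can be sketched with a queue and a background worker: the scoring path returns immediately, while the explanation is generated off the critical path and stored against the decision ID. This is a minimal single-process illustration with hypothetical names (`predict`, `explain`, `start_worker`) and a placeholder model. A production system would more likely use a message broker and durable storage.

```python
import queue
import threading

explanation_jobs: queue.Queue = queue.Queue()
explanation_store: dict[str, list[str]] = {}  # decision_id -> top factors


def predict(decision_id: str, features: dict[str, float]) -> float:
    """Fast path: compute the score, enqueue the explanation job, return at once."""
    score = sum(features.values())  # placeholder model, for illustration only
    explanation_jobs.put((decision_id, dict(features)))
    return score


def explain(features: dict[str, float], top_n: int = 2) -> list[str]:
    """Slow path stand-in: rank features by absolute value."""
    return sorted(features, key=lambda name: abs(features[name]), reverse=True)[:top_n]


def explanation_worker() -> None:
    """Consume jobs until a None sentinel arrives."""
    while True:
        job = explanation_jobs.get()
        try:
            if job is None:
                return
            decision_id, features = job
            explanation_store[decision_id] = explain(features)
        finally:
            explanation_jobs.task_done()


def start_worker() -> threading.Thread:
    worker = threading.Thread(target=explanation_worker, daemon=True)
    worker.start()
    return worker
```

After `start_worker()` is called, each `predict(...)` call returns without waiting for the explanation. Calling `explanation_jobs.join()` blocks until all queued explanations have been written, which is useful in tests and batch reconciliation.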

When explainability is embedded this way, financial AI models become easier to supervise, challenge, and refine. The result is stronger AI auditability, fewer escalations, and better alignment between automated decisions and institutional risk appetite.

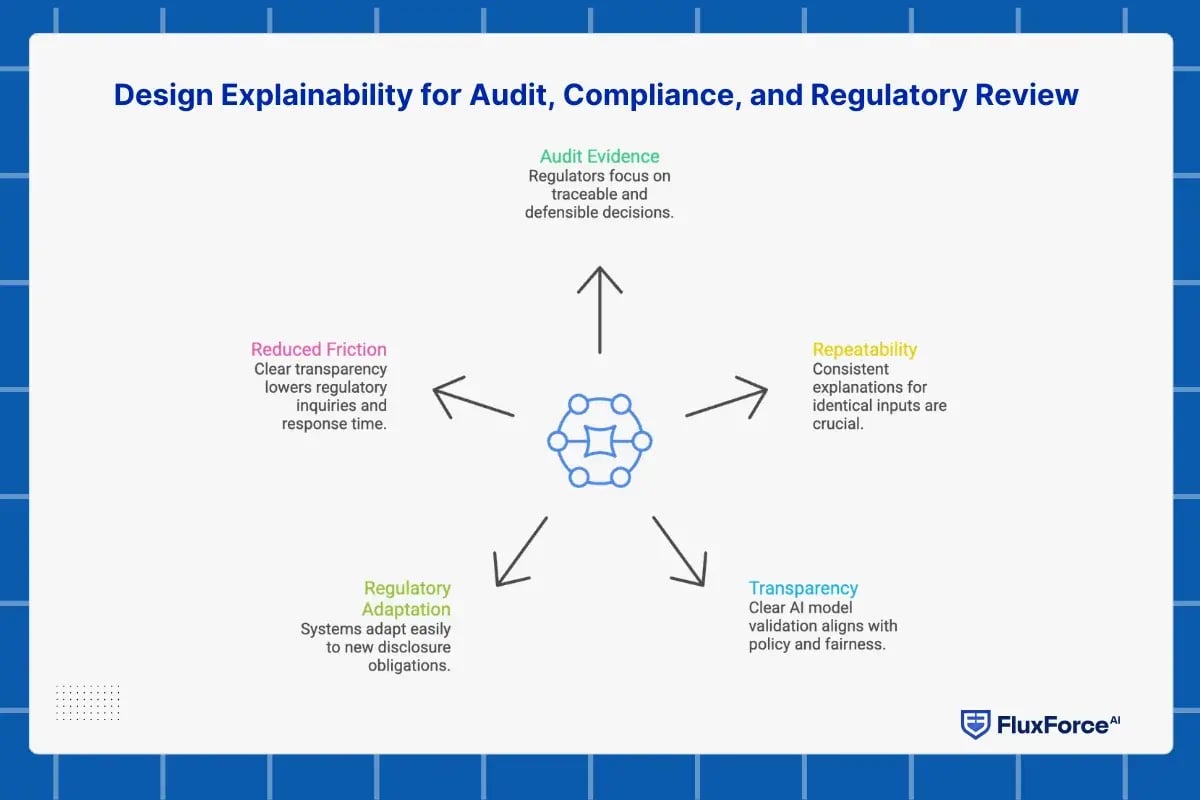

Design Explainability for Audit, Compliance, and Regulatory Review

Explainability carries the most weight when decisions are questioned after they are made. In financial services, regulatory reviews often occur months after deployment, when teams must justify outcomes under real scrutiny. Explainable AI must therefore support retrospective analysis, not just real-time understanding. This practice focuses on aligning explainability with how audits and regulatory examinations actually function.

What regulators actually test in explainable AI systems

Audits evaluate evidence, not intent. When supervisors assess explainable AI, they focus on whether a specific decision can be traced, explained, and defended after the fact. This applies to credit approvals, fraud alerts, and risk classifications.

An explanation that exists only at runtime does not meet audit expectations. Reviewers expect institutions to reproduce decision logic using the same model behavior and data context that existed at the time of execution.

Turning explainability into an audit control

For compliance teams, AI auditability depends on repeatability. Explanations must remain consistent for identical inputs. They must also be linked to the approved model version used in production. This linkage supports internal reviews and external examinations without manual reconstruction.
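The repeatability and version linkage described above can be illustrated with an audit record that stores the model version together with hashes of the inputs and of the explanation, so that a later replay can be checked against the original. The sketch below is a minimal example. The record fields and function names are assumptions, and a real system would also need tamper-evident storage and data retention controls.

```python
import hashlib
import json


def fingerprint(payload: dict) -> str:
    """Deterministic SHA-256 hash of a JSON-serializable dict (key order ignored)."""
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def build_audit_record(decision_id: str, model_version: str, inputs: dict, explanation: dict) -> dict:
    """Capture what is needed to later show which model explained which inputs, and how."""
    return {
        "decision_id": decision_id,
        "model_version": model_version,
        "input_hash": fingerprint(inputs),
        "explanation": explanation,
        "explanation_hash": fingerprint(explanation),
    }


def verify_replay(record: dict, model_version: str, inputs: dict, replayed_explanation: dict) -> bool:
    """True only if the replay used the same model version and inputs and
    reproduced an identical explanation."""
    return (
        record["model_version"] == model_version
        and record["input_hash"] == fingerprint(inputs)
        and record["explanation_hash"] == fingerprint(replayed_explanation)
    )
```

During an examination, the team would reload the recorded model version, rerun the explanation on the archived inputs, and call `verify_replay`. A `False` result indicates that the model, the data, or the explanation logic differs from what was used at decision time.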

Strong AI model transparency improves AI model validation. Validation teams can assess alignment with policy intent and fair use requirements. This is critical for regulated workflows such as lending and transaction monitoring.

Meeting regulatory expectations across regions

Expectations around AI compliance in finance continue to expand. Regulatory frameworks increasingly require institutions to demonstrate how automated decisions are governed. Systems designed with audit-ready explainability adapt more easily to new disclosure obligations.

Clear AI decision transparency reduces regulatory friction and lowers response time during supervisory inquiries. For organizations operating financial AI models at scale, this approach strengthens oversight and protects regulatory credibility.

Conclusion

Explainable AI fails in financial systems when it is treated as a feature instead of a discipline. Models may perform well, yet still create exposure if decisions cannot be reviewed, challenged, or defended later.

The practices outlined in this blog reflect how regulated teams actually evaluate AI in financial services. Explainability must align with governance. It must serve regulators and operators differently. It must surface risk early. It must exist inside production workflows. It must hold up during audits without reconstruction.

This is where most implementations break down. The gap is rarely theory. It is execution.

For banks and fintechs using explainable AI in credit, fraud, or risk decisions, the real question is not whether explanations exist. It is whether those explanations survive validation, audits, and operational pressure.

Choosing the right development partner determines that outcome. The right partner builds explainability as a control. The wrong one leaves teams defending models they do not fully trust.

If your organization is evaluating or scaling artificial intelligence in banking, the next step is to see how these practices work in production.

Request a Demo to review how explainable AI can be deployed with auditability, control, and regulatory confidence.