
Introduction

Over the past two years, regulators have increased their scrutiny of cross-border compliance risk, identifying it as a major driver of AML and sanctions failures. Institutions are now expected not only to screen partners but also to provide defensible explanations for their risk decisions across multiple jurisdictions.

Cross-border partners operating in high-risk jurisdictions often involve complex ownership structures, indirect control, and fragmented data sources. Traditional AML compliance tools, including static risk scoring and basic partner sanctions screening, can highlight potential exposure. However, they rarely explain why a partner presents elevated risk or which specific factors drive that assessment.

This creates material gaps in third-party risk management. When regulators or auditors ask why a high-risk vendor was onboarded, retained, or not escalated, compliance teams frequently struggle to provide clear, evidence-based reasoning. Increasingly, unexplained risk decisions lead to supervisory findings, remediation requirements, and enhanced monitoring obligations.

Explainable AI (XAI) addresses this gap by making risk scoring logic transparent and traceable. Compliance leaders gain clear visibility into which risk indicators influenced decisions, how those indicators were weighted, and how conclusions were reached. This supports audit readiness, regulatory confidence, and effective human-in-the-loop oversight in line with current model governance expectations.

Why Is Validating High-Risk Cross-Border Partners So Difficult?

Cross-Border Compliance Risk Varies by Jurisdiction

Cross-border compliance risk is not uniform across regions. Regulatory standards differ. Data access varies. Enforcement maturity is uneven. A partner viewed as low risk in one market may raise concerns in another.

This puts pressure on bank risk management teams. Compliance functions must balance local operating realities with global standards. Country-level risk ratings often oversimplify exposure and fail to reflect partner-specific behavior.

High-Risk Jurisdictions Limit Risk Visibility

Partners operating in high-risk AML jurisdictions often lack reliable ownership records or transparent corporate disclosures. Beneficial ownership information may be incomplete or outdated, and public data sources are fragmented.

Traditional AML compliance controls rely on structured and verifiable data. When data quality is weak, assessments are based on assumptions rather than evidence. This weakens third-party risk management and attracts regulatory scrutiny.

Sanctions Exposure Changes Over Time

Partner sanctions screening is no longer a one-time control. Sanctions regimes change frequently, and regulators now expect ongoing monitoring throughout the partner lifecycle.

Without a clear view of how sanctions risk evolves, institutions struggle to justify delayed escalation or missed exposure. This remains a common source of financial crime compliance findings.
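To make ongoing sanctions monitoring more concrete, here is a minimal Python sketch that re-screens an existing partner book whenever a new version of a sanctions list arrives. It reports both new hits and earlier hits whose list entries have since been removed. The function names, the partner dictionary, and the list format are hypothetical. Exact matching on normalized names is a deliberate simplification: production screening typically adds fuzzy matching, aliases, and ownership-based aggregation.

```python
import unicodedata


def normalise(name: str) -> str:
    """Case-fold, strip accents, and collapse whitespace for simple matching."""
    decomposed = unicodedata.normalize("NFKD", name)
    stripped = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    return " ".join(stripped.casefold().split())


def rescreen_on_update(
    partners: dict[str, str], old_list: list[str], new_list: list[str]
) -> tuple[dict[str, str], dict[str, str]]:
    """Compare two versions of a (hypothetical) sanctions list against partners.

    Returns (newly_hit, cleared): partners matching entries added in the new
    version, and partners matching entries removed from it.
    Simplification: exact match on normalized names only.
    """
    old_entries = {normalise(n) for n in old_list}
    new_entries = {normalise(n) for n in new_list}
    added = new_entries - old_entries
    removed = old_entries - new_entries

    newly_hit = {pid: name for pid, name in partners.items() if normalise(name) in added}
    cleared = {pid: name for pid, name in partners.items() if normalise(name) in removed}
    return newly_hit, cleared


if __name__ == "__main__":
    partners = {"P1": "Acme Trading LLC", "P2": "Café Export SARL", "P3": "Blue Ocean Ltd"}
    newly_hit, cleared = rescreen_on_update(
        partners, old_list=["Blue Ocean Ltd"], new_list=["ACME  trading llc"]
    )
    print("Escalate:", newly_hit)
    print("Review for closure:", cleared)
```

Keeping the "cleared" set matters for the audit trail. It records why an earlier escalation was closed, not just why a new one was opened.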

How Explainable AI Improves Cross-Border Partner Validation

For high-risk cross-border partners, regulators now expect institutions to explain how risk decisions were reached, not just present risk outcomes. Explainable AI (XAI) supports this shift by making partner risk assessments transparent.

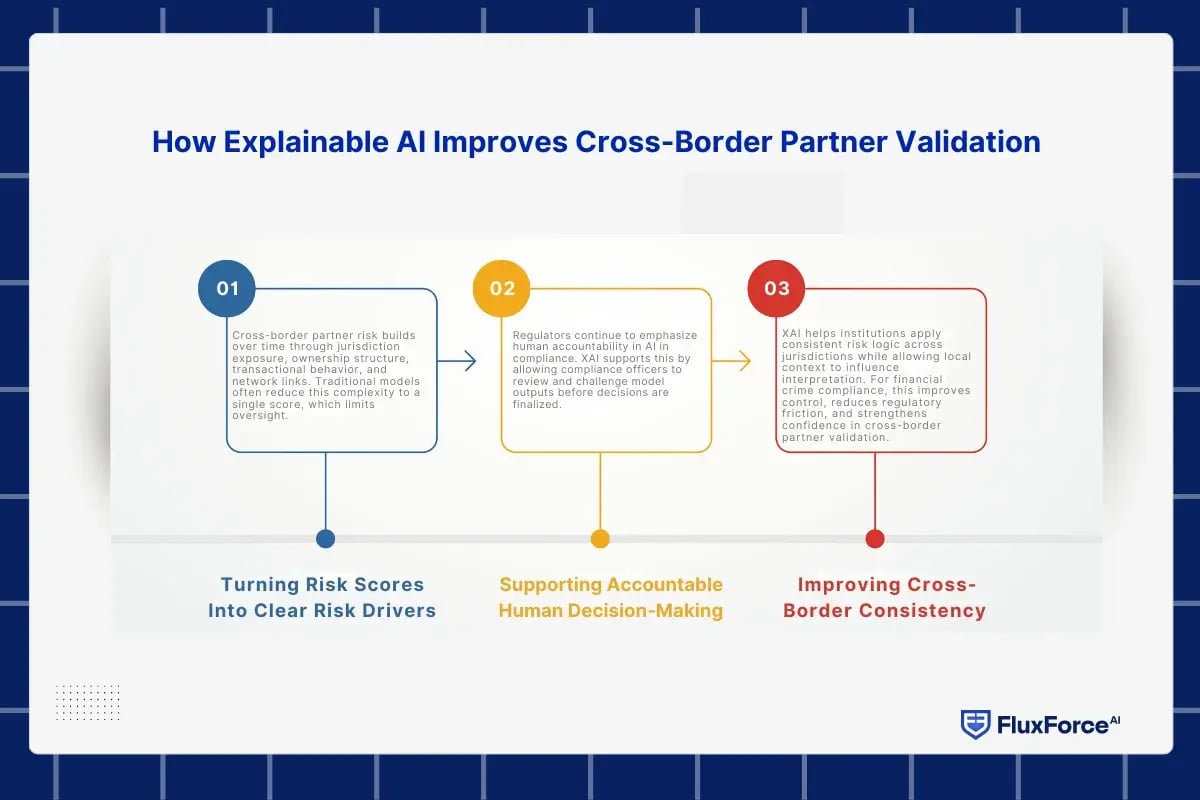

Turning Risk Scores Into Clear Risk Drivers

Cross-border partner risk builds over time through jurisdiction exposure, ownership structure, transactional behavior, and network links. Traditional models often reduce this complexity to a single score, which limits oversight.

XAI makes AI-based third-party risk assessment reviewable by showing which factors influenced a partner’s risk rating and how strongly each one contributed. Compliance teams can see why a partner was classified as high risk and which indicators triggered escalation. This clarity strengthens third-party risk management and supports evidence-based decisions.
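As an illustration of what "which factors contributed and how strongly" can mean in practice, the Python sketch below uses a simple additive score. Each factor's contribution is its weight multiplied by a normalized feature value, so the contributions add up exactly to the total. The factor names and weights are hypothetical placeholders, not a calibrated model. For non-linear models, attribution methods such as SHAP are commonly used to produce comparable per-factor contributions.

```python
from dataclasses import dataclass

# Hypothetical factor weights; a real model would be calibrated and validated.
WEIGHTS = {
    "jurisdiction_risk": 0.35,
    "ownership_complexity": 0.25,
    "transaction_anomaly": 0.25,
    "sanctions_proximity": 0.15,
}


@dataclass
class Explanation:
    score: float
    contributions: dict[str, float]

    def top_drivers(self, n: int = 2) -> list[tuple[str, float]]:
        """Return the n factors that contributed most to the score."""
        ranked = sorted(self.contributions.items(), key=lambda kv: kv[1], reverse=True)
        return ranked[:n]


def explain_score(features: dict[str, float]) -> Explanation:
    """Score a partner and record how much each factor contributed.

    Each feature is assumed to be normalized to [0, 1]. Because the model is
    additive, the contributions sum exactly to the score.
    """
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    return Explanation(score=sum(contributions.values()), contributions=contributions)


if __name__ == "__main__":
    partner = {
        "jurisdiction_risk": 0.9,
        "ownership_complexity": 0.8,
        "transaction_anomaly": 0.2,
        "sanctions_proximity": 0.1,
    }
    result = explain_score(partner)
    print(f"score={result.score:.3f}")
    for name, value in result.top_drivers():
        print(f"  {name}: {value:.3f}")
```

The additive form is chosen here because it makes the explanation exact by construction. A reviewer can check that the listed drivers fully account for the score.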

Supporting Accountable Human Decision-Making

Regulators continue to emphasize human accountability in the use of AI for compliance. XAI supports this by allowing compliance officers to review and challenge model outputs before decisions are finalized.

In bank risk management, this is critical for high-risk partner approvals. Explainability ensures decision-makers understand the rationale behind risk assessments and can apply judgment confidently. It also creates an audit trail showing that decisions were informed, reviewed, and governed.
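One way to capture that audit trail is to store each reviewed decision alongside the model's output and explanation. The record requires a written rationale and flags cases where the reviewer departed from the model's recommendation. The record structure, field names, and decision labels below are hypothetical. This is a minimal Python sketch rather than a prescribed schema.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical decision labels; institutions define their own.
ALLOWED_DECISIONS = {"approve", "approve_with_edd", "reject"}


@dataclass(frozen=True)
class ReviewDecision:
    """Immutable audit record for a human-reviewed high-risk partner decision."""

    partner_id: str
    model_score: float
    top_drivers: tuple[str, ...]  # explanation shown to the reviewer
    model_recommendation: str
    reviewer: str
    decision: str
    rationale: str
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def __post_init__(self) -> None:
        # Reject records that would leave the audit trail incomplete.
        for label in (self.decision, self.model_recommendation):
            if label not in ALLOWED_DECISIONS:
                raise ValueError(f"Unknown decision label: {label!r}")
        if not self.rationale.strip():
            raise ValueError("A reviewer rationale is required for every decision.")

    @property
    def is_override(self) -> bool:
        """True when the reviewer departed from the model's recommendation."""
        return self.decision != self.model_recommendation


if __name__ == "__main__":
    record = ReviewDecision(
        partner_id="P-001",
        model_score=0.58,
        top_drivers=("jurisdiction_risk", "ownership_complexity"),
        model_recommendation="reject",
        reviewer="analyst_a",
        decision="approve_with_edd",
        rationale="Beneficial owners verified via registry extract; EDD applied.",
    )
    print(record.is_override, record.timestamp)
```

Tracking overrides explicitly gives second-line reviewers and auditors a direct view of where human judgment changed the outcome.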

Improving Cross-Border Consistency

XAI helps institutions apply consistent risk logic across jurisdictions while allowing local context to influence interpretation. For financial crime compliance, this improves control, reduces regulatory friction, and strengthens confidence in cross-border partner validation.

Explainable Risk Scoring in Cross-Border Partner Due Diligence

AI compliance solutions translate regulatory expectations into enforceable controls. Regulators do not prescribe vendors, but they consistently test for governance, auditability, and risk mitigation. Institutions that deploy AI without these capabilities struggle to meet regulatory expectations for AI, even when model outputs appear accurate.

Traditional scoring approaches combine jurisdiction risk, ownership complexity, transaction behavior, and partner sanctions screening results into a single output. While useful for prioritization, these scores often fail to explain why a partner is classified as high risk. When risk levels change, compliance teams are left defending conclusions instead of presenting evidence. For financial crime compliance, that distinction is critical.

Explainable AI (XAI) makes risk scoring suitable for regulatory review. Explainable models reveal the drivers behind risk assessments, such as exposure to high-risk AML jurisdictions, indirect sanctions links, or changes in cross-border transaction activity. This transparency is essential for AI-based third-party risk assessment in complex international environments.

Regulators also expect proportionate responses to risk. Not every high-risk partner requires the same controls. XAI enables institutions to apply enhanced due diligence, transaction restrictions, or increased monitoring based on specific risk drivers rather than generic thresholds. This strengthens bank risk management and improves confidence in AI-driven compliance programs.
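The sketch below shows one way driver-based proportionality could work. Given per-driver contributions such as an explainable model would produce, it selects controls only for drivers whose contribution clears a materiality threshold, ordered from the largest driver down. The driver names, control descriptions, and the 0.1 threshold are hypothetical assumptions. In practice, the mapping would come from the institution's own risk appetite and control framework.

```python
# Hypothetical mapping from risk driver to a proportionate control.
CONTROLS_BY_DRIVER = {
    "jurisdiction_risk": "enhanced due diligence on local operations",
    "ownership_complexity": "verify ultimate beneficial owners",
    "transaction_anomaly": "increase transaction monitoring frequency",
    "sanctions_proximity": "restrict transactions pending sanctions review",
}


def select_controls(contributions: dict[str, float], materiality: float = 0.1) -> list[str]:
    """Return controls for material drivers, largest contribution first.

    Drivers without a mapped control are skipped in this sketch; a real
    framework would route them to manual review rather than ignore them.
    """
    ranked = sorted(contributions.items(), key=lambda kv: kv[1], reverse=True)
    return [
        CONTROLS_BY_DRIVER[name]
        for name, value in ranked
        if value >= materiality and name in CONTROLS_BY_DRIVER
    ]


if __name__ == "__main__":
    contributions = {
        "jurisdiction_risk": 0.315,
        "ownership_complexity": 0.2,
        "transaction_anomaly": 0.05,
        "sanctions_proximity": 0.015,
    }
    for control in select_controls(contributions):
        print("-", control)
```

Two partners with the same total score can receive different controls here, because the selection depends on which drivers produced the score.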

Ongoing Monitoring of Cross-Border Partners Using Explainable AI

Most cross-border partner risk does not surface during onboarding. It develops later through changes in transaction behavior, ownership links, or geographic exposure. Regulators are well aware of this, which is why ongoing monitoring has become a priority area in reviews of AML compliance and third-party risk management, particularly for partners connected to high-risk jurisdictions.

The issue is not whether monitoring exists. It is whether institutions understand what the monitoring results actually mean.

Alert Volume Without Risk Understanding

In many programs, ongoing monitoring still revolves around alert volume. Alerts increase. Reviews are triggered. Escalations follow. Yet compliance teams often struggle to answer a basic question: what changed in the partner’s risk profile, and why does it matter now?

Without that clarity, financial crime compliance becomes reactive. Decisions are driven by thresholds rather than understanding. This is where regulators begin to challenge the effectiveness of an institution's risk management, even when controls appear active.

Explainable AI and Meaningful Risk Change

Explainable AI (XAI) improves ongoing monitoring by tying risk changes to clear drivers. When a partner’s profile shifts, explainable models surface the cause. This may be increased exposure to high-risk AML jurisdictions, new indirect sanctions connections, or transaction behavior that no longer aligns with the expected risk profile.

For cross-border transaction risk analysis, this distinction is critical. Compliance teams can focus on material risk changes instead of noise, and escalation decisions become evidence-led rather than precautionary.
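One minimal way to separate material change from noise is to compare per-driver contributions from two monitoring cycles and report only the drivers that moved by more than a materiality threshold. This assumes the model produces such contributions at each cycle. The snapshot format, driver names, and the 0.05 threshold below are illustrative assumptions, written as a Python sketch.

```python
def explain_change(
    before: dict[str, float],
    after: dict[str, float],
    materiality: float = 0.05,
) -> list[tuple[str, float]]:
    """List drivers whose contribution changed by at least `materiality`.

    Drivers missing from one snapshot are treated as contributing 0, so a
    newly appearing driver (e.g. a new sanctions link) is reported as a change.
    Results are ordered by the size of the change, largest first.
    """
    drivers = set(before) | set(after)
    deltas = {d: after.get(d, 0.0) - before.get(d, 0.0) for d in drivers}
    material = [(d, delta) for d, delta in deltas.items() if abs(delta) >= materiality]
    return sorted(material, key=lambda item: abs(item[1]), reverse=True)


if __name__ == "__main__":
    last_quarter = {"jurisdiction_risk": 0.10, "transaction_anomaly": 0.05}
    this_quarter = {
        "jurisdiction_risk": 0.10,
        "transaction_anomaly": 0.07,
        "sanctions_proximity": 0.12,
    }
    for driver, delta in explain_change(last_quarter, this_quarter):
        print(f"{driver}: {delta:+.2f}")
```

Because the change is expressed per driver, an escalation note can state what moved rather than only that a score crossed a threshold.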

Defensible Decisions Over Time

From a governance perspective, ongoing explainability matters because decisions accumulate. XAI allows institutions to show why controls were tightened, why reviews were delayed, or why a partner was retained despite elevated risk. These explanations can be reviewed internally and defended externally.

For regulators assessing the use of AI in compliance, this demonstrates that monitoring is active, risk-aware, and accountable. In high-risk environments, transparency increasingly determines whether supervisory confidence is maintained or lost.

Explainability, Audit Readiness, and High-Risk Jurisdictions

Operating across borders places bank risk management under direct regulatory pressure, especially when partners sit in sanctioned or weak-control markets. In these cases, regulators focus less on whether a risk model exists and more on whether its outcomes can be explained, challenged, and defended.

Why Audit Readiness Breaks Down in Cross-Border Reviews

In high-risk AML jurisdictions, data gaps and indirect ownership are common. Traditional AML compliance tools often rely on aggregated risk scores that cannot show how jurisdiction risk, sanctions indicators, or transaction behavior influenced a decision. During audits, this creates a gap between what the model detected and what compliance teams can prove.

This is a frequent failure point in financial crime compliance reviews. Regulators increasingly expect institutions to explain why a high-risk partner was approved, retained, or exited, not just show that screening occurred.

How Explainable AI Strengthens Regulatory Confidence

Explainable AI (XAI) closes this gap by making decision logic visible. Compliance teams can demonstrate how individual risk drivers contributed to a partner’s risk profile and how controls were applied. This supports defensible third-party risk management decisions and aligns with governance expectations for AI in compliance.

In high-risk jurisdictions, explainability can also speed up supervisory reviews. Clear documentation reduces follow-up queries and lowers the risk of findings related to model opacity or weak oversight.

Conclusion

Validating high-risk cross-border partners has become one of the most scrutinized areas of AML and financial crime compliance. Sanctions volatility, opaque ownership structures, and uneven data quality across jurisdictions have made traditional approaches to third-party risk management increasingly difficult to defend.

In this environment, explainability is no longer optional. Regulators expect institutions to show how risk decisions were made, what factors influenced them, and how human oversight was applied. Explainable AI (XAI) enables this by turning model outputs into transparent, reviewable reasoning that supports sound bank risk management.

For cross-border partner due diligence, the value of XAI goes beyond automation. It builds regulatory trust, strengthens audit readiness, and allows compliance teams to operate confidently in high-risk jurisdictions, where unexplained decisions quickly become supervisory issues.

The strategic takeaway is clear. Institutions that embed explainability into their AI compliance frameworks are better positioned to validate partners, withstand regulatory challenge, and manage cross-border risk with credibility rather than assumption.
