Introduction

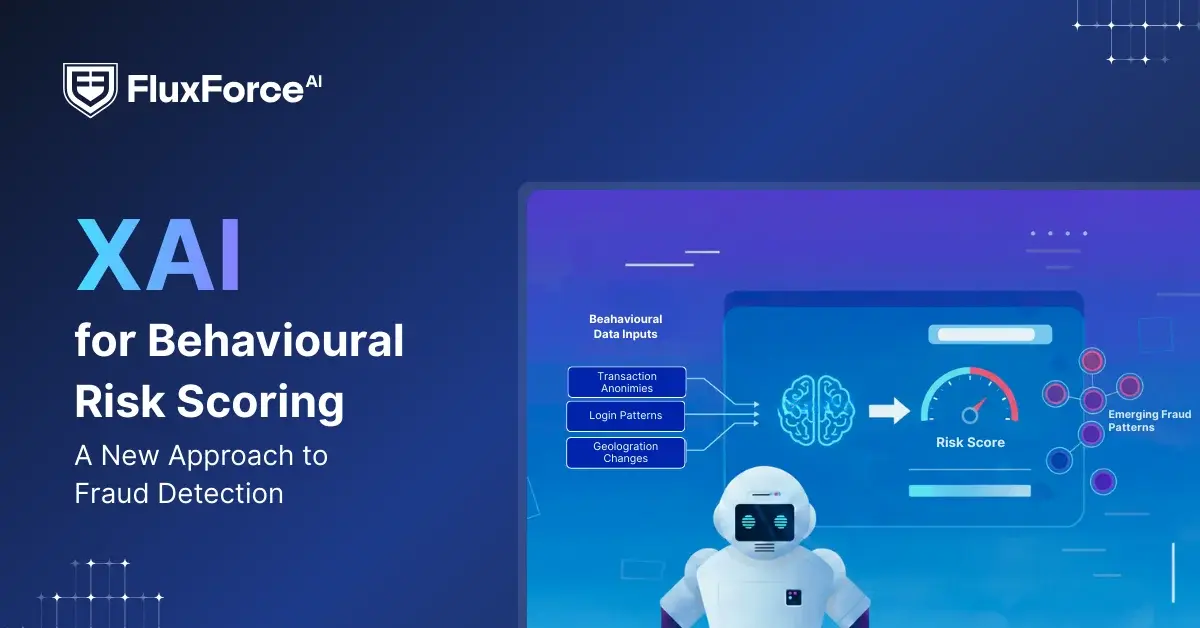

Fraud decisions today are increasingly driven by behavioural signals. Login frequency, changing locations, fund transfers, and more influence how risk is evaluated. But a verdict predicted without explainability is fast to produce and hard to justify.

For financial institutions, a decision without clear visibility into which behavioural signals influenced the outcome becomes a liability. It increases reliance on manual reviews, contributes to regulatory risk, and slows response timelines.

With the rise of Explainable AI (XAI) models in finance, a new approach to detecting fraud is emerging. By connecting behavioural patterns to the outcomes they drive, fraud decisions can be reviewed, questioned, and understood.

This piece analyses the impact of Explainable AI on risk scoring and how it shifts fraud detection from opaque prediction to data-driven decision-making.

The Reality Behind “AI-Driven” Fraud Models in Most Banks

Across the U.S., 91% of banks use AI in fraud detection workflows. These systems often generate accurate risk scores, but they rarely provide visibility into why a transaction, account, or behaviour was classified as high risk.

The result is a set of operational and regulatory challenges that directly affect fraud teams:

Risk scores are delivered without behavioural context

- Analysts can see that an account is flagged as high risk, but not which customer behaviours triggered the alert.

Verification workloads increase

- Without model reasoning, teams must manually review transactions, histories, and patterns to understand alerts.

Emerging fraud patterns go undetected

- Models trained on historical behaviour fail to surface early-stage behavioural shifts, allowing new fraud techniques to progress before controls adapt.

Audit and compliance reviews become difficult

- When decisions cannot be traced to observable inputs, audit reviews become slower and explanations to regulators become harder.

Risk signals remain fragmented across systems

- Independent AI tools generate alerts using separate logic and data, preventing a consolidated view of behavioural risk across the customer lifecycle.

How XAI Transforms Behavioural Risk Scoring in Practice

Explainable fraud risk models, instead of just flagging risky behaviour, show the actions that drove the behavioural score higher. Here's a breakdown of how Explainable AI scores risk based on behaviour:

1. Data ingestion from multiple sources

The system pulls behavioural data from transactions, login events, device fingerprints, and customer interaction logs. This creates a complete view of activity patterns.

2. Feature extraction and scoring

The model identifies which behaviours matter most, for example multiple login attempts from different locations within an hour, or sudden large transfers to new accounts. Each behaviour receives a numeric weight based on how strongly it correlates with fraud.

3. Behaviour score breakdown generation

Instead of outputting a single number, the model generates a behaviour score breakdown. This shows analysts exactly which signals contributed to the final risk rating. A score of 85/100 might break down as: 40 points from location anomaly, 30 points from transaction velocity, 15 points from device mismatch.

4. Real-time decision with interpretable output

The system delivers both the verdict and the reasoning. Analysts see not just "high risk" but "high risk due to login from new country + transfer to previously unused account + amount 300% above user average."
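The four steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a description of any vendor's implementation: the signal names, the fixed point values, the threshold of 70, and the `score_event` function are all hypothetical, chosen only to reproduce the 85/100 example. A production system would more likely derive per-signal contributions from a trained model using an attribution method than from a hand-written table.

```python
from dataclasses import dataclass
from typing import Dict

# Hypothetical point values per behavioural signal (assumed for illustration,
# chosen to match the 85/100 example in the text).
SIGNAL_POINTS: Dict[str, int] = {
    "location_anomaly": 40,
    "transaction_velocity": 30,
    "device_mismatch": 15,
}


@dataclass
class ScoredEvent:
    score: int                 # total risk score, capped at 100
    breakdown: Dict[str, int]  # points contributed by each triggered signal
    verdict: str               # "high risk" or "low risk"


def score_event(signals: Dict[str, bool], high_risk_threshold: int = 70) -> ScoredEvent:
    """Return the verdict together with the per-signal breakdown behind it."""
    # Keep only the signals that actually fired for this event.
    breakdown = {
        name: points
        for name, points in SIGNAL_POINTS.items()
        if signals.get(name, False)
    }
    total = min(sum(breakdown.values()), 100)
    verdict = "high risk" if total >= high_risk_threshold else "low risk"
    return ScoredEvent(score=total, breakdown=breakdown, verdict=verdict)


event = score_event(
    {"location_anomaly": True, "transaction_velocity": True, "device_mismatch": True}
)
print(event.score, event.verdict)
for name, points in event.breakdown.items():
    print(f"  {name}: {points}")
```

The point of the sketch is the return type: the score never travels without the breakdown that produced it, so an analyst reading the alert sees both at once.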

Moving from black-box prediction to transparent reasoning helps fraud teams make the right decisions. Real-time transaction monitoring with explainable AI allows teams to act faster and with more confidence.

The Shift Explainable Fraud Risk Models Introduce

Explainable fraud risk models bring a fundamental change to behavioural risk scoring. By replacing opaque risk scores with reasoning-led responses, they help organizations build trust. Below are the key shifts they introduce:

1. From Risk Scores to Explanation-Driven Verdicts: Instead of producing isolated behavioural scores, explainable models surface the behavioural patterns and signals that drive fraud decisions. This allows teams to understand why risk escalates, not just that it does.

2. Explainability in Risk Scoring for Analyst Confidence: Behavioural score breakdowns show exactly how each action contributes to overall risk. Analysts can validate alerts faster, reduce false positives, and avoid overreliance on automated outputs.

3. Transparency to Customers Without Exposing Models: Explainable fraud risk models support customer-facing transparency by providing defensible decision narratives without revealing sensitive detection logic.

4. Governance and Audit Readiness by Design: Because each decision can be traced back to observable behavioural inputs, firms can document and defend outcomes during audits and regulatory reviews instead of reconstructing the reasoning after the fact.
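One way to picture the third shift is a translation layer between the internal score breakdown and what the customer is told. The sketch below is an assumption-laden illustration: the signal names, the wording in `CUSTOMER_REASONS`, and the `customer_narrative` function are hypothetical. It ranks signals by contribution, keeps the top few, and deliberately drops the point values so that weights and thresholds are never exposed.

```python
from typing import Dict

# Hypothetical mapping from internal signal names to customer-safe wording.
CUSTOMER_REASONS: Dict[str, str] = {
    "location_anomaly": "a sign-in from an unfamiliar location",
    "new_beneficiary": "a transfer to an account you have not used before",
    "amount_spike": "an amount much higher than your usual activity",
}


def customer_narrative(breakdown: Dict[str, int], max_reasons: int = 2) -> str:
    """Turn an internal score breakdown into a message without scores or weights."""
    # Rank signals by contribution, highest first.
    ranked = sorted(breakdown.items(), key=lambda item: item[1], reverse=True)
    # Keep only signals that have approved customer wording, then the top few.
    reasons = [CUSTOMER_REASONS[name] for name, _ in ranked if name in CUSTOMER_REASONS]
    reasons = reasons[:max_reasons]
    if not reasons:
        return "We paused this activity for a routine security check."
    return "We paused this activity because we noticed " + " and ".join(reasons) + "."


message = customer_narrative(
    {"amount_spike": 15, "location_anomaly": 40, "new_beneficiary": 30}
)
print(message)
```

Signals without an entry in the mapping are silently omitted, which gives a fraud team a single place to decide what may be disclosed.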

According to McKinsey’s 2023 report, nearly 70% of financial service providers have started to implement XAI to strengthen fraud controls and regulatory compliance.

Comparison: XAI vs Traditional Credit Scoring Models Used by Banks

Both the results and the effectiveness of credit risk scoring change significantly when black-box models are replaced with Explainable AI systems. The table below highlights the differences:

Financial Services Use Cases for XAI-Based Behavioural Scoring

Explainable behavioural scoring not only improves fraud detection accuracy but also strengthens operational efficiency and compliance across financial institutions. Below are some key applications showing its impact:

1. Account Takeover Detection

XAI models identify when legitimate accounts show behavioural changes. Integrated risk-based authentication and behavioural biometrics work together to verify user identity based on typing patterns, mouse movements, and navigation habits.

2. AML and Fraud Analytics

XAI helps compliance teams detect money laundering patterns by explaining which transaction sequences and behavioural combinations triggered alerts. This makes case building more efficient and regulatory reporting more defensible.

3. Real-Time Transaction Monitoring

Financial services fraud risk scoring operates in milliseconds during payment processing. Explainable models allow systems to block suspicious transactions while giving merchants and customers clear reasons for the decision.

4. Personalized Risk Assessment

Interpretable machine learning for fraud detection enables banks to adjust security measures based on individual customer behaviour patterns. Low-risk customers experience less friction while high-risk activities face additional verification steps.
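Use cases 3 and 4 both come down to mapping a score, plus its reasons, to an action with the right amount of friction. The following is a rough sketch only: the thresholds of 50 and 80, the action names, and the `choose_action` function are assumptions for illustration, and real cut-offs would be tuned per institution and per customer segment.

```python
from typing import Dict, List


def choose_action(score: int, top_signals: List[str]) -> Dict[str, object]:
    """Map a risk score to an action, carrying the reasons along with it."""
    # Hypothetical thresholds: tune these against real alert outcomes.
    if score >= 80:
        action = "block"
    elif score >= 50:
        action = "step_up_verification"
    else:
        action = "allow"
    # Low-risk activity passes without friction, so no reasons are attached.
    reasons = list(top_signals) if action != "allow" else []
    return {"action": action, "reasons": reasons}


print(choose_action(85, ["location_anomaly", "transaction_velocity"]))
print(choose_action(55, ["device_mismatch"]))
print(choose_action(10, []))
```

Returning the reasons alongside the action is what lets the payment flow show a merchant or customer why a step-up or block happened, rather than only that it did.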

Key Reasons Why XAI-Driven Behavioural Scoring is the Next Fraud Detection Standard

By addressing the limitations of complex, opaque models, Explainable AI aligns closely with modern fraud prevention requirements. For organizations, XAI-driven behavioural scoring delivers several forward-looking benefits:

1. Regulatory compliance becomes manageable

When regulators ask why a customer was flagged or denied, banks can provide specific behavioural factors rather than generic risk scores. This satisfies growing regulatory demands for algorithmic transparency.

2. False positive rates drop significantly

Analysts can quickly validate or dismiss alerts when they understand the behavioural score breakdown. This reduces wasted investigation time and improves customer experience by minimizing incorrect blocks.

3. Models adapt to new fraud tactics faster

XAI for fraud detection reveals which behavioural signals matter most. When fraud patterns shift, teams can update detection rules based on actual behavioural data rather than guessing which variables to adjust.

4. Cross-team collaboration improves

Fraud analysts, data scientists, and compliance teams can discuss decisions using the same behavioural framework. Everyone understands what drove a verdict, making model refinement more efficient.

5. Customer trust increases

When customers understand why security measures were triggered, they cooperate more readily with verification processes. Transparency in decisions significantly reduces complaints and support tickets.
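Points 2 and 3 above rest on the same mechanism: once every alert carries a breakdown, those breakdowns can be aggregated by analyst outcome to see which signals drive confirmed fraud and which mostly drive false positives. The sketch below assumes a hypothetical record shape of `(outcome, breakdown)` pairs and uses made-up sample values purely for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Alert = Tuple[str, Dict[str, int]]  # (analyst outcome, per-signal points)


def mean_contribution_by_outcome(alerts: Iterable[Alert]) -> Dict[str, Dict[str, float]]:
    """Average each signal's points across all alerts sharing an outcome."""
    totals: Dict[str, Dict[str, float]] = defaultdict(lambda: defaultdict(float))
    counts: Dict[str, int] = defaultdict(int)
    for outcome, breakdown in alerts:
        counts[outcome] += 1
        for signal, points in breakdown.items():
            totals[outcome][signal] += points
    # A signal absent from an alert counts as zero for that alert.
    return {
        outcome: {signal: total / counts[outcome] for signal, total in signals.items()}
        for outcome, signals in totals.items()
    }


# Made-up sample alerts, for illustration only.
sample_alerts = [
    ("confirmed_fraud", {"location_anomaly": 40, "device_mismatch": 15}),
    ("false_positive", {"location_anomaly": 40}),
    ("false_positive", {"location_anomaly": 40, "transaction_velocity": 30}),
]
summary = mean_contribution_by_outcome(sample_alerts)
for outcome, signals in summary.items():
    print(outcome, signals)
```

In this toy data, a signal that contributes just as much to false positives as to confirmed fraud would be a candidate for review, which is the kind of conversation analysts, data scientists, and compliance teams can have over a shared breakdown.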

Conclusion

Companies don't struggle with detecting fraud. They struggle with explaining fraud decisions. The shift from traditional risk scoring to XAI-driven behavioural scoring models addresses this gap directly. By making fraud detection reasoning transparent, financial institutions gain operational efficiency, regulatory compliance, and customer trust simultaneously.

When each behavioural signal becomes clear, analysts detect fraud faster and act with confidence.

The question for financial institutions is no longer whether to adopt Explainable AI in risk scoring, but how quickly they can integrate it into existing fraud detection infrastructure. That’s why FluxForce AI provides pre-built AI modules that drive operational value within the first 90 days of deployment. With seamless API integration, our XAI-based behavioural scoring extends beyond better fraud detection.
