Introduction

Modern banking depends heavily on AI to catch and act on fraud in real time. But as AI use expands, fraudsters are learning to target the small blind spots that even advanced models still miss.

When an AI system flags activity without explaining why, teams spend time investigating, and by then new fraud patterns may already have done the damage. Last year, global fraud losses crossed $34 billion despite worldwide adoption of AI, a sign of how quickly attackers adapt.

This blog explains how Explainable AI (XAI) helps close these blind spots and improve banking fraud detection, and how it helps banks respond to fraud faster and make more accurate decisions.

XAI enhances fraud prevention by revealing hidden risks

Boost security with FluxForce AI today!

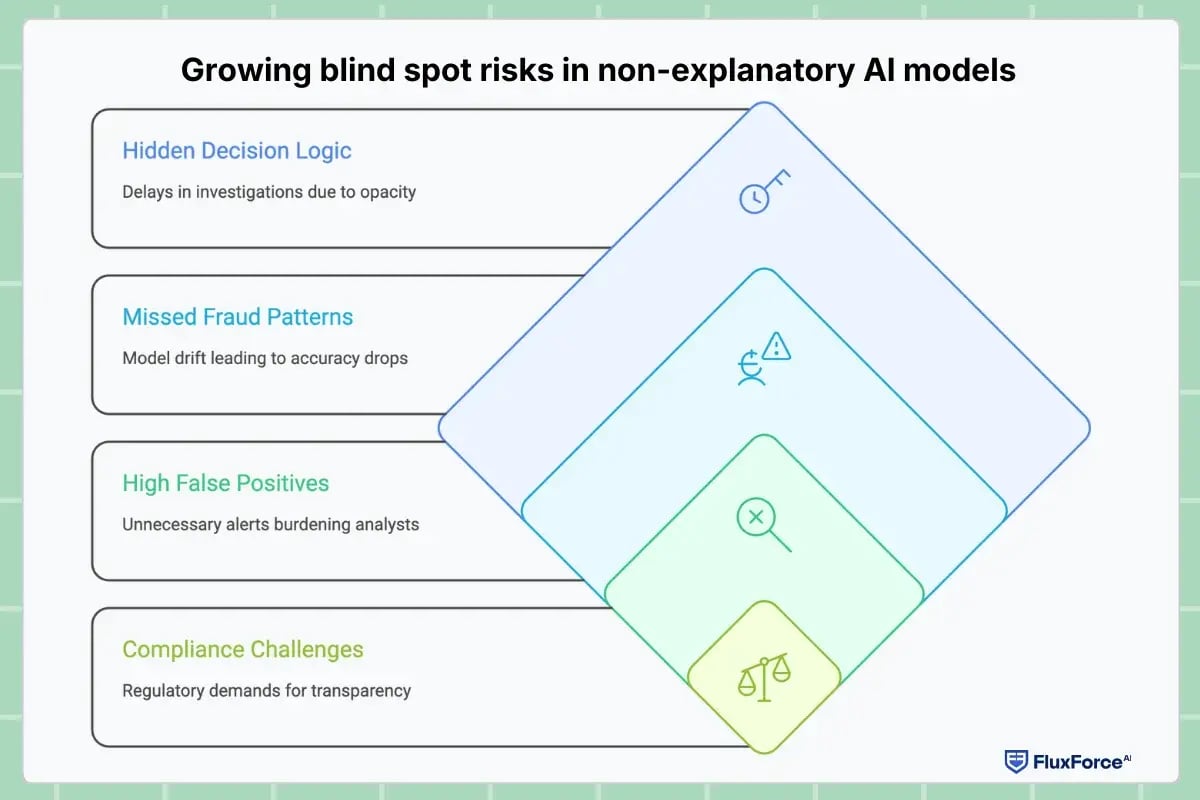

Growing blind-spot risks in non-explainable AI models

Many deep learning and machine learning models detect patterns well but do not explain their decisions. As fraudsters evolve their strategies, these opaque systems create measurable financial, operational, and compliance risks for banks.

Here are the major blind spots created by non-explainable fraud detection models:

1. Hidden decision logic that delays investigations

When analysts don’t know why a model flagged a transaction, investigation cycles stretch unnecessarily. Across banks, unclear alerts increase resolution times by roughly 30–50%.

2. Missed new fraud patterns due to model drift

Model drift is one of the biggest revenue-impacting issues in financial fraud detection. When shifts in customer behaviour or fraud patterns go unnoticed, model accuracy can drop significantly within months.

3. High false positives

Banks report that up to 70% of flagged alerts are false positives in black-box AI systems. Since analysts cannot see the model’s reasoning, they must treat every alert as critical.

4. Compliance challenges and weak audit trails

Regulators increasingly demand transparency in AI-driven fraud detection. Banks that cannot explain their models’ outputs risk fines that can run into the millions.

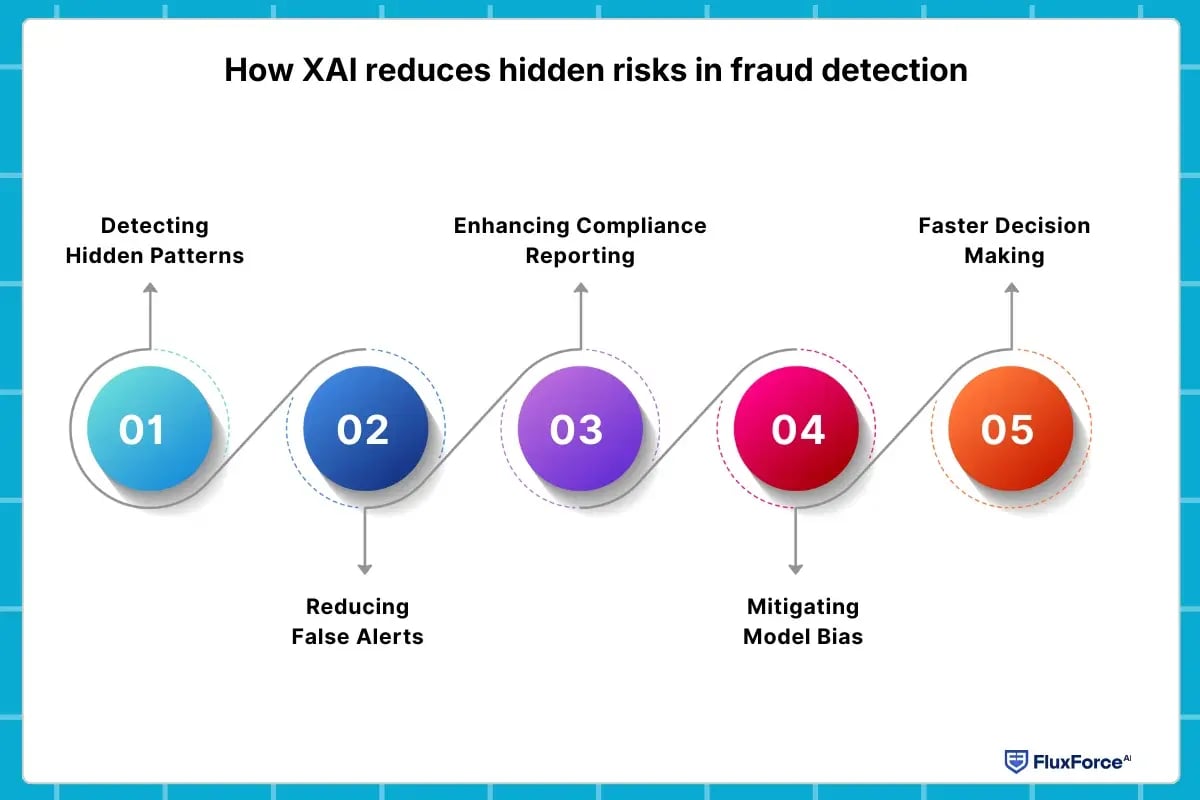

How XAI reduces hidden risks in fraud detection

Explainable AI reduces blind spots by showing analysts which factors made a transaction appear suspicious. For comparison, Perplexity, an AI search engine, lists the sources behind each answer it displays. Transparency in AI decisions improves detection, speeds investigations, and increases confidence in model outcomes.
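To make the idea concrete, here is a minimal Python sketch for the simplest case, a logistic-regression fraud score. The feature names, weights, intercept, and baseline (average feature values over legitimate traffic) are all hypothetical, not taken from any real model. For a linear model, each feature’s contribution is its weight times its distance from the baseline, and the contributions add up exactly to how far the score moved away from the baseline. Tools such as SHAP generalize this additive breakdown to non-linear models.

```python
import math

# Hypothetical logistic-regression fraud model: log-odds weight per feature.
WEIGHTS = {
    "amount_zscore": 0.8,      # how unusual the amount is for this customer
    "new_device": 1.5,         # 1 if the login device has not been seen before
    "foreign_ip": 1.2,         # 1 if the IP geolocates outside the home country
    "failed_logins_24h": 0.4,  # failed login attempts in the last 24 hours
}
INTERCEPT = -4.0
# Hypothetical baseline: average feature values over legitimate traffic.
BASELINE = {"amount_zscore": 0.0, "new_device": 0.1, "foreign_ip": 0.05, "failed_logins_24h": 0.3}

def log_odds(x):
    """Model score in log-odds space."""
    return INTERCEPT + sum(w * x[name] for name, w in WEIGHTS.items())

def explain(x):
    """Return fraud probability and per-feature contributions, strongest first.

    For a linear model, contribution_i = w_i * (x_i - baseline_i), and the
    contributions sum exactly to log_odds(x) - log_odds(BASELINE).
    """
    contributions = {name: w * (x[name] - BASELINE[name]) for name, w in WEIGHTS.items()}
    ranked = sorted(contributions.items(), key=lambda kv: kv[1], reverse=True)
    probability = 1.0 / (1.0 + math.exp(-log_odds(x)))
    return probability, ranked

# A flagged transaction: large amount, new device, foreign IP, no failed logins.
txn = {"amount_zscore": 3.2, "new_device": 1, "foreign_ip": 1, "failed_logins_24h": 0}
prob, drivers = explain(txn)
print(f"fraud probability: {prob:.2f}")
for name, contribution in drivers:
    print(f"  {name:<18} {contribution:+.2f} log-odds")
```

Sorting the contributions puts the strongest drivers first, which is what an analyst needs to see on an alert. A negative contribution (here, fewer failed logins than the baseline) pushes the score toward legitimate.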

XAI helps banks address several key risks in fraud detection:

1. Detecting Hidden Patterns: XAI shows analysts which factors influenced a model’s decision. It highlights unusual patterns such as rapid account activity, sudden location changes, or irregular transaction sequences, allowing teams to respond quickly and prevent potential losses.

2. Reducing False Alerts: Analysts can see why AI flagged a transaction, enabling them to separate genuine threats from harmless anomalies. This clarity reduces wasted effort, cuts the time spent on false positives, and directs resources toward the high-risk activities that need immediate attention.

3. Enhancing Compliance Reporting: XAI generates explanations that teams can share with regulators. Analysts can show why a transaction was flagged or approved, which supports compliance requirements and strengthens reporting.

4. Mitigating Model Bias: XAI reveals patterns that models may favour or ignore, exposing potential bias. Teams can then adjust the models accordingly, supporting fairer decisions and preventing fraud from slipping through overlooked data segments.

5. Faster Decision Making: Analysts can act quickly when AI decisions are transparent, approving, blocking, or investigating transactions without hesitation. Time-sensitive cases no longer rely on guesswork, which reduces losses and improves overall operational efficiency.
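Reducing false alerts and checking for bias both start with measuring errors by segment. The Python sketch below is a plain false-positive-rate comparison across customer segments: a standard fairness check rather than an XAI method in itself, which teams typically pair with explanations to understand why one segment is flagged more often. The record format, segment names, and data are hypothetical toy values.

```python
from collections import defaultdict

def false_positive_rate_by_segment(records):
    """Return the false positive rate for each customer segment.

    records: iterable of (segment, flagged, is_fraud) tuples.
    FPR = legitimate transactions flagged / all legitimate transactions.
    """
    legit = defaultdict(int)
    flagged_legit = defaultdict(int)
    for segment, flagged, is_fraud in records:
        if is_fraud:
            continue  # only legitimate transactions can be false positives
        legit[segment] += 1
        if flagged:
            flagged_legit[segment] += 1
    return {seg: flagged_legit[seg] / total for seg, total in legit.items()}

def largest_gap(rates):
    """Difference between the most- and least-flagged segments."""
    return max(rates.values()) - min(rates.values())

# Toy data: (segment, flagged by model, confirmed fraud)
records = [
    ("established", False, False), ("established", False, False),
    ("established", True, False), ("established", True, True),
    ("new_customer", True, False), ("new_customer", True, False),
    ("new_customer", False, False), ("new_customer", True, True),
]
rates = false_positive_rate_by_segment(records)
print(rates, "gap:", round(largest_gap(rates), 2))
```

A persistent gap like this is a prompt to inspect the explanations for the over-flagged segment, not proof of bias on its own.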

Explainable Workflows for Fraud Analysts and Compliance Teams

XAI fits naturally into daily fraud investigation processes. It gives analysts and compliance teams the clarity they need to act quickly and effectively. Here are the key explainable workflows enabled by XAI:

1. Real-Time Alerts with Insights

Whenever the fraud model flags activity, XAI displays the key factors behind it. Analysts can see at once whether the trigger was:

- Transaction amount

- Login device fingerprint

- Customer behaviour changes

This replaces long investigation cycles with instant clarity.

2. Root-Cause Analysis

Analysts can instantly see the underlying cause behind suspicious activity. XAI highlights patterns like:

- Repeated login failures

- Sudden cross-border transfers

- Unusual step-up authentication attempts

This helps banks address the source of fraud instead of reacting to isolated alerts.

3. Streamlined Case Management

As XAI clarifies each alert, analysts can prioritize cases based on clarity and risk. This helps teams:

- Identify high-risk transactions instantly

- Focus on cases with strong explanations

- Reduce backlog and investigation delays

This improves operational efficiency and ensures cases move through the system faster.

4. Collaboration Across Teams

Fraud analysts, compliance officers, and risk managers can all view the same explanations. XAI supports stronger collaboration by enabling:

- Shared understanding of each flagged event

- Fewer disagreements over decisions

- Smoother coordination during investigations

This reduces confusion and strengthens overall fraud prevention workflows.

5. Trusted AI-Driven Fraud Detection

When analysts understand how the model reaches its decisions, trust grows naturally. Transparent fraud detection leads to:

- Higher customer retention

- Fewer escalations caused by uncertainty

- Clearer communication with regulators and auditors

Trust makes the entire fraud detection process more reliable and predictable.
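The alert and case-management workflows above can be sketched as a small data structure: each alert carries the model’s risk score plus the per-feature contributions from the explainer, and a priority queue hands analysts the highest-risk case first. This is a minimal Python sketch; the `Alert` and `CaseQueue` names, their fields, and the example values are hypothetical and not part of any FluxForce or vendor API.

```python
import heapq
import itertools
from dataclasses import dataclass

@dataclass
class Alert:
    transaction_id: str
    risk_score: float                 # model output in [0, 1]
    reasons: list[tuple[str, float]]  # (feature, contribution) pairs from the explainer

    def summary(self, top_k: int = 3) -> str:
        """One-line alert text listing the strongest features pushing toward fraud."""
        positive = [r for r in self.reasons if r[1] > 0]
        top = sorted(positive, key=lambda r: r[1], reverse=True)[:top_k]
        drivers = ", ".join(f"{name} (+{value:.2f})" for name, value in top)
        return f"{self.transaction_id}: risk {self.risk_score:.2f} | drivers: {drivers}"

class CaseQueue:
    """Analyst work queue that always returns the highest-risk open alert."""

    def __init__(self) -> None:
        self._heap: list[tuple[float, int, Alert]] = []
        self._order = itertools.count()  # tie-breaker: equal scores stay first-in, first-out

    def push(self, alert: Alert) -> None:
        # heapq is a min-heap, so store the negated score to pop the highest risk first.
        heapq.heappush(self._heap, (-alert.risk_score, next(self._order), alert))

    def pop(self) -> Alert:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)

queue = CaseQueue()
queue.push(Alert("T-1001", 0.42, [("amount_zscore", 0.9), ("new_device", -0.2)]))
queue.push(Alert("T-1002", 0.91, [("foreign_ip", 1.1), ("failed_logins_24h", 0.7), ("amount_zscore", 0.3)]))
while queue:
    print(queue.pop().summary())
```

Using a counter as the tie-breaker keeps equal-risk alerts in arrival order and means `Alert` objects are never compared directly.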

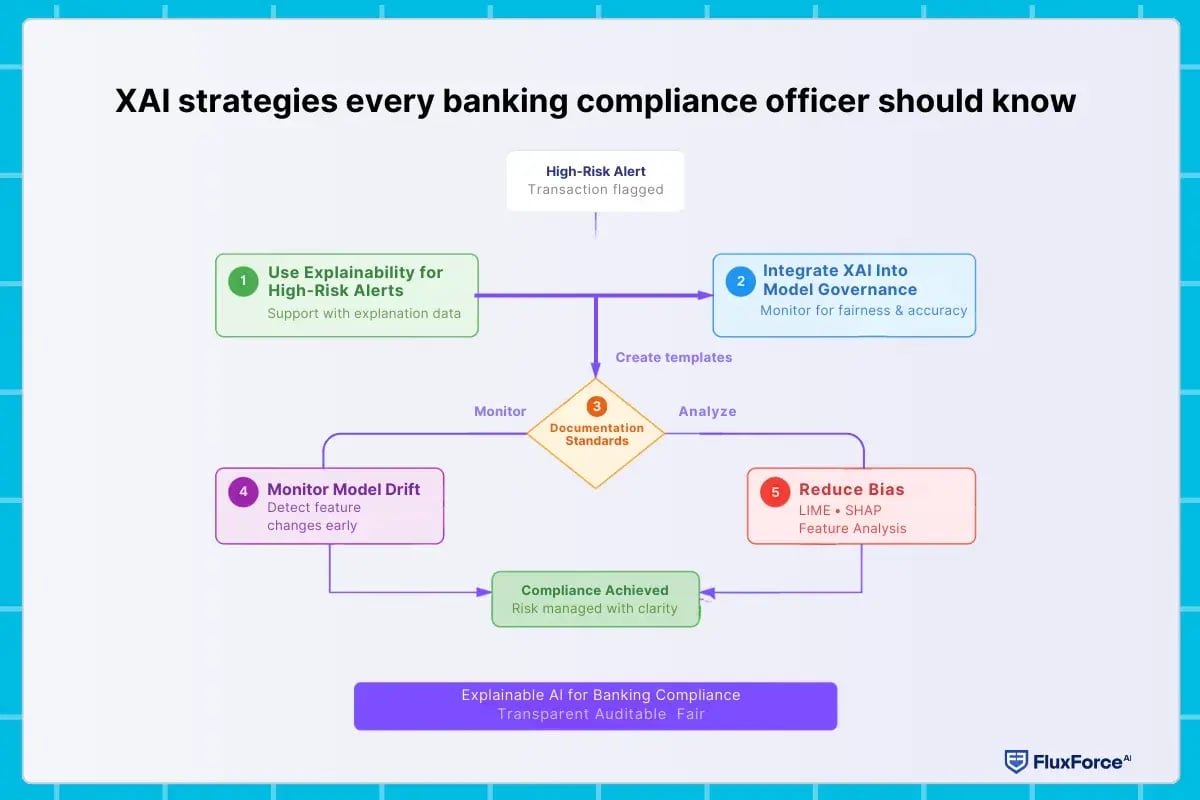

XAI strategies every banking compliance officer should know

To help compliance teams manage risk with clarity, banks should adopt the following strategies:

1. Use Explainability for Every High-Risk Alert

Compliance officers should ensure that any high-risk transaction is supported by explanation data. This helps teams understand the exact cause behind decisions and prepares them for regulator queries.

2. Integrate XAI Into All Model Governance Workflows

Explainability should be part of the bank’s AI governance process. This ensures models are monitored regularly for fairness, accuracy, and reliability.

3. Create Clear Documentation for Each Model Decision

XAI provides insights that can be used directly in compliance reports. Officers should create standardized documentation templates for easier regulatory reviews.

4. Monitor Model Drift with Explainability Tools

When model performance changes, XAI can show which features are behaving differently. This helps compliance officers detect drift early and take corrective action.

5. Use Explainable Techniques to Reduce Bias

Feature-attribution techniques such as LIME and SHAP help officers identify bias. This improves fairness and reduces the risk of regulatory penalties.
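For drift monitoring, a widely used per-feature metric is the Population Stability Index (PSI), which compares how values fall into bins in a reference window and a current window: PSI = Σ (current share − reference share) × ln(current share / reference share). The Python sketch below assumes hypothetical feature names, bin edges, and toy data, and uses 0.25 as the alert threshold, a commonly cited rule of thumb rather than a regulatory standard. The same calculation can be run on per-feature attribution values (for example SHAP values) to see which features’ influence on the model has shifted.

```python
import math
from bisect import bisect_right

def bin_shares(values, edges):
    """Fraction of values in each bin; edges are sorted interior cut points."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    total = len(values)
    # Floor empty bins at a tiny share so log() stays defined.
    return [max(c / total, 1e-6) for c in counts]

def psi(reference, current, edges):
    """Population Stability Index: sum of (cur - ref) * ln(cur / ref) over bins."""
    ref = bin_shares(reference, edges)
    cur = bin_shares(current, edges)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))

def drift_report(reference, current, edges_by_feature, threshold=0.25):
    """Features whose PSI exceeds the threshold, most drifted first."""
    scores = {
        feature: psi(reference[feature], current[feature], edges)
        for feature, edges in edges_by_feature.items()
    }
    drifted = [(f, s) for f, s in scores.items() if s > threshold]
    return sorted(drifted, key=lambda fs: fs[1], reverse=True)

# Toy reference (training-time) and current (production) windows.
reference = {
    "amount_zscore": [-1.0, -0.5, 0.0, 0.2, 0.4, -0.3, 0.1, 0.6, -0.8, 0.3],
    "new_device": [0, 0, 0, 1, 0, 0, 0, 0, 0, 0],
}
current = {
    "amount_zscore": [1.2, 1.5, 0.9, 2.0, 1.1, 0.4, 1.8, 2.2, 1.3, 0.7],
    "new_device": [0, 0, 1, 0, 0, 0, 0, 0, 0, 0],
}
edges = {"amount_zscore": [-0.5, 0.0, 0.5, 1.0], "new_device": [0.5]}

for feature, score in drift_report(reference, current, edges):
    print(f"{feature}: PSI {score:.2f} (investigate)")
```

Here only `amount_zscore` is reported: its distribution has shifted sharply upward, while the share of new-device logins is unchanged.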

What changes when XAI enters fraud detection (outcomes)

Explainable AI enhances banking fraud operations across several dimensions. The following table summarizes the key outcomes:

[Table image: What changes when XAI enters fraud detection (Outcomes)]

The future of transparent AI banking systems

SpaceX founder Elon Musk has frequently emphasized that AI systems must be transparent and aligned with human intentions.

In banking, this idea matters because fraud teams work in high-stakes environments where unclear model decisions create avoidable friction. Transparent AI models give teams precise reasoning behind alerts and reduce the guesswork that slows daily investigations. Over the next 4–5 years, banking fraud detection will increasingly rely on systems where every model decision can be traced to specific factors. Analysts and compliance teams will have direct visibility into feature impacts, enabling quicker identification of unusual patterns and more precise monitoring of model behaviour.

Conclusion

Most fraud models can flag suspicious activity, but they rarely explain the reasoning behind a decision. XAI closes these blind spots by revealing which patterns drove a decision, such as unusual spending spikes, and which signals the model largely ignored, such as unused event data. With clear explanations, analysts make faster, more accurate decisions and gain greater confidence in the model’s output. Explainability transforms fraud detection from a black-box process into a transparent, reliable, and high-performing system. Banks that adopt XAI today strengthen their defences and are better equipped to prevent fraud tomorrow.
