
Introduction

Modern banking relies heavily on AI and ML models to make decisions on loans, fraud alerts, AML checks, and risk scores. These intelligent models now influence outcomes with regulatory, financial, and customer impact. However, many operate with limited transparency, making it difficult for governance teams to understand how results were produced. Auditors and regulators expect clear reasoning behind every decision, but black‑box models rarely provide it. This gap puts pressure on banks to strengthen oversight and clarify model behaviour.

As a result, Explainable AI (XAI) has become an essential component of modern model governance, especially as reliance on automated decisions continues to grow.

Why Model Governance Demands Explainable AI in Modern Banking

Black-box AI systems may optimize for speed, but they create some of the costliest slowdowns in banking operations. When a model produces an outcome without showing its reasoning, teams spend hours manually reconstructing the decision. In the meantime, customer decisions stay on hold, risk cases remain open, and auditors are left without clarity.

Regulators expect banks to justify model outputs that affect customer decisions or increase risk. XAI gives governance teams the visibility these reviews depend on.

An AI system with a clear answer to every “why” can:

- Show the exact drivers behind each decision

- Help teams confirm alignment with approved rules

- Shorten audit and validation cycles

- Reduce compliance risk through transparent reasoning

- Surface bias or inconsistent behaviour

- Improve trust in automated risk and credit decisions

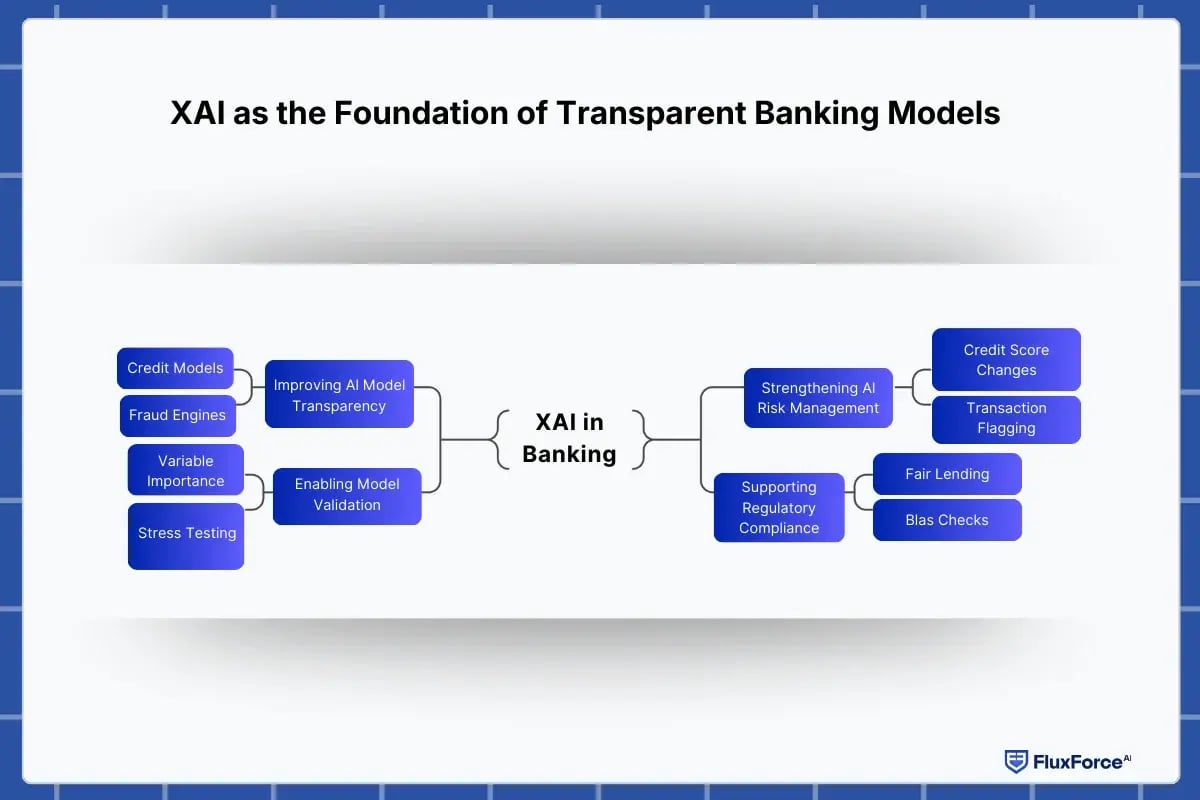

XAI as the Foundation of Transparent Banking Models

Open and transparent banking contributes to lasting relationships with customers. XAI gives teams a framework to achieve this by providing a direct view into the data points, signals, and patterns behind each model decision.

Below are the areas where XAI strengthens governance the most.

1. Improving AI Model Transparency in Banks

Every model that touches a customer journey benefits from transparency. Whether it’s a credit model ranking borrowers or a fraud engine scanning transactions, explainability reveals the variables that mattered most. Transparency helps governance teams verify:

- Whether the decision aligns with approved policies

- Whether the model relied on risky or unstable variables

- Whether any unintentional bias influenced the outcome

This level of transparency is essential across high-impact processes, such as loan assessments, interest rate decisions, and customer due diligence.
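To make "the variables that mattered most" concrete, here is a minimal sketch of a per-decision explanation for a simple logistic credit model. The feature names, weights, and baseline values are hypothetical and chosen only for illustration; a production model would typically need a dedicated attribution method rather than this linear shortcut.

```python
import math

# Hypothetical weights for an illustrative credit model (log-odds of approval).
WEIGHTS = {"income_k": 0.03, "debt_ratio": -4.0, "late_payments": -0.6}
INTERCEPT = 0.5
# Hypothetical portfolio averages, used as the reference point for explanations.
BASELINE = {"income_k": 60.0, "debt_ratio": 0.30, "late_payments": 1.0}


def score(applicant):
    """Return the approval probability from the logistic model."""
    z = INTERCEPT + sum(WEIGHTS[f] * applicant[f] for f in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-z))


def explain(applicant):
    """Per-feature contribution to the log-odds, relative to the baseline applicant."""
    contributions = {f: WEIGHTS[f] * (applicant[f] - BASELINE[f]) for f in WEIGHTS}
    # Sort by absolute impact so the strongest drivers come first.
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)


applicant = {"income_k": 45.0, "debt_ratio": 0.55, "late_payments": 3.0}
drivers = explain(applicant)
for name, contribution in drivers:
    print(f"{name}: {contribution:+.2f} log-odds")
```

Because the model is linear in log-odds, the contributions add up exactly to the gap between this applicant's score and the baseline score, which gives a reviewer a complete account of the decision.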

2. Strengthening AI Risk Management in Banking

Risk leaders need visibility to manage exposure effectively. XAI provides insight into:

- Why a credit score changed

- Why a transaction was flagged as high-risk

- Why a customer’s AML rating was escalated

- Why a borrower moved from “low risk” to “borderline”

With this clarity, risk leaders can identify anomalies, detect model drift early, and confirm that risk classifications reflect actual behaviour. In a 24/7 risk environment, explainability becomes a core monitoring tool.

3. Enabling Model Validation Using XAI

Validation teams must confirm that models behave consistently and within policy. XAI speeds up this work by revealing:

- The variables driving each output

- Whether feature importance aligns with expectations

- Whether explanations stay stable across segments

- How decision logic changes under stress testing

Explainability replaces trial-and-error with evidence. This results in faster validation cycles, clearer documentation, and fewer review delays.
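One way a validation team might check whether explanations stay stable across segments is to compare the top drivers per segment. The sketch below assumes per-decision attributions are already available as dictionaries; the segment names, feature names, and values are made up for illustration.

```python
def top_features(rows, k=2):
    """Names of the k features with the largest mean absolute attribution."""
    features = rows[0].keys()
    mean_abs = {f: sum(abs(r[f]) for r in rows) / len(rows) for f in features}
    return set(sorted(mean_abs, key=mean_abs.get, reverse=True)[:k])


def segment_overlap(attributions_by_segment, k=2):
    """Jaccard overlap of the top-k feature sets for every pair of segments."""
    tops = {seg: top_features(rows, k) for seg, rows in attributions_by_segment.items()}
    segments = sorted(tops)
    overlap = {}
    for i, a in enumerate(segments):
        for b in segments[i + 1:]:
            overlap[(a, b)] = len(tops[a] & tops[b]) / len(tops[a] | tops[b])
    return overlap


# Hypothetical attributions for two customer segments.
by_segment = {
    "retail": [
        {"income": 0.5, "debt_ratio": -0.4, "tenure": 0.1},
        {"income": 0.3, "debt_ratio": -0.6, "tenure": 0.05},
    ],
    "sme": [
        {"income": 0.1, "debt_ratio": -0.5, "tenure": 0.4},
        {"income": 0.05, "debt_ratio": -0.3, "tenure": 0.6},
    ],
}
overlap = segment_overlap(by_segment)
print(overlap)
```

A low overlap is not automatically a defect, since segments can legitimately differ, but it tells validators where to ask whether the difference matches policy expectations.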

4. Supporting Regulatory Compliance with XAI

Regulators often expect banking AI systems to justify their decisions, especially across lending, AML, fraud detection, and customer risk scoring. Explainability helps banks meet:

- Fair lending requirements

- Bias and discrimination checks

- Model Risk Management (MRM) guidelines

- AML/CTF model documentation demands

- GDPR provisions on automated decision-making, often described as a "right to explanation" (in relevant regions)

5. Driving Bias Detection in AI Models

AI models can inherit patterns from historical data and reproduce unfair outcomes. XAI helps banks spot this by showing:

- Disproportionate rejection rates

- Feature combinations that lead to unfair outcomes

- Patterns that suggest hidden bias

- Sensitive variable correlations

- Drifts in decision behaviour over time

With this clarity, governance teams can take corrective action, retrain models, or set limits to block biased decisions from reaching customers.
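The first item in the list, disproportionate rejection rates, can be checked with very little code. The sketch below is illustrative only: the groups and decisions are made-up sample data, and the 0.8 review threshold is an assumed heuristic, not a regulatory requirement stated in this article.

```python
from collections import defaultdict


def rejection_rates(decisions):
    """decisions: iterable of (group, approved) pairs. Returns the rejection rate per group."""
    totals = defaultdict(int)
    rejected = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if not approved:
            rejected[group] += 1
    return {g: rejected[g] / totals[g] for g in totals}


def adverse_impact_ratio(decisions):
    """Lowest group approval rate divided by the highest group approval rate."""
    approval = {g: 1.0 - r for g, r in rejection_rates(decisions).items()}
    highest = max(approval.values())
    return min(approval.values()) / highest if highest > 0 else 0.0


# Made-up sample: group A is approved 80% of the time, group B 50%.
decisions = (
    [("A", True)] * 80 + [("A", False)] * 20
    + [("B", True)] * 50 + [("B", False)] * 50
)
rates = rejection_rates(decisions)
ratio = adverse_impact_ratio(decisions)
needs_review = ratio < 0.8  # assumed review threshold
print(rates, round(ratio, 3), needs_review)
```

A flag like this only says that outcomes differ between groups. Explanations are what help a reviewer decide whether the difference comes from legitimate risk factors or from a proxy for a sensitive attribute.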

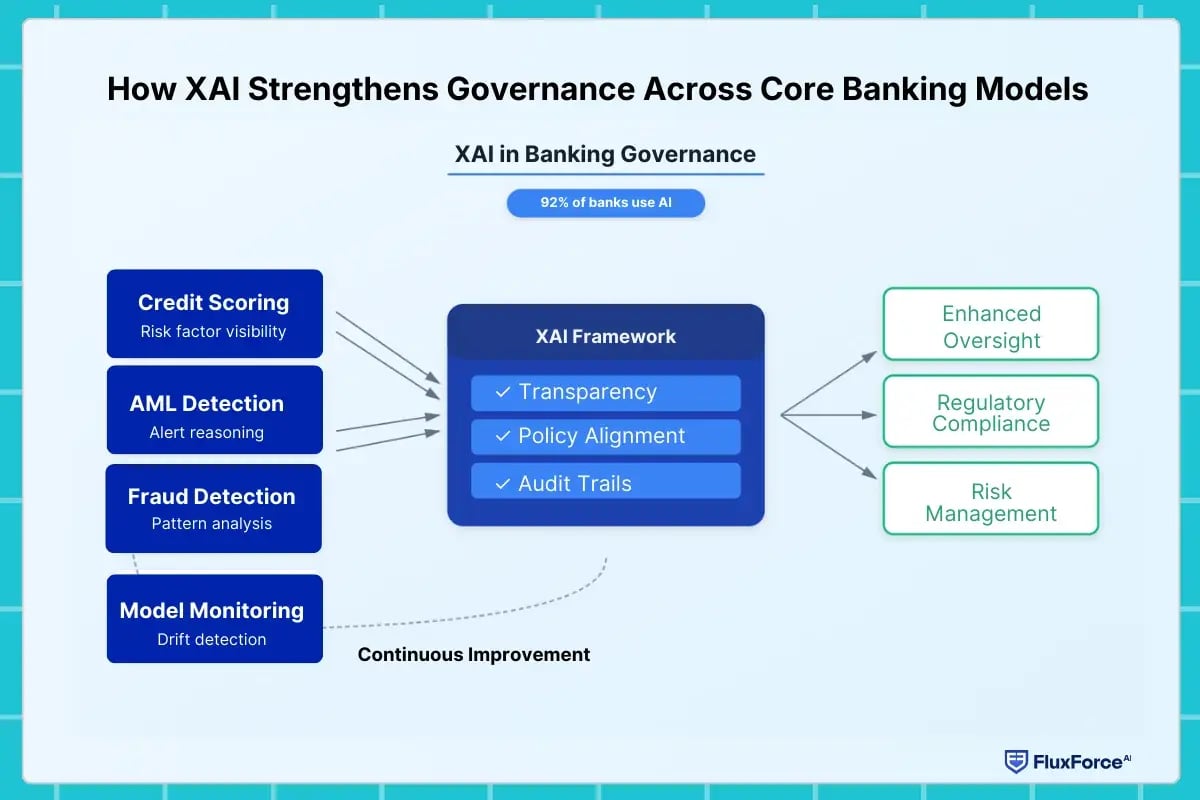

How XAI Strengthens Governance Across Core Banking Models

Nearly 92% of global banks have one or more active AI deployments as of 2025, and most still operate with limited visibility. While explainable AI in banking enhances oversight across all model types, certain functions experience a stronger governance impact:

1. Explainable Credit Scoring Algorithms

Across credit scoring systems, XAI exposes the exact risk factors that shape a borrower’s score. It allows reviewers to confirm that the model’s logic aligns with lending policy and regulatory expectations. This transparency improves assessment quality and supports consistent credit evaluation across customer groups.

2. AML Model Explainability

AML investigations often slow down because analysts cannot immediately see why a model flagged a transaction. XAI removes this friction by showing the risk signals behind each alert. With clear reasoning, teams can focus on meaningful cases, reduce unnecessary escalations, and maintain a clean audit record during regulatory reviews.

3. Fraud Detection Explainability

Fraud models react to fast-changing patterns, which makes governance difficult without visibility. XAI helps reviewers see whether a flagged transaction was unusual enough to justify the alert. It also helps distinguish between emerging fraud behaviour and random anomalies.

4. Explainability for Model Monitoring

Model monitoring requires continuous oversight of how decision patterns evolve. XAI helps governance teams identify changes in model behaviour by revealing shifts in reasoning, emerging data dependencies, and variations in feature influence. This level of visibility allows early detection of drift and ensures the model remains aligned with policies.
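As a rough illustration of tracking "variations in feature influence", the sketch below compares each feature's share of total attribution between a baseline window and a current window. The feature names, attribution values, and the 0.10 tolerance are assumptions for the example, not values taken from any specific monitoring product.

```python
def influence_shares(rows):
    """Each feature's share of total mean absolute attribution."""
    features = rows[0].keys()
    mean_abs = {f: sum(abs(r[f]) for r in rows) / len(rows) for f in features}
    total = sum(mean_abs.values()) or 1.0  # avoid dividing by zero
    return {f: v / total for f, v in mean_abs.items()}


def influence_shift(baseline_rows, current_rows, tolerance=0.10):
    """Features whose influence share moved by more than `tolerance` (absolute)."""
    base = influence_shares(baseline_rows)
    curr = influence_shares(current_rows)
    shifted = {}
    for f in base:
        change = curr.get(f, 0.0) - base[f]
        if abs(change) > tolerance:
            shifted[f] = change
    return shifted


# Hypothetical per-transaction attributions from a fraud model.
baseline_rows = [
    {"amount": 0.6, "merchant_risk": 0.3, "hour": 0.1},
    {"amount": -0.4, "merchant_risk": 0.5, "hour": -0.1},
]
current_rows = [
    {"amount": 0.2, "merchant_risk": 0.4, "hour": 0.4},
    {"amount": -0.2, "merchant_risk": 0.4, "hour": -0.4},
]
shifted = influence_shift(baseline_rows, current_rows)
print(shifted)
```

In this made-up data the model has started leaning on transaction hour instead of amount, which is the kind of change a governance team would want to investigate before it shows up in outcomes.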

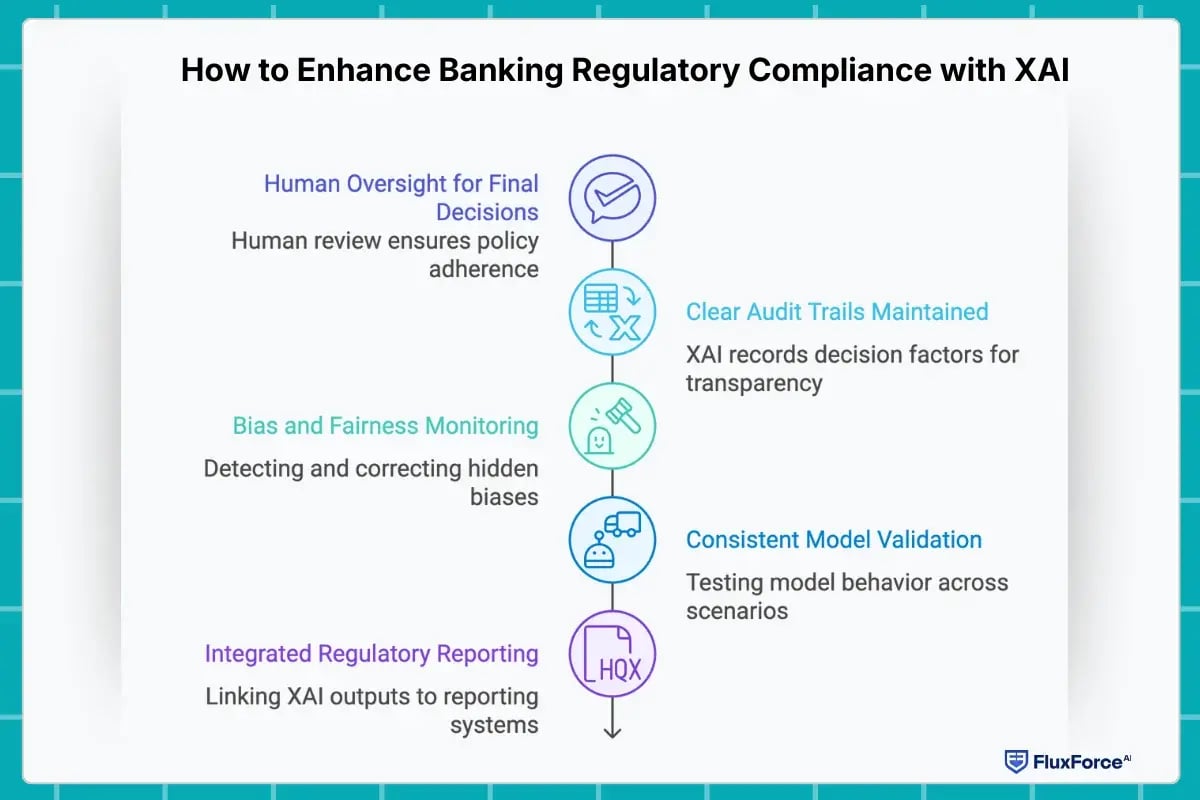

How to Enhance Banking Regulatory Compliance with XAI

With regulators increasingly pressing banks over AI decisions, banks must provide clear, traceable explanations for model outcomes. XAI goes a long way toward meeting compliance requirements, but banks also need deliberate practices to keep governance consistent, auditable, and fair.

Banks must ensure:

1. Human Oversight for Final Decisions: Even with XAI, human review is essential. Teams must check model outputs, intervene in risky cases, and confirm that each decision follows bank policies and regulatory requirements.

2. Clear Audit Trails: XAI should record all decision factors clearly so that auditors can trace why credit, fraud, or AML outcomes occurred. This supports transparency, accountability, and smooth regulatory reviews.

3. Bias and Fairness Monitoring: Explainable AI should surface hidden bias in models so that teams can correct unfair patterns, ensure consistent treatment across customers, and reduce regulatory and reputational risks.

4. Consistent Model Validation: Validation teams should use XAI outputs to test scenarios and confirm that models behave consistently across workflows. This supports reliable, repeatable decisions and adherence to governance standards.

5. Integrated Regulatory Reporting: XAI outputs should link directly to reporting systems. This allows banks to provide regulators with clear, traceable, and justified explanations for AI decisions.
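For the audit-trail point in particular, a decision record can be as simple as a structured entry that stores the outcome together with its main drivers and a fingerprint for tamper detection. The sketch below is one possible shape, not a prescribed schema: the class name, field names, and sample values are all hypothetical.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field, replace
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class DecisionRecord:
    """Hypothetical audit entry for one model decision."""
    decision_id: str
    model_version: str
    outcome: str
    top_factors: dict  # feature name -> contribution to the decision
    reviewed_by: Optional[str] = None  # human reviewer, if any
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        # Sorted keys keep the serialization stable for hashing.
        return json.dumps(asdict(self), sort_keys=True)

    def fingerprint(self) -> str:
        """SHA-256 of the serialized record, stored alongside it to detect edits."""
        return hashlib.sha256(self.to_json().encode("utf-8")).hexdigest()


record = DecisionRecord(
    decision_id="D-0001",
    model_version="credit-v1",
    outcome="declined",
    top_factors={"late_payments": -1.2, "debt_ratio": -1.0},
    created_at="2025-01-01T00:00:00+00:00",
)
original_fingerprint = record.fingerprint()

# Any later change to the record produces a different fingerprint.
altered = replace(record, outcome="approved")
print(original_fingerprint != altered.fingerprint())
```

Storing the model version and reviewer next to the explanation is a design choice that lets an auditor answer "which model, which reasons, which human" from a single entry.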

What Changes When XAI Becomes Part of Banking Governance

When explainability becomes part of the governance ecosystem, banks gain benefits that strengthen internal confidence and help win more customers.

- Increased trust in automated decision systems

- Reduced regulatory tension during audits and reviews

- More predictable outcomes across customer journeys

- Lower operational risks in fraud, AML, and credit

- Faster investigation resolution due to transparent reasoning

- Improved fairness and less biased decisioning

- Better long-term model health through clearer monitoring

XAI transforms governance from reactive to proactive. Instead of waiting for issues to surface, governance teams catch them at the reasoning level, before they escalate.


Conclusion

Explainable AI gives banks what black-box models cannot: traceability, accountability, and confidence. From development and validation to monitoring and audits, it strengthens every part of the model governance lifecycle. With regulators expecting full transparency and customers demanding fairness, XAI is no longer optional. It is the structural layer that enables responsible, transparent, and compliant AI in modern banking.
