Introduction

AI is already running inside your business. It helps decide who gets approved, flagged, shortlisted, or stopped. But here is the problem most enterprises are facing in 2026.

If a regulator asked you today how one of your AI systems made a decision, could your team explain it clearly, quickly, and with proof?

For many enterprises, the honest answer is no. That is why AI regulatory compliance has moved from a future concern to a present risk in 2026. AI compliance used to live with legal teams. Now it lives inside operations.

New AI laws directly affect how enterprises build, train, deploy, and monitor AI models. Teams now have to show:

- Why an AI system exists

- What data it uses

- How decisions can be reviewed

- Who is responsible when something goes wrong

When this is missing, AI systems slow down business instead of helping it.

Most Enterprises Lack Basic Control Over Their AI Systems

A common issue across large organizations is simple. No one has a full view of all AI systems running across teams.

This makes AI governance and regulation hard to follow. Without clear ownership and tracking, even low-risk AI can become a compliance issue. Risk leaders are now pushed to create structure where speed once ruled.

The Cost of Getting AI Compliance Wrong Is Rising Fast

The cost of non-compliance with AI regulations is no longer just fines. It includes delayed launches, forced shutdowns, emergency audits, and reputational damage.

Enterprises are learning this the hard way. Fixing AI compliance after systems are live is expensive and disruptive. This is why AI risk management is becoming part of everyday business decisions.

How to Prepare for 2026 AI Regulations Without Slowing the Business

Many enterprises assume AI compliance starts when a regulator knocks. In 2026, that approach fails. Regulators increasingly expect enterprises to prove control before incidents happen, so preparation has to begin inside product, data, and risk teams, not after deployment.

The goal is simple. Stay compliant without killing speed.

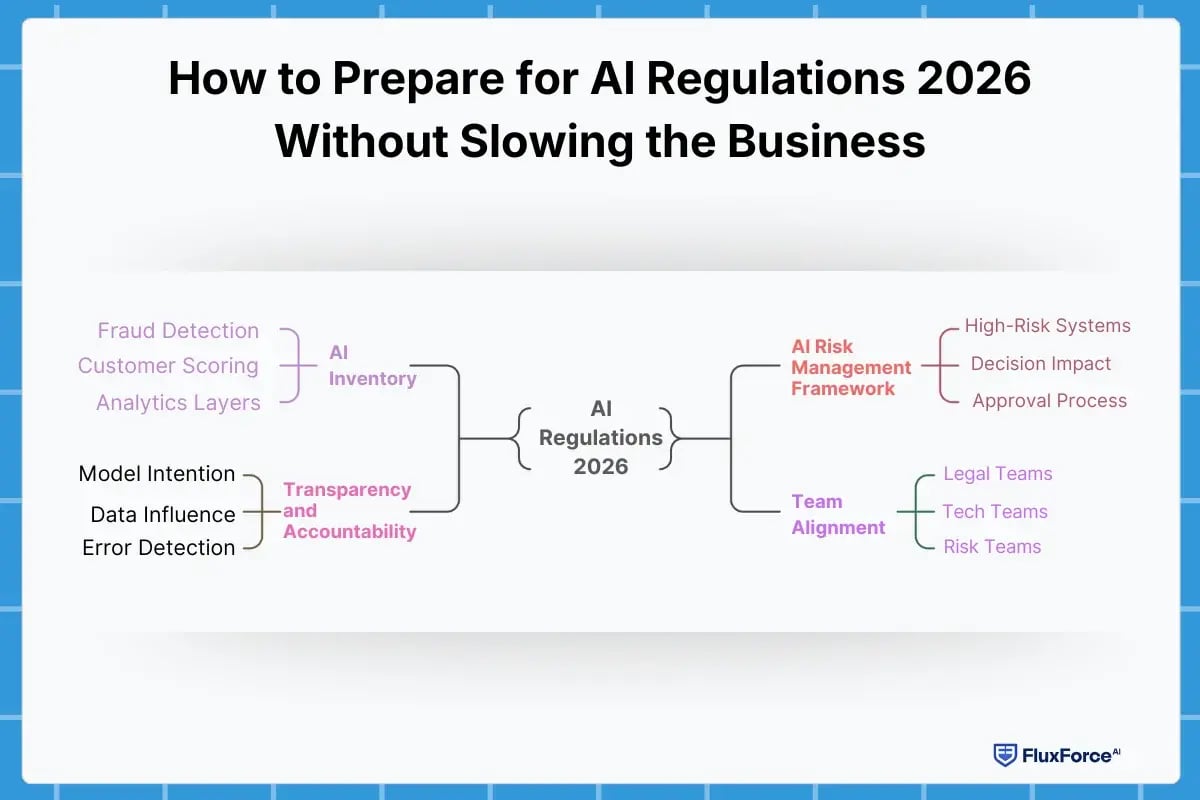

Start With an AI Inventory, Not a Policy

Before drafting an enterprise AI policy, organizations must answer one basic question.

Where is AI actually being used today?

Most organizations underestimate this. AI sits in fraud detection, customer scoring, monitoring tools, analytics layers, and third-party platforms. Without a clear inventory, AI regulatory compliance becomes guesswork.

This inventory becomes the base for everything that follows.
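Here is a minimal sketch of how an inventory can be kept honest. It assumes the team can export a list of the AI deployments it actually finds, for example from service registries or vendor contracts. The `AISystem` record and the `reconcile` function are hypothetical names. The point is the comparison, which surfaces both unregistered systems and stale entries.

```python
from dataclasses import dataclass

# Hypothetical inventory record; the fields are illustrative, not a regulatory schema.
@dataclass(frozen=True)
class AISystem:
    system_id: str
    purpose: str
    owner: str
    source: str  # "in-house" or "third-party"

def reconcile(inventory: list[AISystem], discovered_ids: set[str]) -> dict[str, list[str]]:
    """Compare the declared inventory with AI systems actually found running."""
    declared_ids = {s.system_id for s in inventory}
    return {
        # Running somewhere but never registered ("shadow AI").
        "unregistered": sorted(discovered_ids - declared_ids),
        # Registered but no longer found; retire or re-verify the entry.
        "stale": sorted(declared_ids - discovered_ids),
    }

inventory = [
    AISystem("fraud-scoring", "Flag suspicious transactions", "risk-ops", "in-house"),
    AISystem("legacy-churn", "Predict customer churn", "marketing", "in-house"),
]
# Assumed to come from service registries, cloud bills, or vendor contracts.
discovered = {"fraud-scoring", "vendor-kyc-screening"}
print(reconcile(inventory, discovered))
# {'unregistered': ['vendor-kyc-screening'], 'stale': ['legacy-churn']}
```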

Build an AI Risk Management Framework That Fits the Business

A generic framework does not work. Enterprises need an AI risk management framework that matches how decisions are made internally.

This includes:

- Identifying which systems count as high-risk under the EU AI Act

- Mapping decision impact on customers, employees, or markets

- Defining who approves, monitors, and overrides AI outcomes

This is where compliance and business strategy meet. Done right, it reduces friction instead of adding layers.

Embed Transparency and Accountability Early

Regulators now expect AI transparency and accountability by design.

This does not mean exposing algorithms. It means being able to explain:

- What the model is intended to do

- What data influences outcomes

- How errors or bias are detected

Enterprises that embed explainability early avoid painful rewrites later. This is becoming a core expectation under AI compliance standards globally.
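One way to explain what data influences an outcome is sketched below for a hypothetical logistic scoring model. In a linear model, each feature's weight times its value is its exact contribution to the log-odds. That means the drivers of a single decision can be ranked without exposing the full model. The weights and feature names are made up. For non-linear models, attribution methods such as SHAP play a similar role.

```python
import math

# Hypothetical logistic scoring model; feature names and weights are illustrative.
WEIGHTS = {"missed_payments": 0.8, "utilization": 1.5, "account_age_years": -0.3}
INTERCEPT = -2.0

def explain(features: dict[str, float]) -> tuple[float, list[tuple[str, float]]]:
    """Return the score plus each feature's contribution to the log-odds, largest first."""
    # In a linear model, weight * value is that feature's exact share of the logit.
    contributions = {name: w * features[name] for name, w in WEIGHTS.items()}
    logit = INTERCEPT + sum(contributions.values())
    score = 1 / (1 + math.exp(-logit))
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked

score, ranked = explain({"missed_payments": 2, "utilization": 0.9, "account_age_years": 5})
print(f"score={score:.2f}")
for name, c in ranked:
    print(f"  {name}: {c:+.2f}")
# score=0.37
#   missed_payments: +1.60
#   account_age_years: -1.50
#   utilization: +1.35
```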

Align Teams Before Regulations Force You To

One of the biggest obstacles to an effective AI compliance strategy is internal misalignment.

Legal teams think in laws. Tech teams think in performance. Risk teams think in exposure. In 2026, these teams must operate together.

Enterprises that align early move faster when new AI legal requirements arrive. Those that do not end up reacting under pressure.

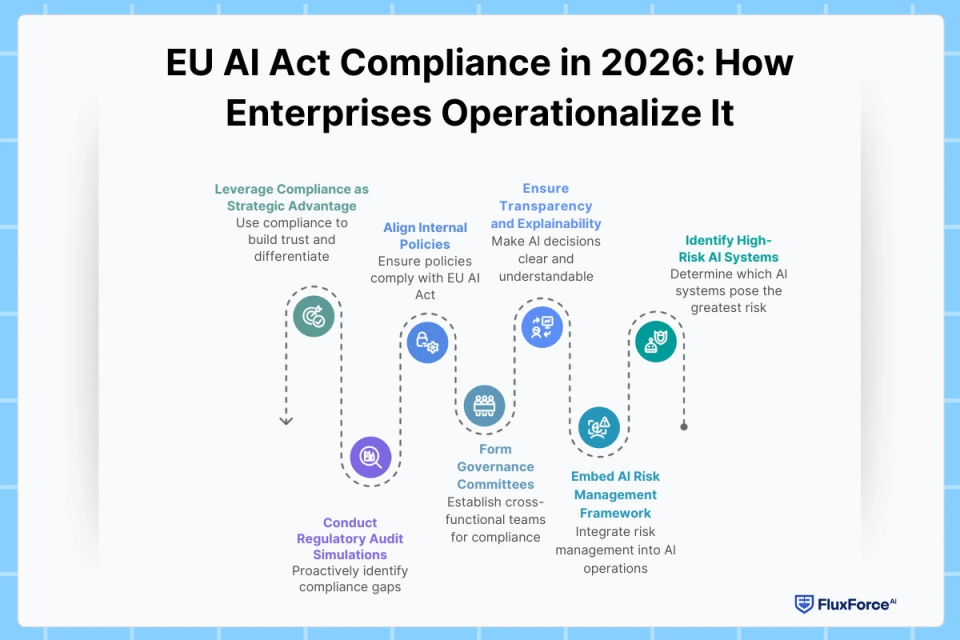

EU AI Act Compliance in 2026: How Enterprises Operationalize It

In 2026, enterprises cannot afford compliance gaps. AI decisions now affect credit, fraud detection, trading, inventory, logistics, and operational reliability. Here’s how to approach it strategically.

Identifying High-Risk AI Systems

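Before you can manage compliance, you need to know which systems pose the highest risk. Under the EU AI Act, high-risk status depends on the use case (for example, assessing an individual’s creditworthiness or screening job applicants), so each system has to be checked against the Act’s list. In practice, the systems that deserve the closest review fall into three groups, because they affect both operational integrity and regulatory exposure: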

- Decision-making AI: Systems affecting financial risk, approvals, or operational outcomes must meet transparency, explainability, and fairness standards.

- Anomaly detection AI: Systems detecting fraud, operational anomalies, or irregular patterns need continuous monitoring and human oversight.

- Predictive and optimization AI: Systems forecasting demand, inventory, or operational performance require documented risk assessments and audit trails.
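The three categories above translate naturally into a control checklist. The sketch assumes a system can fall into more than one category and that each control has a simple label. Both the labels and the `control_gaps` helper are illustrative.

```python
# Controls per category, paraphrased from the three groups above; labels are illustrative.
REQUIRED_CONTROLS = {
    "decision_making": {"transparency", "explainability", "fairness_testing"},
    "anomaly_detection": {"continuous_monitoring", "human_oversight"},
    "predictive_optimization": {"risk_assessment", "audit_trail"},
}

def control_gaps(categories: set[str], implemented: set[str]) -> list[str]:
    """List controls required by any of the system's categories that are not yet in place."""
    required = set().union(*(REQUIRED_CONTROLS[c] for c in categories))
    return sorted(required - implemented)

# A fraud model that both flags anomalies and blocks transactions spans two categories.
gaps = control_gaps(
    {"decision_making", "anomaly_detection"},
    implemented={"transparency", "explainability", "continuous_monitoring"},
)
print(gaps)  # ['fairness_testing', 'human_oversight']
```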

Embedding AI Risk Management Framework

Compliance isn’t a one-time checklist; it’s a continuous process. Embedding an AI risk management framework (RMF) keeps AI operations compliant through development, deployment, and monitoring.

- Risk detection and mitigation: Regular bias testing, fairness assessments, and continuous model validation.

- Documentation and audit readiness: Maintain detailed records of model decisions, data sources, and system updates.

- Operational integration: Embed checkpoints into development pipelines to make compliance continuous, not reactive.
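Here is a minimal sketch of such a checkpoint: a function a CI job could run before deployment, failing the build when a fairness check or required documentation is missing. It uses the disparate impact ratio, which is the lowest group approval rate divided by the highest. The 0.8 threshold is borrowed from the US "four-fifths" rule of thumb. That threshold, the field names, and the `compliance_gate` helper are assumptions rather than EU AI Act requirements.

```python
# Hypothetical pre-deployment gate; thresholds and field names are assumptions.
REQUIRED_DOC_FIELDS = ("intended_purpose", "data_sources", "last_validated")

def disparate_impact_ratio(outcomes_by_group: dict[str, list[int]]) -> float:
    """Lowest group selection rate divided by the highest (1.0 means parity).

    Assumes every group has at least one outcome (1 = approved, 0 = declined).
    """
    rates = [sum(o) / len(o) for o in outcomes_by_group.values()]
    return 1.0 if max(rates) == 0 else min(rates) / max(rates)

def compliance_gate(outcomes_by_group: dict[str, list[int]], model_doc: dict,
                    threshold: float = 0.8) -> list[str]:
    """Return a list of failures; an empty list means the build may proceed."""
    failures = []
    ratio = disparate_impact_ratio(outcomes_by_group)
    if ratio < threshold:
        failures.append(f"disparate impact ratio {ratio:.2f} is below {threshold}")
    for field_name in REQUIRED_DOC_FIELDS:
        if not model_doc.get(field_name):
            failures.append(f"missing documentation: {field_name}")
    return failures

failures = compliance_gate(
    {"group_a": [1, 1, 1, 0], "group_b": [1, 0, 0, 0]},
    {"intended_purpose": "Credit limit increases", "data_sources": ["bureau"]},
)
for f in failures:
    print(f)
# disparate impact ratio 0.33 is below 0.8
# missing documentation: last_validated
```

In a pipeline, a small wrapper would exit with a non-zero status whenever the returned list is non-empty. That is what makes the check continuous rather than reactive.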

Transparency and Explainability

The EU AI Act expects high-risk systems to be transparent enough for deployers to interpret their output and use it appropriately. In practice, teams should be able to:

- Document each model’s intended purpose, its inputs, and its known limitations.

- Record which data and factors drove an individual decision, so the decision can be explained during an audit.

- Give reviewers a clear way to question and override outcomes they believe are wrong.

Governance Committees and Cross-Functional Collaboration

No single team can manage AI compliance alone. Effective governance requires coordination between risk, legal, compliance, data science, and operational teams.

- Form cross-team governance groups including risk, legal, compliance, data science, and operations.

- Align internal policies with EU AI Act compliance.

- Conduct regular simulations of regulatory audits to proactively identify gaps.
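A mock audit can start very simply: ask every system in the inventory the four questions from the introduction. This sketch assumes inventory entries are dictionaries with hypothetical `purpose`, `data_sources`, `review_process`, and `owner` fields.

```python
# The four questions from the introduction, mapped to hypothetical inventory fields.
AUDIT_QUESTIONS = {
    "Why does this system exist?": "purpose",
    "What data does it use?": "data_sources",
    "How can its decisions be reviewed?": "review_process",
    "Who is responsible when something goes wrong?": "owner",
}

def mock_audit(systems: list[dict]) -> dict[str, list[str]]:
    """Return, per system, the audit questions its records cannot answer."""
    findings = {}
    for system in systems:
        unanswered = [q for q, key in AUDIT_QUESTIONS.items() if not system.get(key)]
        if unanswered:
            findings[system["id"]] = unanswered
    return findings

systems = [
    {"id": "fraud-scoring", "purpose": "Flag suspicious transactions",
     "data_sources": ["card transactions"], "review_process": "analyst queue",
     "owner": "risk-ops"},
    {"id": "churn-model", "purpose": "Predict customer churn", "owner": "marketing"},
]
for system_id, gaps in mock_audit(systems).items():
    print(system_id, "->", gaps)
# churn-model -> ['What data does it use?', 'How can its decisions be reviewed?']
```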

Leveraging Compliance as a Strategic Advantage

Enterprises that integrate AI regulatory compliance into operations can gain trust, reduce risk, and differentiate themselves in the market.

- Demonstrate compliance with 2026 AI regulations to build stakeholder trust.

- Minimize the cost of non-compliance through proactive governance.

- Position transparency and accountability as operational and reputational assets.

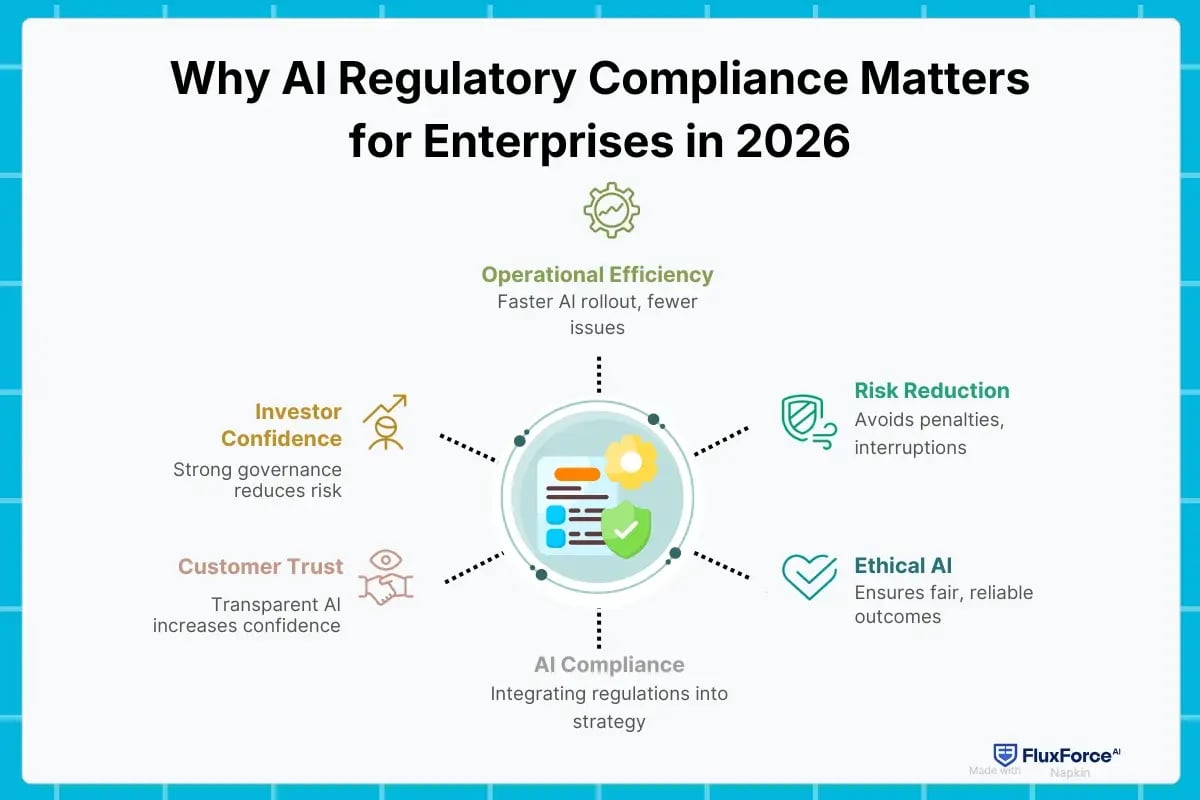

Why AI Regulatory Compliance Matters for Enterprises in 2026

In 2026, enterprises face a clear reality: AI is critical to operations, and compliance is mandatory. Success depends on how well organizations plan, adapt, and embed AI regulations into their strategy.

Compliance as a Competitive Edge

Following AI regulations isn’t just about avoiding fines. Done well, it strengthens your enterprise.

- Build trust with customers: Transparent and explainable AI increases confidence in your business decisions.

- Gain investor confidence: Showing that compliance is managed well signals strong governance and reduces risk.

- Boost operational efficiency: Enterprises with built-in compliance can roll out AI faster, while others waste time fixing issues later.

Reducing Financial and Operational Risks

Non-compliance has real consequences:

- High-risk AI systems under the EU AI Act carry strict obligations, and breaching them without proper governance exposes enterprises to significant penalties.

- Audits or regulatory checks can pause AI-driven operations, like fraud detection or supply chain management, causing revenue and efficiency losses.

- Regional laws differ (compare the Colorado AI Act with India’s evolving AI rules), so enterprises operating in several jurisdictions need careful planning to avoid fines or legal trouble.

Driving Ethical AI and Accountability

Regulations push enterprises to go beyond basic compliance:

- Embedding AI transparency and accountability ensures fair and reliable AI outcomes.

- Human oversight protects enterprises from errors in AI systems that could affect critical decisions.

- Documented ethical practices safeguard your brand reputation if regulators or the public question AI decisions.
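Human oversight and documented decisions can be combined in one mechanism. The sketch below uses hypothetical names throughout. It routes scores close to the decision threshold to a human reviewer. It also appends every decision to a hash-chained log, where each entry stores the SHA-256 hash of the previous one, so any later edit breaks verification.

```python
import hashlib
import json
import time

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _digest(body: dict) -> str:
    # sort_keys gives a stable serialization, so the same body always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

class DecisionLog:
    """Hypothetical append-only log; each entry stores the hash of the previous entry."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, record: dict) -> None:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"record": record, "prev_hash": prev_hash, "ts": time.time()}
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: entry[k] for k in ("record", "prev_hash", "ts")}
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True

def decide(log: DecisionLog, case_id: str, score: float,
           threshold: float = 0.7, review_band: float = 0.1) -> str:
    """Auto-decide clear cases; send scores near the threshold to a human reviewer."""
    if abs(score - threshold) < review_band:
        outcome = "human_review"
    elif score >= threshold:
        outcome = "flag"
    else:
        outcome = "clear"
    log.append({"case": case_id, "score": score, "outcome": outcome})
    return outcome

log = DecisionLog()
for case_id, score in [("c1", 0.95), ("c2", 0.65), ("c3", 0.20)]:
    print(case_id, decide(log, case_id, score))
# c1 flag
# c2 human_review
# c3 clear
print(log.verify())  # True
log.entries[1]["record"]["outcome"] = "clear"  # simulate tampering
print(log.verify())  # False
```

A hash chain is only tamper-evident if the latest hash is also stored somewhere the log writer cannot change, such as a separate write-once store.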

Staying Ahead of Global Standards

AI compliance is increasingly global. Enterprises need to prepare for new rules before they arrive:

- Tracking global AI governance frameworks keeps your systems aligned with upcoming laws.

- Using AI compliance standards and best practices now reduces the cost of future updates.

- A proactive approach lets enterprises turn compliance into a business advantage instead of reacting to problems.

Understanding the Cost of Non-Compliance

Knowing the risks makes compliance a priority:

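- Fines vary by region. Under the EU AI Act, they can reach €35 million or 7% of worldwide annual turnover (whichever is higher) for prohibited practices, and €15 million or 3% for breaches of most other obligations.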

- Operational interruptions, reputational damage, and loss of trust often cost more than the fines themselves.

- Following an AI risk management framework helps prevent these hidden losses.
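Under the EU AI Act, the maximum fine is either a fixed amount or a share of worldwide annual turnover, so exposure grows with company size. The sketch below computes that statutory ceiling from Article 99 for a given turnover. It is simplified: it returns only the maximum, while actual fines are set case by case. The violation labels are illustrative groupings.

```python
# Maximum fines under Article 99 of the EU AI Act (Regulation (EU) 2024/1689):
# (fixed cap in EUR, share of total worldwide annual turnover of the preceding year).
CAPS = {
    "prohibited_practice": (35_000_000, 0.07),
    "other_obligation": (15_000_000, 0.03),       # covers most high-risk obligations
    "misleading_information": (7_500_000, 0.01),  # incorrect info supplied to authorities
}

def max_fine_eur(violation: str, worldwide_turnover_eur: float, is_sme: bool = False) -> float:
    """Upper bound of the fine; actual fines are set case by case below this cap."""
    fixed_cap, share = CAPS[violation]
    turnover_cap = share * worldwide_turnover_eur
    # Undertakings: whichever is higher. SMEs and start-ups: whichever is lower.
    return min(fixed_cap, turnover_cap) if is_sme else max(fixed_cap, turnover_cap)

for violation in CAPS:
    print(f"{violation}: up to EUR {max_fine_eur(violation, 2_000_000_000):,.0f}")
# prohibited_practice: up to EUR 140,000,000
# other_obligation: up to EUR 60,000,000
# misleading_information: up to EUR 20,000,000
```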

Conclusion

As enterprises step into 2026, AI is both an opportunity and a responsibility. Regulatory frameworks such as the EU AI Act and evolving state and global laws are no longer optional checkboxes. They are essential rules that shape how AI can be safely and effectively used. For businesses, success depends on aligning AI innovation with compliance from the start. Organizations that embed AI governance, risk management, and monitoring into their workflows will not only avoid penalties but also gain trust, transparency, and a strategic edge.

The path forward is clear. Enterprises must understand their regulatory obligations, integrate them into AI operations, and continuously assess risks. Compliance is not just a legal requirement. It is a way to ensure AI drives growth safely, responsibly, and sustainably.

Organizations that take these steps now will be ready to innovate confidently in 2026 while keeping regulators, customers, and partners reassured.
