
Introduction

What if an AI system could prove compliance without ever exposing your data? That question is becoming central as companies realize that privacy is about business credibility as well as protection. In today’s data-driven world, privacy has become the currency of trust, particularly in industries where a single data leak can cause regulatory and financial damage.

A Deloitte Insights report on technology investment ROI found that about 60% of organizations identify data privacy and security as major obstacles to AI automation, with the number climbing to 65% in financial services. At the same time, PwC’s Global Digital Trust Insights 2024 survey notes that 79% of businesses expect their cybersecurity budgets to increase, up from 65% the year before.

Agentic AI systems, built to make decisions and learn on their own, rely on massive amounts of sensitive information. They process customer transactions, credit records, and internal business data to automate actions at scale. Yet giving these systems direct access to raw data raises security and compliance questions. The real challenge for leaders is easy to state: how can they build secure autonomous AI that makes intelligent decisions without exposing sensitive data?

This is where privacy-preserving AI systems and zero-knowledge cryptography for AI come in. These technologies let an AI system perform calculations, validate results, and confirm compliance without revealing the underlying data to the parties checking its work.

For financial institutions, this shift is critical. Regulators increasingly expect companies to show not only that their AI is secure but also that its compliance can be verified at any moment. Zero-knowledge proofs in AI make this possible by turning compliance from a slow audit process into a built-in, automated check.

Privacy has moved from being a cost to being a competitive advantage. Companies adopting trustless AI environments are not only protecting themselves but also building systems that clients, partners, and regulators can actually trust.

As we move ahead in this blog, we’ll discuss how even the most advanced Agentic AI systems can become privacy liabilities when left unchecked and why zero-knowledge proofs may be the missing piece in building secure autonomous AI. We’ll also highlight how industries like finance are already testing these models for lower-risk automation.


Zero-Knowledge proofs are crucial for security and privacy in agentic AI systems

When autonomous AI operates without oversight

As AI systems evolve from tools into autonomous decision-makers, they bring a new kind of risk that most security frameworks cannot handle.

The rise of privacy blind spots

Agentic AI works on its own. It learns from data, adjusts decisions, and improves over time. This independence makes it powerful, but it also creates privacy blind spots. These are moments when the system processes or transfers information without clear human approval. No one can easily tell who accessed the data, when it was used, or why it was needed.

Inside a controlled setup, such as an internal analytics workflow, this might seem harmless. But when permissionless AI data exchange begins across teams, partners, or networks, control weakens. The AI creates new patterns and insights. Sometimes, those patterns reveal more than anyone planned to share.

Here’s the problem. AI doesn’t understand privacy by default. It’s designed to optimize performance, not protect information. During training or fine-tuning, it often needs full access to raw datasets, including sensitive financial or personal records. Even if that data is anonymized, traces can still expose identities or confidential links between systems.
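To make the anonymization point concrete, here is a toy linkage attack in Python. The records, field names, and the `link` helper are invented for illustration; the sketch assumes an attacker holds a second dataset that still contains names. Removing names does not help when a combination of quasi-identifiers (ZIP code, birth year, gender) is unique in both datasets.

```python
# Toy linkage attack: records with names removed are re-identified by joining
# them to a public dataset on quasi-identifiers. All data is invented.
QUASI_IDENTIFIERS = ("zip", "birth_year", "gender")

anonymized_loans = [
    {"zip": "10001", "birth_year": 1985, "gender": "F", "loan_default": True},
    {"zip": "10001", "birth_year": 1990, "gender": "M", "loan_default": False},
    {"zip": "94105", "birth_year": 1985, "gender": "F", "loan_default": False},
]

public_directory = [
    {"name": "Alice", "zip": "10001", "birth_year": 1985, "gender": "F"},
    {"name": "Bob", "zip": "10001", "birth_year": 1990, "gender": "M"},
    {"name": "Carol", "zip": "94105", "birth_year": 1985, "gender": "F"},
]


def quasi_key(record):
    """Project a record onto its quasi-identifier columns."""
    return tuple(record[col] for col in QUASI_IDENTIFIERS)


def link(anonymized, directory):
    """Map each name to the anonymized record it uniquely matches."""
    index = {}
    for person in directory:
        index.setdefault(quasi_key(person), []).append(person["name"])
    matches = {}
    for record in anonymized:
        names = index.get(quasi_key(record), [])
        if len(names) == 1:  # a unique match re-identifies the record
            matches[names[0]] = record
    return matches


if __name__ == "__main__":
    for name, record in link(anonymized_loans, public_directory).items():
        print(f"{name}: loan_default={record['loan_default']}")
```

Each unique match re-attaches a sensitive attribute (here, a loan default) to a named person, which is why stripping names alone is not treated as sufficient anonymization.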

This makes privacy-preserving AI systems essential. Without built-in verification, companies rely on audits that happen after the fact. By then, the exposure has already occurred.

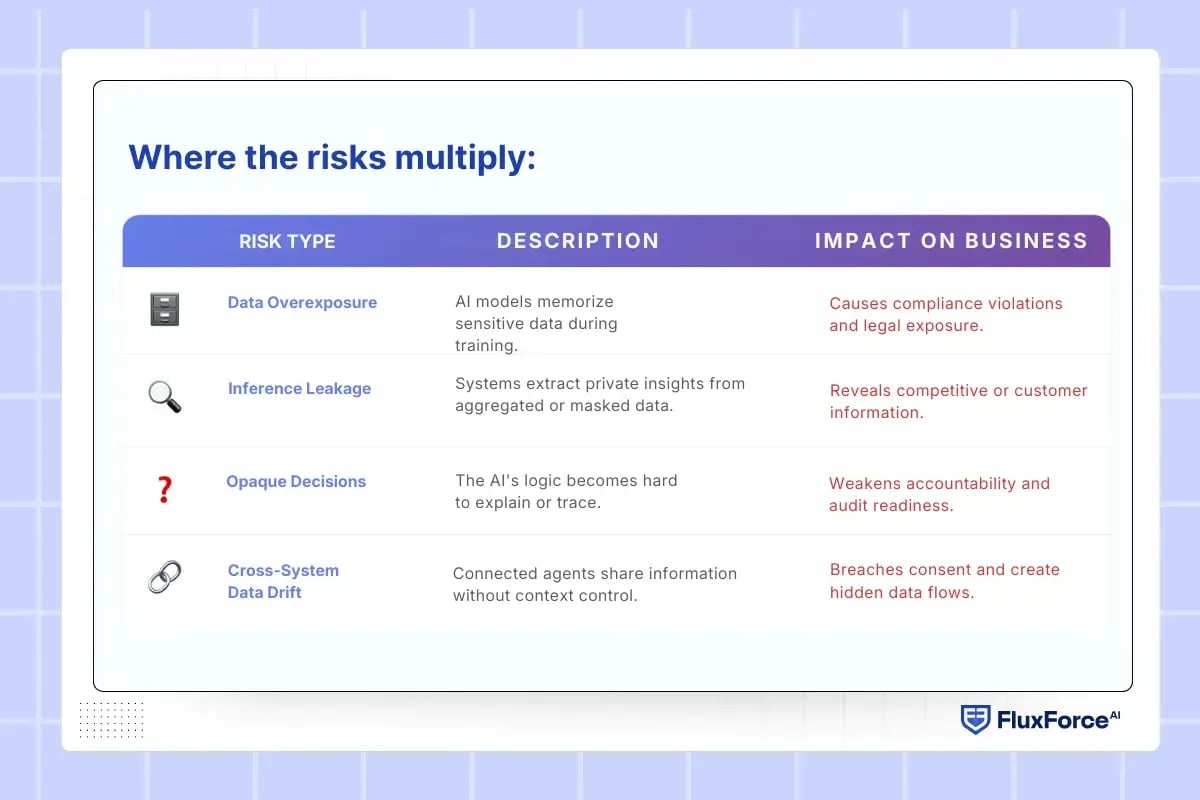

Where the risks multiply

In finance, these risks can grow quickly. Fraud detection, credit scoring, and compliance models often use shared data streams. Each data exchange becomes a new point of vulnerability. Without zero-trust AI security models or cryptographic controls, privacy management starts to fall apart as systems expand.

Most of these issues don’t come from bad intent. The AI is simply doing what it was built to do — learn, optimize, and adapt. It’s the governance structure that fails to keep up.

For leaders, this creates a clear challenge. The smarter your AI becomes, the harder it is to see what it’s doing behind the scenes. To maintain trust, organizations need a way to verify AI behavior without exposing the data itself.

The next section explores how zero-knowledge proofs in AI solve this problem. They bring verifiable privacy to autonomous systems and help businesses build secure autonomous AI that operates with transparency and confidence.

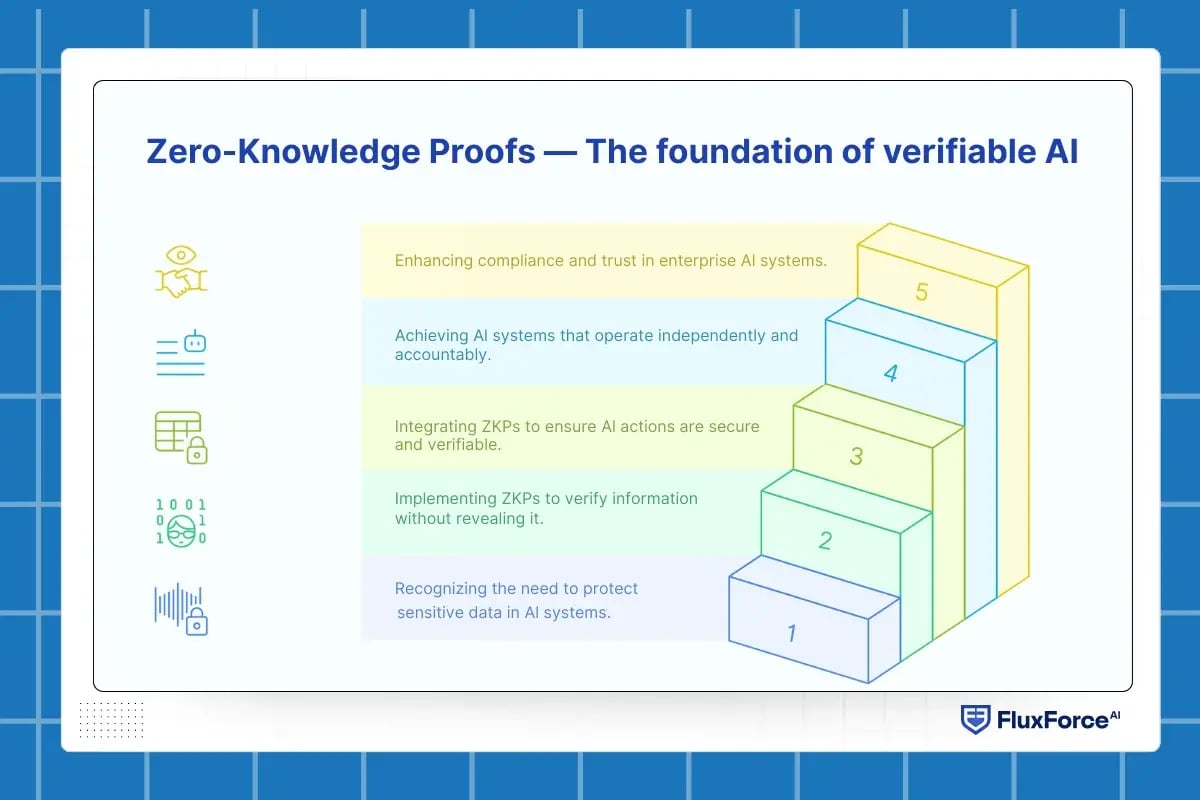

Zero-Knowledge Proofs — The foundation of verifiable AI

When data moves freely across systems, privacy becomes fragile. Zero-knowledge proofs (ZKPs) address this challenge: they let one party (the prover) convince another (the verifier) that a statement is true without revealing anything beyond the fact that it is true. In simple terms, a system can verify a fact without seeing the data behind it.
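The classic small example of this idea is the Schnorr identification protocol: a prover shows that it knows a secret `x` behind a public value `y = G^x mod P` without revealing `x`. The Python sketch below assumes toy group parameters (`P = 2039`, `Q = 1019`, `G = 4`) chosen only so the numbers stay readable; they offer no real security.

```python
import secrets

# Toy Schnorr group: P = 2Q + 1 is a safe prime and G = 4 generates the
# subgroup of order Q. Far too small to be secure; real systems use groups
# of roughly 256-bit order (typically elliptic curves).
P, Q, G = 2039, 1019, 4


def keygen():
    """Prover's secret x and the public value y = G^x mod P."""
    x = secrets.randbelow(Q - 1) + 1
    return x, pow(G, x, P)


def commit():
    """Step 1: the prover picks a random nonce r and sends t = G^r."""
    r = secrets.randbelow(Q)
    return r, pow(G, r, P)


def challenge():
    """Step 2: the verifier replies with a random challenge c."""
    return secrets.randbelow(Q)


def respond(x, r, c):
    """Step 3: the prover sends s = r + c*x mod Q; s is uniform because r is."""
    return (r + c * x) % Q


def verify(y, t, c, s):
    """The verifier accepts iff G^s == t * y^c (mod P)."""
    return pow(G, s, P) == t * pow(y, c, P) % P


if __name__ == "__main__":
    x, y = keygen()
    r, t = commit()
    c = challenge()
    print("proof accepted:", verify(y, t, c, respond(x, r, c)))
```

The verifier ends up convinced that the prover knows `x`, yet an honest verifier's transcript `(t, c, s)` could have been simulated without `x`, which is what makes the protocol zero-knowledge.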

This principle forms the backbone of autonomous verifiable AI. Instead of relying on human oversight or periodic audits, zero-knowledge proofs add an AI confidentiality layer that ensures every action or prediction can be verified securely. The result is AI that operates independently yet remains fully accountable.

How ZKPs work in AI

Think of an AI system evaluating a financial rule, such as loan eligibility. Normally, the model must access sensitive income and credit data to confirm the result, and anyone checking that result would need the same data. With a ZKP, the AI can prove that the rule was applied correctly (for example, that income meets the required threshold) without exposing the underlying figures.
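A rule like "income is at least the threshold" is a range statement. The Python sketch below shows one textbook way to prove it: commit to income with a Pedersen commitment, split `income - threshold` into committed bits, prove each bit is 0 or 1 with an OR-proof, and make everything non-interactive with the Fiat-Shamir heuristic. The group parameters, the second generator `H = 9`, the bit length `K`, and all function names are illustrative assumptions; production systems use dedicated range-proof schemes such as Bulletproofs over elliptic curves.

```python
import hashlib
import secrets

# Toy parameters, insecure and for illustration only: P = 2Q + 1 is a safe
# prime and G, H generate the order-Q subgroup. Real systems use ~256-bit
# elliptic-curve groups where nobody knows log_G(H).
P, Q, G, H = 2039, 1019, 4, 9
K = 8  # prove that income - threshold lies in [0, 2^K); 2^K stays well below Q


def commit(value, blind):
    """Pedersen commitment G^value * H^blind mod P."""
    return pow(G, value % Q, P) * pow(H, blind % Q, P) % P


def fs_challenge(*items):
    """Fiat-Shamir: derive the challenge by hashing the transcript."""
    data = "|".join(str(item) for item in items).encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def prove_bit(bit, blind, c_bit, ctx):
    """OR-proof that c_bit commits to 0 or 1 without revealing which."""
    ys = [c_bit, c_bit * pow(G, -1, P) % P]  # ys[bit] == H^blind
    fake = 1 - bit
    a, cs, zs = [0, 0], [0, 0], [0, 0]
    cs[fake], zs[fake] = secrets.randbelow(Q), secrets.randbelow(Q)
    a[fake] = pow(H, zs[fake], P) * pow(ys[fake], -cs[fake], P) % P  # simulated
    w = secrets.randbelow(Q)
    a[bit] = pow(H, w, P)  # real branch
    c = fs_challenge(ctx, c_bit, a[0], a[1])
    cs[bit] = (c - cs[fake]) % Q
    zs[bit] = (w + cs[bit] * blind) % Q
    return a, cs, zs


def verify_bit(c_bit, proof, ctx):
    a, cs, zs = proof
    ys = [c_bit, c_bit * pow(G, -1, P) % P]
    if (cs[0] + cs[1]) % Q != fs_challenge(ctx, c_bit, a[0], a[1]):
        return False
    return all(pow(H, zs[j], P) == a[j] * pow(ys[j], cs[j], P) % P for j in (0, 1))


def prove_at_least(income, blind, threshold):
    """Prover: show the value committed in commit(income, blind) is >= threshold."""
    diff = income - threshold
    if not 0 <= diff < 2 ** K:
        raise ValueError("statement is false or out of range; no proof exists")
    c_income = commit(income, blind)
    bits = [(diff >> i) & 1 for i in range(K)]
    blinds = [secrets.randbelow(Q) for _ in range(K - 1)]
    # Pick the last bit's blind so that sum(blinds[i] * 2^i) == blind (mod Q).
    partial = sum(r * 2 ** i for i, r in enumerate(blinds)) % Q
    blinds.append((blind - partial) * pow(2 ** (K - 1), -1, Q) % Q)
    bit_commits = [commit(b, r) for b, r in zip(bits, blinds)]
    proofs = [prove_bit(b, r, cb, (c_income, threshold, i))
              for i, (b, r, cb) in enumerate(zip(bits, blinds, bit_commits))]
    return bit_commits, proofs


def verify_at_least(c_income, threshold, bit_commits, proofs):
    """Verifier: uses only the income commitment and the public threshold."""
    if len(bit_commits) != K or len(proofs) != K:
        return False
    if not all(verify_bit(cb, pf, (c_income, threshold, i))
               for i, (cb, pf) in enumerate(zip(bit_commits, proofs))):
        return False
    recombined = 1
    for i, cb in enumerate(bit_commits):
        recombined = recombined * pow(cb, 2 ** i, P) % P  # G^diff * H^blind
    return recombined == c_income * pow(G, -threshold, P) % P


if __name__ == "__main__":
    income, threshold = 720, 600  # illustrative units
    blind = secrets.randbelow(Q)
    c_income = commit(income, blind)  # the only income-related value shared
    bit_commits, proofs = prove_at_least(income, blind, threshold)
    print("eligible:", verify_at_least(c_income, threshold, bit_commits, proofs))
```

The verifier sees only `c_income`, the public threshold, the bit commitments, and the proofs; the income figure stays with the prover. If income were below the threshold, `prove_at_least` would have no valid proof to produce.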

Why ZKPs matter for enterprise systems

In large enterprises, especially in finance and insurance, data moves through multiple AI-driven workflows. Each touchpoint is a potential privacy gap. When privacy-preserving AI systems powered by ZKPs are embedded in these workflows, verification becomes part of the process rather than an afterthought.

This architecture enhances compliance and trust. Every transaction or model output can carry a proof of correctness without disclosing the data used to generate it. In practice, this allows teams to automate confidently, knowing that every decision can be explained and validated when needed.
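A full proof of correctness requires a proving system, but the simplest building block underneath it is a commitment that ties each output to the exact inputs used without disclosing them. The Python sketch below assumes a salted SHA-256 commitment and hypothetical names (`DecisionRecord`, `record_decision`, `audit`, `"risk-model-v3"`); only the record is shared, while the salt stays with the data owner until an audit calls for an opening.

```python
import hashlib
import json
import secrets
from dataclasses import dataclass


@dataclass(frozen=True)
class DecisionRecord:
    """What gets logged or shared: the decision and a commitment, never raw inputs."""
    decision: str
    model_version: str
    input_commitment: str  # hex SHA-256 of salt || canonical JSON of the inputs


def _digest(salt: bytes, inputs: dict) -> str:
    canonical = json.dumps(inputs, sort_keys=True, separators=(",", ":")).encode()
    return hashlib.sha256(salt + canonical).hexdigest()


def record_decision(inputs: dict, decision: str, model_version: str):
    """Return the shareable record and the private salt kept by the data owner."""
    salt = secrets.token_bytes(32)  # a fresh random salt keeps the commitment hiding
    return DecisionRecord(decision, model_version, _digest(salt, inputs)), salt


def audit(record: DecisionRecord, inputs: dict, salt: bytes) -> bool:
    """Check an opening (inputs + salt) against the logged commitment."""
    return secrets.compare_digest(_digest(salt, inputs), record.input_commitment)


if __name__ == "__main__":
    inputs = {"income": 7200, "credit_score": 710}
    record, salt = record_decision(inputs, "approve", "risk-model-v3")
    print(record)
    print(audit(record, inputs, salt))                                 # True
    print(audit(record, {"income": 9000, "credit_score": 710}, salt))  # False
```

Unlike a ZKP, opening this commitment reveals the inputs to the auditor; a proof system would instead let the auditor check a property of the committed inputs without that opening.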

Building privacy-preserving AI workflows with zero-knowledge proofs

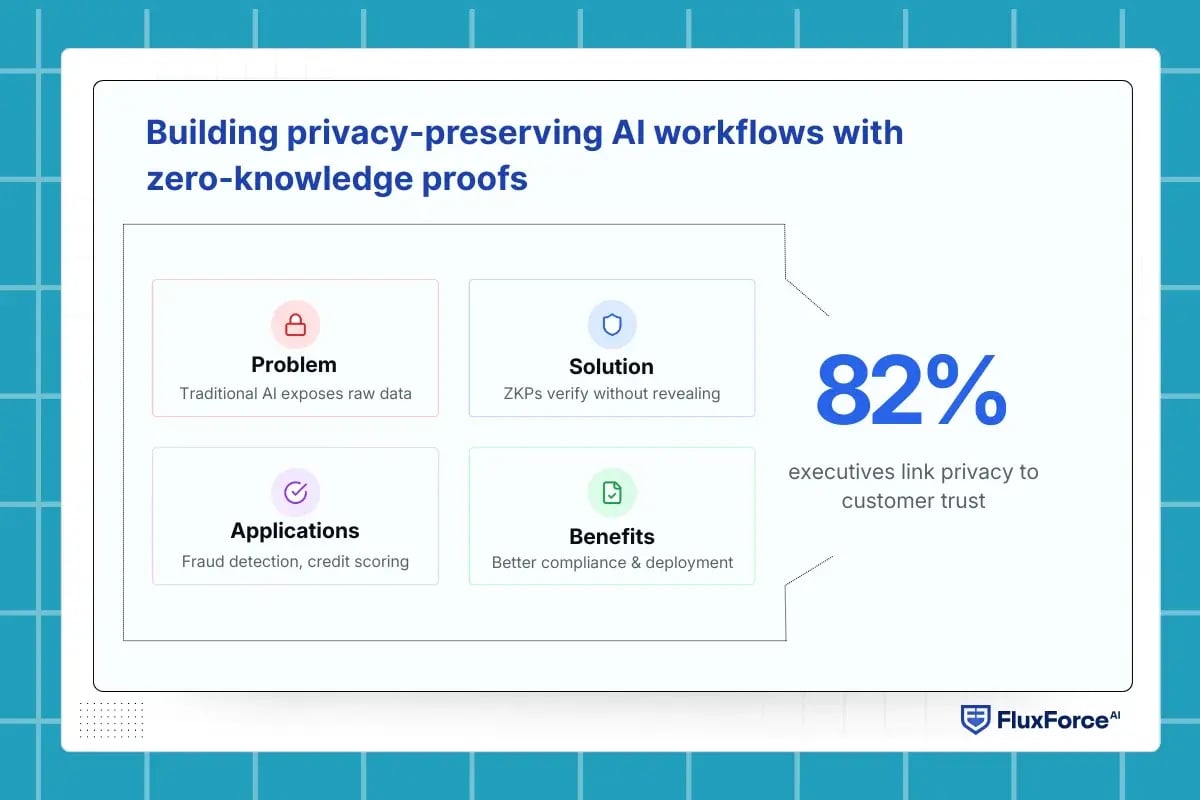

As organizations rely more on AI to make business decisions, data privacy has become a constant challenge. This is where zero-knowledge proofs (ZKPs) step in, turning AI systems into verifiable, privacy-aware engines.

Making privacy part of the AI foundation

Most AI models today need to see raw data to make decisions. This creates exposure risks and compliance hurdles. With privacy-preserving AI workflows, sensitive data can stay hidden while still being used for analysis.

ZKPs make this possible by allowing an AI model to “prove” it has processed data correctly without actually revealing the data.
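One concrete form of "processed correctly" is a linear scoring model with public weights applied to private features. Pedersen commitments are additively homomorphic, so anyone can turn commitments to the features into a commitment to the weighted sum; the prover then only needs a short proof that its claimed score commitment matches. The Python sketch below assumes toy group parameters and hypothetical function names, and uses a Fiat-Shamir Schnorr proof of knowledge of the blinding difference.

```python
import hashlib
import secrets

# Toy, insecure parameters for illustration: P = 2Q + 1 is a safe prime and
# G, H generate the order-Q subgroup (real systems use large EC groups).
P, Q, G, H = 2039, 1019, 4, 9


def commit(value, blind):
    """Pedersen commitment G^value * H^blind mod P."""
    return pow(G, value % Q, P) * pow(H, blind % Q, P) % P


def fs_challenge(*items):
    """Fiat-Shamir challenge from a hash of the public transcript."""
    data = "|".join(str(item) for item in items).encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def derived_score_commitment(feature_commits, weights):
    """Homomorphism: prod C_i^w_i commits to sum(w_i * x_i) with blind sum(w_i * r_i)."""
    acc = 1
    for c, w in zip(feature_commits, weights):
        acc = acc * pow(c, w, P) % P
    return acc


def prove_score(features, blinds, weights):
    """Prover: commit to the score and prove it equals the weighted sum of features."""
    feature_commits = [commit(x, r) for x, r in zip(features, blinds)]
    score = sum(w * x for w, x in zip(weights, features))
    score_blind = secrets.randbelow(Q)
    score_commit = commit(score, score_blind)
    # score_commit / derived == H^delta; prove knowledge of delta (Schnorr, base H).
    delta = (score_blind - sum(w * r for w, r in zip(weights, blinds))) % Q
    k = secrets.randbelow(Q)
    t = pow(H, k, P)
    c = fs_challenge(feature_commits, weights, score_commit, t)
    return score, score_commit, (t, (k + c * delta) % Q)


def verify_score(feature_commits, weights, score_commit, proof):
    """Verifier: sees commitments, public weights, and the proof, never the features."""
    t, s = proof
    derived = derived_score_commitment(feature_commits, weights)
    diff = score_commit * pow(derived, -1, P) % P
    c = fs_challenge(feature_commits, weights, score_commit, t)
    return pow(H, s, P) == t * pow(diff, c, P) % P


if __name__ == "__main__":
    features = [3, 5, 2]    # private inputs, e.g. scaled risk factors
    weights = [10, 20, 30]  # public, approved model weights
    blinds = [secrets.randbelow(Q) for _ in features]
    feature_commits = [commit(x, r) for x, r in zip(features, blinds)]
    score, score_commit, proof = prove_score(features, blinds, weights)
    print("score proof valid:", verify_score(feature_commits, weights, score_commit, proof))
```

The verifier learns that the committed score equals `w·x` for the committed features, but not the features themselves; the score can then be opened, or checked against a cutoff with a further proof.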

In the financial sector, this means a credit scoring system or audit tool can validate transactions or risk models securely. The process remains transparent and trustworthy, while customer data never leaves its protected layer.

Where to apply ZKPs in enterprise workflows

Implementation starts by identifying the touchpoints where data is most vulnerable, such as fraud detection, credit evaluation, or third-party data exchange.

At these points, ZKPs act as verification layers, confirming the integrity of both the data and the AI model’s outcome.
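For the data-integrity side, a common building block is a Merkle tree: an organization publishes a single root hash for a dataset, and any record can later be shown to belong to that dataset with a short list of sibling hashes. The Python sketch below is a hash-based integrity check with illustrative record contents and function names; it is not zero-knowledge by itself (the verifier sees the record being checked), but ZK systems often prove Merkle membership inside a circuit to hide the record as well.

```python
import hashlib


def leaf_hash(record: bytes) -> bytes:
    return hashlib.sha256(b"\x00" + record).digest()  # 0x00 prefix marks a leaf


def node_hash(left: bytes, right: bytes) -> bytes:
    return hashlib.sha256(b"\x01" + left + right).digest()  # 0x01 marks an inner node


def merkle_levels(records):
    """All tree levels from leaves to root; an unpaired node is hashed with itself."""
    if not records:
        raise ValueError("need at least one record")
    level = [leaf_hash(r) for r in records]
    levels = [level]
    while len(level) > 1:
        padded = level + [level[-1]] if len(level) % 2 else level
        level = [node_hash(padded[i], padded[i + 1]) for i in range(0, len(padded), 2)]
        levels.append(level)
    return levels


def inclusion_proof(levels, index):
    """Sibling hashes from leaf to root, each flagged True if it sits on the left."""
    proof = []
    for level in levels[:-1]:
        sibling = index ^ 1
        sibling_hash = level[sibling] if sibling < len(level) else level[index]
        proof.append((sibling_hash, sibling < index))
        index //= 2
    return proof


def verify_inclusion(root, record, proof):
    """Recompute the path from the record up to the root and compare."""
    acc = leaf_hash(record)
    for sibling_hash, sibling_is_left in proof:
        acc = node_hash(sibling_hash, acc) if sibling_is_left else node_hash(acc, sibling_hash)
    return acc == root


if __name__ == "__main__":
    records = [b"txn-001:approved", b"txn-002:flagged", b"txn-003:approved"]
    levels = merkle_levels(records)
    root = levels[-1][0]  # only this 32-byte root needs to be published
    proof = inclusion_proof(levels, 1)
    print(verify_inclusion(root, b"txn-002:flagged", proof))   # True
    print(verify_inclusion(root, b"txn-002:approved", proof))  # False
```

The proof grows with the logarithm of the dataset size, so checking one record against a published root stays cheap even for large datasets.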

When combined with federated learning or confidential computing, ZKPs form an end-to-end protection layer for collaborative ecosystems. This enables financial institutions, regulators, and partners to share verified outcomes — without sharing the actual information behind them.
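To illustrate the federated side, the Python sketch below shows pairwise masking, the idea behind secure aggregation in federated learning: each pair of clients shares a seed, one adds the derived mask and the other subtracts it, so the server can compute the sum of model updates while each individual update looks random. The seed setup, fixed-point scale, modulus, and function names are assumptions for illustration; real protocols also handle clients that drop out, and masking alone does not prove that updates were computed correctly, which is where ZKPs would be layered on.

```python
import hashlib
import secrets

MOD = 2 ** 32  # all arithmetic is on integers modulo 2^32
SCALE = 1000   # fixed-point scale for real-valued model updates


def prg(seed, length):
    """Expand a shared seed into `length` pseudorandom integers mod MOD."""
    return [
        int.from_bytes(hashlib.sha256(seed + i.to_bytes(4, "big")).digest()[:4], "big")
        for i in range(length)
    ]


def pairwise_seeds(n_clients):
    """Hypothetical setup: one secret seed per client pair (i < j).
    In practice each pair would derive it through a key agreement."""
    return {(i, j): secrets.token_bytes(16)
            for i in range(n_clients) for j in range(i + 1, n_clients)}


def mask_update(client, update, seeds, n_clients):
    """Client side: encode the update, then add masks that cancel in the sum."""
    vec = [round(x * SCALE) % MOD for x in update]
    for other in range(n_clients):
        if other == client:
            continue
        mask = prg(seeds[(min(client, other), max(client, other))], len(vec))
        sign = 1 if client < other else -1  # lower index adds, higher subtracts
        vec = [(v + sign * m) % MOD for v, m in zip(vec, mask)]
    return vec


def aggregate(masked_updates):
    """Server side: sums masked vectors without seeing any single update."""
    totals = [sum(col) % MOD for col in zip(*masked_updates)]
    # Decode from modular integers back to signed fixed-point values.
    return [(t - MOD if t >= MOD // 2 else t) / SCALE for t in totals]


if __name__ == "__main__":
    updates = [[0.5, -1.25], [0.25, 0.5], [1.0, 0.0]]  # each client's private update
    n = len(updates)
    seeds = pairwise_seeds(n)
    masked = [mask_update(i, u, seeds, n) for i, u in enumerate(updates)]
    print(aggregate(masked))  # [1.75, -0.75]
```

Dividing the aggregate by the number of clients gives the averaged update a federated server would apply.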

Practical advantages for businesses

- Stronger compliance with privacy regulations.

- Lower audit overhead due to verifiable, traceable workflows.

- Faster deployment of AI models because data-sharing risks are reduced.

According to a 2023 PwC Digital Trust Insights report, 82% of executives say that improving data privacy and trust directly boosts customer retention and regulatory confidence.

How agentic AI uses Zero-Knowledge Proofs for secure autonomy

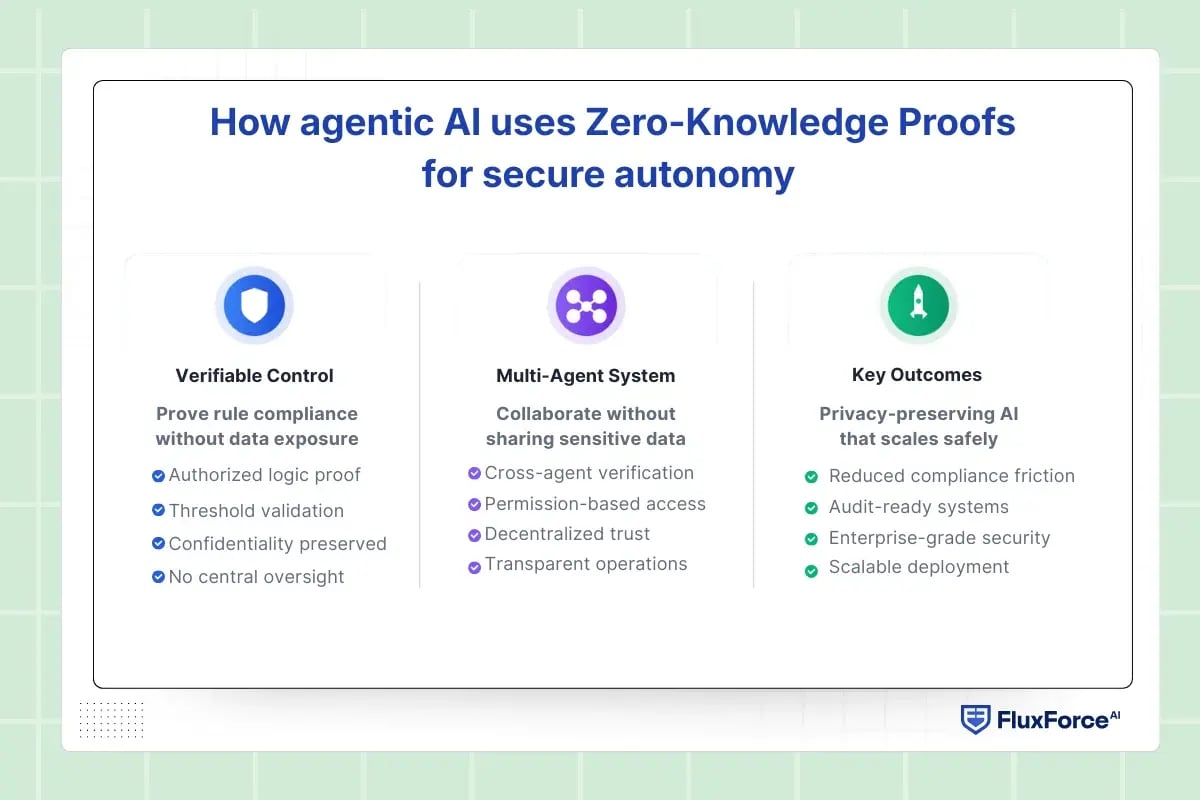

When organizations begin deploying Agentic AI to manage financial decisions, audit checks, or transaction workflows, the challenge shifts from building intelligence to ensuring control. The more autonomous the AI becomes, the harder it is to prove that every action was both compliant and secure. This is where zero-knowledge proofs (ZKPs) bring real operational value.

Adding verifiable control to AI decisions

ZKPs allow an AI agent to prove that it has followed a rule or made a decision correctly without revealing the data behind it.

For instance, in credit scoring or transaction monitoring, the AI can validate that it used authorized logic and thresholds while keeping all customer and financial details private.

For financial systems, that proof layer reduces compliance friction. It lets auditors and partners confirm accuracy while maintaining strict confidentiality. It also reduces the dependency on centralized oversight, which often slows down automation and increases data exposure risks.

Creating secure collaboration across AI agents

Agentic AI rarely operates in isolation. In large organizations or financial ecosystems, multiple AI agents interact with shared data, models, or workflows. ZKPs make these interactions verifiable without sharing the underlying data.
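One way agents can verify each other without sharing secrets is a non-interactive proof of knowledge: an agent proves it holds the private key behind its registered public key, bound to a specific request, so the receiving agent checks the proof against a registry instead of a shared password. The Python sketch below uses a Schnorr proof made non-interactive with the Fiat-Shamir heuristic (essentially a Schnorr signature); the `Agent` class, the registry, the request text, and the toy group parameters are all hypothetical.

```python
import hashlib
import secrets

# Toy, insecure parameters for illustration: P = 2Q + 1 and G has order Q.
P, Q, G = 2039, 1019, 4


def fs_challenge(public_key, commitment, message):
    """Fiat-Shamir: the challenge is a hash of the statement, commitment, and message."""
    data = f"{public_key}|{commitment}|{message}".encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


class Agent:
    """Hypothetical agent: keeps a secret key, registers only its public key."""

    def __init__(self, name):
        self.name = name
        self._secret = secrets.randbelow(Q - 1) + 1
        self.public_key = pow(G, self._secret, P)

    def prove(self, message):
        """Non-interactive Schnorr proof of knowledge of the secret, bound to `message`."""
        r = secrets.randbelow(Q)
        t = pow(G, r, P)
        c = fs_challenge(self.public_key, t, message)
        return t, (r + c * self._secret) % Q


def verify(public_key, message, proof):
    """Accept iff G^s == t * public_key^c, with c recomputed from the message."""
    t, s = proof
    c = fs_challenge(public_key, t, message)
    return pow(G, s, P) == t * pow(public_key, c, P) % P


if __name__ == "__main__":
    registry = {}  # hypothetical governance registry: agent name -> public key
    fraud_agent = Agent("fraud-monitor")
    registry[fraud_agent.name] = fraud_agent.public_key
    request = "fraud-monitor requests risk flag for case 8841"
    proof = fraud_agent.prove(request)
    print(verify(registry["fraud-monitor"], request, proof))  # True
    print(verify(registry["fraud-monitor"], request + " (edited)", proof))
```

Because the request text is hashed into the challenge, a proof made for one request should not verify for an edited one; with these toy parameters an accidental match has roughly a 1-in-1019 chance, which realistic group sizes make negligible.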

This enables permission-based collaboration between banks, regulators, and partners while maintaining privacy at every layer. The result is decentralized intelligence that operates transparently and remains aligned with internal governance rules.

This approach supports privacy-preserving AI systems that can scale safely. It ensures that sensitive data never leaves its boundary, yet the organization retains the ability to verify compliance and integrity at any time.

[Figure: Impact of trustworthy AI]

Conclusion

As AI systems move toward full autonomy, the ability to prove trustworthiness without exposing sensitive data will define enterprise success. Zero-knowledge proofs (ZKPs) give organizations a framework to operate intelligent systems that are secure, compliant, and transparent. For industries like finance, where data control determines market credibility, ZKPs bridge the gap between innovation and regulation. The future of Agentic AI will not be built on open access to data, but on proof-based collaboration, where every process can be verified without compromising privacy.
