Introduction

How can security leaders trust AI decisions when, according to a 2025 CSA report, 68% of access denials come without a clear reason?

In today’s cybersecurity landscape, zero trust security is essential. Companies rely on continuous authentication and identity and access management (IAM) to keep systems safe. But many AI systems act like black boxes, hiding why a user is allowed or denied access. This opacity causes confusion and can lead to audit failures against NIST’s Zero Trust Architecture guidance (SP 800-207).

Explainable AI (XAI) addresses this problem by making AI decisions clear and understandable. When zero trust security is paired with AI explainability, teams can see why AI makes each decision, reduce unnecessary alerts, and strengthen both trust in AI and overall security.

Next, we will look at how zero trust access management works with XAI to make access decisions clear and actionable.

XAI enhances biometric authentication in digital banking

boosting security and trust with FluxForce AI

Zero Trust Access Management with Explainable AI

Why is managing access in a zero trust model challenging without AI transparency?

Zero trust access management ensures every user, device, and session is verified before access is granted. Traditional AI systems often act like a “black box,” hiding why access is approved or denied. This opacity can erode trust in AI-driven security and make it hard for CISOs to explain decisions to boards or auditors.

AI-Powered Access Decisions

Modern AI-based access control systems analyze billions of signals in real time. By using zero trust authentication frameworks with explainable AI (XAI), organizations can enforce dynamic policies while making decisions understandable. According to CSA’s 2025 report, companies leveraging XAI in zero trust access control reduced false positives by 40% and resolved alerts faster.

Interpretable AI for Continuous Authentication

XAI supports continuous authentication by explaining why access is granted or revoked in real time. For instance, if a session is blocked, XAI highlights the risk factors that triggered the denial, allowing teams to act confidently and reduce unnecessary overrides. This makes zero trust decisions explainable and supports compliance with regulatory requirements.
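
To make “highlights the risk factors that triggered the denial” concrete, here is a minimal Python sketch. It assumes a hypothetical additive risk score (a baseline plus one contribution per signal) and a single deny threshold; the `triggering_factors` helper, the signal names, and the values are illustrative, not taken from any specific product. For an additive score, dropping the largest contributions first yields the fewest factors whose removal would flip the denial, which is one reasonable reading of “what triggered it.”

```python
def triggering_factors(
    contributions: dict[str, float], baseline: float, threshold: float
) -> list[str]:
    """Return the fewest factors whose removal would turn a denial into an allow.

    Assumes a hypothetical additive risk score: baseline + sum(contributions),
    where a score at or above `threshold` means the session is blocked.
    """
    score = baseline + sum(contributions.values())
    if score < threshold:
        return []  # not a denial, so nothing triggered one

    triggers: list[str] = []
    # Removing the largest contributions first gives the biggest drop per factor,
    # so for an additive score this yields a minimum-size set.
    ranked = sorted(contributions.items(), key=lambda kv: kv[1], reverse=True)
    for name, value in ranked:
        if value <= 0:
            break  # non-positive factors did not push the score up
        triggers.append(name)
        score -= value
        if score < threshold:
            break
    # If the loop ends with score still >= threshold, the baseline alone
    # meets the threshold and no subset of factors fully explains the denial.
    return triggers


# Hypothetical signal contributions for one blocked session.
blocked_session = {"new_device": 0.30, "impossible_travel": 0.25, "odd_hours": 0.05}
print(triggering_factors(blocked_session, baseline=0.10, threshold=0.50))
# prints ['new_device']
```

Showing analysts only the decisive factors, rather than every signal, is what helps them judge quickly whether an override is justified.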

Practical XAI Use Cases in Zero Trust

With XAI, AI-driven access decisions gain traceable audit trails. Security teams can use real-time dashboards to see the reasoning behind approvals and denials. These XAI use cases in zero trust architecture help reduce blind spots, improve decision-making, and maintain trust in AI-powered security.
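
A traceable audit trail largely comes down to recording each decision together with its reasons in a structured, machine-readable form. The sketch below assumes a hypothetical record schema (`AccessDecisionRecord`, `append_record`, and all field names are illustrative, not a standard) serialized as one JSON object per line, which suits append-only logs and later audit queries.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class AccessDecisionRecord:
    """One audit-trail entry for an AI-driven access decision (hypothetical schema)."""

    request_id: str
    subject: str
    resource: str
    decision: str  # "allow" or "deny"
    risk_score: float
    threshold: float
    # Contributing factors as (signal name, contribution to the risk score).
    reasons: list[tuple[str, float]] = field(default_factory=list)
    # UTC timestamp so entries from different systems can be ordered consistently.
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        # sort_keys keeps field order stable, which makes log diffs easier to review.
        return json.dumps(asdict(self), sort_keys=True)


def append_record(path: str, record: AccessDecisionRecord) -> None:
    """Append one record as a JSON line; existing lines are never rewritten."""
    with open(path, "a", encoding="utf-8") as log:
        log.write(record.to_json() + "\n")


# Hypothetical denial with its two strongest reasons.
record = AccessDecisionRecord(
    request_id="req-001",
    subject="alice",
    resource="payroll-db",
    decision="deny",
    risk_score=0.82,
    threshold=0.70,
    reasons=[("new_device", 0.35), ("impossible_travel", 0.30)],
)
print(record.to_json())
```

Storing the threshold alongside the score lets an auditor see not just that access was denied, but by how much the request exceeded policy at the time.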

Let’s explore how continuous authentication and XAI together prevent lateral movement and enhance enterprise-wide security.

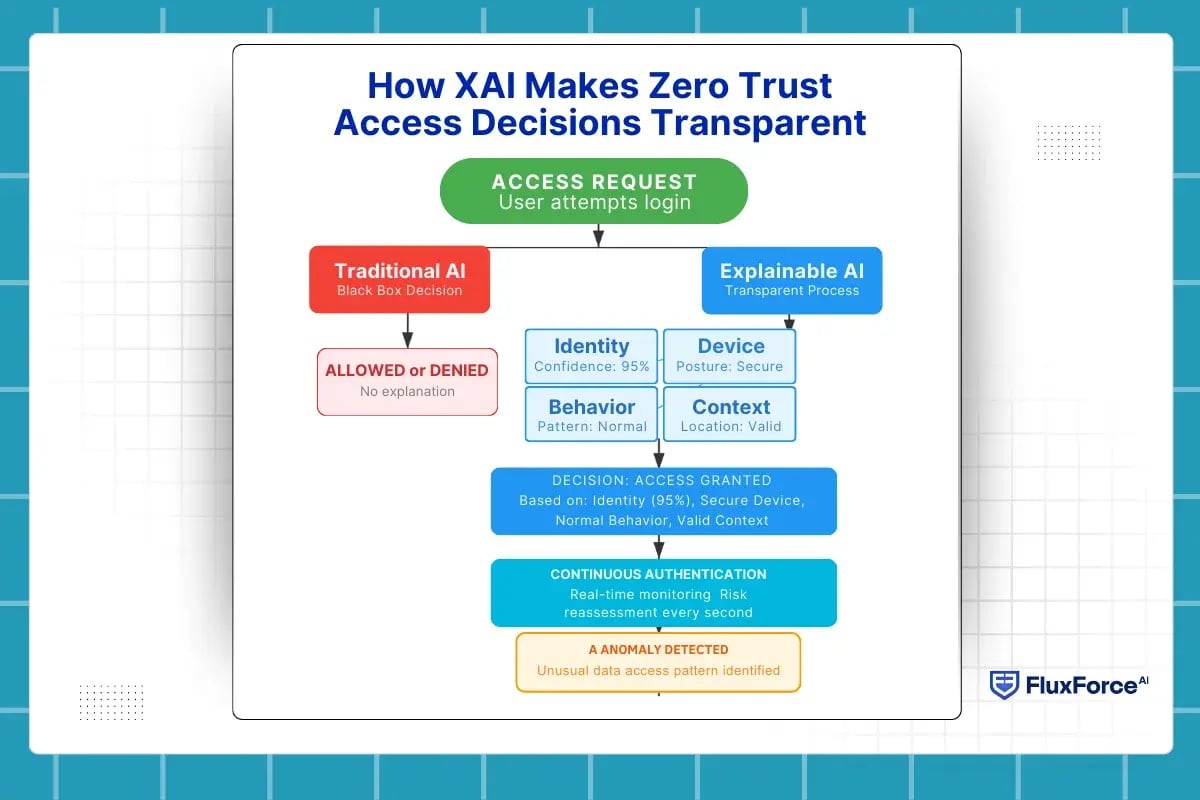

How XAI Makes Zero Trust Access Decisions Transparent

If Zero Trust decides access every second, who explains those decisions?

In a zero trust architecture, access is never permanent. Every request is evaluated in real time by AI models that weigh identity, device, behavior, and context signals. This makes enforcement scalable, but it also introduces a visibility gap.

Most zero trust access control systems still answer only one question:

- Was access allowed or denied?

They fail to answer the more important one:

- Why was that decision made?

This is where explainable AI (XAI) becomes essential.

From Black-Box Decisions to Transparent Access Control

Traditional AI-based access control relies on complex models that output risk scores. These scores drive decisions but rarely explain themselves.

With explainable AI in cybersecurity, access decisions are no longer opaque. XAI breaks down each decision into clear contributing factors, such as:

- Identity confidence level

- Device posture changes

- Abnormal session behavior

- Policy thresholds crossed during evaluation

This shift enables transparent AI decision-making, where security teams can clearly see what influenced an access outcome.
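
As a minimal sketch of such a breakdown, assume the risk model is additive: the score is a bias plus a weighted sum of normalized signals, so each term `weight * value` is that signal's exact contribution. The signal names, weights, bias, threshold, and the `explain_access` function are hypothetical. Production systems often use non-linear models with post-hoc attribution methods such as SHAP instead; this sketch does not implement those.

```python
from dataclasses import dataclass

# Hypothetical weights for an additive risk model; real values would be learned or tuned.
WEIGHTS = {
    "low_identity_confidence": 0.40,
    "device_posture_change": 0.25,
    "abnormal_session_behavior": 0.30,
}
BIAS = 0.05
DENY_THRESHOLD = 0.50  # hypothetical policy threshold


@dataclass
class Explanation:
    decision: str
    score: float
    margin: float  # score minus threshold; zero or positive means the threshold was reached
    contributions: list[tuple[str, float]]  # largest contribution first


def explain_access(signals: dict[str, float]) -> Explanation:
    """Score one access request and report each signal's share of the score.

    `signals` maps signal names to normalized values in [0, 1]; a missing
    signal is treated as 0, meaning it adds no risk.
    """
    contributions = [(name, w * signals.get(name, 0.0)) for name, w in WEIGHTS.items()]
    score = BIAS + sum(value for _, value in contributions)
    decision = "deny" if score >= DENY_THRESHOLD else "allow"
    contributions.sort(key=lambda item: item[1], reverse=True)
    return Explanation(decision, score, score - DENY_THRESHOLD, contributions)


result = explain_access(
    {"low_identity_confidence": 0.2, "device_posture_change": 1.0, "abnormal_session_behavior": 0.8}
)
print(f"{result.decision} (score {result.score:.2f}, margin {result.margin:+.2f})")
for name, value in result.contributions:
    print(f"  {name}: {value:.2f}")
```

Because the contributions plus the bias sum exactly to the score, the explanation cannot drift from the decision it describes, which is the main appeal of additive models for auditable access control.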

That transparency is foundational to zero trust security, because decisions are made continuously, not just at login.

Transparency Across Continuous Authentication

In the zero trust model, access decisions evolve throughout a session. Continuous authentication constantly reassesses risk, often revoking or limiting access mid-session.

Without explanation, these changes feel arbitrary.

XAI brings visibility to continuous authentication, showing:

- What changed during the session

- Which risk signals increased

- Why access was downgraded or revoked

This level of insight strengthens AI transparency in identity and access management (IAM) and keeps access enforcement understandable and defensible.
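
The three questions above (what changed, which signals increased, and why access was downgraded or revoked) can be sketched by comparing two snapshots of per-signal risk contributions taken during the same session. This assumes the contributions already exist, for example from an additive risk model, and uses two hypothetical policy thresholds: at or above `LIMIT_THRESHOLD` access is limited, and at or above `REVOKE_THRESHOLD` it is revoked. All names and values are illustrative.

```python
from enum import Enum


class AccessLevel(Enum):
    FULL = "full"
    LIMITED = "limited"  # e.g. read-only, or step-up authentication required
    REVOKED = "revoked"


# Hypothetical policy thresholds on the total session risk score.
LIMIT_THRESHOLD = 0.5
REVOKE_THRESHOLD = 0.8


def access_level(total_risk: float) -> AccessLevel:
    """Map a total risk score to an access tier."""
    if total_risk >= REVOKE_THRESHOLD:
        return AccessLevel.REVOKED
    if total_risk >= LIMIT_THRESHOLD:
        return AccessLevel.LIMITED
    return AccessLevel.FULL


def explain_session_change(
    before: dict[str, float], after: dict[str, float]
) -> dict[str, object]:
    """Compare two per-signal risk snapshots taken during one session."""
    signals = sorted(set(before) | set(after))
    deltas = {s: after.get(s, 0.0) - before.get(s, 0.0) for s in signals}
    # Signals whose risk contribution went up, largest increase first.
    increased = sorted(
        ((s, d) for s, d in deltas.items() if d > 0),
        key=lambda item: item[1],
        reverse=True,
    )
    old_level = access_level(sum(before.values()))
    new_level = access_level(sum(after.values()))
    return {
        "old_level": old_level,
        "new_level": new_level,
        "changed": old_level != new_level,
        "increased_signals": increased,
    }


# Hypothetical snapshots: at login, and after unusual activity mid-session.
at_login = {"identity": 0.10, "device": 0.05, "behavior": 0.05}
mid_session = {"identity": 0.10, "device": 0.05, "behavior": 0.45, "location": 0.10}
report = explain_session_change(at_login, mid_session)
print(report["old_level"].value, "->", report["new_level"].value)
for signal, delta in report["increased_signals"]:
    print(f"  {signal} rose by {delta:.2f}")
```

Keeping the access level as an explicit tier, rather than a single allow/deny flag, is what lets the report distinguish a downgrade from a full revocation.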

Why Transparent Access Decisions Define Zero Trust Maturity

Zero trust is not just about denying access. It is about making correct, explainable, and auditable decisions.

When access decisions are transparent:

- Security teams respond faster

- False positives are reduced

- Overrides decrease

- Compliance reviews become simpler

This is the practical value of zero trust with explainable AI models. Explainable AI for access control decisions ensures that Zero Trust is not only enforced by AI, but also understood by humans.

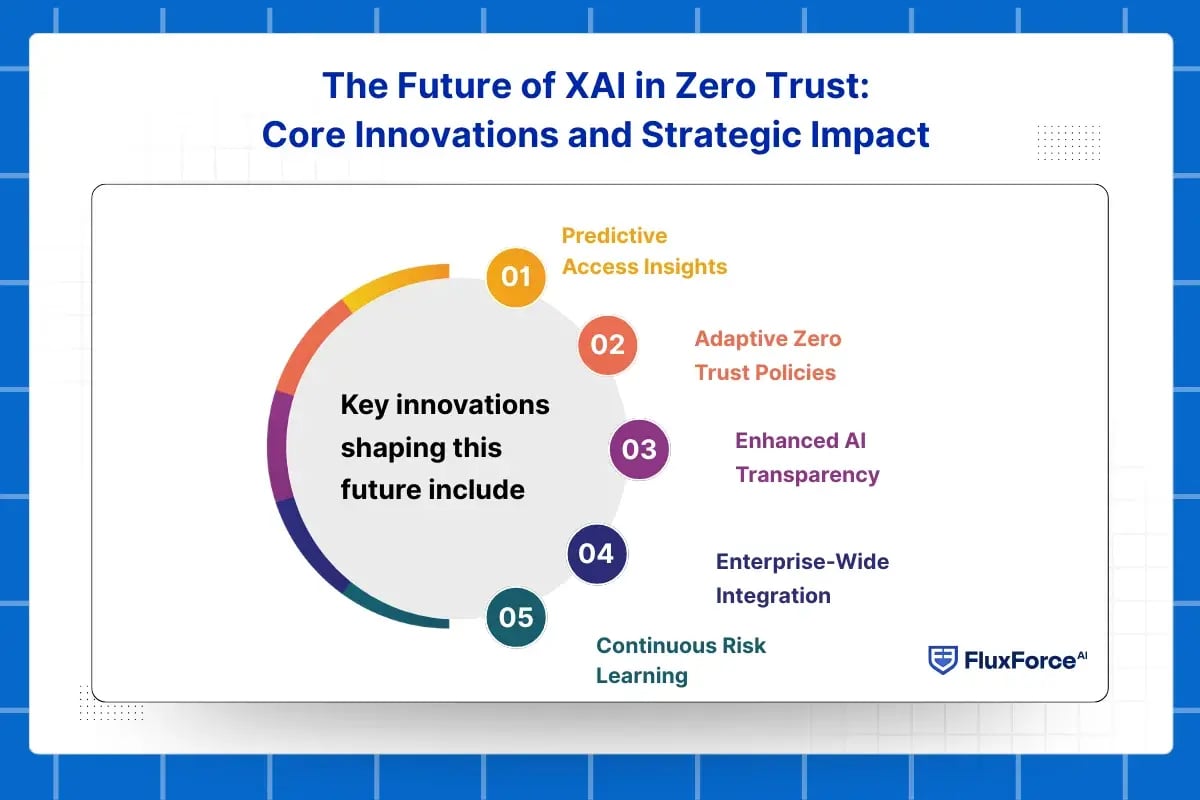

The Future of XAI in Zero Trust: Core Innovations and Strategic Impact

The future of zero trust security depends on integrating AI in cybersecurity not just for enforcement but for transparency and insight. Today, AI makes millions of access decisions across users, devices, and sessions. But without AI transparency, organizations risk blind enforcement, audit failures, and eroded trust in their zero trust architecture.

Explainable AI (XAI) is set to redefine the zero trust model by embedding clarity into every decision. Instead of just approving or denying access, future XAI-driven systems will show exactly why a request was flagged, tying it to identity confidence, device posture, behavioral signals, and policy thresholds. This level of insight strengthens security teams’ ability to act decisively while maintaining compliance.

Key innovations shaping this future include:

- Predictive Access Insights: AI models will predict high-risk access attempts before they happen, and XAI will explain the signals behind each prediction, allowing proactive interventions within zero trust security frameworks.

- Adaptive Zero Trust Policies: By analyzing access patterns continuously, XAI will guide dynamic tuning of zero trust architecture, keeping policies optimized with far less manual intervention.

- Enhanced AI Transparency: Every decision within the zero trust model will be interpretable, giving security teams, auditors, and executives confidence in automated enforcement.

- Enterprise-Wide Integration: Future XAI systems will unify cloud, endpoint, and operational environments, making AI transparency standard across zero trust access management.

- Continuous Risk Learning: XAI will support learning from anomalous behaviors, enabling security teams to refine rules and detect emerging threats before breaches occur.

By building transparent, explainable AI into the core of zero trust, organizations can transform enforcement into an intelligent, auditable, and adaptive system. Making AI access decisions transparent helps the zero trust model scale securely while maintaining trust across users, teams, and stakeholders.

Conclusion

The future of zero trust security relies on AI transparency and explainable access decisions. XAI use cases in zero trust architecture are transforming enforcement into proactive risk management. Organizations implementing AI-driven access control can anticipate threats, optimize zero trust authentication frameworks, and maintain scalable, auditable, and transparent security. Explainable AI is poised to become the cornerstone of resilient, trusted zero trust systems.
