
Introduction

Fraud no longer operates within a single payment flow or customer touchpoint. Today’s financial crime moves across cards, digital wallets, online banking, and merchant platforms in seconds. This shift has turned payment fraud detection into a cross-channel problem rather than an isolated control function. Risk teams are now expected to connect signals across channels while making decisions in real time.

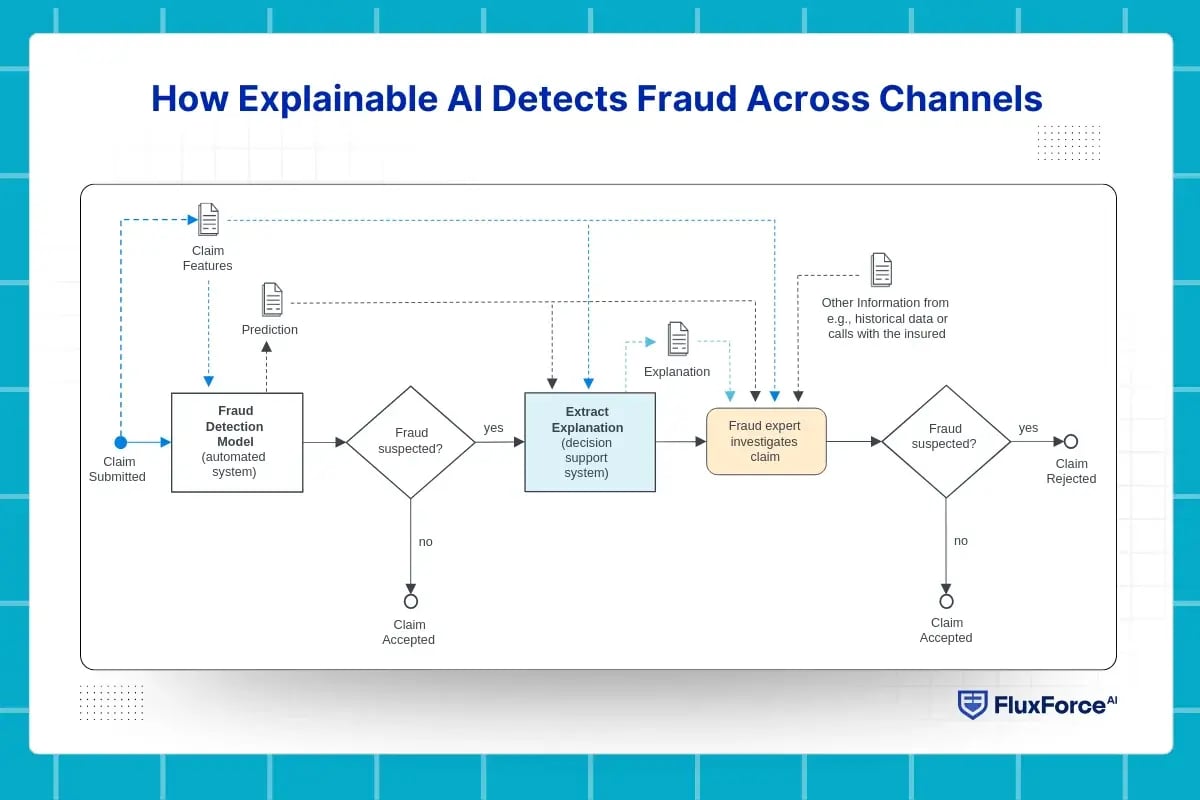

How Explainable AI Detects Fraud Across Channels

Fragmented transaction decisioning systems struggle to link behaviors across channels. Even with advanced AI fraud detection, patterns often go unnoticed until it’s too late. Cross-channel fraud detection and digital fraud management now require explainable AI that supports clear, trusted decisions.

In financial services, AI-driven fraud prevention depends on clarity as much as accuracy. Omnichannel fraud prevention relies on systems that connect data, explain risk, and enable confident decisions across teams and channels.

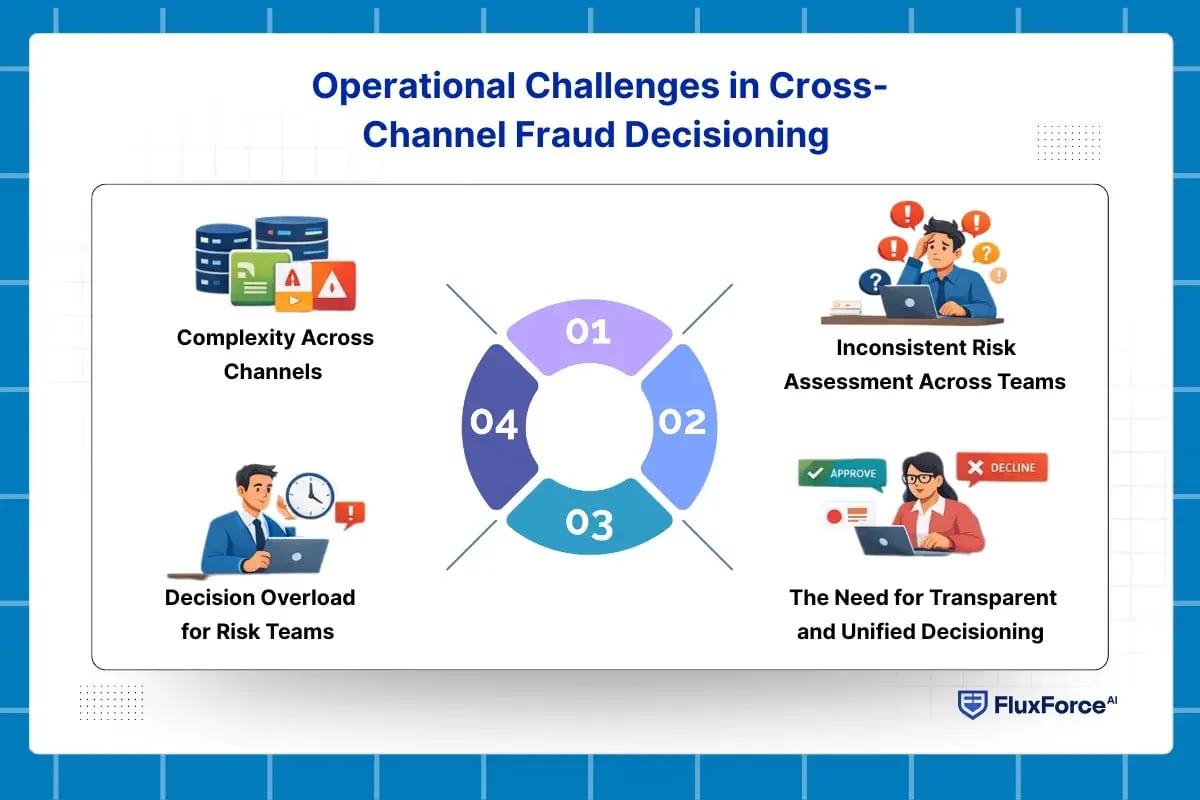

Operational Challenges in Cross-Channel Fraud Decisioning

Traditional payment fraud detection, with its siloed alerts, is no longer enough. Risk teams need to make clear and consistent decisions across all channels to prevent losses and comply with regulations.

Complexity Across Channels

Each channel produces a continuous flow of data, but separate systems struggle to combine and interpret it. Alerts from different sources often lack context, making it hard to spot patterns for cross-channel fraud detection. Even advanced AI fraud detection tools may not explain why a transaction was flagged, leaving investigators uncertain.

Decision Overload for Risk Teams

High volumes of alerts create stress for analysts. Without guidance, teams spend time checking false positives instead of focusing on real threats. Explainable AI helps show why alerts are raised, improving efficiency in digital fraud management.

AI Decision Support Systems Without Transparency Create Risk

Many institutions use AI decision support systems to assist analysts. If these tools lack AI decision transparency, they slow investigations, increase alert fatigue, and make it harder to file accurate suspicious activity reports (SARs). Risk operations require actionable insight, not just alerts.

Inconsistent Risk Assessment Across Teams

Different teams may interpret the same alerts differently, leading to inconsistent decisions. This reduces the effectiveness of omnichannel fraud prevention and makes it harder to maintain alignment across the organization.

How Explainable AI Strengthens Cross-Channel Fraud Decisions

Explainable AI plays a direct role in improving fraud decisions across channels. It does not replace existing fraud prevention systems. Instead, it makes their decisions easier to understand, easier to trust, and easier to act on within live operations.

Explainable AI in Transaction Decisioning Systems

In cross-channel environments, transaction decisioning systems process card payments, account activity, wallet usage, and digital transfers at the same time. Traditional machine learning fraud detection can identify risk but often hides the reasoning behind decisions. Explainable AI exposes key factors such as unusual transaction timing, device behavior, location mismatch, or abnormal spending patterns.

This clarity lets teams validate alerts faster and act on them with confidence.
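To make "exposing key factors" concrete, here is a minimal sketch assuming a simple logistic scoring model. The feature names, weights, bias, and baseline below are hypothetical and chosen only for illustration. For a linear model, each feature's contribution relative to a baseline transaction is exact and additive; production systems often use non-linear models with attribution methods such as SHAP, but the output analysts see (a ranked list of drivers) is similar in spirit.

```python
import math

# Hypothetical weights for a simple logistic fraud model (illustrative only).
WEIGHTS = {
    "night_hour": 1.2,    # 1.0 if the transaction falls in an unusual hour for this customer
    "new_device": 1.5,    # 1.0 if the device has not been seen for this customer before
    "geo_mismatch": 1.8,  # 1.0 if the transaction location differs from the usual one
    "spend_ratio": 0.8,   # amount divided by the customer's typical amount
}
BIAS = -4.0

# Hypothetical reference point: a "typical" transaction for this customer.
BASELINE = {"night_hour": 0.0, "new_device": 0.0, "geo_mismatch": 0.0, "spend_ratio": 1.0}


def explain(features: dict[str, float]) -> tuple[float, list[tuple[str, float]]]:
    """Return the fraud probability and each feature's log-odds contribution vs. the baseline."""
    logit = BIAS + sum(WEIGHTS[name] * features[name] for name in WEIGHTS)
    probability = 1.0 / (1.0 + math.exp(-logit))
    # For a linear model, weight * (value - baseline) is an exact additive attribution:
    # baseline log-odds + sum of contributions == logit.
    contributions = [(name, WEIGHTS[name] * (features[name] - BASELINE[name])) for name in WEIGHTS]
    contributions.sort(key=lambda item: abs(item[1]), reverse=True)
    return probability, contributions


txn = {"night_hour": 1.0, "new_device": 1.0, "geo_mismatch": 1.0, "spend_ratio": 3.0}
probability, drivers = explain(txn)
print(f"fraud probability: {probability:.2f}")
for name, contribution in drivers:
    print(f"  {name:<13} +{contribution:.2f} log-odds")
```

Here the location mismatch and the unusually large amount rank as the top drivers, which is the kind of evidence an analyst can check directly.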

Unified Fraud Detection Through Transparent Signals

Explainable AI supports unified fraud detection by applying the same reasoning logic across all channels. Risk signals are no longer isolated. Instead, they are connected and explained using shared features and clear scoring logic. This consistency strengthens omnichannel fraud prevention and reduces conflicts between channel-specific fraud rules.

Teams gain a single view of risk rather than disconnected alerts.
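One way to apply "the same reasoning logic across all channels" is to normalize every channel's raw records into a shared schema and compute features from that schema only. The sketch below assumes hypothetical raw field names for card, wallet, and online banking feeds; real feeds will differ. The point it shows is that equivalent behavior on two channels yields identical features, and therefore identical explanations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """Channel-agnostic view of a transaction or account action."""
    customer_id: str
    channel: str
    amount: float
    device_id: str
    country: str


# Hypothetical raw field names per channel; real feeds will differ.
FIELD_MAPS = {
    "card": {"customer_id": "cardholder_id", "amount": "amt", "device_id": "device_fp", "country": "ip_country"},
    "wallet": {"customer_id": "user_id", "amount": "value", "device_id": "device", "country": "geo"},
    "online_banking": {"customer_id": "login_id", "amount": "transfer_amount",
                       "device_id": "session_device", "country": "session_country"},
}


def normalize(channel: str, raw: dict) -> Event:
    """Map a raw channel-specific record onto the shared Event schema."""
    fields = FIELD_MAPS[channel]
    return Event(
        customer_id=str(raw[fields["customer_id"]]),
        channel=channel,
        amount=float(raw[fields["amount"]]),
        device_id=str(raw[fields["device_id"]]),
        country=str(raw[fields["country"]]),
    )


def shared_features(event: Event, known_devices: set[str], home_country: str,
                    typical_amount: float) -> dict[str, float]:
    """Compute the same features, with the same definitions, whatever the channel."""
    return {
        "new_device": float(event.device_id not in known_devices),
        "geo_mismatch": float(event.country != home_country),
        "spend_ratio": event.amount / typical_amount,
    }


card = normalize("card", {"cardholder_id": "c42", "amt": 300, "device_fp": "d9", "ip_country": "BR"})
wallet = normalize("wallet", {"user_id": "c42", "value": 300, "device": "d9", "geo": "BR"})
profile = {"known_devices": {"d1"}, "home_country": "DE", "typical_amount": 100.0}
print(shared_features(card, **profile))
print(shared_features(wallet, **profile))
```

Adding a new channel then means adding one field map, not a new set of channel-specific rules.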

Operational Value for Digital Fraud Management

In daily operations, explainable AI reduces friction. Analysts no longer need to manually justify decisions without evidence. Clear explanations support faster approvals, stronger declines, and better escalation handling. This directly improves digital fraud management and lowers operational costs.

Explainable outputs also support analyst training and quality reviews, which improves accuracy over time.

Building Trust in AI-Driven Fraud Prevention

Trust is critical in regulated financial environments. Explainable AI improves AI model transparency and supports trustworthy AI practices. Every fraud decision can be traced back to understandable inputs. This strengthens audit readiness and supports AI risk management across fraud operations.

As a result, institutions can scale AI-driven fraud prevention without losing control or accountability.

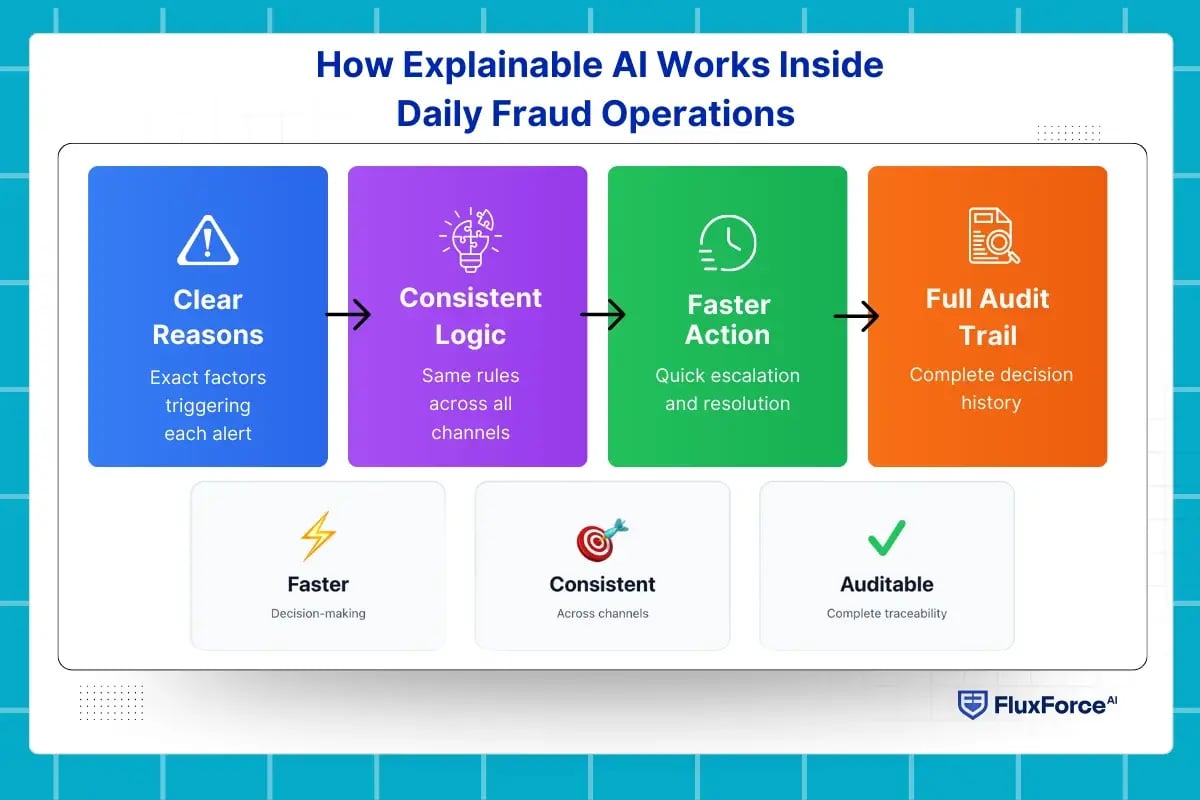

How Explainable AI Works Inside Daily Fraud Operations

In real fraud operations, decisions are made under pressure. Alerts arrive continuously from card payments, digital channels, and account activity. Teams must decide quickly whether to approve, block, or escalate a transaction. Explainable AI supports this process by fitting directly into how fraud teams already operate.

Clear Reasons Behind Each Fraud Alert

When a transaction is flagged, explainable AI shows the specific factors that triggered the alert. These may include unusual device behavior, spending outside normal patterns, or activity across multiple channels in a short time. Instead of interpreting raw scores, analysts see the concrete drivers behind each alert. This reduces time spent validating alerts and improves accuracy in payment fraud detection.
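As an example of how one such driver, "activity across multiple channels in a short time", can be turned into a readable reason, here is a minimal sketch. The 10-minute window and the three-channel threshold are hypothetical defaults, not recommended values; each event is assumed to be a `(timestamp, channel)` pair for a single customer.

```python
from datetime import datetime, timedelta


def channels_in_window(events, now, window=timedelta(minutes=10)):
    """Distinct channels a customer used in the interval [now - window, now]."""
    return {channel for ts, channel in events if now - window <= ts <= now}


def cross_channel_reason(events, now, window=timedelta(minutes=10), min_channels=3):
    """Return a human-readable reason if activity spans several channels, else None."""
    channels = channels_in_window(events, now, window)
    if len(channels) < min_channels:
        return None
    minutes = int(window.total_seconds() // 60)
    return f"activity on {len(channels)} channels ({', '.join(sorted(channels))}) within {minutes} minutes"


now = datetime(2024, 5, 1, 12, 0)
events = [
    (datetime(2024, 5, 1, 10, 0), "card"),            # outside the window, ignored
    (datetime(2024, 5, 1, 11, 52), "card"),
    (datetime(2024, 5, 1, 11, 55), "wallet"),
    (datetime(2024, 5, 1, 11, 58), "online_banking"),
]
print(cross_channel_reason(events, now))
```

The reason string is what the analyst sees, so the alert carries its own justification rather than just a score.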

Consistent Decisions Across Review Teams

In many organizations, different teams handle different channels. Without explainability, the same customer behavior may be treated differently depending on the channel. Explainable AI ensures that cross-channel fraud detection uses the same logic and risk signals everywhere.

This consistency improves omnichannel fraud prevention and reduces internal disagreements during reviews.

Faster Escalation and Resolution

Explainable AI helps analysts decide when to escalate a case or close it quickly. Clear explanations support better handoffs between frontline analysts and senior reviewers. This improves response time in real-time fraud detection and lowers backlog during high-volume periods. It also strengthens daily digital fraud management processes.

Supporting Audits and Post-Incident Reviews

After a fraud event, teams must explain what happened and why a decision was made. When explainable AI is in place, the drivers behind each decision are stored alongside its outcome. This supports internal audits, regulatory checks, and model reviews without reprocessing historical data.

This practical traceability strengthens AI risk management and reinforces trustworthy AI use in financial services.
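A minimal sketch of what "storing decision drivers alongside outcomes" can look like: one JSON record per decision, written at decision time. The field names and the model version string are hypothetical; the assumption is that records are appended to durable, write-once storage so they can be read back during an audit without rerunning the model.

```python
import json
from datetime import datetime, timezone


def audit_record(transaction_id: str, decision: str, score: float,
                 drivers: list[tuple[str, float]], model_version: str) -> str:
    """Serialize one decision with the drivers that explain it, as a JSON line."""
    record = {
        "transaction_id": transaction_id,
        "decision": decision,
        "score": round(score, 4),
        "drivers": [{"feature": name, "contribution": round(value, 4)} for name, value in drivers],
        "model_version": model_version,  # ties the explanation to the exact model that produced it
        "decided_at": datetime.now(timezone.utc).isoformat(),
    }
    return json.dumps(record, sort_keys=True)


line = audit_record("txn-001", "escalate", 0.9478,
                    [("geo_mismatch", 1.8), ("new_device", 1.5)], "fraud-model-v3")
print(line)
```

Recording the model version matters as much as the drivers: it lets a reviewer know which model's logic the explanation refers to.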

Implementing Explainable AI in Live Cross-Channel Fraud Decisioning

Explainable AI delivers value only when it is embedded directly into fraud workflows. In production environments, models must support fast decisions, consistent outcomes, and clear accountability across channels. Implementation requires structure, not experimentation.

Embedding Explainability into Transaction Decisioning Systems

Before a suspicious activity report is filed, risk teams must separate genuine threats from normal behavior. Explainable AI reveals which risk indicators and behavioral patterns contributed to an alert.

This transparency helps investigators understand why a case was escalated and builds a practical, shared understanding of what warrants a suspicious activity report in day-to-day operations.

Applying Unified Logic Across All Channels

Cross-channel fraud detection fails when models behave differently across payment types. Explainable AI enables shared features and consistent risk logic across cards, digital banking, and alternative payment channels. This creates unified fraud detection without rebuilding systems for each channel.

The result is stronger omnichannel fraud prevention and fewer conflicting decisions.

Reducing Review Time and False Positives

Explainable AI highlights the strongest risk signals for each alert. Analysts focus on high-impact cases instead of reviewing low-risk noise. This reduces the time lost to false positives and improves throughput in payment fraud detection operations.

Clear explanations also shorten training cycles for new analysts.
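The triage idea can be sketched as follows: rank alerts by score, attach each alert's strongest driver, and set low-scoring alerts aside. The alert structure and the 0.5 review threshold are hypothetical; in practice the threshold would be tuned against labeled outcomes.

```python
def top_driver(contributions: dict[str, float]) -> str:
    """Feature with the largest absolute contribution to the score."""
    return max(contributions.items(), key=lambda item: abs(item[1]))[0]


def triage(alerts: list[dict], review_threshold: float = 0.5):
    """Split alerts into a ranked review queue and a low-risk list."""
    review = sorted((a for a in alerts if a["score"] >= review_threshold),
                    key=lambda a: a["score"], reverse=True)
    queue = [(a["id"], a["score"], top_driver(a["contributions"])) for a in review]
    low_risk = [a["id"] for a in alerts if a["score"] < review_threshold]
    return queue, low_risk


alerts = [
    {"id": "a1", "score": 0.92, "contributions": {"geo_mismatch": 1.8, "new_device": 0.4}},
    {"id": "a2", "score": 0.12, "contributions": {"spend_ratio": 0.1}},
    {"id": "a3", "score": 0.67, "contributions": {"new_device": 1.5, "night_hour": 1.2}},
]
queue, low_risk = triage(alerts)
for alert_id, score, driver in queue:
    print(f"{alert_id}: score {score:.2f}, main driver: {driver}")
print("low risk:", low_risk)
```

Showing the main driver next to each queued alert gives a new analyst an immediate starting point for the review.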

Creating Audit-Ready Fraud Decisions

Every fraud action must be traceable. Explainable AI records decision drivers alongside outcomes. This supports audits, regulatory reviews, and internal model governance without manual reconstruction. Teams maintain control while scaling AI-driven fraud prevention.

This directly strengthens AI model transparency, AI risk management, and long-term operational trust.

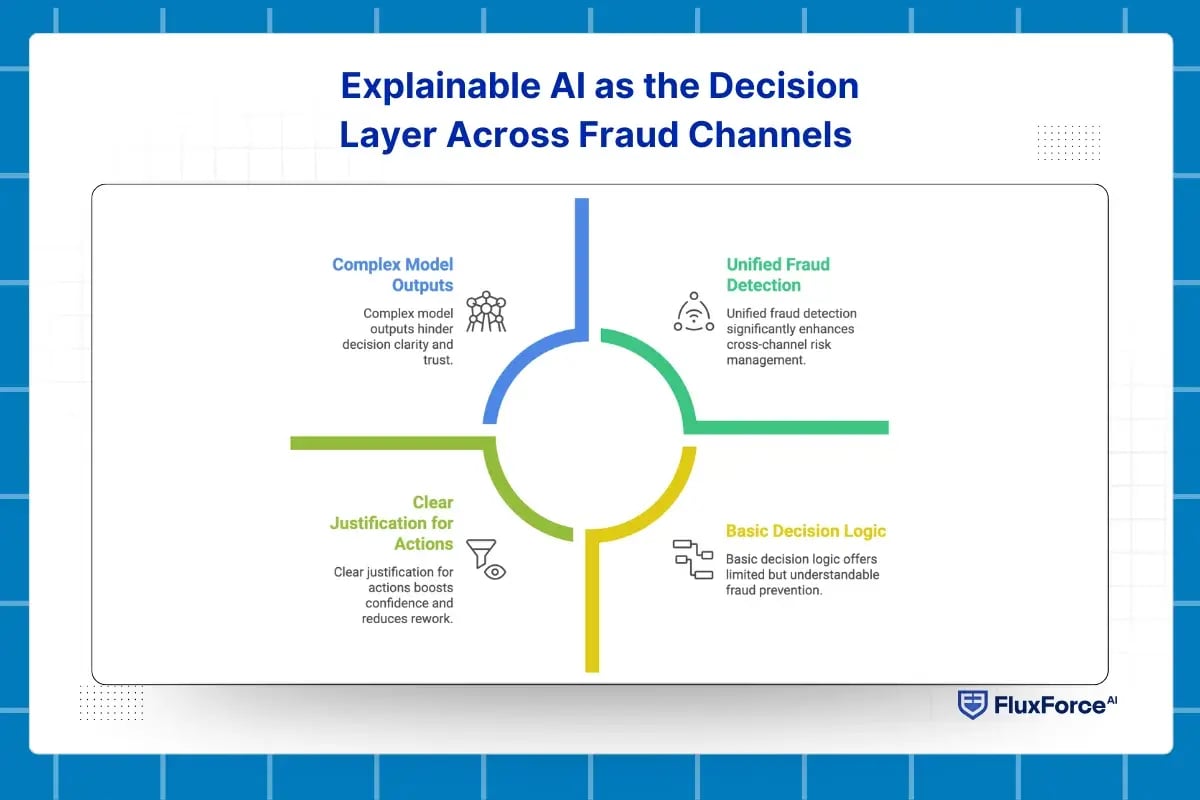

Explainable AI as the Decision Layer Across Fraud Channels

Cross-channel fraud decisioning is not only about stopping fraud. It is about making decisions that teams can explain, defend, and repeat at scale. Explainable AI directly supports this need by turning complex model outputs into clear decision logic that works across channels.

Single Decision View Across All Fraud Channels

Risk teams need one answer to one question: should this activity be approved, blocked, or escalated? Explainable AI creates a shared decision view across cards, accounts, wallets, and digital transactions. Instead of separate alerts, teams see how risk builds across channels.

This improves cross-channel fraud detection and enables unified fraud detection in live operations.
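As an illustration of a single decision view, the sketch below combines per-channel risk scores into one score and maps it to approve, escalate, or block, returning the per-channel reasons with the decision. The combination rule is a swapped-in assumption: it uses noisy-OR (the probability that at least one channel signal is fraudulent), which treats channel signals as independent. The thresholds are hypothetical.

```python
from enum import Enum
from math import prod


class Decision(Enum):
    APPROVE = "approve"
    ESCALATE = "escalate"
    BLOCK = "block"


APPROVE_BELOW = 0.3       # hypothetical threshold
BLOCK_AT_OR_ABOVE = 0.9   # hypothetical threshold


def combine(channel_scores: dict[str, float]) -> float:
    """Noisy-OR: P(at least one channel signal is fraudulent), assuming independence."""
    return 1.0 - prod(1.0 - p for p in channel_scores.values())


def decide(channel_scores: dict[str, float]):
    """Return one decision, the combined score, and the per-channel reasons behind it."""
    combined = combine(channel_scores)
    if combined >= BLOCK_AT_OR_ABOVE:
        decision = Decision.BLOCK
    elif combined < APPROVE_BELOW:
        decision = Decision.APPROVE
    else:
        decision = Decision.ESCALATE
    ranked = sorted(channel_scores.items(), key=lambda kv: kv[1], reverse=True)
    reasons = [f"{channel}: {score:.2f}" for channel, score in ranked]
    return decision, combined, reasons


decision, combined, reasons = decide({"card": 0.5, "wallet": 0.6})
print(decision.value, round(combined, 2), reasons)
```

Two moderate signals that would each be escalated or approved on their own combine into a higher joint risk, which is exactly the "risk building across channels" that siloed alerts miss.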

Clear Justification for Every Fraud Action

Every fraud decision has consequences. Customers are declined. Transactions are blocked. Accounts are reviewed. Explainable AI provides clear reasons behind each action. These reasons are visible to analysts, supervisors, and compliance teams.

This clarity strengthens payment fraud detection and reduces rework caused by unclear or disputed decisions.

Consistent Outcomes Across Teams and Shifts

Without explainability, decisions change based on who is reviewing the case. Explainable AI ensures that the same behavior produces the same outcome regardless of channel or reviewer. This consistency improves omnichannel fraud prevention and stabilizes daily digital fraud management.

Teams spend less time debating decisions and more time resolving real risk.

Confidence During Reviews, Audits, and Escalations

When fraud events are reviewed later, teams must explain what happened and why. Explainable AI preserves the reasoning behind every decision. This supports internal reviews, regulatory audits, and executive reporting.


Conclusion

Detecting fraud is only part of the challenge. The real test lies in making decisions that can be trusted across teams, customers, and regulators. Explainable AI strengthens cross-channel fraud decisioning by turning complex model outputs into clear and consistent actions. It supports faster reviews, fewer disputes, and stronger governance. As AI-driven fraud prevention continues to scale, institutions that prioritize explainability will be better positioned to manage risk, maintain control, and sustain long-term trust.
