Introduction

“Trust is earned, not given.”

In digital banking security, this saying has never felt more real.

Every day, customers unlock their bank accounts using their face, voice, or fingerprint. Biometric authentication has quietly become the first line of defense for digital banking security. It decides who gets access, who gets blocked, and when a transaction is flagged as risky. Yet most users never see how those decisions are made.

Why Biometric Security Raises New Trust Questions

Banks today rely heavily on AI-based authentication systems to verify identities and prevent fraud. Facial recognition, behavioral biometrics, and voice verification are now standard across mobile banking apps.

According to recent industry reports, 87% of global banks now use biometric authentication as of 2025, with 70% of US financial institutions integrating it into payment systems. At the same time, biometric fraud and identity spoofing attacks are increasing, not decreasing.

So, the real question is not whether biometric security works.

The real question is whether these AI decisions can be trusted.

When AI Decisions Lack Transparency

When a legitimate customer is locked out or a transaction is blocked, both users and risk teams ask the same thing. Why did this happen?

Traditional AI models often provide an answer without a reason. That creates friction for customers, operational risk for banks, and serious challenges for fraud prevention and regulatory compliance. In a regulated environment, AI decisions must be transparent, accountable, and fair.

How Explainable AI Changes Biometric Authentication

Explainable AI changes this dynamic.

Explainable AI in banking brings transparency to biometric authentication by showing how and why identity decisions are made. It connects model explainability with real-world operations, allowing customers to understand which signals influenced an outcome. This directly supports audit-ready AI systems and stronger AI risk management in banking.

Building Trustworthy AI for Digital Banking

As XAI in banking continues to mature, banks face a growing responsibility. They must protect users from fraud while proving that biometric authentication systems are accurate, ethical, and compliant. Explainable AI becomes the bridge between biometric security and trustworthy AI in banking.

Next, we explore how explainable AI improves biometric authentication, strengthens fraud prevention and AI-driven fraud detection in banking, and enables audit-ready AI systems without slowing down real-time decisions.

XAI enhances biometric authentication in digital banking

boosting security and trust with FluxForce AI

How Explainable AI Improves Biometric Authentication in Digital Banking

In modern digital banking security, simply using biometric authentication is not enough. Even advanced biometric verification systems can fail under real-world conditions such as device changes or unusual user behavior.

Without AI decision transparency, risk teams struggle to distinguish fraud from legitimate activity, increasing operational costs and customer friction.

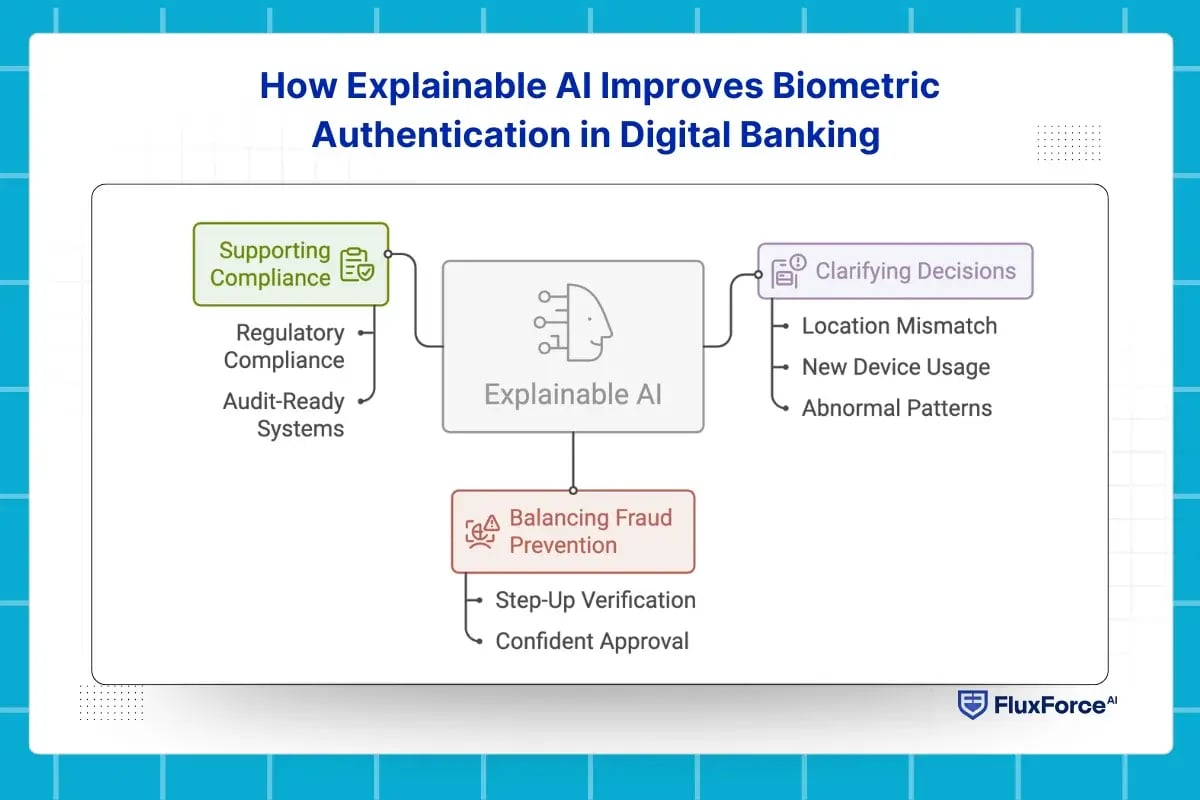

Clarifying Decisions with Explainable AI

Explainable AI (XAI) in banking reveals why transactions are flagged. Surfacing signals like location mismatch, new device usage, or abnormal patterns strengthens biometric security because risk teams can see what drove each alert. They can focus on real threats, reducing false positives and streamlining AI fraud detection in banking.
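To make the idea of "signals behind a flag" concrete, here is a minimal sketch of an additive risk score that reports how much each signal contributed. The signal names come from the paragraph above; the weights, the threshold, and the `explain` function are hypothetical and chosen only for illustration. A production system would learn weights from data and would likely use a more sophisticated attribution method.

```python
from dataclasses import dataclass

# Hypothetical weights per signal; illustrative values, not taken from any real model.
WEIGHTS = {
    "location_mismatch": 0.35,
    "new_device": 0.30,
    "abnormal_pattern": 0.25,
}
FLAG_THRESHOLD = 0.5  # assumed cutoff above which a login is flagged


@dataclass
class Explanation:
    score: float
    flagged: bool
    drivers: list  # (signal, contribution) pairs, largest contribution first


def explain(signals: dict) -> Explanation:
    """Score a login and report each signal's additive contribution."""
    contributions = {
        name: WEIGHTS[name] * float(signals.get(name, 0.0)) for name in WEIGHTS
    }
    score = sum(contributions.values())
    # Keep only signals that actually pushed the score up, strongest first.
    drivers = sorted(
        ((name, value) for name, value in contributions.items() if value > 0),
        key=lambda item: item[1],
        reverse=True,
    )
    return Explanation(score=score, flagged=score >= FLAG_THRESHOLD, drivers=drivers)


result = explain({"location_mismatch": 1.0, "new_device": 1.0, "abnormal_pattern": 0.0})
for name, contribution in result.drivers:
    print(f"{name}: +{contribution:.2f}")
print("flagged:", result.flagged)
```

Because the score is a plain sum, each driver's share of the final decision can be read off directly, which is the property an analyst needs when triaging an alert.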

Balancing Fraud Prevention and User Experience

XAI allows nuanced responses to alerts. Legitimate flagged logins can undergo step-up verification or be approved confidently. This strengthens fraud prevention in banking while maintaining smooth user experience.
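One simple way to picture these nuanced responses is a three-way policy that maps a risk score to approve, step-up, or deny. The sketch below assumes a risk score between 0 and 1; the thresholds and the `decide` function are hypothetical and would be tuned per institution.

```python
from enum import Enum


class Action(Enum):
    APPROVE = "approve"
    STEP_UP = "step_up"  # ask for an additional verification factor
    DENY = "deny"


# Assumed thresholds, for illustration only.
STEP_UP_AT = 0.4
DENY_AT = 0.8


def decide(risk_score: float) -> Action:
    """Map a risk score in [0, 1] to one of three responses."""
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must be between 0 and 1")
    if risk_score >= DENY_AT:
        return Action.DENY
    if risk_score >= STEP_UP_AT:
        return Action.STEP_UP
    return Action.APPROVE


for score in (0.1, 0.55, 0.9):
    print(score, decide(score).value)
```

The middle band is what keeps legitimate users from being locked out: a moderately risky login triggers extra verification rather than an outright block.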

Supporting Compliance and Trust

Explainable AI in banking supports regulatory compliance and audit-ready AI systems by tracing every decision. Teams gain operational clarity, reduce disputes, and strengthen AI risk management.

AI Transparency in Biometric Authentication for Digital Banking

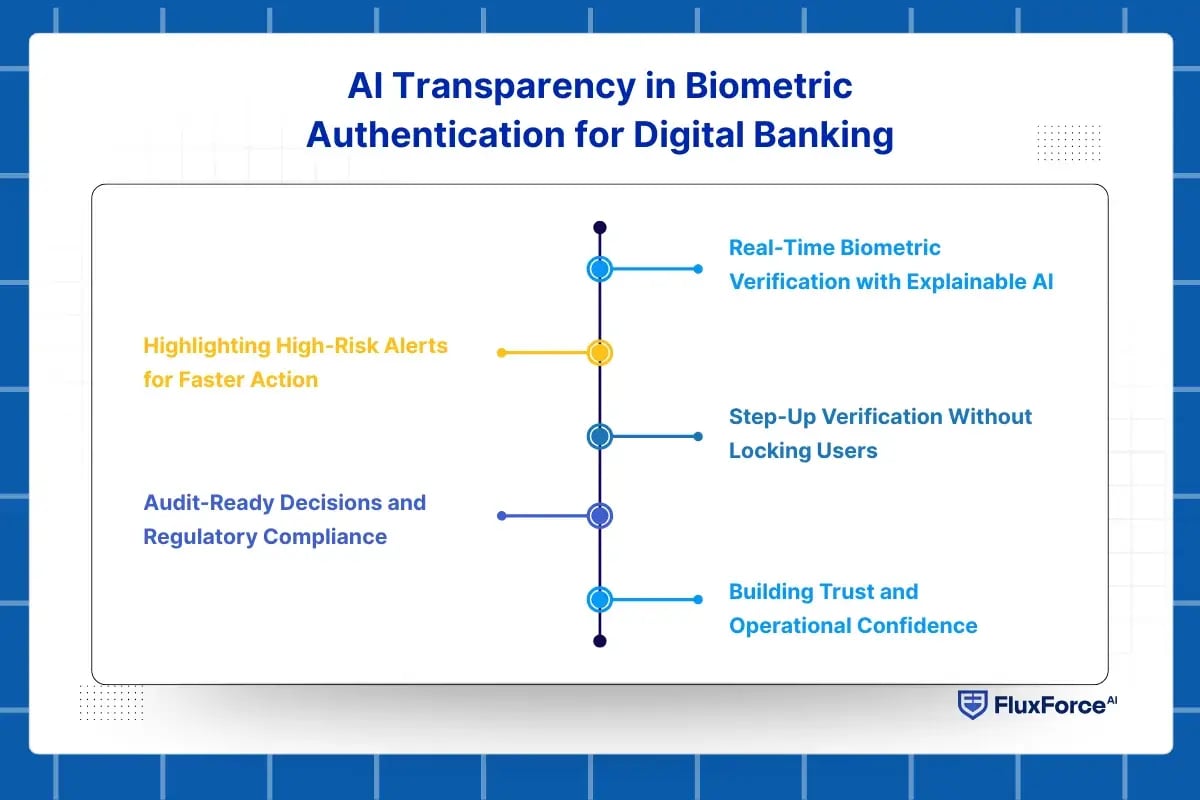

In digital banking security, every biometric login, whether by face, fingerprint, or voice, needs clear reasoning. Explainable AI in banking shows why an authentication passes or fails, improving biometric security, speeding decisions, and supporting compliance.

1. Real-Time Biometric Verification with Explainable AI

At a mid-size bank, analysts noticed that 15% of login attempts were falsely flagged due to device changes or minor behavioral shifts. Traditional AI models provided scores without explanations. With Explainable AI in banking, every failed authentication now shows drivers such as device ID mismatch, facial angle deviation, or typing pattern irregularities. Analysts immediately understand the reason, allowing faster, confident decisions while maintaining biometric security.

2. Highlighting High-Risk Alerts for Faster Action

AI-based authentication generates numerous alerts daily. XAI identifies the highest-risk events, such as multiple failed voice logins or rapid device switching. Analysts can prioritize these cases instead of reviewing low-risk attempts. A regional bank reported a 35% reduction in manual reviews after using explainable AI for biometric security, improving both AI fraud detection and operational efficiency.
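Prioritization of this kind can be sketched as picking the top few alerts by risk score, each carrying its explanation. The `Alert` fields, the scores, and the `top_alerts` helper below are hypothetical; only `heapq.nlargest` is standard library behavior.

```python
import heapq
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    alert_id: str
    risk_score: float
    reason: str  # short explanation attached by the model


def top_alerts(alerts, limit):
    """Return the `limit` highest-risk alerts, highest first."""
    return heapq.nlargest(limit, alerts, key=lambda alert: alert.risk_score)


# Illustrative queue; IDs and scores are made up.
queue = [
    Alert("A1", 0.22, "minor typing pattern change"),
    Alert("A2", 0.91, "multiple failed voice logins"),
    Alert("A3", 0.78, "rapid device switching"),
    Alert("A4", 0.15, "new browser version"),
]

for alert in top_alerts(queue, limit=2):
    print(alert.alert_id, alert.risk_score, alert.reason)
```

Analysts then work from the top of this list, and the attached reason tells them what to check first.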

3. Step-Up Verification Without Locking Users

In daily operations, explainable AI reduces friction. When an explanation points to a benign cause such as a device change, a flagged login can go through step-up verification instead of an account lock. Analysts no longer have to justify decisions without evidence. Clear explanations support faster approvals, stronger declines, and better escalation handling. This directly improves digital fraud management and lowers operational costs.

Explainable outputs also support training and quality reviews, which improves long-term accuracy.

4. Audit-Ready Decisions and Regulatory Compliance

Every biometric authentication outcome is logged with explanatory factors such as device ID, location, and behavioral anomalies. During audits, teams can demonstrate why each transaction was flagged, supporting regulatory compliance and creating audit-ready AI systems.
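As a rough illustration of logging an outcome together with its explanatory factors, the sketch below serializes one decision as a JSON record. The field names and the `build_audit_record` function are assumptions made for this example, not a regulatory schema.

```python
import json
from datetime import datetime, timezone


def build_audit_record(event_id, decision, factors, timestamp=None):
    """Serialize one authentication decision with its explanatory factors."""
    timestamp = timestamp or datetime.now(timezone.utc)
    record = {
        "event_id": event_id,
        "timestamp": timestamp.isoformat(),
        "decision": decision,
        "factors": factors,  # e.g. device ID, location, behavioral anomalies
    }
    # sort_keys gives a stable layout, which makes records easier to compare.
    return json.dumps(record, sort_keys=True)


line = build_audit_record(
    event_id="evt-001",  # hypothetical identifier
    decision="flagged",
    factors={
        "device_id_match": False,
        "location": "unrecognized",
        "behavioral_anomaly": True,
    },
)
print(line)
```

Writing the factors at decision time, rather than reconstructing them later, is what lets an auditor replay the reasoning for any single event.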

5. Building Trust and Operational Confidence

Transparency builds trust. Explainable AI in banking allows analysts, auditors, and even customers to see why a login or transaction is approved, escalated, or denied. Clear reasoning reduces false positives, accelerates authentication, and makes AI in banking more explainable and trustworthy, enabling biometric systems to scale safely.

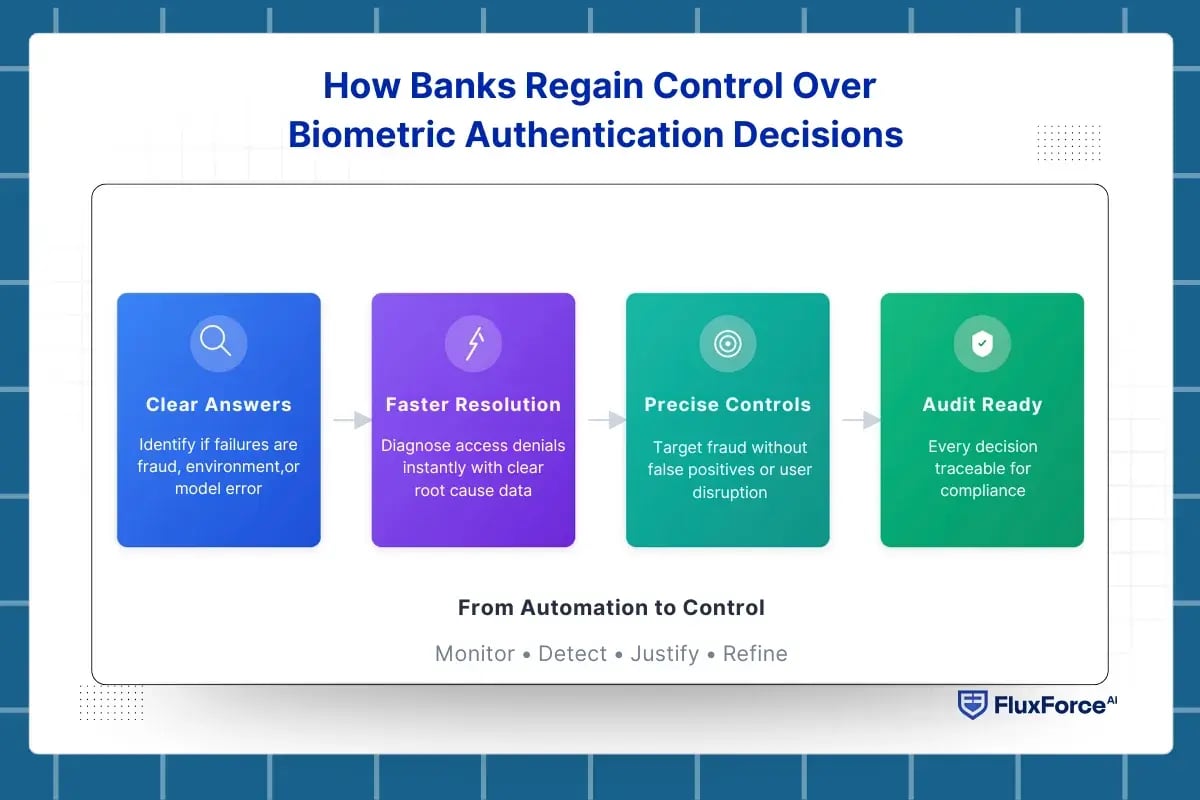

How Banks Regain Control Over Biometric Authentication Decisions

Banks do not adopt biometric authentication to experiment with AI. They adopt it to reduce fraud, speed access, and lower operational cost. When biometric decisions cannot be explained, those goals break down. Explainable AI in banking exists to fix that exact gap by turning biometric outcomes into decisions teams can act on, defend, and improve.

Clear Answers When Biometric Authentication Fails

From a user’s perspective, biometric authentication either works or it doesn’t. From a bank’s perspective, every failure triggers risk. Without explainability, teams cannot tell whether a failed login was caused by fraud, environmental issues, or model error.

Explainable AI for biometric authentication provides immediate clarity by exposing what influenced the decision. This allows support and risk teams to respond accurately instead of defaulting to account blocks or escalations that increase customer friction.

Faster Resolution for Customer Access Issues

Customer lockouts are one of the most expensive outcomes of biometric security failures. Explainable AI shortens resolution time by showing why access was denied. Instead of reopening investigations, teams can see whether confidence thresholds dropped, biometric quality degraded, or device behavior changed.
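A support tool could surface those three causes with a few direct checks, as in the sketch below. The inputs (a match score, a decision threshold, a sample quality score, and a known-device flag), the default quality floor, and the `diagnose_denial` function are all hypothetical.

```python
def diagnose_denial(match_score, threshold, sample_quality, known_device, min_quality=0.6):
    """List plain-language reasons that may explain a denied biometric login."""
    reasons = []
    if sample_quality < min_quality:
        reasons.append("biometric quality degraded")
    if match_score < threshold:
        reasons.append("match confidence below threshold")
    if not known_device:
        reasons.append("device behavior changed")
    return reasons


# Example: a blurry face capture from the customer's usual phone.
print(diagnose_denial(match_score=0.62, threshold=0.80, sample_quality=0.40, known_device=True))
```

A low-quality sample on a known device points to an environmental issue rather than fraud, so the agent can ask the customer to retry instead of opening an investigation.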

This directly improves digital banking security while protecting customer experience and reducing support workload.

Stronger Fraud Decisions Without Overcorrecting

Explainable AI helps analysts decide when to escalate a case or close it quickly. Clear explanations support better handoffs between frontline analysts and senior reviewers. This improves response time in real-time fraud detection and lowers backlog during high-volume periods. It also strengthens daily digital fraud management processes.

Decisions That Hold Up Under Audit and Review

When biometric authentication affects account access, regulators expect justification. Explainable AI ensures that each decision is traceable to clear inputs and logic. This removes ambiguity during audits and supports regulatory compliance without slowing authentication flows.

Banks gain audit-ready AI systems by design, not through manual reconstruction.

A Practical Shift from Automation to Control

The real value of explainable AI is not transparency alone. It is control. Banks gain the ability to monitor biometric performance, detect drift, justify outcomes, and refine thresholds continuously.
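Drift detection in particular lends itself to a small example. One common technique is the Population Stability Index (PSI), which compares the distribution of recent scores against a baseline. The sketch below is an assumption about how a team might apply it to biometric match scores; the bin edges, the sample data, and the function names are illustrative.

```python
import math


def bin_fractions(scores, edges, floor=1e-6):
    """Fraction of scores per bin; `floor` avoids log(0) for empty bins."""
    if not scores:
        raise ValueError("scores must not be empty")
    counts = [0] * (len(edges) - 1)
    for score in scores:
        for i in range(len(counts)):
            is_last = i == len(counts) - 1
            # Bins are half-open, except the last one, which includes its upper edge.
            if edges[i] <= score < edges[i + 1] or (is_last and score == edges[-1]):
                counts[i] += 1
                break
    return [max(count / len(scores), floor) for count in counts]


def psi(baseline, recent, edges):
    """Population Stability Index between two score samples."""
    expected = bin_fractions(baseline, edges)
    actual = bin_fractions(recent, edges)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


EDGES = [0.0, 0.25, 0.5, 0.75, 1.0]       # assumed bins for match scores
baseline_scores = [0.1, 0.3, 0.6, 0.9]    # made-up reference window
recent_scores = [0.1, 0.1, 0.1, 0.9]      # made-up current window

print(round(psi(baseline_scores, baseline_scores, EDGES), 4))
print(psi(baseline_scores, recent_scores, EDGES) > 0.25)
```

A commonly cited rule of thumb treats a PSI below 0.1 as stable and above 0.25 as a significant shift, though teams should validate such cutoffs against their own data before using them to trigger threshold reviews.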

This is how trustworthy AI in banking is built in practice, not theory.

Implementing Explainable AI in Live Cross-Channel Fraud Decisioning

Explainable AI delivers value only when it is embedded directly into fraud workflows. In production environments, models must support fast decisions, consistent outcomes, and clear accountability across channels. Implementation requires structure, not experimentation.

Embedding Explainability into Transaction Decisioning Systems

Before a suspicious activity report is filed, risk teams must separate genuine threats from normal behavior. Explainable AI reveals which risk indicators and behavioral patterns contributed to an alert.

This transparency helps investigators understand the basis of escalation and gives teams a practical, working sense of what warrants a suspicious activity report in day-to-day operations.

Applying Unified Logic Across All Channels

Cross-channel fraud detection fails when models behave differently across payment types. Explainable AI enables shared features and consistent risk logic across cards, digital banking, and alternative payment channels. This creates unified fraud detection without rebuilding systems for each channel.

The result is stronger omnichannel fraud prevention and fewer conflicting decisions.

Reducing Review Time and False Positives

Explainable AI highlights the strongest risk signals for each alert. Analysts focus on high-impact cases instead of reviewing low-risk noise. This reduces false positives and improves throughput in payment fraud detection operations.

Clear explanations also shorten training cycles for new analysts.

Creating Audit-Ready Fraud Decisions

Every fraud action must be traceable. Explainable AI records decision drivers alongside outcomes. This supports audits, regulatory reviews, and internal model governance without manual reconstruction. Teams maintain control while scaling AI-driven fraud prevention.

This directly strengthens AI model transparency, AI risk management, and long-term operational trust.

Conclusion

Biometric authentication is now the main gateway to digital banking. As its use grows, banks must ensure that every decision is clear, fair, and reliable. Speed alone is not enough if teams cannot explain why access was approved or denied.

Explainable AI in banking brings that clarity. It helps teams understand biometric decisions, separate real fraud from technical issues, and keep models accurate over time. It also makes these decisions easier to review during audits and customer disputes. As digital banking scales, trust depends on transparency. Banks that use explainable AI in biometric authentication can strengthen security while protecting customer experience. Those that rely on black-box systems risk losing control.
