
Introduction

Identity theft continues to rise despite widespread deployment of fraud detection systems. Financial institutions face billions in losses annually, with the Federal Trade Commission reporting over $32 billion in fraud-related losses in 2023.

The core problem is not detection accuracy but decision transparency. Systems flag suspicious accounts, block transactions, or reject onboarding attempts, yet fail to provide a clear explanation for why those decisions occur.

Without traceable logic, fraud teams cannot justify actions to regulators, resolve customer disputes efficiently, or refine detection models effectively.

Strengthening identity theft prevention requires building systems where every decision is auditable, explainable, and operationally accountable. This article explains why clear reasoning is central to identifying fraudulent activity and to keeping decision-making effective and accountable across financial systems.

Discover how clear decision logic strengthens identity fraud prevention with FluxForce AI’s explainability tools.

How Identity Fraud Detection Fails Inside Most Banks

In practice, most identity fraud detection software breaks down when a decision has to be explained. An account is flagged, a transaction blocked, or an onboarding attempt rejected, but tracing why that decision occurred across multiple platforms is nearly impossible.

Here's what creates the gap:

Fragmented decision paths across layers-

Most banks rely on multiple detection layers: legacy rule engines, vendor solutions, and AI/ML models. Each layer generates risk signals independently. According to a 2024 ACFE study, 61% of institutions cannot map the end-to-end logic of automated fraud decisions.
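One common way to make the end-to-end path mappable is to have every layer emit events tagged with a shared decision identifier, so the full path can be reassembled later. The sketch below is a minimal illustration under that assumption; the event fields (`decision_id`, `layer`, `ts`, `signal`) and layer names are hypothetical, not any vendor's schema.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class LayerEvent:
    # Hypothetical event emitted by one detection layer.
    decision_id: str  # shared ID propagated to every layer for one application or transaction
    layer: str        # e.g. "rule_engine", "vendor_kyc", "ml_model"
    ts: float         # emission time (seconds since epoch)
    signal: str       # what the layer concluded

def reconstruct_path(events: list[LayerEvent], decision_id: str) -> list[str]:
    """Return the time-ordered, human-readable path for one decision across all layers."""
    relevant = sorted((e for e in events if e.decision_id == decision_id), key=lambda e: e.ts)
    return [f"{e.layer}: {e.signal}" for e in relevant]

events = [
    LayerEvent("app-42", "ml_model", 3.0, "risk score 0.91"),
    LayerEvent("app-42", "rule_engine", 1.0, "velocity rule R12 passed"),
    LayerEvent("app-7", "rule_engine", 1.5, "velocity rule R12 breached"),
    LayerEvent("app-42", "vendor_kyc", 2.0, "document check: mismatch on DOB"),
]

for step in reconstruct_path(events, "app-42"):
    print(step)
```

The design choice that matters is propagating the identifier at the point of entry; without it, correlating a vendor verdict with a rule-engine hit after the fact is guesswork.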

Limited explainability in AI models-

Machine learning models in identity fraud detection often produce high-risk scores without revealing causal reasoning. Research from MIT Sloan (2023) shows that 38% of AI-driven fraud alerts cannot be fully interpreted by human analysts.

Outcome logging without context-

Transaction logs record timestamps, scores, and alert categories but omit the sequence of thresholds and rule applications. The lack of documented logic makes it difficult for teams to reproduce decisions during compliance reviews or internal audits.
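A way to close this gap is to log, alongside the outcome, the rule-set version, each threshold, and the exact input each rule saw, in evaluation order. A reviewer can then replay the decision and confirm it reproduces. The minimal sketch below assumes hypothetical rule names, features, and a simple "review"/"approve" outcome.

```python
import json

# Hypothetical rule set, versioned so old decisions can be replayed exactly.
# Each rule: (name, feature it reads, comparison, threshold); "lt"/"gt" = breach if below/above.
RULESET_VERSION = "2024-06-01"
RULES = [
    ("new_device", "device_age_days", "lt", 2),
    ("high_velocity", "apps_last_24h", "gt", 3),
]

def evaluate(inputs, rules=RULES, version=RULESET_VERSION):
    """Evaluate rules in order and return a self-describing decision record."""
    steps = []
    for name, feature, op, threshold in rules:
        value = inputs[feature]
        breached = value < threshold if op == "lt" else value > threshold
        steps.append({"rule": name, "feature": feature, "op": op,
                      "threshold": threshold, "value": value, "breached": breached})
    outcome = "review" if any(step["breached"] for step in steps) else "approve"
    return {"ruleset_version": version, "inputs": inputs, "steps": steps, "outcome": outcome}

def replay(record_json):
    """Re-run a logged decision from its own inputs and thresholds; True if it reproduces."""
    record = json.loads(record_json)
    rules = [(s["rule"], s["feature"], s["op"], s["threshold"]) for s in record["steps"]]
    return evaluate(record["inputs"], rules, record["ruleset_version"]) == record

record = evaluate({"device_age_days": 0, "apps_last_24h": 1})
logged = json.dumps(record)  # what would be written to the decision log
print(record["outcome"], replay(logged))  # review True
```

Because the record carries its own thresholds, a decision made before a later threshold change still replays against the values that were live at the time.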

Dependence on manual justification-

Analysts often reconstruct decisions post hoc to satisfy regulators or respond to customer disputes. Industry surveys indicate that institutions relying on manual explanations experience up to 40% longer resolution times for identity verification cases.

Why Is Explainability Important for Identity Theft Prevention?

Digital identity fraud losses grew from approximately $23 billion in 2022 to over $28 billion in 2024. With synthetic identities and AI-driven attacks driving the majority of losses in financial services, explainable fraud prevention systems become essential for:

1. Building customer trust and reducing abandonment

False positives during onboarding create measurable revenue loss. Aite-Novarica Group (2024) reports that U.S. financial institutions lose over $120 billion annually to accounts abandoned after unexplained blocks. Transparent decision logic lets teams give customers specific reasons, such as velocity anomalies, device inconsistencies, or geolocation mismatches.
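Given per-signal contributions from whatever explainability method the system uses, producing customer-facing reasons can be as simple as mapping the largest risk-increasing contributions to plain-language text. The signal names, messages, and the default of two reasons below are illustrative assumptions, not a regulatory template.

```python
# Hypothetical mapping from internal signal names to customer-facing reason text.
REASON_TEXT = {
    "velocity": "Multiple applications were submitted in a short period.",
    "device_mismatch": "The device used does not match your usual device.",
    "geo_mismatch": "The location of the request does not match your address on file.",
    "email_age": "The email address was created very recently.",
}

def top_reasons(contributions: dict[str, float], k: int = 2) -> list[str]:
    """Return text for the k mapped signals that pushed risk up the most (positive contributions only)."""
    risk_up = [(name, c) for name, c in contributions.items() if c > 0 and name in REASON_TEXT]
    risk_up.sort(key=lambda item: item[1], reverse=True)
    return [REASON_TEXT[name] for name, _ in risk_up[:k]]

contributions = {"velocity": 0.42, "geo_mismatch": 0.18, "device_mismatch": -0.05, "email_age": 0.07}
for reason in top_reasons(contributions):
    print("-", reason)
```

Signals that lowered risk (here `device_mismatch`) are deliberately excluded, since a reason for a block should only cite what pushed the decision toward blocking.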

2. Meeting compliance requirements across jurisdictions

Regulators require transparency in automated identity decisions. The EU AI Act classifies high-risk identity systems as requiring explainable logic. FFIEC 2024 guidance mandates demonstrable governance over AI-driven fraud decisions. U.S. fair lending regulations require clear rationale for adverse actions. Systems lacking explainability create audit gaps and regulatory risk.

3. Preventing fraud from AI-powered attacks

Generative AI and deepfake techniques have escalated attack complexity. Onfido 2024 reports a 3,500% increase in deepfake fraud attempts. Transparent decision logic helps fraud teams adapt to evolving attack patterns and identify which signals point to synthetic identities.

4. Improving model effectiveness through feedback

Explainable systems allow precise operational feedback. Research published in the Journal of Financial Crime (2024) shows that organizations with transparent AI reduced false positives by 32% within six months. Analysts can pinpoint whether alerts result from logic gaps, outdated rules, or anomalous patterns. Opaque systems slow model refinement because errors cannot be traced back to their source.
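Pinpointing where alerts go wrong usually starts with joining each alert's triggering rule to the analyst's final disposition. The sketch below computes, per rule, the share of its alerts that analysts closed as false positives (one minus the rule's precision). The rule IDs and disposition labels are hypothetical.

```python
from collections import defaultdict

# Each tuple: (rule that triggered the alert, analyst disposition).
alerts = [
    ("R12_velocity", "false_positive"),
    ("R12_velocity", "confirmed_fraud"),
    ("R12_velocity", "false_positive"),
    ("R30_geo", "confirmed_fraud"),
    ("R30_geo", "confirmed_fraud"),
    ("R41_email_age", "false_positive"),
]

def false_positive_share_by_rule(rows):
    """Fraction of each rule's alerts that analysts dispositioned as false positives."""
    totals = defaultdict(int)
    false_pos = defaultdict(int)
    for rule, disposition in rows:
        totals[rule] += 1
        if disposition == "false_positive":
            false_pos[rule] += 1
    return {rule: false_pos[rule] / totals[rule] for rule in totals}

# Sort worst-first so the noisiest rules are reviewed before the others.
shares = false_positive_share_by_rule(alerts)
for rule, share in sorted(shares.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{rule}: {share:.0%} false positives")
```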

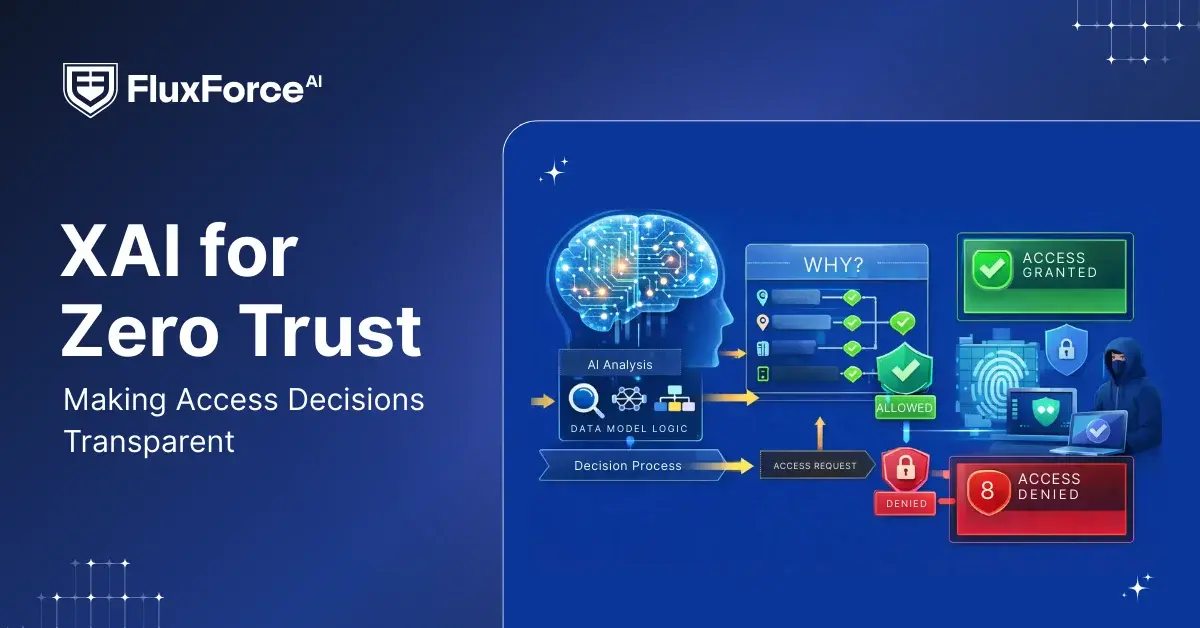

Explainable AI vs. Rule-Based Fraud Detection: The Major Gap

For most banks, fraud prevention technology is limited to static rule-based systems that apply fixed thresholds and conditions. Explainable AI, by contrast, aims to make every decision traceable, auditable, and operationally actionable.

How Clear Decision Logic Strengthens Fraud Risk Management

Managing financial fraud risk demands speed, accountability, and defensibility. Clear decision logic enables rapid action by:

Providing teams with diagnostic context-

Fraud analysts require visibility into why alerts triggered. Clear decision logic surfaces breached thresholds, anomalous behaviour windows, and conflicting identity signals. ACFE research shows documented frameworks reduce investigation time by over 40%.
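Conflicting identity signals are one kind of diagnostic context that is simple to surface explicitly: compare what the applicant declared against what was observed, and list every disagreement by name. The field names and the sources of the observed values in this sketch are hypothetical.

```python
def identity_conflicts(declared: dict, observed: dict) -> list[str]:
    """List each attribute where the declared value disagrees with an observed one."""
    conflicts = []
    for field, declared_value in declared.items():
        observed_value = observed.get(field)
        # Attributes that were not observed are skipped rather than treated as conflicts.
        if observed_value is not None and observed_value != declared_value:
            conflicts.append(f"{field}: declared {declared_value!r}, observed {observed_value!r}")
    return conflicts

declared = {"country": "US", "phone_country": "US", "date_of_birth": "1990-04-12"}
observed = {"country": "RO", "phone_country": "US"}  # e.g. from IP geolocation and a carrier lookup
print(identity_conflicts(declared, observed))
# ["country: declared 'US', observed 'RO'"]
```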

Enabling threshold adjustments without engineering dependency-

Fraud patterns shift regionally and seasonally. Transparent logic allows risk teams to modify parameters without development cycles. Federal Reserve data shows business-controlled adjustments respond to new fraud vectors 60% faster.
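A common way to let risk teams adjust parameters without a code release is to keep thresholds in versioned configuration that the decision service reads at runtime, with an owner recorded for each value. The JSON layout, parameter names, and owner labels below are hypothetical.

```python
import json

# In practice this would live in a reviewed, versioned config store rather than in code.
CONFIG_JSON = """
{
  "version": 7,
  "thresholds": {
    "apps_last_24h_max": {"value": 3, "owner": "fraud-ops-emea"},
    "device_age_days_min": {"value": 2, "owner": "fraud-ops-emea"}
  }
}
"""

def load_thresholds(raw: str) -> dict[str, float]:
    """Extract threshold values from the config document."""
    config = json.loads(raw)
    return {name: entry["value"] for name, entry in config["thresholds"].items()}

def is_high_velocity(apps_last_24h: int, thresholds: dict[str, float]) -> bool:
    return apps_last_24h > thresholds["apps_last_24h_max"]

thresholds = load_thresholds(CONFIG_JSON)
print(is_high_velocity(4, thresholds))  # True

# A seasonal adjustment becomes a config change with a new version, not a code change.
holiday = json.loads(CONFIG_JSON)
holiday["thresholds"]["apps_last_24h_max"]["value"] = 5
holiday["version"] = 8
print(is_high_velocity(4, load_thresholds(json.dumps(holiday))))  # False
```

Keeping the `owner` next to each value also supports the governance point below: every threshold has a named team accountable for it.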

Reducing escalations-

Unclear outcomes force escalation to senior investigators. Clear logic enables first-line resolution through traceable reasoning. LexisNexis research shows organizations reduce escalation rates by nearly 40% through transparent fraud decisioning.

Strengthening governance accountability-

Clear ownership of thresholds and overrides aligns responsibility across fraud operations. Governance improves when decisions remain explainable under scrutiny from regulators and internal committees.

Best Practices for Identity Fraud Prevention Amid AI Spoofs and Synthetic IDs

Even advanced fraud prevention software remains vulnerable to increasingly sophisticated AI-generated synthetic identities and automated account takeovers. The steps below provide practical ways to strengthen identity theft prevention:

1. Build multi-layered verification

Relying on a single check leaves systems vulnerable. Combine behavioural biometrics, device intelligence, document verification, and network signals into clear decision paths. Document how each signal contributes so fraud cannot bypass partial defences.
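One way to turn several signal families into a documented decision path is a sequence of ordered gates, each of which records what it checked before the next one runs. The signal names, score ranges, cut-offs, and outcomes (`reject`, `step_up`, `review`, `approve`) in this sketch are illustrative assumptions.

```python
def decide(signals: dict) -> tuple[str, list[str]]:
    """Walk ordered verification gates; return the outcome and the path taken."""
    path = []

    # Gate 1: document verification is a hard requirement.
    path.append(f"document_verified={signals['document_verified']}")
    if not signals["document_verified"]:
        return "reject", path

    # Gate 2: device intelligence and behavioural biometrics both risky -> step-up authentication.
    device_risky = signals["device_risk"] >= 0.7
    behaviour_risky = signals["biometric_match"] < 0.5
    path.append(f"device_risky={device_risky}, behaviour_risky={behaviour_risky}")
    if device_risky and behaviour_risky:
        return "step_up", path

    # Gate 3: network links to known fraud send the case to an analyst.
    path.append(f"linked_to_known_fraud={signals['linked_to_known_fraud']}")
    if signals["linked_to_known_fraud"]:
        return "review", path

    return "approve", path

outcome, path = decide({"document_verified": True, "device_risk": 0.8,
                        "biometric_match": 0.3, "linked_to_known_fraud": False})
print(outcome)  # step_up
print(path)
```

Because each gate appends to `path` before deciding, the record shows not only which check fired but which checks were passed on the way, so an attacker who defeats one layer still leaves a trail through the rest.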

2. Use explainability tools to clarify decisions

Tools such as SHAP and LIME help translate AI predictions into understandable insights. Analysts can see why an application or transaction was flagged, validate decisions against business rules, and provide explanations for customers or regulators.
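To show what such an explanation contains without depending on a specific library, the sketch below relies on a known property of SHAP: for a linear model (here, a logistic regression scored in log-odds) with independent features, each feature's SHAP value is its weight times its deviation from the baseline mean, and the values sum to the gap between the applicant's score and the baseline score. The weights, feature names, and baseline means are made up for illustration; for tree ensembles or neural networks the `shap` library itself would be the usual choice.

```python
import math

# Hypothetical logistic-regression fraud model (weights act on log-odds).
WEIGHTS = {"apps_last_24h": 0.8, "device_age_days": -0.01, "geo_mismatch": 1.5}
BIAS = -4.0
# Feature means over a reference population, used as the SHAP baseline.
BASELINE = {"apps_last_24h": 1.2, "device_age_days": 180.0, "geo_mismatch": 0.1}

def log_odds(x: dict) -> float:
    return BIAS + sum(WEIGHTS[f] * x[f] for f in WEIGHTS)

def linear_shap(x: dict) -> dict:
    """SHAP values for a linear model with independent features: w_i * (x_i - E[x_i])."""
    return {f: WEIGHTS[f] * (x[f] - BASELINE[f]) for f in WEIGHTS}

applicant = {"apps_last_24h": 5, "device_age_days": 1.0, "geo_mismatch": 1}
phi = linear_shap(applicant)
for feature, value in sorted(phi.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{feature:>16}: {value:+.2f} log-odds")

probability = 1 / (1 + math.exp(-log_odds(applicant)))
print(f"fraud probability: {probability:.2f}")
```

The additivity check (contributions summing to the score gap) is what lets an analyst validate an explanation against business rules: every unit of risk is accounted for by a named feature.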

3. Test systems with adversarial simulations

Simulate attacks such as synthetic identity creation, deepfake account takeovers, and credential-stuffing campaigns. Quarterly red-team exercises reveal weaknesses in decision paths and strengthen the system before real attackers exploit gaps.
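Part of a red-team exercise can be automated as a table of simulated attack scenarios replayed against the current decision logic, reporting the scenarios that slipped through. The scenarios, their feature values, and the stand-in `current_policy` function are hypothetical; a real exercise would call the production decision service.

```python
def current_policy(app: dict) -> str:
    """Hypothetical stand-in for the live decision logic."""
    if app["apps_last_24h"] > 3 or app["device_age_days"] < 2:
        return "review"
    return "approve"

# Simulated attacks, each with the outcome the fraud team expects the system to reach.
SCENARIOS = [
    {"name": "credential stuffing burst", "apps_last_24h": 40, "device_age_days": 0, "expected": "review"},
    {"name": "aged synthetic identity", "apps_last_24h": 1, "device_age_days": 90, "expected": "review"},
    {"name": "deepfake takeover on fresh device", "apps_last_24h": 1, "device_age_days": 0, "expected": "review"},
]

def undetected(scenarios, policy):
    """Return the names of scenarios where the policy did not reach the expected outcome."""
    return [s["name"] for s in scenarios if policy(s) != s["expected"]]

print(undetected(SCENARIOS, current_policy))  # ['aged synthetic identity']
```

The gap it surfaces, a synthetic identity "aged" to look ordinary on velocity and device checks, is the kind of weakness the exercise is meant to find before an attacker does.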

4. Share and integrate cross-institution intelligence

Fraud often spans multiple organizations. Integrate consortium data and industry threat intelligence to identify patterns invisible to a single institution. Keep documentation transparent so regulators can see how external data informed decisions.
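Consortium sharing usually has to avoid exchanging raw personal data. One simple pattern is for members to share keyed hashes (HMAC) of normalised identifiers, so matches can be found without revealing the identifiers themselves, and to keep the provenance of every external hit for the decision log. The shared key, the normalisation rule, and the record fields here are assumptions; production consortia typically add stronger privacy protections and formal data-sharing agreements.

```python
import hashlib
import hmac
from typing import Optional

# Hypothetical key distributed to consortium members out of band.
CONSORTIUM_KEY = b"example-shared-key"

def token(identifier: str) -> str:
    """Keyed SHA-256 hash of a normalised identifier (trimmed, lower-cased)."""
    normalised = identifier.strip().lower()
    return hmac.new(CONSORTIUM_KEY, normalised.encode("utf-8"), hashlib.sha256).hexdigest()

# Tokens another member reported as linked to confirmed fraud, with provenance.
shared_fraud_tokens = {
    token("mule.account@example.com"): {"source": "member-B", "reported": "2024-05-02"},
}

def consortium_check(email: str) -> Optional[dict]:
    """Return provenance for a consortium match, so the decision record can cite it."""
    return shared_fraud_tokens.get(token(email))

print(consortium_check("  Mule.Account@Example.com "))
print(consortium_check("someone.else@example.com"))  # None
```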

5. Establish analyst feedback loops

Encourage analysts to record false positives, missed cases, and suggestions for rule updates. Feeding these insights back into the system improves detection, reduces errors, and ensures decision logic evolves as fraud tactics change.
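Once analysts label both false positives and missed cases, that feedback can drive threshold updates directly. The sketch below picks, from labelled model scores, the highest cut-off that still catches a target share of confirmed fraud, and reports how many false positives that cut-off would produce. The scores, labels, and 80% recall target are illustrative assumptions.

```python
def tune_threshold(feedback, min_recall=0.8):
    """Return (threshold, recall, false_positives) for the highest cut-off meeting min_recall.

    feedback: (model_score, is_fraud) pairs from analyst dispositions, including
    missed cases (confirmed fraud that scored below the old threshold).
    """
    total_fraud = sum(1 for _, is_fraud in feedback if is_fraud)
    if total_fraud == 0:
        return None
    # Candidate cut-offs are the observed scores, tried from highest to lowest.
    for threshold in sorted({score for score, _ in feedback}, reverse=True):
        flagged = [(score, is_fraud) for score, is_fraud in feedback if score >= threshold]
        recall = sum(1 for _, is_fraud in flagged if is_fraud) / total_fraud
        if recall >= min_recall:
            false_positives = sum(1 for _, is_fraud in flagged if not is_fraud)
            return threshold, recall, false_positives
    return None

feedback = [
    (0.95, True), (0.90, False), (0.85, True), (0.70, False),
    (0.65, True), (0.60, False), (0.40, True),  # 0.40 was a missed case under the old rule
    (0.30, False), (0.20, False),
]
print(tune_threshold(feedback))  # (0.4, 1.0, 3)
```

Recording missed cases matters here: without the 0.40 fraud label, the tuning would never see that the old cut-off was too high.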


Conclusion

Identity fraud prevention fails when decisions lack clarity, not accuracy. Fraud prevention technology detects risk at scale, yet trust collapses when outcomes cannot be explained. Clear decision logic restores control across identity fraud detection, compliance, and customer experience.

Organizations facing digital identity fraud require more than advanced models. Effective identity theft prevention demands decisions that remain traceable, defensible, and auditable. In an era of AI-powered fraud, the strongest advantage lies in clarity at the moment decisions occur.

Clear decision logic transforms identity fraud prevention from reactive detection into accountable risk management.
