
Introduction

Accurately identifying entities during anti-money laundering (AML) checks remains a challenge for financial institutions. Even well-established AML programs struggle to separate genuine risk from legitimate customers who share similar identifiers.

Traditional entity resolution methods often generate false positives, flagging lawful customers or transactions as suspicious. These errors slow investigations, raise compliance costs, and create avoidable regulatory risk. At scale, they reduce operational efficiency and weaken analyst confidence.

Explainable entity resolution helps by showing why two records are treated as a match. Instead of relying on opaque scores, the system highlights the specific data points used in the decision. Analysts can see which attributes mattered and how much each one contributed.

In this article, we explore how explainable entity resolution reduces false matches and strengthens AML processes.

Explainable Entity Resolution reduces false matches in AML, ensuring accuracy and compliance with FluxForce AI

The Hidden Cost of False Matches in AML

In 2024, 95% of the alerts produced by traditional AML programs were false matches, and organizations spent an average of two hours filing each Suspicious Activity Report (SAR). At scale, these false matches create serious challenges for operations, costs, compliance, and customer experience.

1. Operational Burden

False matches create unnecessary work for compliance teams:

- Analysts must investigate alerts that have no real risk.

- Manual reviews delay the assessment of genuine suspicious activity.

- Repeated checks across multiple systems increase fatigue and reduce efficiency.

In large institutions, even a small false-positive rate can generate hundreds of unnecessary case reviews every day.
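A back-of-the-envelope sketch shows how a small rate turns into a large daily queue. The screening volume, false-positive rate, and minutes per review used below are hypothetical figures chosen for illustration, not benchmarks.

```python
def daily_false_reviews(daily_screenings: int, false_positive_rate: float) -> int:
    """Estimate how many alerts per day are false positives needing manual review."""
    return round(daily_screenings * false_positive_rate)


def analyst_hours(reviews: int, minutes_per_review: float) -> float:
    """Convert a number of manual reviews into analyst hours."""
    return reviews * minutes_per_review / 60


if __name__ == "__main__":
    # Hypothetical inputs: 1,000,000 screenings per day, a 0.05% false-positive
    # rate, and 15 minutes of analyst time per review.
    reviews = daily_false_reviews(1_000_000, 0.0005)
    hours = analyst_hours(reviews, 15)
    print(f"{reviews} false-positive reviews/day, about {hours:.0f} analyst hours")
```

Under these assumptions, a 0.05% false-positive rate already yields 500 reviews and roughly 125 analyst hours per day, before any genuine alerts are counted.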

2. Increased Compliance Costs

Handling false alerts drives up operational costs:

- Additional staff is needed to investigate alerts that pose no real risk.

- Workflow efficiency drops because analysts spend time on false cases.

- Systems and rules must be frequently tuned to manage alert overload.

3. Regulatory and Audit Risk

False matches also create regulatory exposure:

- Alert backlogs delay the review of genuine suspicious activity and can delay SAR filings.

- Alerts closed without documented reasoning are difficult to defend during audits and regulatory reviews.

- Inconsistent handling of similar alerts weakens confidence in the institution's AML controls.

4. Customer Impact

Legitimate customers may experience delays or additional verification requests, harming trust and satisfaction. A series of false matches could push clients toward competitors with more efficient compliance screening.

Explainability as the missing link in AML accuracy

Traditional entity resolution in AML often operates as a “black box.” With explainability in place, each match decision becomes transparent and interpretable.

How Explainability Helps:

- Visible reasoning: Instead of a bare “suspicious” label, explainability lets analysts see which attributes influenced the match, such as shared tax IDs, similar addresses, or linked transactions.

- Prioritized alerts: AML systems with explainable scoring let teams address high-confidence matches first while reviewing lower-risk cases efficiently.

- Data quality improvements: Explainable systems highlight data issues such as outdated information, misspellings, or incomplete customer profiles that often lead to false matches. When the system shows specific data points, teams can correct discrepancies and prevent repeat false positives.

By making entity resolution explainable, financial institutions improve both operational efficiency and compliance confidence.
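As a minimal sketch of visible reasoning and prioritized alerts working together, each alert below carries a confidence score plus human-readable reasons, and the queue is split at a confidence cut-off. The `Alert` class, the 0.85 cut-off, and the sample reasons are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Alert:
    alert_id: str
    score: float  # match confidence in [0, 1]
    reasons: list[str] = field(default_factory=list)  # attributes that drove the match


def triage(alerts: list[Alert], high_cutoff: float = 0.85) -> tuple[list[Alert], list[Alert]]:
    """Sort alerts by confidence and split them into high- and lower-confidence queues."""
    ordered = sorted(alerts, key=lambda a: a.score, reverse=True)
    high = [a for a in ordered if a.score >= high_cutoff]
    lower = [a for a in ordered if a.score < high_cutoff]
    return high, lower


if __name__ == "__main__":
    queue = [
        Alert("A-102", 0.62, ["similar name", "address misspelled in one record"]),
        Alert("A-101", 0.97, ["shared tax ID", "same address"]),
        Alert("A-103", 0.88, ["linked transactions", "similar name"]),
    ]
    high, lower = triage(queue)
    for label, group in (("HIGH", high), ("LOWER", lower)):
        for alert in group:
            print(label, alert.alert_id, f"{alert.score:.2f}", "; ".join(alert.reasons))
```

The reason "address misspelled in one record" illustrates how an explanation can point to a data-quality issue rather than genuine risk, which is exactly what a team would want to correct upstream.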

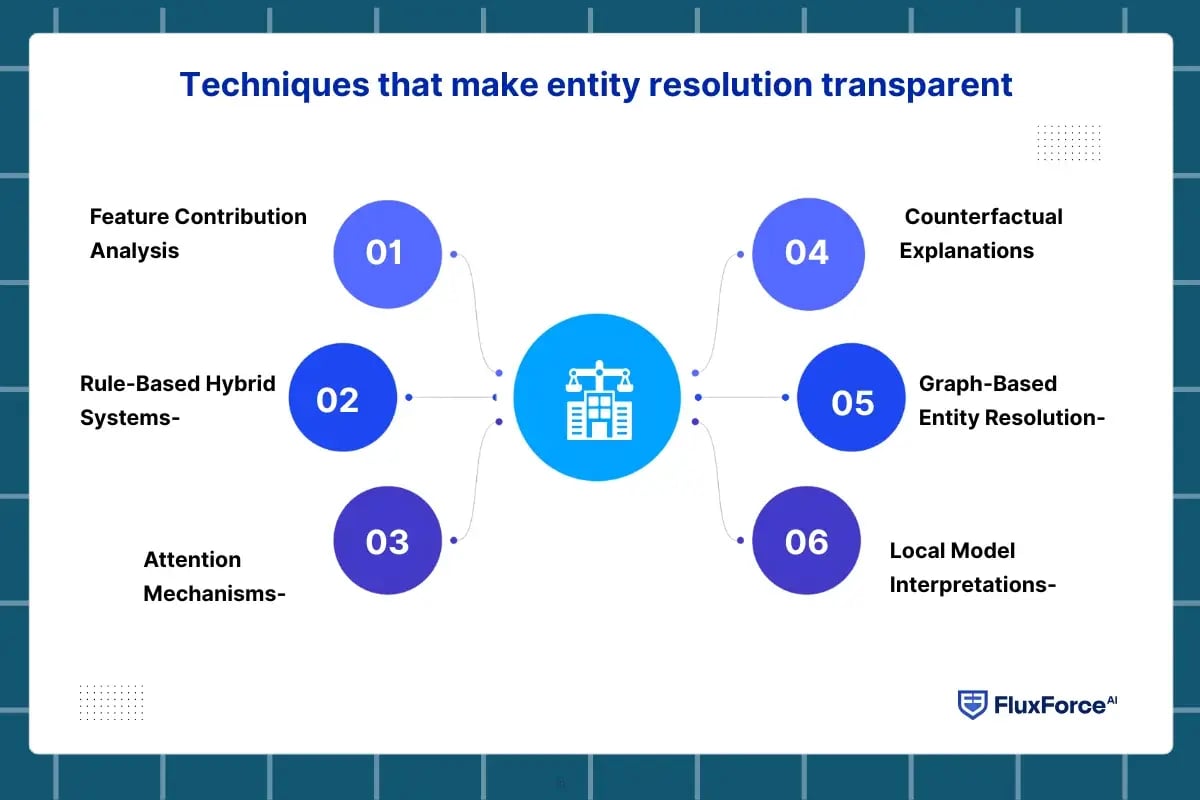

Techniques that make entity resolution transparent

Several approaches enable transparency in entity matching for AML compliance. Each technique addresses specific aspects of the explainability challenge.

1. Feature Contribution Analysis- This technique ranks data attributes by their influence on a match decision.

The system may show that address similarity contributed 55 percent to the score, name similarity added 30 percent, and date-of-birth proximity added 15 percent. Analysts immediately understand both the strength and balance of evidence supporting the match.
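For an additive (linear) match score, each attribute's contribution is its weight times its similarity, so the breakdown is exact. The weights and similarity values below are hypothetical, chosen so the shares reproduce the 55/30/15 split in the example above; non-linear models typically need attribution methods such as SHAP instead.

```python
# Hypothetical attribute weights for an additive match score (illustrative only).
WEIGHTS = {"address": 0.5, "name": 0.3, "dob": 0.2}


def contributions(similarities: dict[str, float]) -> dict[str, float]:
    """Return each attribute's contribution (weight * similarity) to the match score."""
    return {attr: WEIGHTS[attr] * similarities[attr] for attr in WEIGHTS}


def contribution_shares(similarities: dict[str, float]) -> dict[str, int]:
    """Express each contribution as a rounded percentage of the total score."""
    contrib = contributions(similarities)
    total = sum(contrib.values())
    if total == 0:
        return {attr: 0 for attr in contrib}
    return {attr: round(100 * c / total) for attr, c in contrib.items()}


if __name__ == "__main__":
    sims = {"address": 0.88, "name": 0.8, "dob": 0.6}  # hypothetical similarities
    score = sum(contributions(sims).values())
    print(f"match score: {score:.2f}")
    for attr, pct in sorted(contribution_shares(sims).items(), key=lambda kv: -kv[1]):
        print(f"{attr:>8}: {pct}%")
```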

2. Rule-Based Hybrid Systems- Hybrid systems combine machine learning with explicit business rules.

AI identifies complex patterns across large datasets, while deterministic rules enforce compliance logic. For example, identical tax identifiers always trigger a strong match, and records from different jurisdictions without shared identifiers do not match without supporting signals. This structure improves trust while preserving detection accuracy.
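A minimal sketch of the hybrid pattern, assuming the model score is computed elsewhere and passed in: deterministic rules are checked first, the model decides only when no rule applies, and every outcome returns its reason. The `Record` fields, the 0.8 threshold, and the `supporting_signals` parameter are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Record:
    name: str
    jurisdiction: str
    tax_id: Optional[str] = None


def hybrid_decision(
    a: Record,
    b: Record,
    ml_score: float,
    supporting_signals: Optional[list[str]] = None,
    threshold: float = 0.8,
) -> tuple[str, str]:
    """Apply deterministic rules first, then fall back to the model score.

    Returns (decision, reason) so every outcome carries its own explanation.
    """
    # Rule 1: identical tax identifiers always produce a strong match.
    if a.tax_id and a.tax_id == b.tax_id:
        return "strong_match", "rule: identical tax ID"
    # Rule 2: cross-jurisdiction records with no shared identifier need supporting signals.
    if a.jurisdiction != b.jurisdiction and not supporting_signals:
        return "no_match", "rule: different jurisdictions, no shared identifier or supporting signal"
    # Otherwise the ML similarity score decides.
    if ml_score >= threshold:
        return "match", f"model score {ml_score:.2f} >= {threshold}"
    return "no_match", f"model score {ml_score:.2f} < {threshold}"


if __name__ == "__main__":
    print(hybrid_decision(Record("Jon Smith", "UK", "123"), Record("John Smith", "US", "123"), 0.4))
    print(hybrid_decision(Record("Jon Smith", "UK"), Record("John Smith", "US"), 0.95))
```

Returning the reason alongside the decision keeps rule-driven and model-driven outcomes equally explainable to an analyst.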

3. Attention Mechanisms- Attention mechanisms reveal which input fields influenced the model most.

When screening names against sanctions lists, the system may focus on surnames and patronymics while discounting common initials. Analysts can see exactly which attributes drove similarity judgments.
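The weighting step inside an attention mechanism can be sketched as scaled dot-product attention: a query vector is compared with each token's key vector, and a softmax turns the scores into weights that sum to 1. The key and query vectors below are hand-picked and hypothetical; in a trained model they come from learned projections, and attention weights are best read as an indicator of focus rather than a complete causal explanation.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query: list[float], keys: dict[str, list[float]]) -> dict[str, float]:
    """Scaled dot-product attention weights of one query over a set of token keys."""
    d = len(query)
    tokens = list(keys)
    scores = [sum(q * k for q, k in zip(query, keys[t])) / math.sqrt(d) for t in tokens]
    return dict(zip(tokens, softmax(scores)))


# Hypothetical 3-dimensional key vectors for the tokens of a screened name.
KEYS = {
    "A.": [0.1, 0.0, 0.1],         # initial: weak, common signal
    "Ivanovich": [0.8, 0.6, 0.2],  # patronymic
    "Petrov": [0.9, 0.7, 0.3],     # surname
}
QUERY = [3.0, 2.4, 0.6]  # hypothetical query vector for the sanctions-list entry

if __name__ == "__main__":
    for token, weight in attention_weights(QUERY, KEYS).items():
        print(f"{token:>10}: {weight:.2f}")
```

With these illustrative vectors, the initial receives the smallest weight and the surname the largest, mirroring the behavior described above.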

4. Counterfactual Explanations- Counterfactual explanations address a critical investigative question: what would need to change for this match to be rejected?

The system may indicate that increasing the birth-year difference beyond three years would drop confidence below the alert threshold. Analysts can determine whether a match is robust or marginal.
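A counterfactual of this kind can be found by searching for the smallest change to one attribute that pushes the score below the alert threshold. The two-attribute scoring function, its weights, the 0.2-per-year decay, and the 0.7 threshold below are all hypothetical, tuned so the crossover falls just beyond three years as in the example.

```python
from typing import Optional

NAME_WEIGHT = 0.6  # hypothetical weights for a two-attribute match score
DOB_WEIGHT = 0.4


def match_score(name_sim: float, birth_year_gap: int) -> float:
    """Hypothetical score: birth-year similarity falls by 0.2 per year of difference."""
    dob_sim = max(0.0, 1.0 - 0.2 * birth_year_gap)
    return NAME_WEIGHT * name_sim + DOB_WEIGHT * dob_sim


def counterfactual_gap(
    name_sim: float, current_gap: int, threshold: float, max_gap: int = 30
) -> Optional[int]:
    """Smallest birth-year gap (>= current_gap) at which the score drops below threshold.

    Returns None if no gap up to max_gap would reject the match (a robust match).
    """
    for gap in range(current_gap, max_gap + 1):
        if match_score(name_sim, gap) < threshold:
            return gap
    return None


if __name__ == "__main__":
    gap = counterfactual_gap(name_sim=0.95, current_gap=1, threshold=0.7)
    print(f"match would be rejected at a birth-year gap of {gap} years (currently 1)")
```

A `None` result means birth-year changes alone cannot reject the match, which signals a robust match rather than a marginal one.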

5. Graph-Based Entity Resolution- Graph-based entity resolution systems for AML visualize relationships between entities.

Rather than reviewing records in isolation, analysts see networks of shared addresses, devices, IPs, corporate officers, or transaction counterparties. When multiple connections converge, the relationship becomes immediately clear. This approach uncovers hidden links while reducing coincidental matches.
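A minimal graph sketch: records become nodes, each shared attribute value becomes evidence on the edge between two records, and union-find groups connected records into candidate entities. The records are hypothetical, and the `min_shared` parameter is an illustrative way to require converging connections before linking, so a single coincidental overlap (such as one shared address) does not merge entities on its own.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical customer records with linkable attributes.
RECORDS = {
    "R1": {"address": "12 High St", "device": "dev-A", "ip": "10.0.0.5"},
    "R2": {"address": "12 High St", "device": "dev-B", "ip": "10.0.0.9"},
    "R3": {"address": "9 Low Rd", "device": "dev-B", "ip": "10.0.0.9"},
    "R4": {"address": "77 Park Ave", "device": "dev-Z", "ip": "10.0.0.77"},
}


def build_links(records: dict[str, dict[str, str]]) -> dict[tuple[str, str], list[str]]:
    """Map each pair of record IDs to the attribute values they share (the edge evidence)."""
    by_value: dict[tuple[str, str], list[str]] = defaultdict(list)
    for rid, attrs in records.items():
        for attr, value in attrs.items():
            by_value[(attr, value)].append(rid)
    links: dict[tuple[str, str], list[str]] = defaultdict(list)
    for (attr, value), rids in by_value.items():
        for a, b in combinations(sorted(rids), 2):
            links[(a, b)].append(f"{attr}={value}")
    return dict(links)


def clusters(records, links, min_shared: int = 1) -> list[set[str]]:
    """Group records joined by edges with at least `min_shared` pieces of evidence (union-find)."""
    parent = {rid: rid for rid in records}

    def find(x: str) -> str:
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for (a, b), evidence in links.items():
        if len(evidence) >= min_shared:
            parent[find(a)] = find(b)

    groups: dict[str, set[str]] = defaultdict(set)
    for rid in records:
        groups[find(rid)].add(rid)
    return sorted(groups.values(), key=sorted)


if __name__ == "__main__":
    links = build_links(RECORDS)
    for pair, evidence in links.items():
        print(pair, evidence)
    print("any shared link:", clusters(RECORDS, links, min_shared=1))
    print("2+ shared links:", clusters(RECORDS, links, min_shared=2))
```

Because each edge stores its evidence, an analyst can see exactly why two records were connected, not just that they were.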

6. Local Model Interpretations- Local model interpretations test small variations around a specific decision.

The system adjusts input features and observes how match probability changes. For AML alerts, this reveals whether confidence depends on strong signals or fragile similarities that often lead to false positives.
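The idea can be sketched as a one-feature-at-a-time perturbation check: weaken each input slightly and watch the match probability. This is a simplified sensitivity test in the spirit of local interpretation methods such as LIME, not LIME itself (which fits a surrogate model to many random perturbations). The logistic model, its coefficients, `delta`, and the 0.5 threshold are hypothetical.

```python
import math


def match_probability(f: dict[str, float]) -> float:
    """Hypothetical logistic match model over three similarity features in [0, 1]."""
    z = -4.0 + 3.0 * f["name_sim"] + 2.0 * f["address_sim"] + 1.5 * f["dob_sim"]
    return 1.0 / (1.0 + math.exp(-z))


def local_sensitivity(model, features: dict[str, float], delta: float = 0.1, threshold: float = 0.5):
    """Weaken one feature at a time by `delta` and record how the match probability responds.

    Returns the base probability, the probability drop per feature, and the features
    whose small perturbation alone would push the decision below the threshold.
    """
    base = model(features)
    drops: dict[str, float] = {}
    flips: list[str] = []
    for name, value in features.items():
        perturbed = {**features, name: max(0.0, value - delta)}
        p = model(perturbed)
        drops[name] = base - p
        if base >= threshold > p:
            flips.append(name)
    return base, drops, flips


if __name__ == "__main__":
    strong = {"name_sim": 0.95, "address_sim": 0.9, "dob_sim": 0.9}
    fragile = {"name_sim": 0.9, "address_sim": 0.4, "dob_sim": 0.5}
    for label, feats in (("strong", strong), ("fragile", fragile)):
        base, drops, flips = local_sensitivity(match_probability, feats)
        print(label, f"p={base:.2f}", {k: round(v, 3) for k, v in drops.items()}, "flips:", flips)
```

In the fragile case, a small drop in name similarity alone flips the decision, the kind of marginal alert that often turns out to be a false positive.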

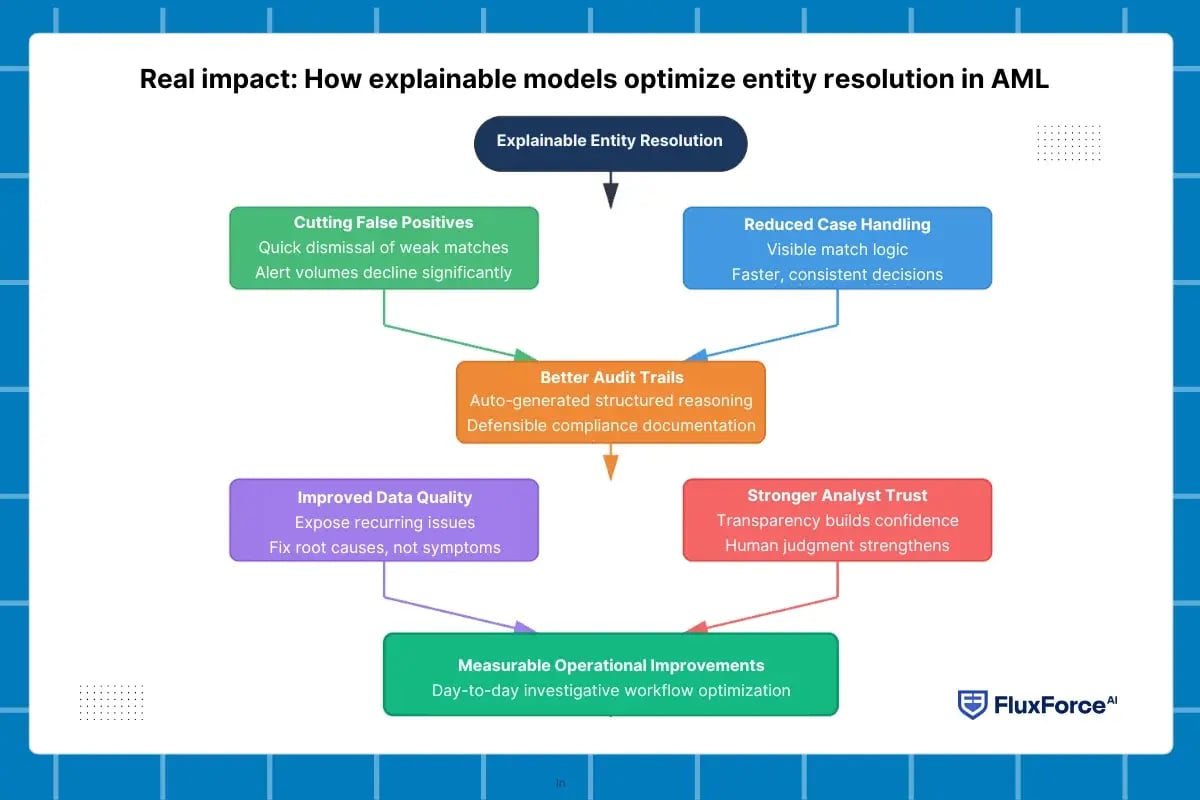

Real impact: How explainable models optimize entity resolution in AML

When entity resolution becomes explainable, it delivers measurable improvements across AML operations. The benefits extend beyond model performance into day-to-day investigative workflows.

1. Customer support teams feel confident- Explainable AI gives customer-facing teams clear answers. When customers question KYC rejections, support teams can explain decisions confidently. This reduces frustration and improves customer experience in digital KYC journeys.

2. The bank’s trustworthiness increases- Transparent KYC verification builds long-term trust. Customers are more willing to share sensitive information when decisions feel fair and understandable. Explainable AI supports ethical and responsible AI use in banking.

3. Reduction in false rejections- Explainability helps identify unnecessary flags. By understanding why customers are rejected, banks can fine-tune rules and models. This directly reduces false positives in AI-driven KYC.

4. Enhanced AI auditability in the KYC process- Every explainable AI decision creates a clear audit trail. Auditors can trace how data, rules, and risk factors influenced outcomes, which makes auditing AI decisions in the KYC process straightforward and reliable.

5. Clear justification to regulators- KYC explainability matters for regulators. Explainable AI provides structured decision logic aligned with KYC regulations, enabling institutions to justify their actions confidently during regulatory reviews.
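An audit trail entry can be as simple as a serializable record that bundles the decision with the evidence behind it. The sketch below uses hypothetical field names; it is not a regulatory schema, and a production system would add retention, versioning, and access controls.

```python
import json
from datetime import datetime, timezone
from typing import Optional


def audit_record(
    alert_id: str,
    decision: str,
    score: float,
    contributions: dict[str, float],
    rules_fired: list[str],
    analyst: Optional[str] = None,
) -> dict:
    """Bundle the evidence behind one match decision into a serializable audit entry."""
    return {
        "alert_id": alert_id,
        "decision": decision,
        "score": round(score, 4),
        "contributions": contributions,  # attribute -> share of the score
        "rules_fired": rules_fired,      # deterministic rules that applied
        "analyst": analyst,              # reviewer who confirmed or dismissed, if any
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }


if __name__ == "__main__":
    entry = audit_record(
        alert_id="A-101",
        decision="escalated",
        score=0.97,
        contributions={"tax_id": 0.6, "address": 0.3, "name": 0.1},
        rules_fired=["identical tax ID"],
        analyst="analyst-042",
    )
    print(json.dumps(entry, indent=2))
```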

Key considerations when adopting explainable entity resolution

Adopting explainable entity resolution in AML requires more than model accuracy. Institutions must balance transparency, operational efficiency, and regulatory expectations while ensuring explanations genuinely support investigator decisions.


1. Align explainability with analyst decision-making

Explainability should help analysts understand why an entity match occurred, not overwhelm them with raw model outputs. Explanations must be concise, relevant, and directly tied to investigative actions.

2. Ensure explanations are consistent across AML workflows

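The same entity match should be explained the same way whether it surfaces during onboarding, transaction monitoring, or periodic review. Consistent explanations let teams compare alerts, identify weak signals and biases, and monitor accuracy over time, which improves KYC risk assessment and long-term reliability.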

3. Balance model transparency with detection performance

Highly interpretable models must still capture complex entity relationships. The goal is not maximum transparency alone, but explainable accuracy that meaningfully reduces false positives without creating gaps in AML coverage.

4. Support auditability and regulatory review

Explainable entity resolution should generate clear audit trails. Regulators expect documented reasoning behind entity matches, especially when alerts are dismissed or escalated based on AI-driven decisions.

5. Design for scalability and continuous learning

As entity data grows and financial crime patterns evolve, explainable models must adapt. Continuous model tuning and explanation updates are essential to prevent explanation drift and rising false matches.

Conclusion

Explainable entity resolution transforms AML compliance from pattern matching into evidence-based investigation. By revealing why systems flag matches, it empowers analysts to act with confidence and speed while reducing false positives.

The challenge is not simply matching entities. AML requires both advanced detection and human judgment. Black-box systems deliver scale but obscure reasoning. Transparent models deliver both accuracy and clarity.

As regulatory expectations around model risk management and algorithmic transparency continue to rise, explainable approaches will shift from advantage to necessity. Institutions that invest early position themselves to meet these expectations while realizing immediate operational gains.
