Introduction

“If you can’t explain your decision, people won’t trust it.”

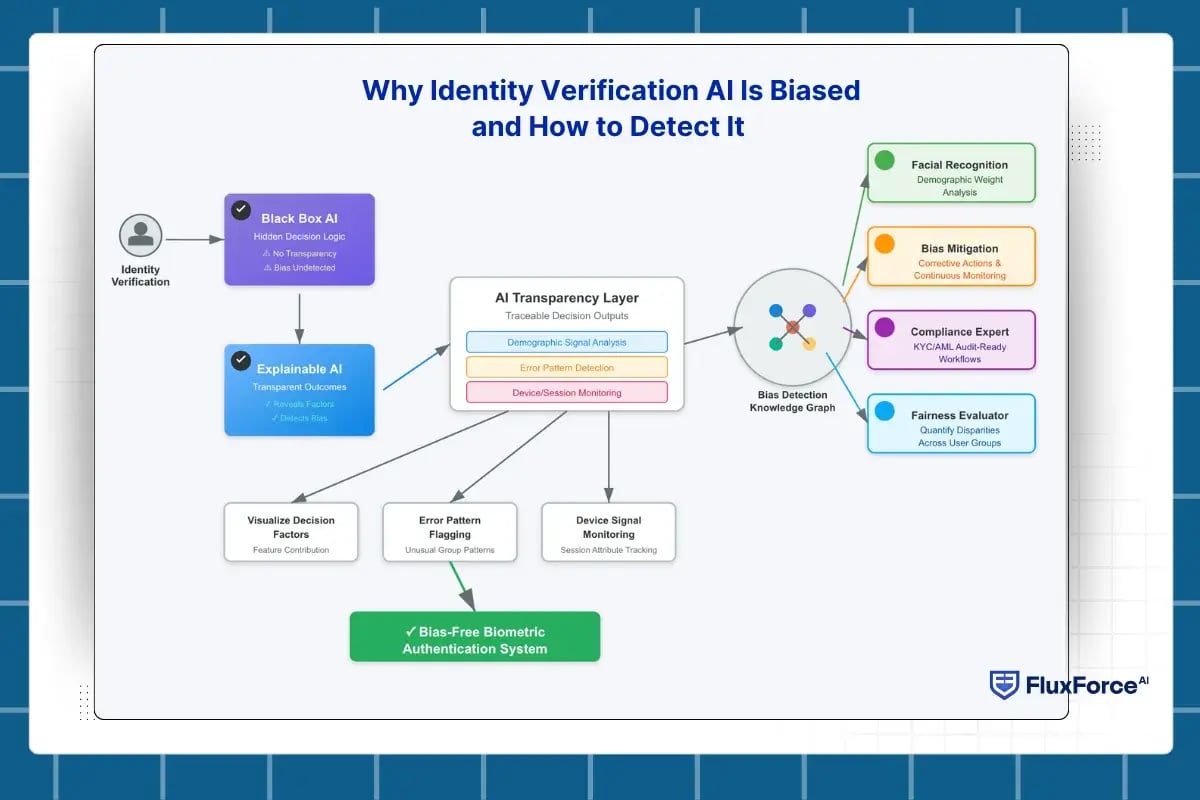

This saying captures the central challenge of modern identity verification AI. Even as models improve, bias in facial recognition and other biometric checks still produces unfair outcomes for users in KYC and AML workflows. Traditional black-box models hide the reasoning behind their decisions, which makes that bias difficult to identify and fix.

Explainability in AI models addresses this problem by revealing how each verification decision is made. With transparent AI decision making, security and compliance teams can see the factors driving approvals or denials, so bias in identity verification can be detected early.

Explainable systems help organizations reduce bias in AI models, improve AI fairness in KYC and AML, and maintain identity verification AI compliance. Embedding bias mitigation in biometric systems moves teams toward bias-free biometric authentication and gives them confidence that identity checks are fair and defensible.

Explainability reduces bias in identity verification, ensuring fairer outcomes with FluxForce AI

Why Identity Verification AI Is Biased and How to Detect It

Explainability vs Black Box AI in KYC

Traditional black-box AI hides decision logic. Explainable models make outcomes transparent by revealing which factors, such as demographics, behavior, or device signals, drove a decision. This improves AI fairness in KYC and AML and supports identity verification AI compliance.

AI Transparency in Identity Verification Systems

Transparent AI decision making provides traceable outputs for every verification attempt. Teams can see which features contribute most to denials or approvals, helping detect bias early, correct errors, and maintain audit-ready workflows. Examples include:

- Highlighting demographic signal weights in facial recognition

- Flagging unusual error patterns across user groups

- Monitoring device or session attributes that consistently trigger denials
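As a rough illustration of flagging unusual error patterns across user groups, the sketch below computes a false rejection rate per group from labeled verification outcomes and flags any group whose rate exceeds the best-performing group's by more than a tolerance. The record fields (`group`, `genuine`, `approved`), the sample data, and the 0.05 tolerance are assumptions made for this example, not part of any specific product.

```python
from collections import defaultdict

def false_rejection_rates(records):
    """Return {group: share of genuine users who were denied}."""
    genuine = defaultdict(int)
    rejected = defaultdict(int)
    for r in records:
        if r["genuine"]:                 # only genuine users can be falsely rejected
            genuine[r["group"]] += 1
            if not r["approved"]:
                rejected[r["group"]] += 1
    return {g: rejected[g] / n for g, n in genuine.items()}

def flag_outlier_groups(rates, tolerance=0.05):
    """Flag groups whose rate exceeds the lowest group rate by more than `tolerance`."""
    if not rates:
        return []
    best = min(rates.values())
    return sorted(g for g, rate in rates.items() if rate - best > tolerance)

# Hypothetical labeled outcomes; a real system would read these from verification logs.
records = (
    [{"group": "A", "genuine": True, "approved": True}] * 95
    + [{"group": "A", "genuine": True, "approved": False}] * 5
    + [{"group": "B", "genuine": True, "approved": True}] * 80
    + [{"group": "B", "genuine": True, "approved": False}] * 20
)
rates = false_rejection_rates(records)
flagged = flag_outlier_groups(rates)
print(rates, flagged)
```

With this made-up data, group A is falsely rejected 5% of the time and group B 20% of the time, so only B is flagged. The right tolerance is a policy choice and depends on sample sizes.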

Explainable AI for Fair Identity Verification

Explainable models reveal drivers of failed verifications, enabling bias mitigation in biometric systems and supporting bias-free biometric authentication. By visualizing decision factors, teams can quantify disparities, implement corrective actions, and continuously monitor fairness.

How to Implement Explainable AI to Reduce Bias in Identity Verification

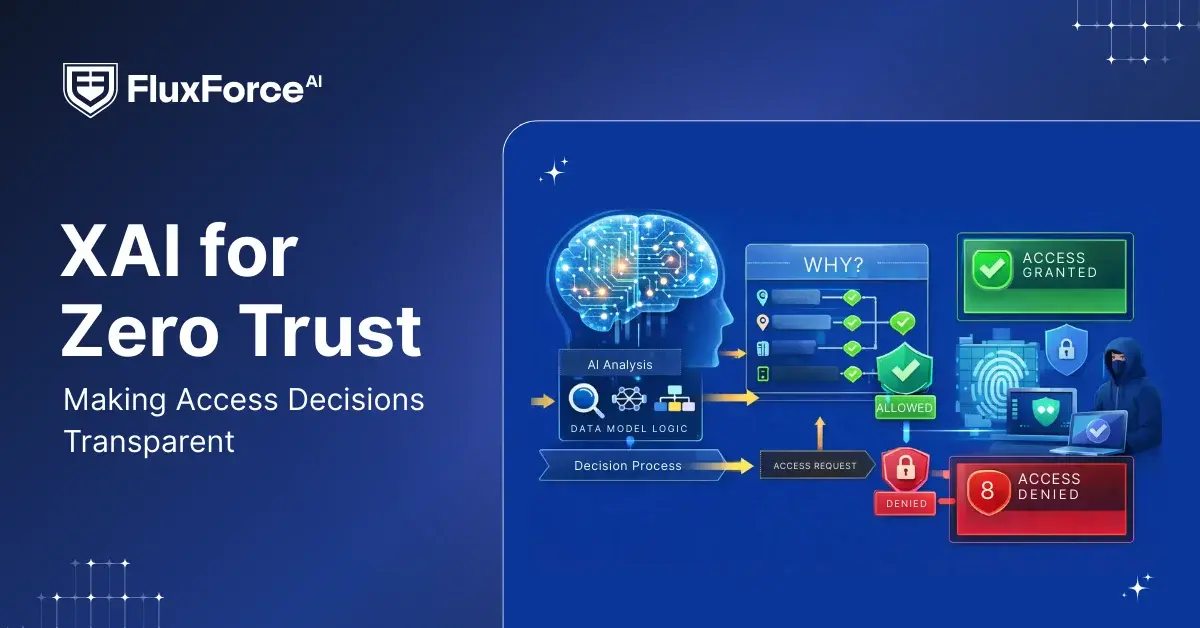

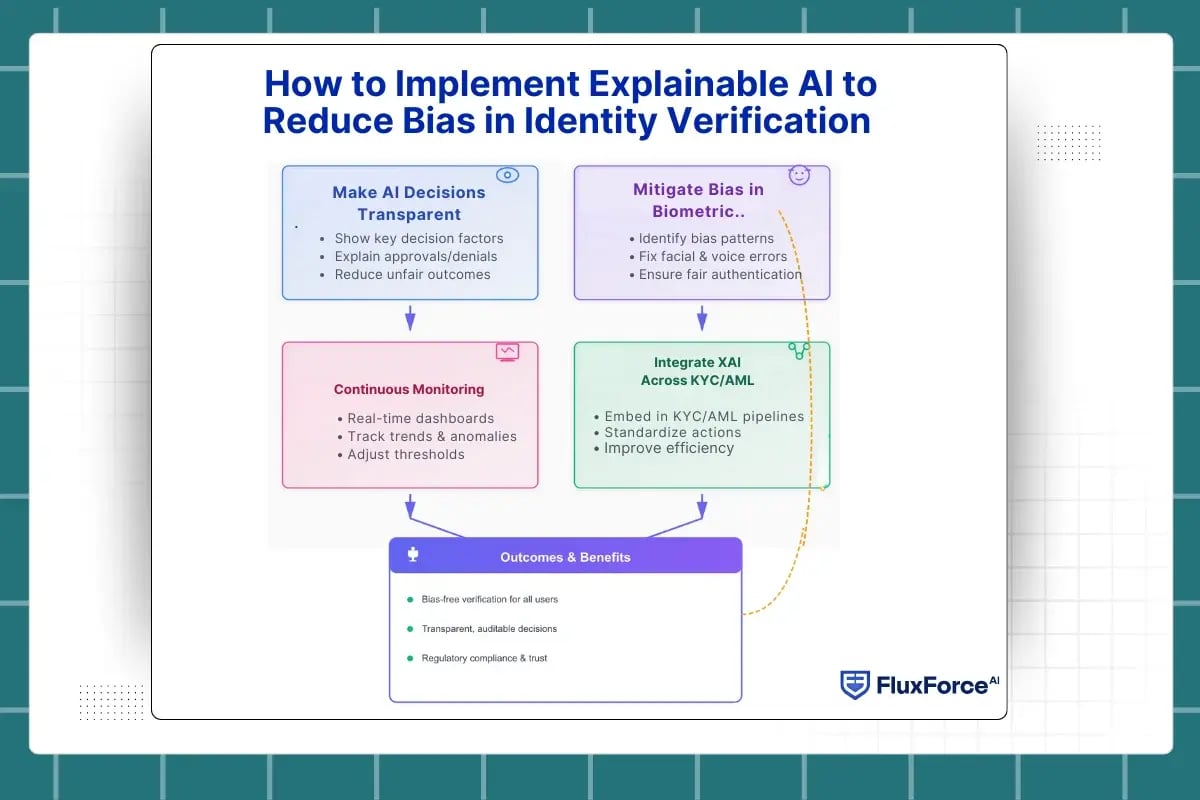

Make AI Decisions Transparent

Implementing explainability in AI models starts with making verification decisions visible. Every approval or denial should show the key factors that influenced it, such as demographics, device posture, session behavior, or unusual patterns. Transparent AI decision making helps teams understand why a decision occurred, which reduces unfair denials and reinforces AI fairness in KYC and AML workflows.
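One simple way to surface the key factors behind a decision is additive attribution on a linear score, where each feature's contribution is its weight times its deviation from a baseline value. The sketch below assumes a hypothetical linear risk model. The feature names, weights, baseline, and threshold are invented for illustration, and non-linear models would typically need a dedicated attribution method instead.

```python
def explain_decision(features, weights, baseline, bias, threshold):
    """Score a verification attempt and list per-feature contributions.

    Contribution of feature f = weights[f] * (features[f] - baseline[f]),
    so the contributions sum to (score - baseline_score).
    """
    contributions = {
        name: weights[name] * (features[name] - baseline[name])
        for name in weights
    }
    baseline_score = bias + sum(weights[n] * baseline[n] for n in weights)
    score = baseline_score + sum(contributions.values())
    # Rank factors by the size of their effect, largest first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return {
        "score": score,
        "decision": "deny" if score >= threshold else "approve",
        "top_factors": ranked,
    }

# Hypothetical model: a higher score means higher risk.
weights = {"face_match_distance": 4.0, "device_risk": 2.0, "session_anomaly": 1.0}
baseline = {"face_match_distance": 0.25, "device_risk": 0.0, "session_anomaly": 0.0}
attempt = {"face_match_distance": 0.75, "device_risk": 0.5, "session_anomaly": 0.0}

result = explain_decision(attempt, weights, baseline, bias=0.0, threshold=3.0)
print(result["decision"], result["top_factors"])
```

Here the attempt is denied, and the ranked factors show that the face match distance contributed twice as much to the score as the device risk signal. A reviewer sees that breakdown rather than a bare score.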

Mitigate Bias in Biometric Systems

Explainable AI (XAI) enables teams to pinpoint repeated errors in facial recognition, voice verification, or other biometric checks. By identifying patterns of bias, organizations can apply bias mitigation in biometric systems and work toward bias-free biometric authentication, so that all users experience fair and consistent verification.

Continuous Monitoring and Feedback Loops

Dashboards and real-time explanations allow security teams to monitor AI decisions continuously. With identity verification AI compliance in mind, teams can track trends, flag anomalies, and adjust thresholds to maintain fairness. This approach reduces false positives, supports ongoing bias reduction in AI models, and provides audit-ready documentation for regulators.
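A feedback loop of this kind can be sketched as a rolling monitor that tracks denial rates per segment and raises an alert when the gap between segments grows too large. The class name, window size, minimum sample count, and 0.10 gap limit below are illustrative assumptions; a production monitor would also need persistence and statistical significance checks.

```python
from collections import defaultdict, deque

class DenialRateMonitor:
    """Track denial rates per group over the most recent `window` decisions per group."""

    def __init__(self, window=500, max_gap=0.10, min_samples=50):
        self.max_gap = max_gap
        self.min_samples = min_samples
        self._recent = defaultdict(lambda: deque(maxlen=window))

    def record(self, group, denied):
        self._recent[group].append(1 if denied else 0)

    def denial_rates(self):
        # Skip groups with too few samples to give a stable rate.
        return {
            g: sum(d) / len(d)
            for g, d in self._recent.items()
            if len(d) >= self.min_samples
        }

    def alert(self):
        """Return (highest_group, lowest_group, gap) if the gap exceeds max_gap, else None."""
        rates = self.denial_rates()
        if len(rates) < 2:
            return None
        hi = max(rates, key=rates.get)
        lo = min(rates, key=rates.get)
        gap = rates[hi] - rates[lo]
        return (hi, lo, gap) if gap > self.max_gap else None

# Hypothetical stream of decisions segmented by device type.
monitor = DenialRateMonitor(window=100, max_gap=0.10, min_samples=20)
for i in range(100):
    monitor.record("mobile", denied=(i % 4 == 0))    # 25 of 100 denied
    monitor.record("desktop", denied=(i % 10 == 0))  # 10 of 100 denied
alert = monitor.alert()
print(alert)
```

In this example the mobile denial rate is about 15 percentage points above the desktop rate, which exceeds the configured limit and triggers an alert for review.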

Integrate XAI Across KYC and AML Workflows

Embedding explainable AI for KYC into existing verification pipelines ensures that fairness is built into every stage of the process. Teams can leverage AI transparency in identity verification systems to standardize corrective actions, improve efficiency, and maintain confidence in automated decision-making.

What Changes When Explainability Is Applied to Identity Verification?

Explainability does more than clarify individual AI decisions. It fundamentally changes how identity verification systems are governed, reviewed, and trusted.

This is where explainability reduces bias at scale.

From Outcome Review to Decision Accountability

In traditional identity verification AI, teams review outcomes after complaints or audit failures. Bias is discovered late, often through customer friction.

With explainable AI in identity verification:

- Every decision is attributable

- Responsibility shifts from “model behavior” to decision ownership

- Bias is treated as a measurable system issue, not an isolated error

This accountability forces earlier intervention and prevents bias from becoming systemic.

Human Oversight Becomes Informed, Not Reactive

Human review exists in most KYC systems, but without explainability, reviewers override decisions blindly. This creates inconsistency and hidden bias.

Explainability in AI models changes this by giving reviewers:

- Context behind each decision

- Clear reasons for failure instead of abstract risk scores

- Confidence to correct bias without weakening security

As a result, human oversight actively contributes to fair AI identity verification instead of masking model flaws.

Bias Mitigation Moves Into Policy, Not Postmortems

Without explainability, bias mitigation in biometric systems happens after damage is done. With explainable AI, bias patterns inform policy adjustments.

Organizations begin to:

- Adjust verification rules based on explainable trends

- Align AI decisions with ethical AI identity verification goals

- Proactively support bias-free biometric authentication

Bias reduction becomes operational, not reactive.

Compliance Teams Gain Continuous Visibility

Regulators increasingly expect proof, not promises. Explainable machine learning in fintech provides continuous evidence that decisions are fair, traceable, and justified.

This strengthens:

- Identity verification AI compliance

- AI fairness in KYC and AML

- Long-term regulatory confidence

Explainability ensures bias reduction is demonstrable, not assumed.

How Explainable AI Strengthens Regulatory Readiness in Identity Verification

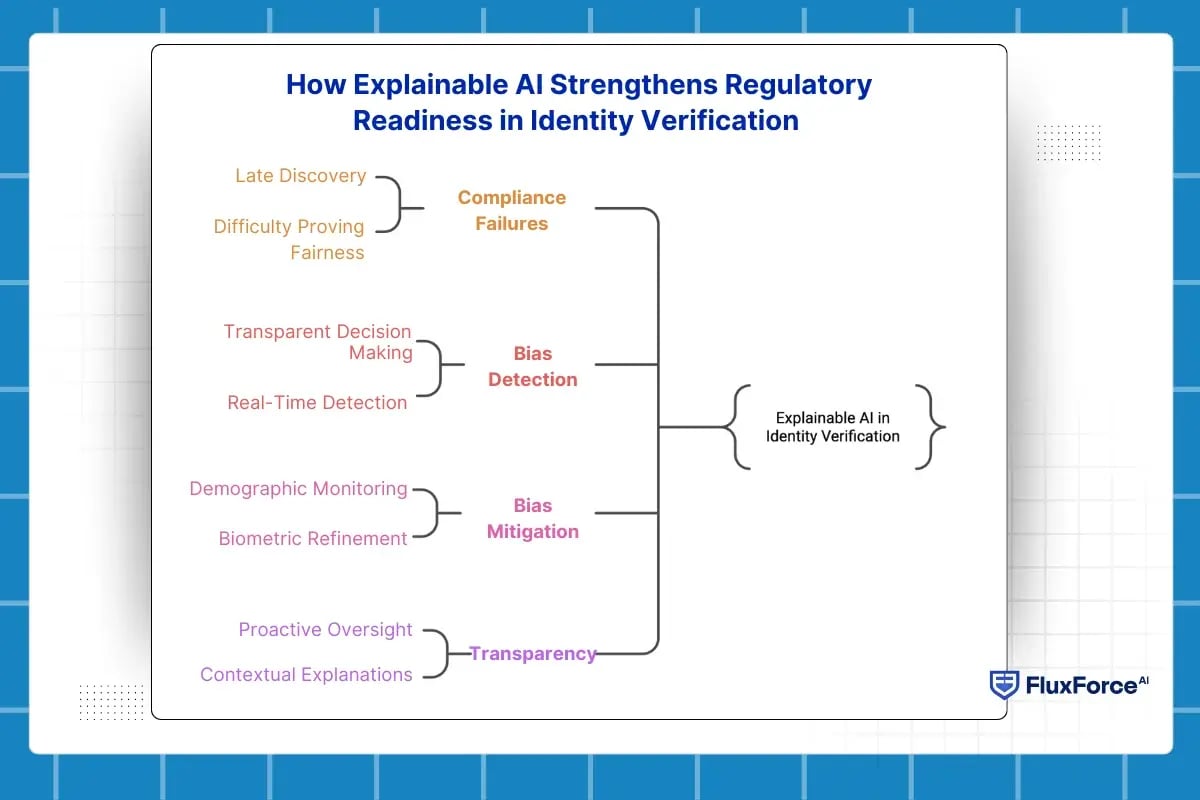

Why Compliance Fails Without Explainability in AI Models

As identity verification AI becomes central to KYC and AML workflows, regulators now require clear evidence showing how decisions are made and whether bias exists. This is where explainability in AI models directly impacts regulatory readiness.

In traditional systems, compliance teams often review outcomes without seeing the reasoning behind them. When AI bias in facial recognition or bias in biometric verification affects users, these issues are usually discovered late, during audits or customer complaints. Without explainable systems, proving fairness becomes difficult.

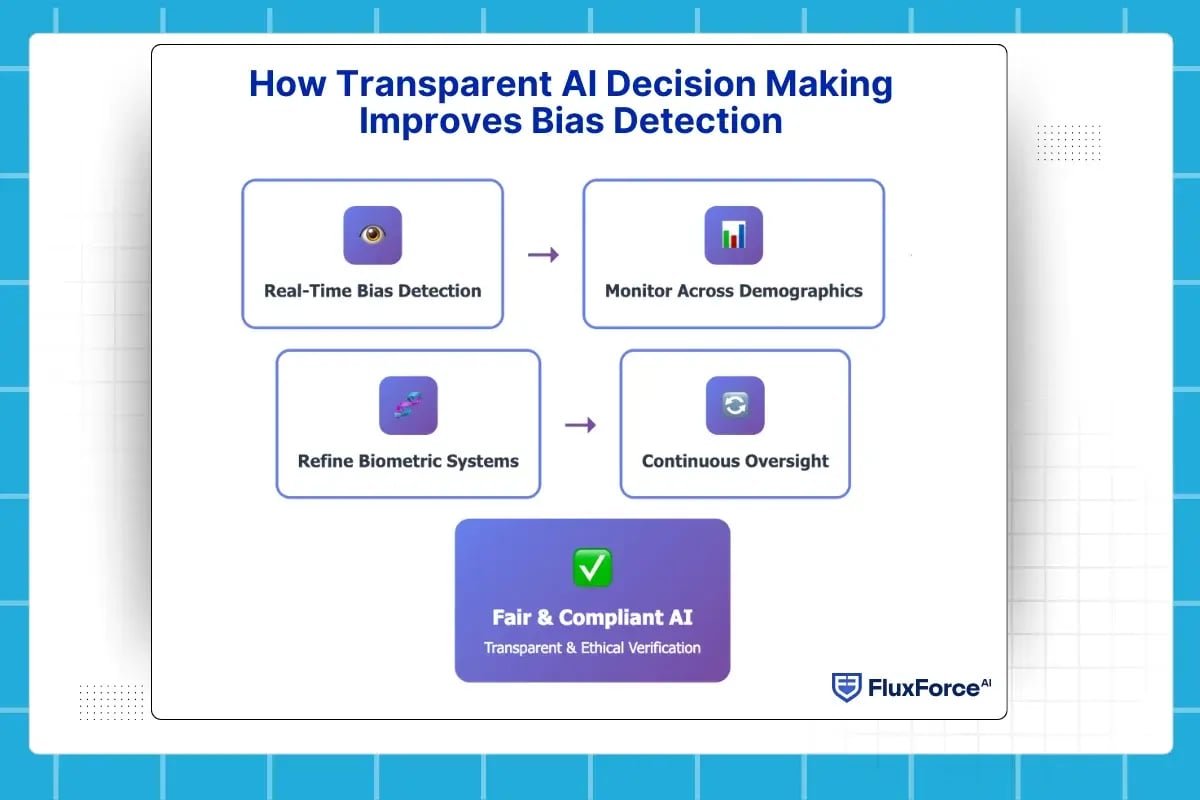

How Transparent AI Decision Making Improves Bias Detection

Transparent AI decision making allows teams to see the factors behind every approval or denial. This makes AI bias in identity verification detectable in real time instead of only after incidents occur.

With explainable systems, organizations can address issues as they arise, improving AI fairness in KYC and AML while maintaining identity verification AI compliance. Decisions become understandable and actionable, reducing errors and increasing confidence in automated workflows.

Reducing Bias in AI Models Across Verification Workflows

Explainability transforms how teams approach reducing bias in AI models. Instead of relying on overall accuracy metrics, teams can monitor how decisions vary across demographics, device types, and behavior patterns.
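To see why overall accuracy can hide bias, the sketch below compares a single accuracy figure with accuracy broken down by combinations of attributes. The attribute names (`age_band`, `device`), the helper `make`, and all counts are hypothetical and chosen only to make the effect visible.

```python
from collections import defaultdict

def accuracy_by_slice(records, keys):
    """Accuracy per combination of the attributes named in `keys`."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for r in records:
        slice_id = tuple(r[k] for k in keys)
        total[slice_id] += 1
        correct[slice_id] += int(r["predicted"] == r["actual"])
    return {s: correct[s] / total[s] for s in total}

def make(n, age_band, device, ok):
    # Build n hypothetical records; `ok` marks a correct decision.
    return [{"age_band": age_band, "device": device,
             "actual": 1, "predicted": 1 if ok else 0}] * n

records = (
    make(480, "25-40", "ios", True) + make(20, "25-40", "ios", False)
    + make(440, "25-40", "android", True) + make(10, "25-40", "android", False)
    + make(30, "65+", "android", True) + make(20, "65+", "android", False)
)
overall = accuracy_by_slice(records, keys=[])[()]   # empty key list = one global slice
slices = accuracy_by_slice(records, keys=["age_band", "device"])
print(overall, slices)
```

With these invented counts the overall accuracy is 95%, yet the smallest slice (`65+` on `android`) is decided correctly only 60% of the time. That gap is invisible in the headline number.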

This insight enables precise corrections and continuous monitoring. By targeting specific bias drivers, organizations can enhance fairness and strengthen regulatory alignment without compromising verification security.

Bias Mitigation in Biometric Systems Through Explainable AI

Explainable AI plays a key role in bias mitigation in biometric systems. Patterns of errors in facial recognition, voice verification, or other biometric checks are revealed, allowing teams to refine thresholds and retrain models effectively.
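Threshold refinement can be explored by measuring the false non-match rate (the share of genuine users whose match score falls below the threshold) for each group at several candidate thresholds. The sketch below uses made-up similarity scores and group labels; real evaluations would use far larger samples and would weigh the result against the false match rate, which rises as the threshold is lowered.

```python
def false_non_match_rate(genuine_scores, threshold):
    """Share of genuine comparisons whose similarity score falls below the threshold."""
    if not genuine_scores:
        raise ValueError("need at least one genuine score")
    return sum(s < threshold for s in genuine_scores) / len(genuine_scores)

def fnmr_by_group(scores_by_group, threshold):
    return {g: false_non_match_rate(s, threshold) for g, s in scores_by_group.items()}

def max_gap(rates):
    """Difference between the worst and best group rates."""
    return max(rates.values()) - min(rates.values())

# Hypothetical similarity scores (0 to 1) for genuine users, split by group.
scores_by_group = {
    "group_a": [0.91, 0.88, 0.95, 0.79, 0.85, 0.93, 0.90, 0.87, 0.82, 0.96],
    "group_b": [0.81, 0.74, 0.86, 0.69, 0.77, 0.83, 0.72, 0.88, 0.79, 0.84],
}

for threshold in (0.70, 0.75, 0.80):
    rates = fnmr_by_group(scores_by_group, threshold)
    print(threshold, rates, round(max_gap(rates), 2))
```

At a threshold of 0.80 this sample rejects 10% of genuine users in `group_a` but 50% in `group_b`. A gap of that size suggests the model or its training data needs attention, not just the threshold.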

Over time, this approach moves systems toward bias-free biometric authentication. Users experience more consistent and equitable verification, which supports ethical and fair AI practices.

AI Transparency in Identity Verification Systems for Continuous Oversight

With AI transparency in identity verification systems, oversight becomes proactive rather than reactive. Each verification outcome includes contextual explanations, enabling compliance teams to review, understand, and justify decisions.
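One way to attach contextual explanations to each outcome is to store an immutable decision record alongside the result, so that reviewers and auditors can later see what drove it. The sketch below is a minimal example under assumed field names (`attempt_id`, `top_factors`, `model_version`, `reviewed_by`); it is not a prescribed schema.

```python
import json
from dataclasses import dataclass, asdict, field
from datetime import datetime, timezone
from typing import Optional

@dataclass(frozen=True)
class DecisionRecord:
    """Audit entry for a single verification attempt (hypothetical schema)."""
    attempt_id: str
    decision: str                      # "approve" or "deny"
    score: float
    threshold: float
    top_factors: list                  # [(feature_name, contribution), ...]
    model_version: str
    reviewed_by: Optional[str] = None  # set when a human reviews the decision
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self):
        # Sorted keys keep log lines stable and easy to compare.
        return json.dumps(asdict(self), sort_keys=True)

record = DecisionRecord(
    attempt_id="attempt-0001",
    decision="deny",
    score=4.0,
    threshold=3.0,
    top_factors=[("face_match_distance", 2.0), ("device_risk", 1.0)],
    model_version="example-v0",
)
line = record.to_json()
print(line)
```

Writing one such line per decision to an append-only log gives compliance teams a reviewable trail without having to reconstruct explanations after the fact.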

Continuous visibility replaces ad hoc audits and ensures that bias is identified, corrected, and documented continuously.

Embedding Explainable AI for Ethical and Compliant Identity Verification

By integrating explainable AI for KYC into verification workflows, organizations make fairness operational. Bias reduction becomes measurable, transparent, and defensible. This strengthens AI fairness in KYC and AML, ensures identity verification AI compliance, and builds long-term trust in ethical AI identity verification systems.

Conclusion

Reducing bias in AI models is most effective when explainability is embedded from the start. Explainable AI for KYC and bias mitigation in biometric systems allows teams to address unfair patterns in real time, ensuring bias-free biometric authentication. This approach moves organizations from reactive audits to proactive governance, strengthening AI transparency in identity verification systems and supporting ethical, compliant operations.
