Introduction

A suspicious activity report (SAR) is one of the most important signals in financial crime compliance. Banks use it to report activity that could indicate fraud, money laundering, or other financial crime. In today’s banking surveillance environments, regulators expect these reports to be precise, consistent, and clearly reasoned.

That expectation is becoming harder to meet. Risk operations teams now review massive volumes of transactions through automated monitoring systems. While these systems flag unusual behavior quickly, they often fail to explain why something looks risky. Investigators face alert fatigue and inconsistent conclusions, which weakens overall SAR quality.

AI was introduced to improve efficiency, but many models still operate as black boxes. When a model cannot show why it flagged a transaction, the lack of explainability in AI predictions becomes a major gap. Without AI decision transparency, accuracy suffers and SAR narratives lose strength.

This is why explainable AI (XAI) matters for operational accuracy and why XAI in risk management is becoming essential for modern risk operations.

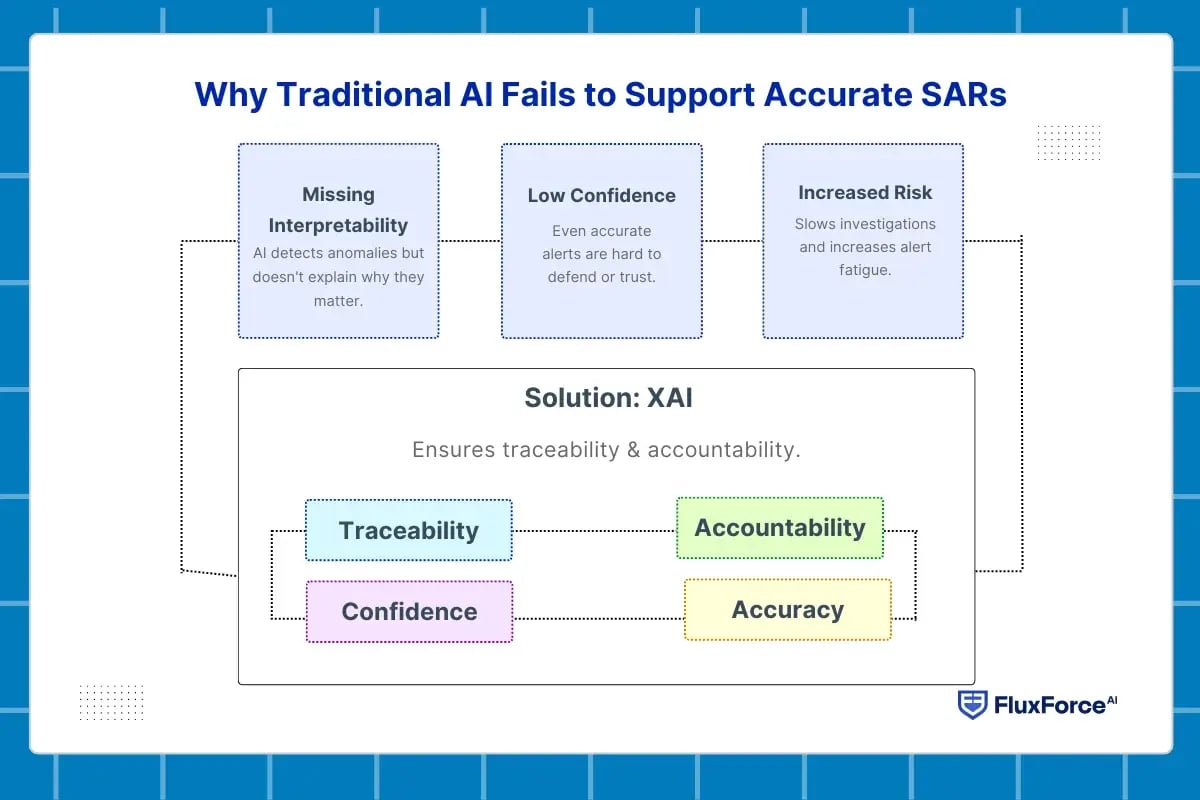

Why Traditional AI Fails to Support Accurate SARs?

Investigators in modern risk operations rely on AI to help detect suspicious activity. However, not all AI is created equal. Many systems flag anomalies without explaining why they matter. This gap in transparency can make even correct alerts difficult to trust or act upon. Understanding the limitations of traditional AI helps explain why explainable AI is becoming essential for SAR accuracy.

AI Model Interpretability Is Often Missing

Most AI tools used for banking surveillance and SAR workflows focus on detecting unusual transactions but rarely provide insight into the reasoning behind the alerts. When AI model interpretability is missing, investigators must guess which signals drove the decision, leading to inconsistent outcomes and weaker SAR narratives.

Explainability in AI Predictions Drives Confidence

A high-quality suspicious activity report requires clarity on why a transaction is suspicious, which risk factors mattered most, and why escalation is necessary. Without explainability in AI predictions, even accurate alerts are hard to defend and can reduce compliance confidence across teams.

AI Decision Support Systems Without Transparency Create Risk

Many institutions use AI decision support systems to assist analysts. If these tools lack AI decision transparency, they slow investigations, increase alert fatigue, and make it harder to file accurate suspicious activity reports. Risk operations require actionable insight, not just alerts.

The Need for XAI in Risk Management

AI alone cannot guarantee accurate SARs. XAI in risk management ensures traceability, accountability, and stronger alignment with internal policies. Explainable AI helps investigators make confident decisions and delivers a measurable XAI impact on operational accuracy across the enterprise.

How Explainable AI Actually Improves SAR Accuracy in Risk Operations?

Traditional entity resolution in AML often operates as a “black box.” With explainability in place, each decision can be traced and interpreted.

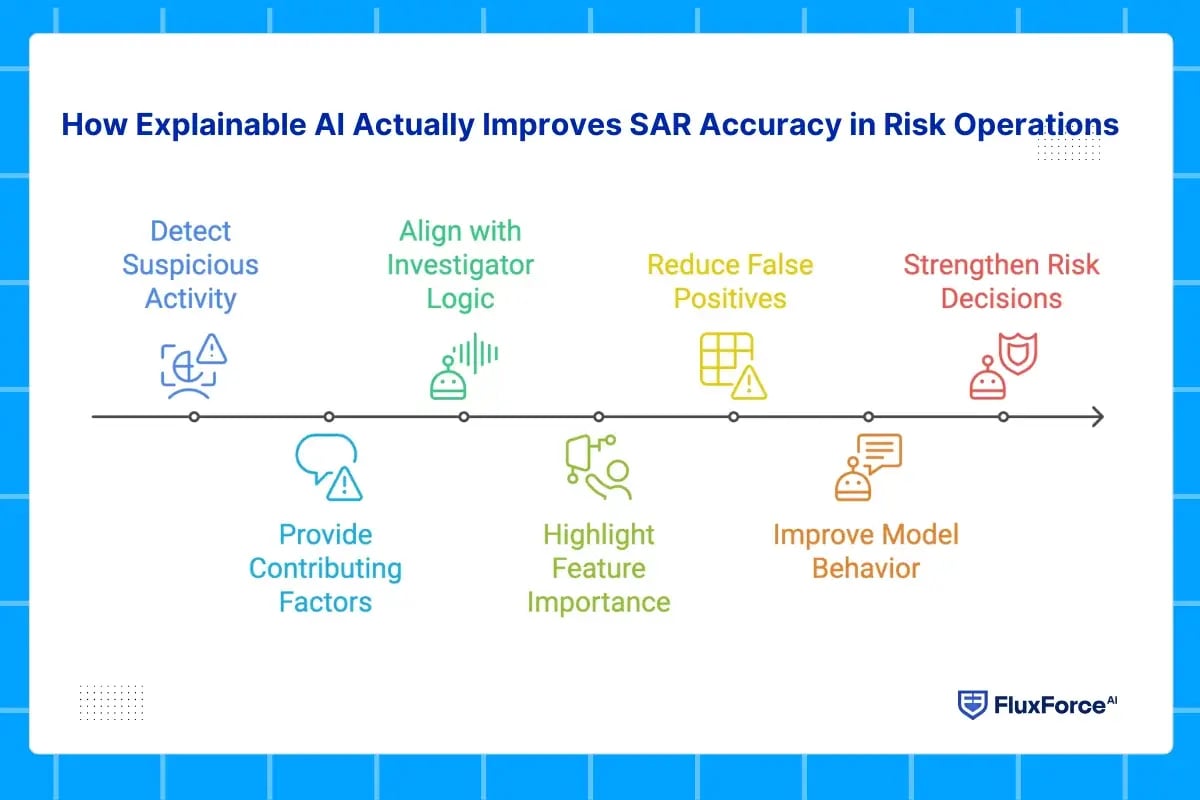

Explainable AI Use Cases That Match Real SAR Workflows

In production environments, explainable AI use cases are embedded directly into transaction monitoring and case management systems. Instead of a single risk score, investigators see contributing factors such as transaction velocity, counterparty risk, geographic exposure, and behavioral deviation.

This mirrors how compliance teams already think. Explainability aligns AI output with investigator logic, making it easier to report suspicious activity with confidence rather than relying on gut instinct or defensive over-reporting.
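As a rough illustration of what factor-level output can look like, the sketch below scores one alert with a simple logistic model and ranks each factor's additive contribution to the log-odds. The weights, bias, feature values, and the `explain_alert` function are hypothetical and chosen only for illustration; production systems typically rely on more complex models and attribution methods.

```python
import math

# Hypothetical weights for an illustrative logistic risk model.
# These numbers are assumptions, not taken from any real system.
WEIGHTS = {
    "transaction_velocity": 1.2,
    "counterparty_risk": 0.9,
    "geographic_exposure": 0.6,
    "behavioral_deviation": 1.5,
}
BIAS = -3.0


def explain_alert(features):
    """Return the risk score and the factors ranked by absolute contribution."""
    # In a linear model, each factor's contribution to the log-odds is weight * value.
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    log_odds = BIAS + sum(contributions.values())
    score = 1.0 / (1.0 + math.exp(-log_odds))
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


# Hypothetical, already-normalized feature values for one alert.
alert = {
    "transaction_velocity": 2.0,
    "counterparty_risk": 0.5,
    "geographic_exposure": 0.0,
    "behavioral_deviation": 1.0,
}
score, ranked = explain_alert(alert)
print(f"risk score: {score:.2f}")
for name, contribution in ranked:
    print(f"  {name}: {contribution:+.2f}")
```

Because the contributions are additive, an investigator can see at a glance that transaction velocity and behavioral deviation dominate this alert while geographic exposure plays no role.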

AI Explainability Techniques That Investigators Actually Use

In practice, not every explainability method adds value. The most effective AI explainability techniques highlight feature importance and rule alignment in plain language. Investigators need to know which signals mattered most and which did not.

This directly improves SAR narratives, where clarity and traceability are essential. Clear reasoning shortens review cycles, reduces back-and-forth with compliance leads, and improves regulator readiness.
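One way to picture "feature importance in plain language" is a small translation layer between model output and the investigator. The sketch below is a minimal example under stated assumptions: the contribution values, the `REASON_TEMPLATES` wording, and the 10% cut-off are all hypothetical, and the function simply drops signals that carry too little weight to mention.

```python
# Hypothetical mapping from model feature names to investigator-friendly wording.
REASON_TEMPLATES = {
    "transaction_velocity": "Unusually high transaction velocity",
    "behavioral_deviation": "Activity deviates from the customer's usual behavior",
    "counterparty_risk": "Counterparty carries elevated risk",
    "geographic_exposure": "Exposure to a higher-risk geography",
}


def plain_language_reasons(contributions, min_share=0.10):
    """List the signals that mattered, strongest first, skipping minor ones."""
    total = sum(abs(value) for value in contributions.values())
    if total == 0:
        return []
    reasons = []
    ordered = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    for name, value in ordered:
        share = abs(value) / total
        if share < min_share:
            continue  # too weak to be worth an investigator's attention
        text = REASON_TEMPLATES.get(name, name.replace("_", " "))
        reasons.append(f"{text} ({share:.0%} of total signal)")
    return reasons


# Hypothetical per-alert contributions produced by some upstream model.
reasons = plain_language_reasons({
    "transaction_velocity": 2.4,
    "behavioral_deviation": 1.5,
    "counterparty_risk": 0.2,
    "geographic_exposure": 0.0,
})
for line in reasons:
    print(line)
```

The cut-off is a design choice: showing every feature tends to bury the two or three signals that actually drove the alert.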

Explainability in AI Predictions Reduces False Positives

High alert volumes remain one of the biggest challenges in banking surveillance. Explainability in AI predictions allows teams to quickly discard alerts driven by weak or irrelevant signals.

Over time, this feedback loop improves model behavior and leads to a measurable XAI impact on operational accuracy. Fewer low-quality alerts mean investigators spend more time on genuinely suspicious behavior.
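The feedback loop described above can be sketched as a small tracker that records, for each alert, which signal dominated it and whether the analyst escalated. Everything here is an assumption made for illustration: the `SignalFeedback` class, the signal names, and the thresholds are hypothetical, and a real program would feed this information into model retraining or threshold tuning under its own governance process.

```python
from collections import defaultdict


class SignalFeedback:
    """Track how often alerts led by each signal end up being escalated."""

    def __init__(self):
        self._seen = defaultdict(int)
        self._escalated = defaultdict(int)

    def record(self, top_signal, escalated):
        """Store one analyst disposition for an alert dominated by top_signal."""
        self._seen[top_signal] += 1
        if escalated:
            self._escalated[top_signal] += 1

    def precision(self, signal):
        """Share of alerts led by this signal that were escalated, or None if unseen."""
        seen = self._seen.get(signal, 0)
        if seen == 0:
            return None
        return self._escalated.get(signal, 0) / seen

    def weak_signals(self, threshold=0.2, min_cases=5):
        """Signals with enough history whose escalation rate falls below threshold."""
        return sorted(
            signal
            for signal, seen in self._seen.items()
            if seen >= min_cases and self._escalated.get(signal, 0) / seen < threshold
        )


feedback = SignalFeedback()
# Hypothetical dispositions: round-amount alerts were never escalated,
# while counterparty-risk alerts were escalated four times out of five.
for _ in range(5):
    feedback.record("round_amount", escalated=False)
for escalated in (True, True, True, True, False):
    feedback.record("counterparty_risk", escalated=escalated)

print(feedback.weak_signals())
```

Signals that repeatedly lead alerts but rarely lead escalations are candidates for review, which is only possible when the system exposes the dominant signal in the first place.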

Explainable AI Strengthens Risk Management Decisions

From a risk leadership perspective, XAI in risk management provides accountability. When decisions are explainable, they can be audited, challenged, and improved. This supports stronger governance and aligns with AI transparency and accountability expectations increasingly seen in regulatory reviews.

Explainable systems also integrate well with AI decision support systems, helping teams understand not just what action to take, but why it is justified.

How Explainable AI Supports the Full Suspicious Activity Report Process?

Explainable AI strengthens SAR accuracy by embedding clarity into every stage of the reporting workflow. Instead of isolated alerts, investigators receive reasoning that aligns with regulatory expectations and operational realities.

Clear Identification of Suspicious Behavior

Before a suspicious activity report is filed, risk teams must separate genuine threats from normal behavior. Explainable AI reveals which risk indicators and behavioral patterns contributed to an alert.

This transparency helps investigators understand the basis of escalation and reinforces a practical, shared understanding of what warrants a suspicious activity report in day-to-day operations.

Confident Reporting Decisions Across Risk Teams

Explainable AI supports consistent decision-making when teams report suspicious activity. Investigators can trace how risk signals evolve across transactions and counterparties.

This clarity reduces hesitation and improves accuracy when deciding whether and how to report suspicious activity, especially in complex cases with overlapping risk factors.

Stronger and More Defensible SAR Narratives

High-quality documentation depends on structured reasoning. SAR narratives improve when investigators can link behavior directly to explainable risk signals.

Explainable AI highlights relevant features and patterns, allowing narratives to reflect actual system logic rather than assumptions or generic descriptions.

Ongoing Value After SAR Submission

SAR accuracy does not end at filing. A filed suspicious activity report can be revisited later in audits and reviews, which is why traceability matters.

Explainable AI preserves decision context over time, supporting audits, reviews, and continuous operational risk analytics with AI across the organization.
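Preserving decision context can be as simple as storing the explanation next to the decision. The sketch below shows one possible shape for such a record; the `DecisionRecord` fields, the example values, and the use of a SHA-256 fingerprint for tamper evidence are assumptions for illustration, not a description of any particular case management system.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class DecisionRecord:
    """Hypothetical snapshot of one alert decision, kept for later audit."""

    alert_id: str
    model_version: str
    score: float
    contributions: dict  # factor name -> contribution at decision time
    analyst_decision: str
    decided_at: str  # ISO 8601 timestamp

    def to_json(self):
        # sort_keys gives a stable serialization, so equal records hash equally.
        return json.dumps(asdict(self), sort_keys=True)

    def fingerprint(self):
        """SHA-256 of the serialized record, usable to detect later changes."""
        return hashlib.sha256(self.to_json().encode("utf-8")).hexdigest()


# Hypothetical example values.
record = DecisionRecord(
    alert_id="A-1",
    model_version="risk-model-2024.1",
    score=0.79,
    contributions={"transaction_velocity": 2.4, "behavioral_deviation": 1.5},
    analyst_decision="escalated",
    decided_at="2024-01-15T10:30:00+00:00",
)
print(record.to_json())
print(record.fingerprint())
```

Recording the model version and the contributions as they were at decision time matters because a later model update would otherwise make the original reasoning impossible to reconstruct.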

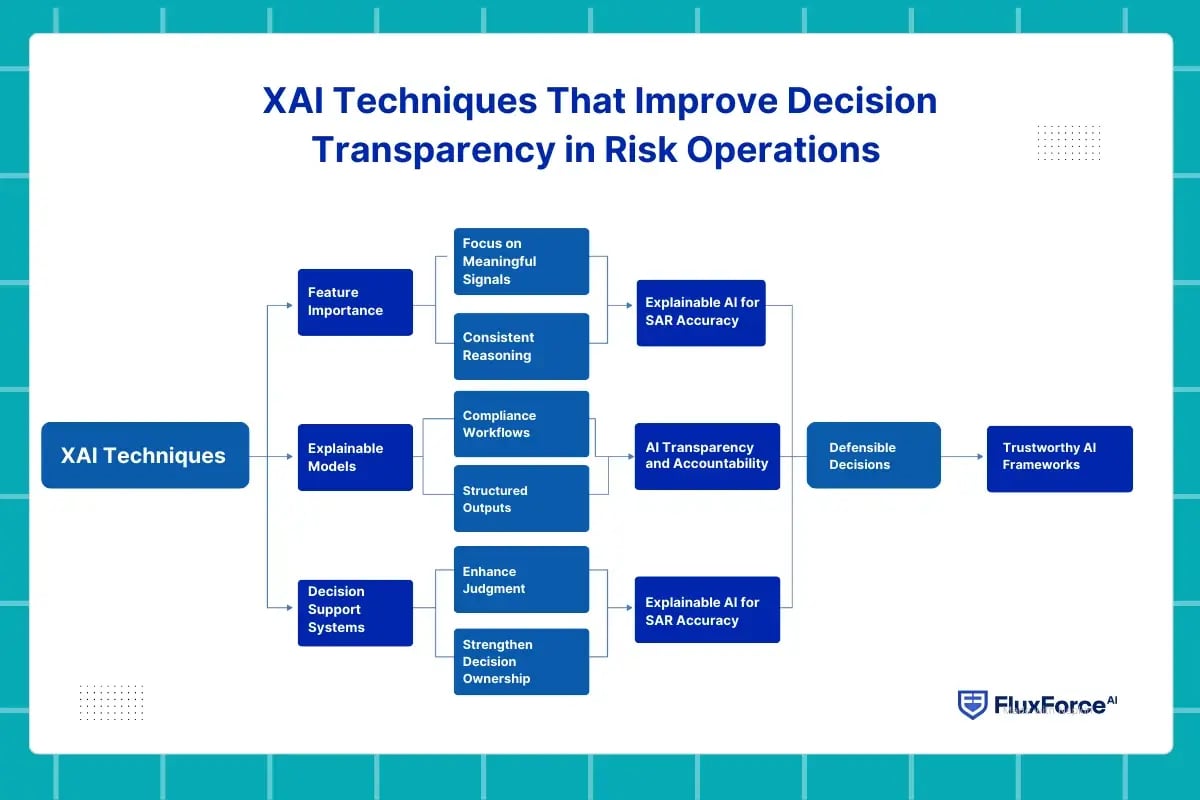

XAI Techniques That Improve Decision Transparency in Risk Operations

Accurate SAR outcomes depend on more than model performance. They depend on whether decisions can be understood, reviewed, and defended. This is where AI explainability techniques become central to modern risk operations.

Feature Importance as a Foundation for Trust

Feature importance is one of the most effective ways to support explainability in AI predictions. It shows which data points influenced a decision and which had minimal impact.

In banking surveillance, this clarity helps investigators focus on meaningful risk signals. It also supports consistent reasoning across teams and reduces interpretation gaps.
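Permutation importance is one common, model-agnostic way to estimate which data points influence decisions: shuffle one feature and measure how much accuracy drops. The sketch below is a minimal standard-library version under stated assumptions; the toy `predict` rule, the synthetic rows, and the feature names are hypothetical, and real deployments would normally use an established library on held-out data.

```python
import random


def permutation_importance(predict, rows, labels, feature, rng):
    """Accuracy drop when one feature's values are shuffled across rows."""

    def accuracy(data):
        hits = sum(predict(row) == label for row, label in zip(data, labels))
        return hits / len(labels)

    baseline = accuracy(rows)
    shuffled = [row[feature] for row in rows]
    rng.shuffle(shuffled)
    # Rebuild each row with the shuffled value for the chosen feature only.
    permuted = [{**row, feature: value} for row, value in zip(rows, shuffled)]
    return baseline - accuracy(permuted)


def predict(row):
    """Toy stand-in for a trained model: flags rows with high velocity."""
    return row["velocity"] > 10


# Synthetic data: the label depends on velocity only; weekday is irrelevant.
rows = [{"velocity": v, "weekday": v % 7} for v in range(1, 21)]
labels = [v > 10 for v in range(1, 21)]

rng = random.Random(0)  # fixed seed so the run is repeatable
velocity_importance = permutation_importance(predict, rows, labels, "velocity", rng)
weekday_importance = permutation_importance(predict, rows, labels, "weekday", rng)
print("velocity:", velocity_importance)
print("weekday:", weekday_importance)
```

A feature the model ignores shows no accuracy drop when shuffled, which is exactly the "minimal impact" signal an investigator can safely deprioritize.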

Explainable Models in Enterprise AI Environments

Many institutions are shifting toward explainable models in enterprise AI because they align better with compliance workflows. These models provide structured outputs that can be reviewed by investigators and risk leaders.

This approach improves AI decision transparency and supports AI transparency and accountability across the organization.

Decision Support Systems That Enable Human Oversight

Effective AI decision support systems do not replace investigators. They enhance judgment. Explainable systems guide analysts by showing how conclusions are formed.

This visibility strengthens decision ownership and improves SAR accuracy in high-volume environments.

Explainable AI vs Black-Box Models in Risk Analysis

Black-box models may deliver high detection rates but offer little insight. In contrast, explainable approaches support traceability and governance. For institutions focused on XAI in risk management, explainability ensures decisions remain defensible and aligned with trustworthy AI frameworks used in regulated environments.

Conclusion

The future of SAR operations will not be defined by faster alerts or more complex models. It will be defined by clarity. Explainable AI enables risk teams to understand decisions in real time and justify them long after a report is filed.

By embedding transparency into AI-driven risk operations, institutions gain more than compliance benefits. They gain control, consistency, and confidence. Explainable systems support better prioritization, clearer narratives, and improved regulatory alignment. As financial crime risks grow more complex, explainability becomes the defining capability of effective SAR programs.
